TheContact

Member

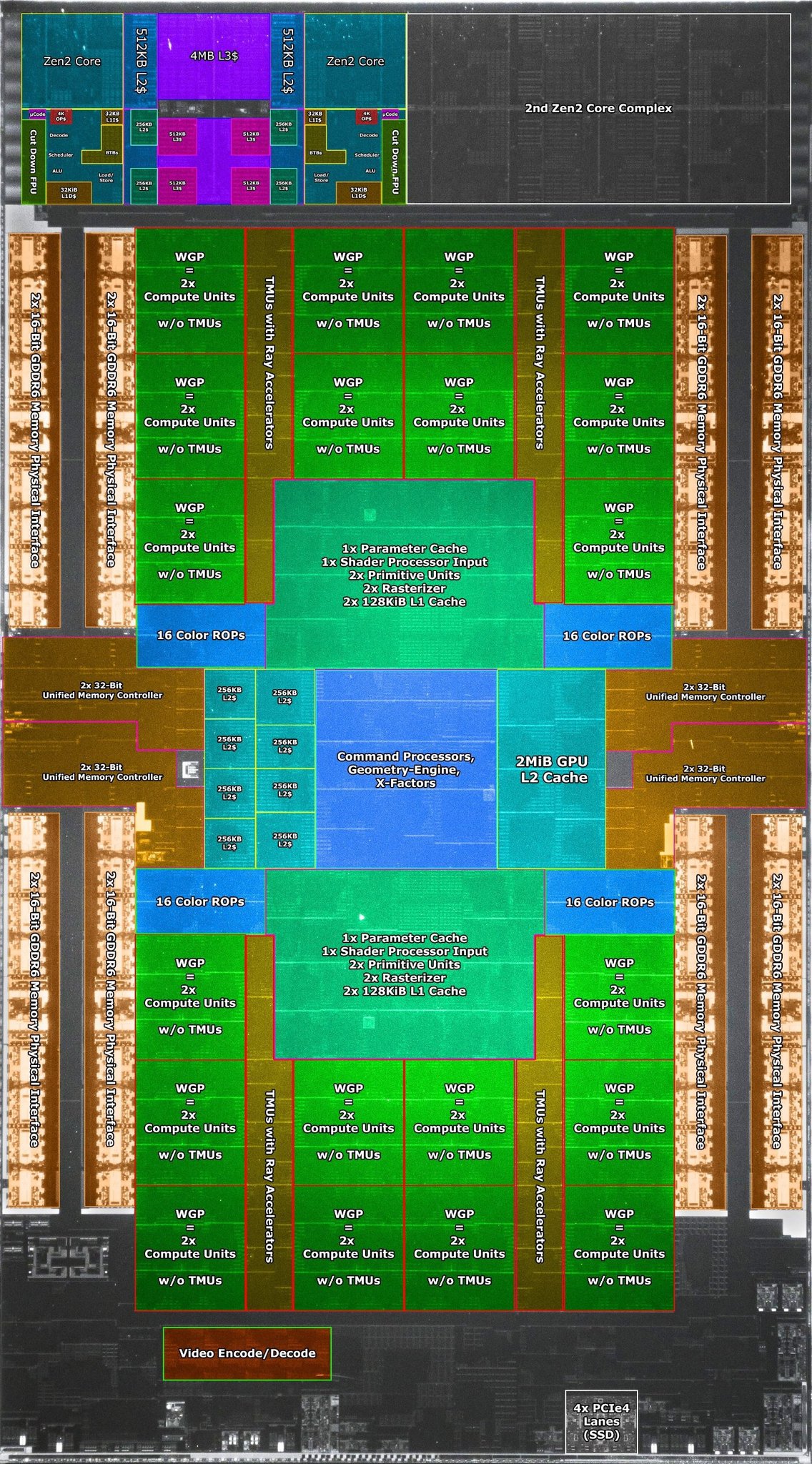

The article says it is not 100% RDNA2 because there is no Infinity Cache.

"making the console not fully RDNA 2 as previously thought.

What is missing from the appeal within PS5, it would seem to be the Infinity Cache..."

Do you know of any other console that doesn't have Infinity Cache?

Was it ever thought that it was full RDNA2? There was a ton of speculation about what the chip actually contained because they kept calling it a "custom RDNA2". I never saw any legit statement that it was full RDNA2.