Chittagong

Gold Member

I take it as we're missing a significant piece of Durango specs. And maybe more so for Orbis.

Put it this way. Any news/specs that are spot on will be removed on NeoGAF. Just like it has happened before.

it has? When?

Sweetvar's earlier posts were removed.

Or they realised that removal played into the hands of the people searching for info and have decided to let it lie?

It's all custom silicon if you can't buy it at Fry's. AMD already has one of the most efficient high performance GPU core technologies available. No one at MS came along and said "hey, if you added this tiny unit it would make your whole GPU 3 times faster". All MS is doing is deciding how much money and time they can spend and how much heat they can get rid of. AMD will make the GPU as big as they want, and they'll give it whatever memory you want, but there is no secret thing they can "customize" to magically make it work 200% better.

Could be anything but it's nice to hear about the progress.

The fact that a devkit has 4GB of GDDR5 doesn't mean that the console will have as much. Sadly it's almost never true; I think one of the X360's devkits is the only one that has the same amount of RAM as the console. PS3 devkits had 50-100% more memory (console 512MB, kit 768MB or 1GB).

Here is an exchange from Bkilian over at B3D, who is a confirmed (ex) MS insider.

I think with the above it's almost confirmation Durango has multiple customisations. Of course Orbis could too, we just don't have a talkative Sony insider afaik.

Explain the 360 GPU then.

Think we need to clarify.

I believe thuway's claim is of the target spec being changed to 4GB - i.e. the spec for the final box. Which would align with VG247's rumour a while ago of more recent kits having 'either 8 or 16GB'.

It would probably be wise not to place blind faith in some magical fairy dust the Durango GPU is supposed to have, but that no one can name. We all saw how that kind of thinking turned out for the Nintendo stalwarts in the WiiU Speculation threads.

The ultimate goal for the hardware, we were told, is for it to be able to run 1080p60 games in 3D with no problem,

I see that a few people are really getting ahead of themselves without actually knowing how these things work. There's this "I want to close the deal" attitude which is totally unwarranted at this point.

4GB of unified GDDR5 alone doesn't make any sense. About 1GB of that will need to be allocated to the OS and multitasking (browser, in-game applications, cross game functions and such).

It's completely poor design to pay for expensive memory and waste it like that to hold almost static data. At the same time it sounds weird that Sony was shooting for 2GB of total memory and that's it; it's obvious to everyone that that was not enough to run next gen games on top of other OS functionalities in the background.

These things are not designed by amateurish PC assemblers, but by the best engineers in the industry.

Also you can make all the customizations you want but there's no such thing as magic or violating basic physics principles. There aren't magic components which can multiply the performance; there are tricks which allow developers to take shortcuts to do things more efficiently, but that's it. Also if those things existed, they wouldn't be invented now, they would already be used in more high end products in different ways.

So yeah, there's a bit of misinformation here, a bit of "I want to believe" there. And then there's the fact that we're still stuck with old confusing information.

Personally I'm going to wait for more rumors and details to unfold, right now things don't sound clear to me.

Stacked DDR4 with a 1024-bit bus could reach 192 GB/s... and we all know that Sony likes stacking.

GDDR5 is maybe present only in early devkits.

It would be amazing if Sony could pull off interposer with great APU and 192GB/s memory on it.

I believe Bkilian specialized in audio at MS, so I think we can assume one is an audio processor of some kind. Useful, sure, not magic. We can probably assume the one in the GPU is the embedded memory. Again, useful, especially if the main memory bandwidth is not high, but again: not magic. That leaves the "more general purpose" hardware that can help with graphics. Possibly a vector co-processor, or some enhancement to the Jaguar architecture's FPUs. OK. Potentially useful, but not magic. None of these things are the "force multiplier" some are trying to imply the Durango's "custom silicon" will be. We already have rumored equivalents to 2/3 of those for the Orbis: some kind of high bandwidth memory (GDDR5 or stacked DDR3/4) and an audio processor capable of "200+ mp3 streams", and potentially full HSA, or higher single threaded performance (if they went with Steamroller) addressing the last point to some degree.

Info at this point in time is really "sensitive" and subject to removal. I would say closer to E3, spec leaks will start to get more accurate.

Correct. His excited PM to me was that it was the target for the final machine.

That would be pretty great indeed. I think BF3 for example uses approx 1GB of GDDR5 and 1GB of DDR3 RAM even on ultra settings (unless you go to some stupid high resolutions or multi monitors, that is).

I think it uses 1.5GB GDDR5 on ultra @ 1080p.

Any way you cut it, these AMD based x86 CPUs in both consoles suck donkey balls in terms of very low IPC performance.

And they're not even real cores, just 'modules' which share resources... kind of like hardware assisted hyper threading.

It's why AMD is so uncompetitive in the PC enthusiast market.

Bobcat/Jaguar doesn't use modules. Get your facts straight.

Didn't most recent PS4 dev kits have 8GB and 16GB of ram in them?

yeah, i totally agree. to expect a rumored 1.1-3 tf gpu to perform like a 680 seems foolhardy. There may be some optimizations but they probably won't do miracles.

they may indeed get it to perform closer to the ps4's rumored higher flop count, though.

since when was the 720's gpu rumored to be 1.1-1.3 TF? It's rumored to be an HD 8800. The 7800 chip is a 2.5 TF chip at spec speed. Its mobile variant, named the 7970M, runs at 850MHz and is a 2.17TF chip @ 212mm^2.
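For anyone wondering where TF figures like 2.17 and 2.5 come from, here is a rough sketch of the usual peak-FLOPS arithmetic. The shader count of 1280 and the 1000MHz desktop clock are my assumptions (they are the commonly listed figures for the HD 7870 and 7970M, not something stated in this thread), as is counting 2 FLOPs per shader per clock for a fused multiply-add. The function name is made up for illustration.

```python
def peak_tflops(shaders: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak single-precision TFLOPS.

    Assumes each shader retires one fused multiply-add (2 FLOPs) per clock.
    """
    return shaders * flops_per_clock * clock_mhz * 1e6 / 1e12


# Assumed figures: 1280 shaders for both parts, 1000 MHz desktop, 850 MHz mobile.
desktop_7870 = peak_tflops(1280, 1000)
mobile_7970m = peak_tflops(1280, 850)

print(f"HD 7870 (assumed 1280 SP @ 1000MHz): {desktop_7870:.2f} TF")
print(f"7970M   (assumed 1280 SP @ 850MHz):  {mobile_7970m:.2f} TF")
```

Under those assumptions the numbers land close to the 2.5 TF and 2.17 TF quoted above. It is peak theoretical throughput only and says nothing about real game performance.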

that would mean a 4gb or 8gb confirmation for the final unit i think.

I have a feeling it will be 8GB just a "feeling"....

feelings are for husbands who beat their wives with golf trophies, professionals have standards.....and that standard needs to be 8gb

If they are just going with an apu these systems are going to be cheap and under powered. Steam box save us!

APU doesn't indicate anything of its performance. All it means is that the cpu/gpu is on one die. It has nothing to do with AMD's retail apus that are geared towards laptops.

We're not talking about Trinity AMD 5800K APUs here with an inbuilt GPU, at least I hope we're not. What we're talking about is a CPU and a shrunken-down 7800/8800 class discrete GPU on one single physical package sharing a load of fast RAM, in the same way the WiiU has its CPU & GPU on a single chip.

I wonder why Sony settles for the 6Gbit/s GDDR5 modules. Is there a big price difference? According to that Micron PDF, the 5/6/7 modules all have the same voltage requirements. But a 7Gbit/s module would result in 224GB/s instead of 192.

Wii U is an MCM. Separate chips on the same package.

We don't even know what kind of memory system they're gonna use, let alone the definitive frequency.

Well if the 192GB/s rumour is true it has to be a GDDR5 combination with 6Gbit/s modules at 1500MHz on a 256-bit bus.

1500 x 4 x 256 / 8 / 1000 = 192

7Gbit/s modules would be 1750MHz.

And for DDR4 it would be 1500MHz effective on a 1024-bit bus.
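The bandwidth arithmetic above can be checked with a few lines of Python. This is only a sketch of the standard peak-bandwidth formula (per-pin data rate times bus width, divided by 8 bits per byte); the function names are my own, and I'm assuming the quoted MHz figure for GDDR5 is the command clock with 4 bits transferred per pin per cycle, and that the DDR4 figure is the effective per-pin rate.

```python
def gddr5_data_rate_mbit(command_clock_mhz: float) -> float:
    """Per-pin data rate in Mbit/s, assuming 4 transfers per command clock."""
    return command_clock_mhz * 4


def bandwidth_gb_s(data_rate_mbit_per_pin: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: rate x width, 8 bits per byte, 1000 MB per GB."""
    return data_rate_mbit_per_pin * bus_width_bits / 8 / 1000


# 6 Gbit/s GDDR5 (1500 MHz) and 7 Gbit/s GDDR5 (1750 MHz) on a 256-bit bus.
gddr5_6g = bandwidth_gb_s(gddr5_data_rate_mbit(1500), 256)
gddr5_7g = bandwidth_gb_s(gddr5_data_rate_mbit(1750), 256)

# Stacked DDR4 at an assumed 1500 Mbit/s effective per pin on a 1024-bit bus.
ddr4_wide = bandwidth_gb_s(1500, 1024)

print(gddr5_6g, gddr5_7g, ddr4_wide)
```

So the 192GB/s rumour fits either 6Gbit/s GDDR5 on 256 bits or a much slower, much wider stacked interface, and 7Gbit/s parts on the same 256-bit bus would give 224GB/s.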

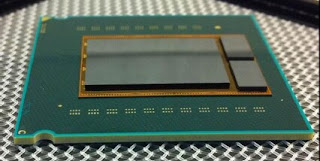

http://www.gslb.cleanrooms.com/index/packaging/packaging-blogs/ap-blog-display/blogs/ap-blog/post987_8253609600977646002.html said:

Yole is forecasting that the IBM's Power 8 chip and the Intel Haswell and the Sony PS4 will all be based on 2.5D interposer technology. [see IFTLE 88: Apple TSV Interposer rumors; Betting the Ranch ; TSV for Sony PS-4; Top Chip Fabricators in Last 25 Years] The Sony GPU + memory device may look something like the Global Foundries demonstrator shown below.

Both Micron and Samsung have announced that they will be ready to release wide IO 3D stacked memory in 2013.

Yields? On one wafer, probably the vast majority of working chips can run at 6Gbit/s but only a handful of picked ones can reliably work at 7. I'm just speculating.

Also heat.

Oh, I may have got my terminology wrong, but I was correct in what we're talking about in terms of the tech I described, wasn't I?

It's completely poor design to pay for expensive memory and waste it like that to hold almost static data.

Not really. In the longer run (and consoles are long-lived products) it's better to have a UMA architecture with a simpler board than a NUMA architecture with several memory buses/types on board. Memory prices will go down; board complexity is mostly a fixed cost.

At the same time it sounds weird that Sony was shooting for 2GB of total memory and that's it; it's obvious to everyone that that was not enough to run next gen games on top of other OS functionalities in the background.

It's not weird for a platform holder with financial troubles to shoot for the cheapest setup possible.

Also you can make all the customizations you want but there's no such thing as magic or violating basic physics principles. There aren't magic components which can multiply the performance; there are tricks which allow developers to take shortcuts to do things more efficiently, but that's it. Also if those things existed, they wouldn't be invented now, they would already be used in more high end products in different ways.

There are some things you can do in closed console hardware dedicated to just one main goal and intended to work in a rather small range of screen resolutions. Off-chip eDRAM is one such thing, large on-chip memory is another. It all comes down to having more bandwidth for less money anyway, and that's probably the only place where we can expect something that's not available in the high end PC space.

The way I understand it, an MCM and an SoC are basically the same. Multiple separate dies on a large package.

An APU is a single die that contains everything.

I'm sure the more technical gaffer can explain it better than me.
