Corporal.Hicks

Member

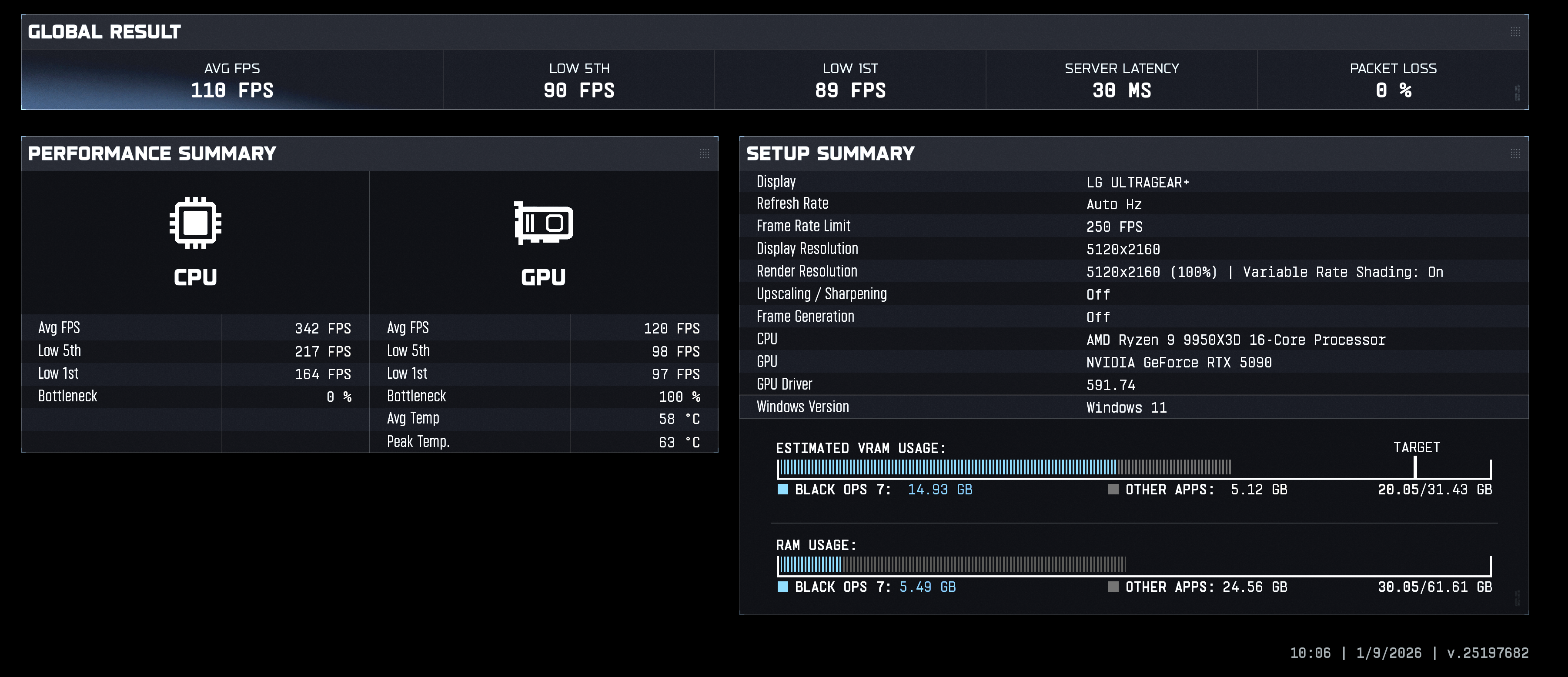

On average, it's 83%, but there are games in which the 5090 is twice as fast. For example, I saw Borderlands 3 gameplay on an RTX 5090 at 240 fps. On my PC, I get 90–120 fps. I've seen more examples like that, especially if the game uses heavy RT. There are even games that heavily rely on memory bandwidth where the 5090 is twice as fast, even compared to the 4090 (Red Dead Redemption 2 with MSAAx8 at 4K).

I didn't show any of those games in the screenshots I posted though? The 4080S is a good card. I used to have one. Went from 4090 -> 4080 Super -> 5090.

It could be twice as fast? Last I checked, on TechPowerUp it was 83% faster, so things might have changed. Look at how expensive the 5090 is; the idea of dropping settings for such a price premium is a tad ridiculous to me.

That being said, everyone has their preferences.

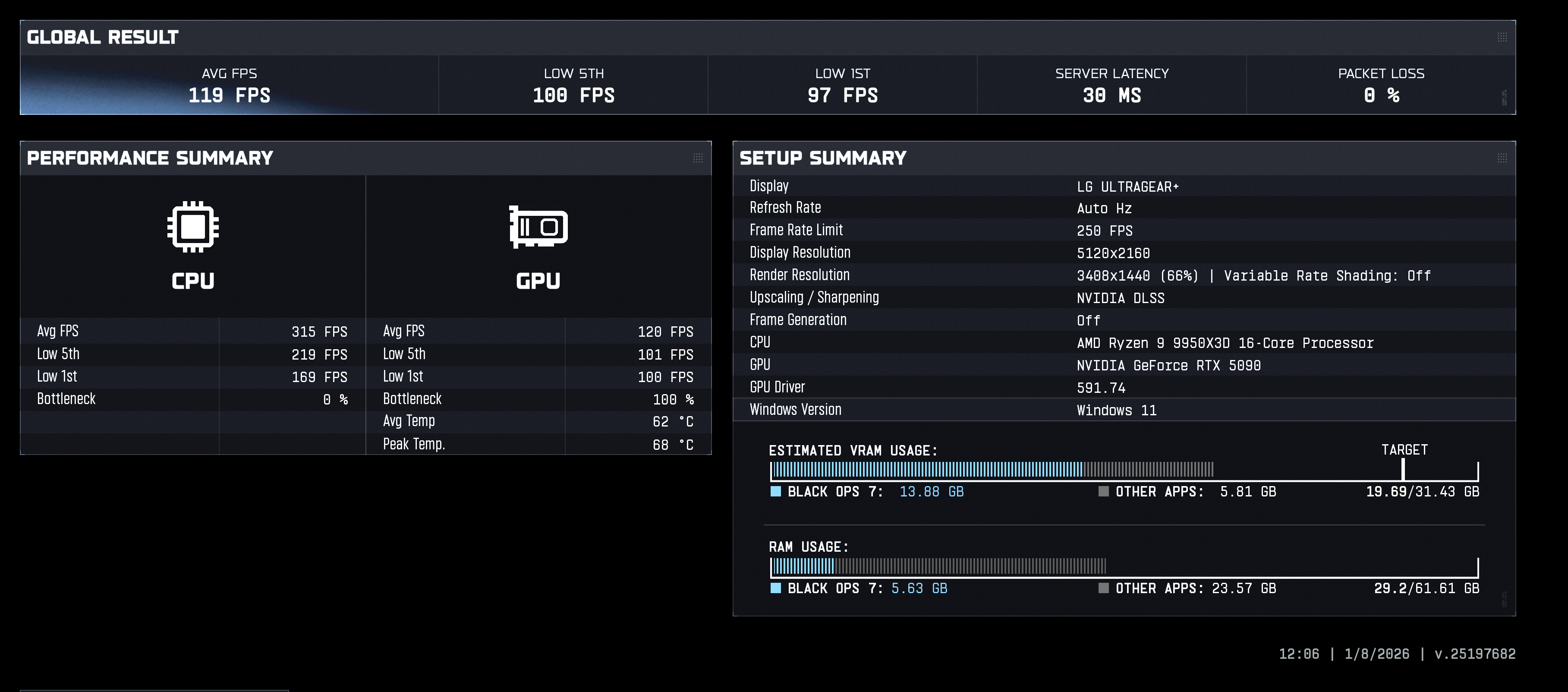

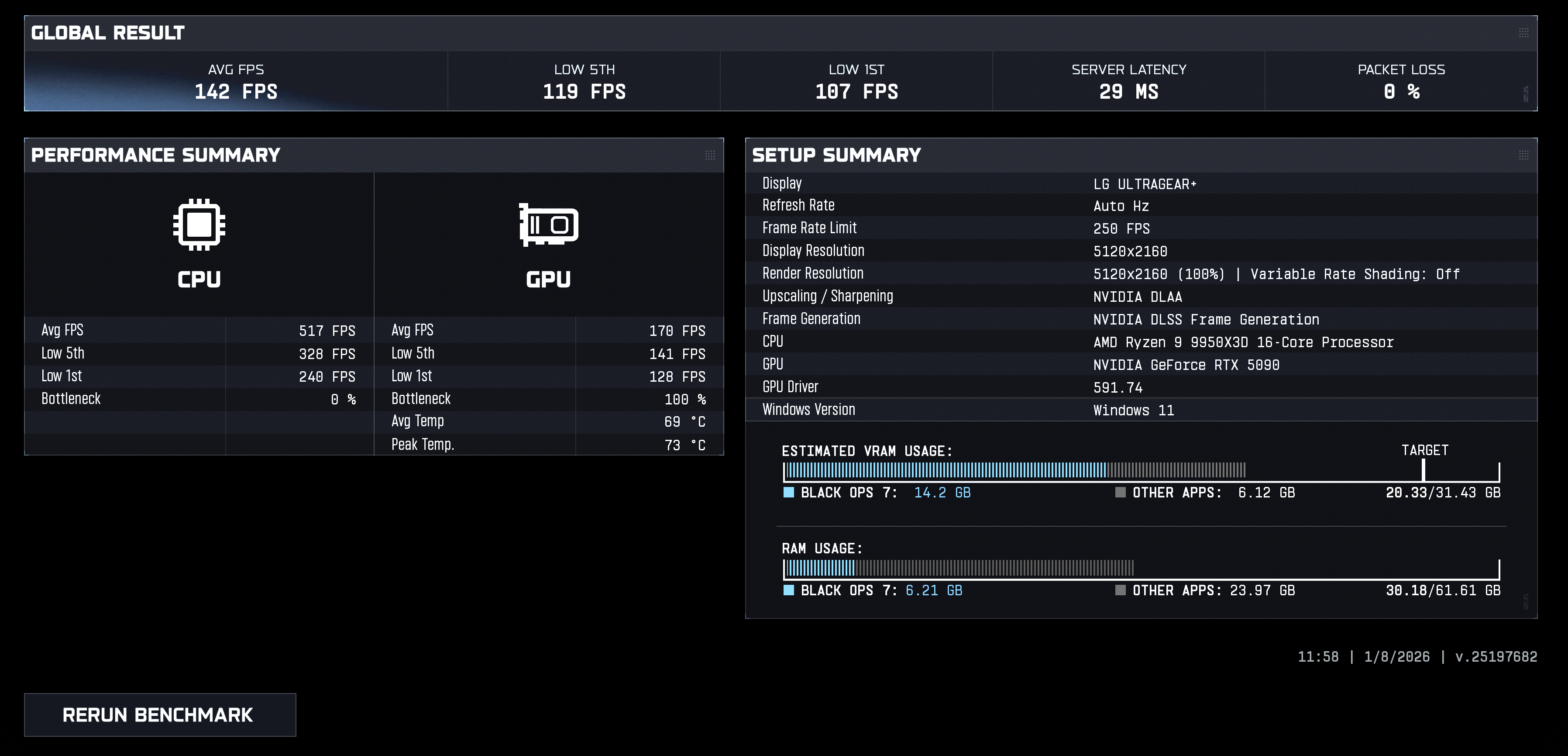

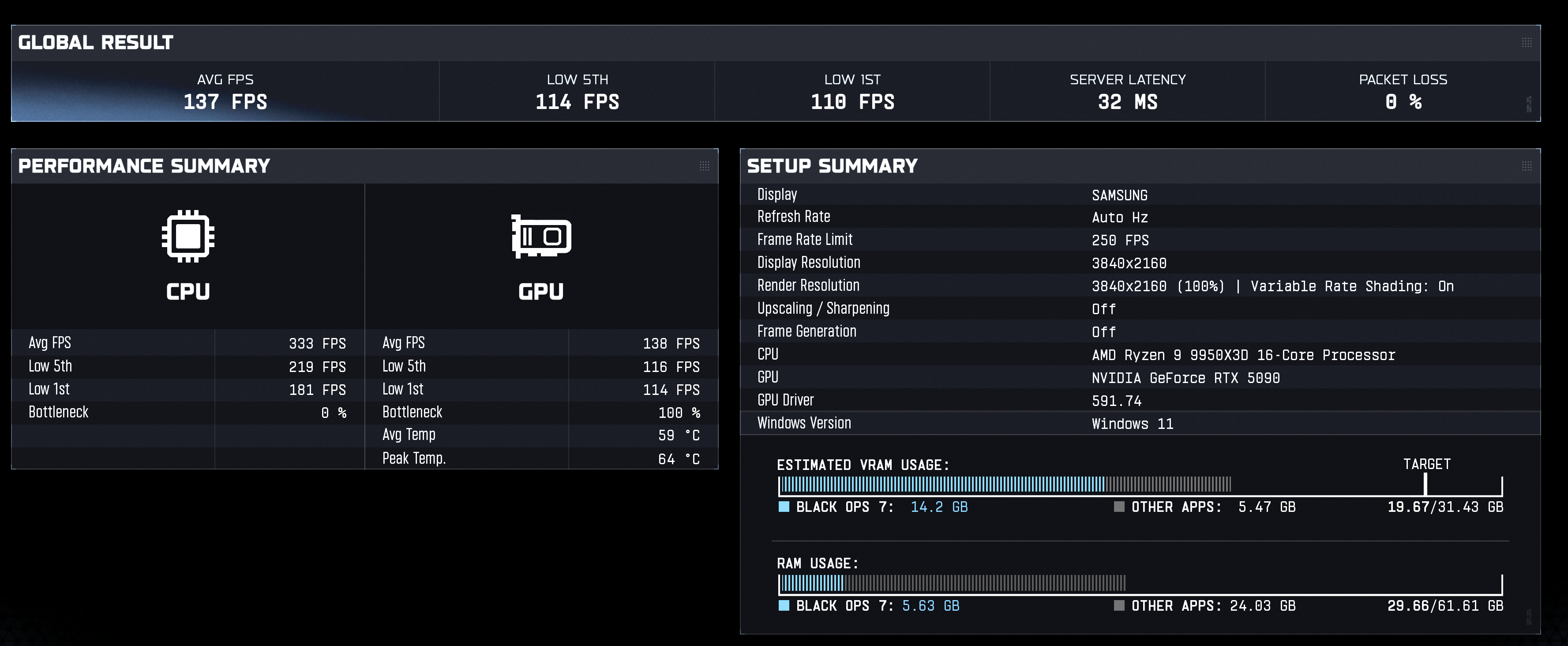

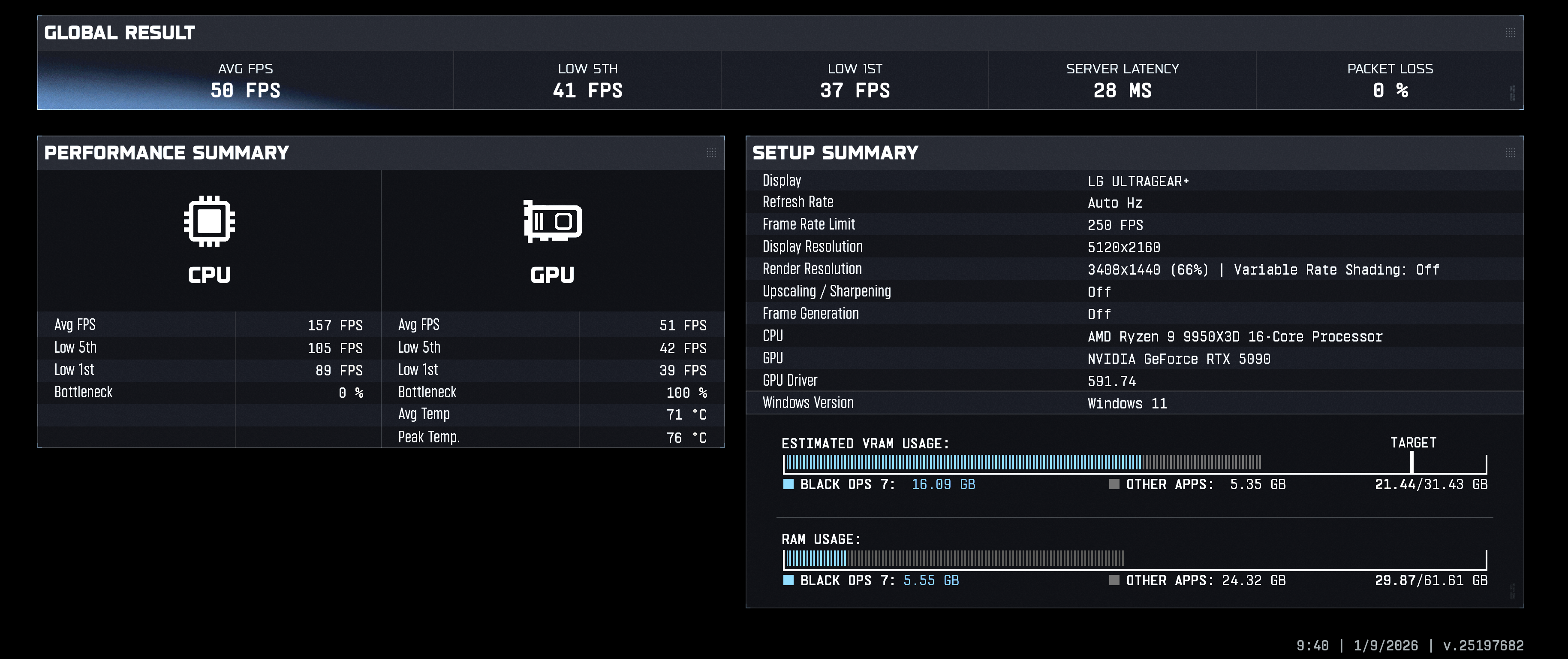

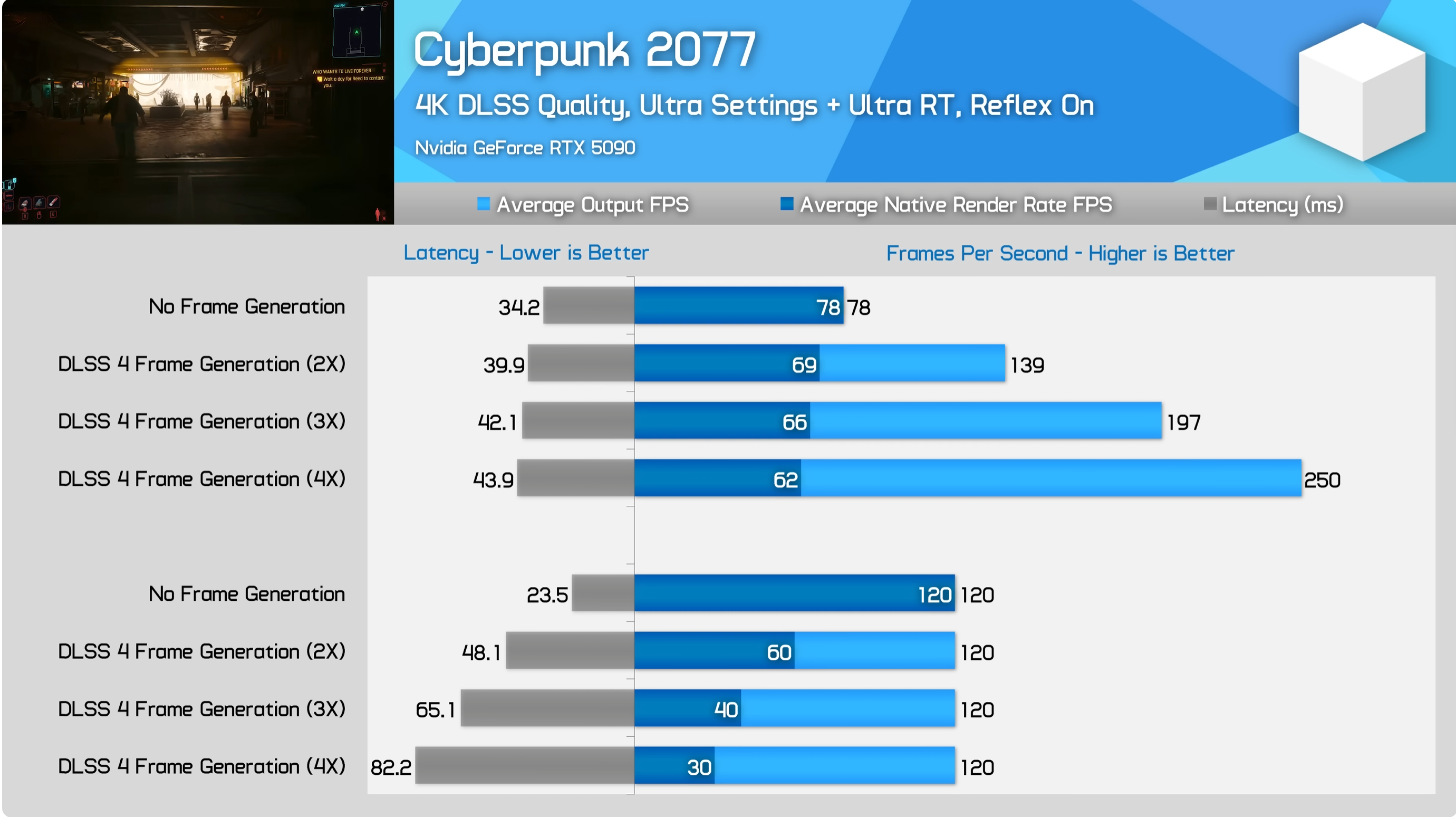

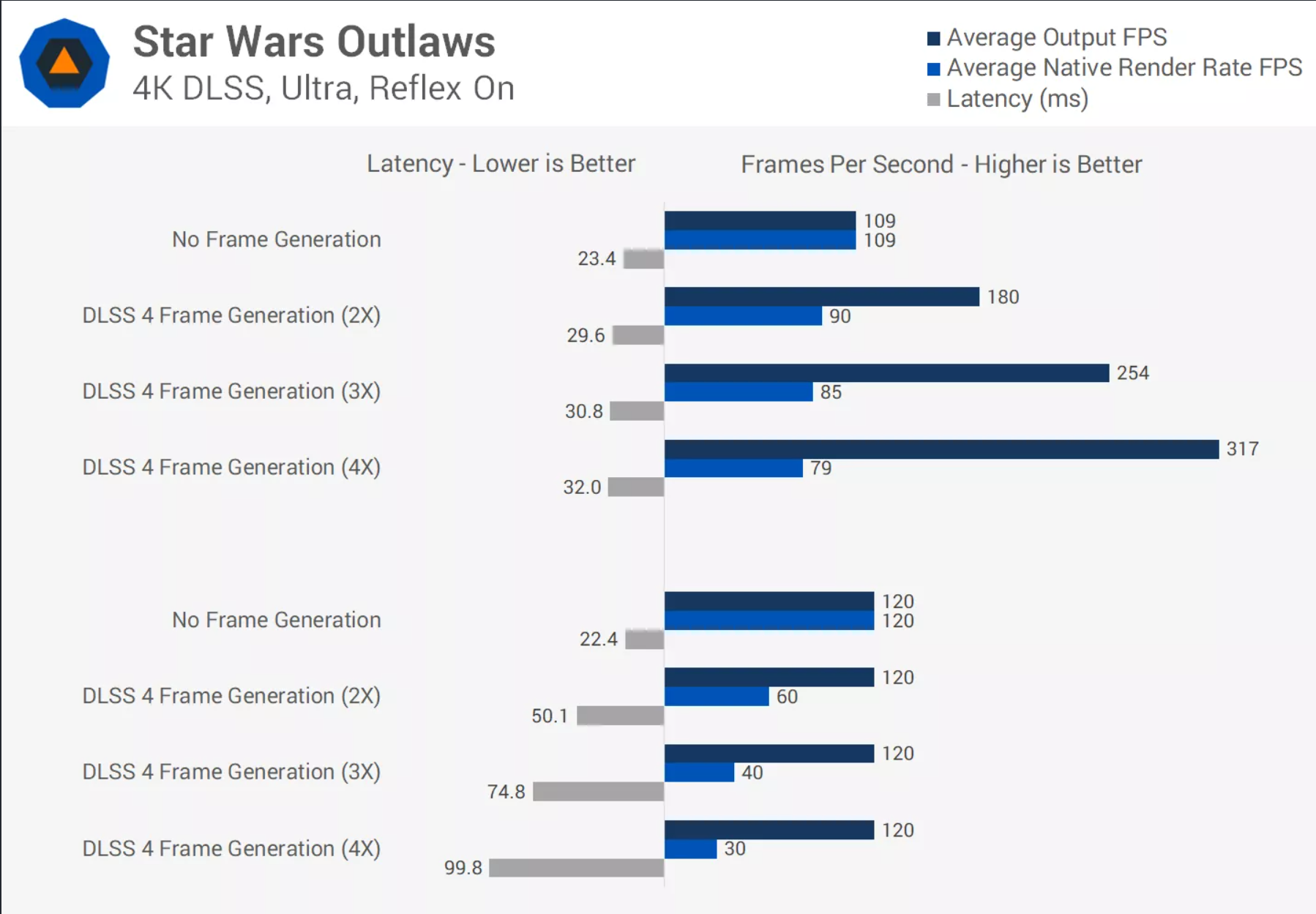

However, I agree with you that dropping settings on such an expensive card shouldn't be necessary. I don't know who's to blame: Nvidia or the developers. That said, DLSS isn't a significant compromise. I can't imagine 5090 owners preferring to lower the resolution or settings instead of using DLSS. With DLSS and MFG, the 5090 can take full advantage of a 4K, 240 Hz monitor, even in demanding UE5 games. The 5090 is only a 1440p card if people aren't willing to use DLSS or adjust some settings in the least optimized games.