falconhoof

A super in-depth look at one particular comparison across all current upscaling methods. I was tempted to play the wedding music throughout this video to show you the torture I endured over the 4 days I spent making it, but I'm a kind person.

Everything was tested at presets that attempt to upscale 360p to 720p. The zoomed-in shots therefore represent LESS than the 360p base resolution, since they only show a fraction of the screen. In the close-ups of the dancers, it's closer to what 180p upscaled to 360p would look like.
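The zoom arithmetic works out like this: if a close-up shows only 1/zoom of the frame height, both the source pixels and the upscaled pixels covering that region shrink by the same factor. Here's a rough sketch; the 2x zoom for the dancer close-ups is an assumption that matches the 180p figure, not a value measured from the video, and `effective_resolution` is just a hypothetical helper name.

```python
def effective_resolution(source_height: int, output_height: int, zoom: float) -> tuple[float, float]:
    """Return the (source, output) heights that a zoomed-in crop actually represents.

    A zoom of `zoom` shows only 1/zoom of the frame height, so the input and
    upscaled pixel counts for that region both shrink by the same factor.
    """
    if zoom < 1:
        raise ValueError("zoom must be >= 1")
    return source_height / zoom, output_height / zoom


# Assumed 2x zoom for the dancer close-ups (hypothetical, not stated in the post)
src, out = effective_resolution(360, 720, 2.0)
print(f"{src:.0f}p upscaled to {out:.0f}p")  # prints "180p upscaled to 360p"
```

At 1x (no zoom) you get the full 360p to 720p case back; any tighter crop pushes the effective source resolution lower still.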

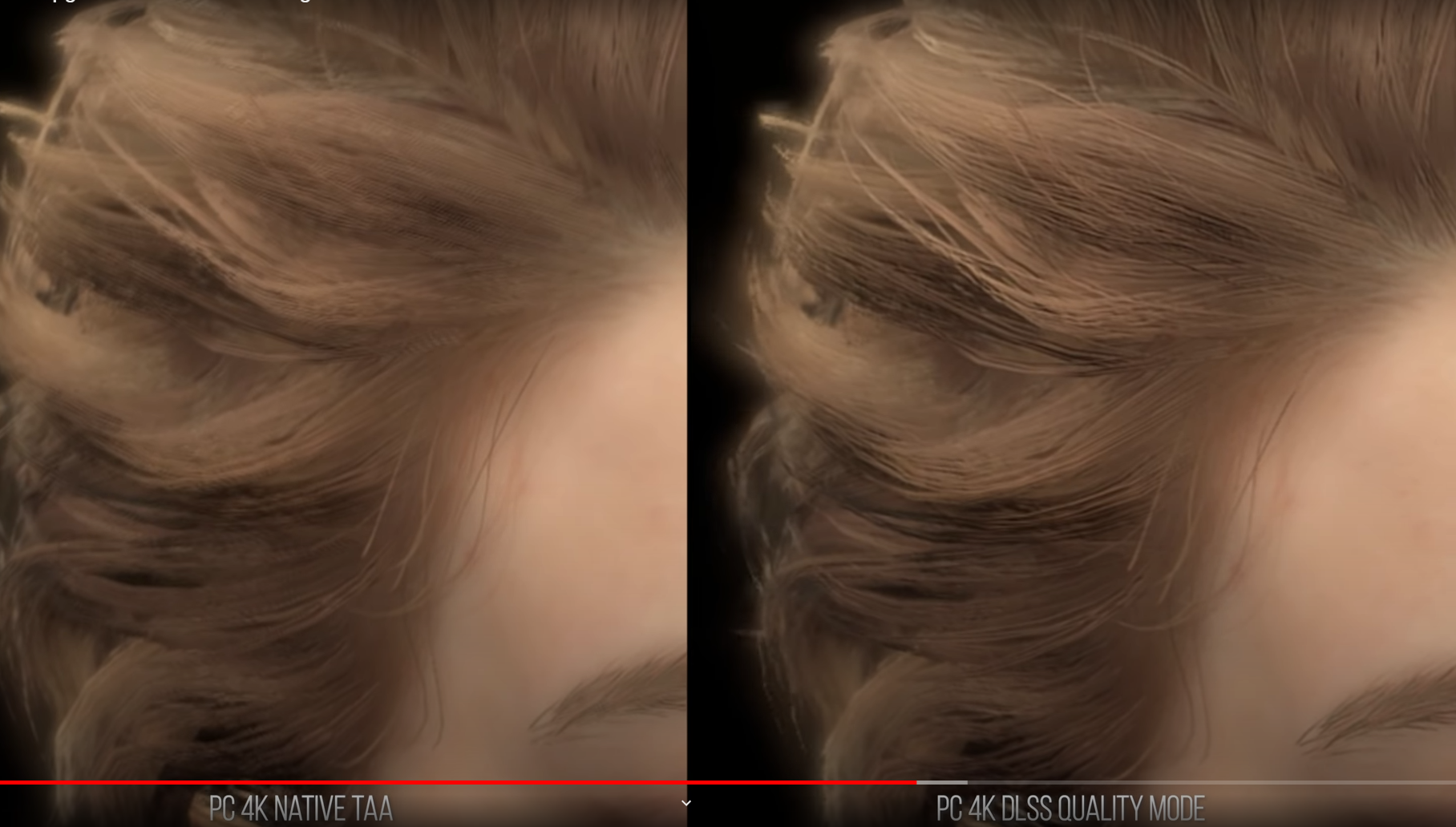

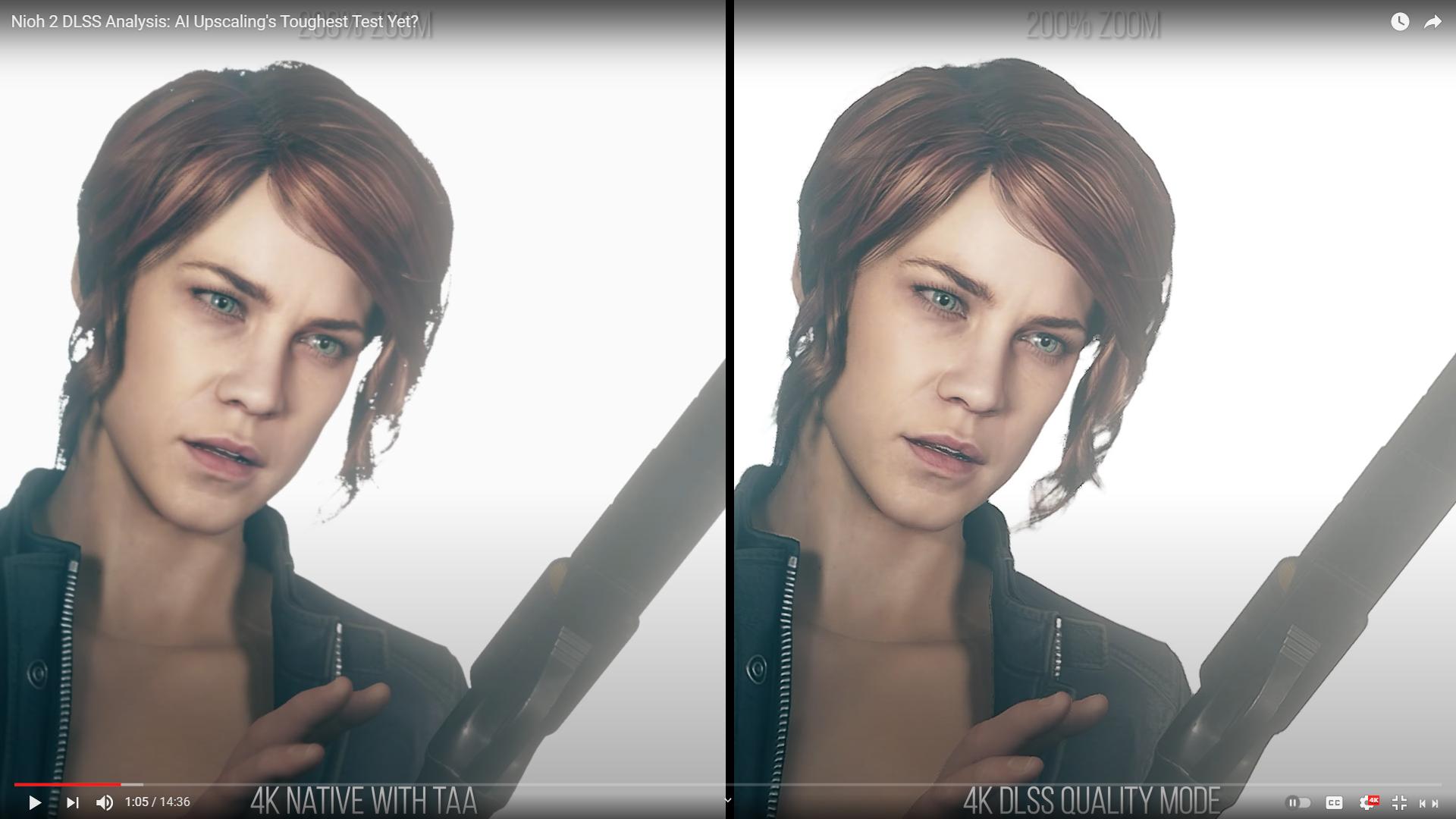

The 4K downsample comparison is really impressive.