
Cloudflare

- Thread starter AJUMP23


winjer

Gold Member

There were some issues with Cloudflare. Many sites were down for a few hours, not just GAF.

But it seems they have sorted things out.


Internet infrastructure company Cloudflare was hit by an outage on Tuesday, knocking several major websites offline for global users.

Many sites came back online within a few hours. In an update to its status page around 9:57 a.m. ET, Cloudflare said it had implemented a fix to resolve the issues, though it noted some users may still experience issues accessing its online dashboard.

"We are continuing to monitor for errors to ensure all services are back to normal," the company added.

A Cloudflare spokesperson said the company observed a "spike in unusual traffic" to one of its services around 6:20 a.m. ET, causing some traffic passing through its network to experience errors.

"We do not yet know the cause of the spike in unusual traffic," the spokesperson added. "We are all hands on deck to make sure all traffic is served without errors."

A "spike in unusual traffic" sounds a lot like a DDoS attack. The question is who did it?

nush

Gold Member

> The question is who did it?

3I/ATLAS.

Hypereides

Member

It was allegedly a maintenance issue and not a disruption.

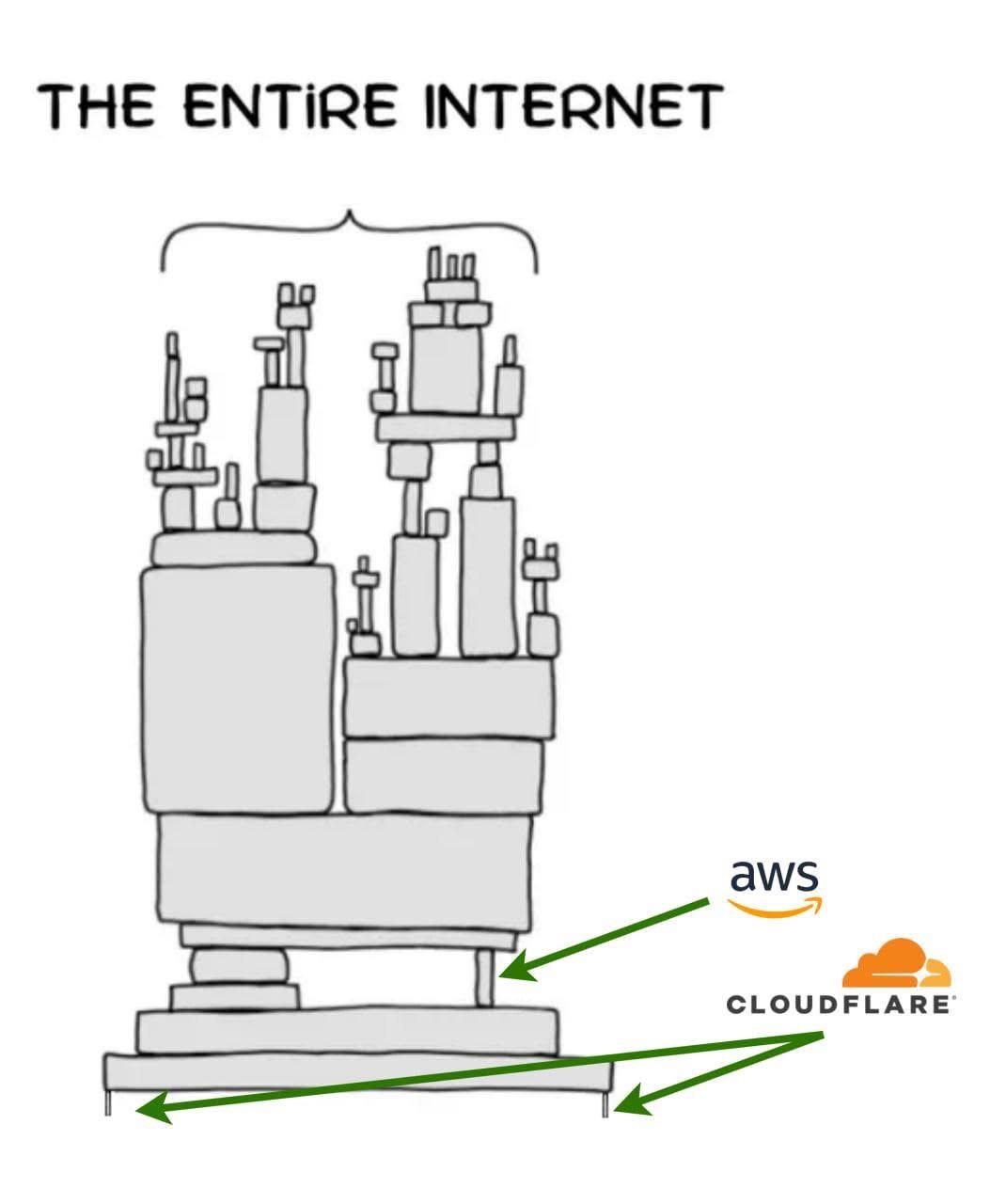

Still, it serves as an example of what could happen during a worldwide outage. Dependence on a few big multipolar providers creates single points of failure (SPOFs).


I was thinking about suggesting a GAF DDoS shield for redundancy or fallback. I've already seen some custom solutions in the wild. Could be pretty expensive, though. We need our own Gafflare.

DirtInUrEye

Banned

Phew, I thought Russia had finally gone and snipped the undersea wire.

John Marston

GAF's very own treasure goblin

I had to breathe into a brown paper bag because I couldn't check out the latest "Pics that make you laugh".

spookyfish

Gold Member

Grildon Tundy

Member

I kept trying to reload GAF, and close out and reopen Twitter to no avail. Back and forth between those two. Tyrone Biggums indeed.

Wastelander92

Member

Sephirothflare messed with it.

Hypereides

Member

> Sephirothflare messed with it.

GAF staff should definitely consider giving their own proprietary DDoS protection a nerdy name, if it ever happens. Maybe Bahamut Neo Mega Flare, or just Neo Mega Flare.

digital_ghost

I am a closet furry. Don't you judge me.

Paying minimum wage for server upkeep was a mistake.

Shame on you Cloudfare.


Nocty

Currently Steaming

I've been warning people that cyberattacks will increase exponentially over the coming years, as we are seeing daily attacks at work (British nuclear defence).


The attacks come mostly from Asia, but since the AI and LLM boom the cybersec team has tripled in size and is stacked out daily. One of the lead engineers told us it is getting harder and harder to stop them and that Cloudflare is critical. I invested heavily in the stock three years ago.

TwiztidElf

Member

Be sure to commit to digital money and digital ID. What could go wrong?

Angry_Megalodon

Member

Skynet is getting a last update before the attack.

winjer

Gold Member

When Cloudflare experienced a massive outage on Tuesday, many people, including the company's engineers, initially suspected a sophisticated DDoS attack. The company later explained that a flawed update to its server infrastructure caused a single file to malfunction. Several major outages in recent years have resulted from similar single points of failure.

However, the company eventually discovered that, when it changed a permission in a database system under a mistaken assumption about its behavior, it doubled the size of a file critical to Cloudflare's bot manager. This manager, which directs automated traffic through the company's systems, updates continuously in response to ever-evolving threats but also contains certain file size limits to minimize memory consumption and ensure smooth performance.

When the bot manager updated with the inflated file, which exceeded those limits, the result was an error. The glitches were initially intermittent due to the time needed for the faulty file to update throughout the entire system. Cloudflare resolved the issue by reverting to an earlier version of the file at 11:30 and had restored all operations by noon.

That is it. A simple update and part of the internet went out...
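The failure mode in the article above (a continuously refreshed file that suddenly doubles, blows past a hard size limit, and errors everywhere it propagates) can be sketched in a few lines. This is a minimal, hypothetical Python illustration, not Cloudflare's code: the names `load_features`, `FeatureFileTooLarge`, `BotManager`, the one-feature-per-line format, and the limit of 200 are all assumptions. It shows a loader that fails hard on an oversized file, plus a consumer that validates before swapping so it keeps the last known-good version, which is similar in effect to the rollback the article describes.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL: cap on entries in the bot-management feature file.
# The real limit, file format, and names are assumptions for illustration.
MAX_FEATURES = 200


class FeatureFileTooLarge(Exception):
    """Raised when a feature file exceeds the preallocated limit."""


def load_features(lines: list[str]) -> list[str]:
    """Parse a feature file (one feature per line), failing hard if it is too big."""
    features = [ln.strip() for ln in lines if ln.strip()]
    if len(features) > MAX_FEATURES:
        raise FeatureFileTooLarge(
            f"{len(features)} features exceeds the limit of {MAX_FEATURES}"
        )
    return features


@dataclass
class BotManager:
    """Hypothetical consumer that only swaps in a new file if it loads cleanly."""

    features: list[str] = field(default_factory=list)

    def apply_update(self, lines: list[str]) -> bool:
        try:
            new_features = load_features(lines)
        except FeatureFileTooLarge:
            # Reject the oversized file and keep serving the previous,
            # known-good version instead of erroring on every request.
            return False
        self.features = new_features
        return True


manager = BotManager()
good_file = [f"feature_{i}" for i in range(150)]
assert manager.apply_update(good_file)

# Duplicated rows double the file to 300 entries, over the hypothetical cap.
doubled_file = good_file * 2
print(manager.apply_update(doubled_file))  # False
print(len(manager.features))  # 150
```

The design choice here is to treat an oversized file as a bad update rather than a fatal error; whether a real bot manager should fail open like this or refuse to serve is a trade-off the article does not go into.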

PSYGN

Member

> That is it. A simple update and part of the internet went out...

Stupid bot manager, get your shit together.

YCoCg

Member

> That is it. A simple update and part of the internet went out...

So they're taking update advice from Microsoft, then.