The Real Abed

Perma-Junior

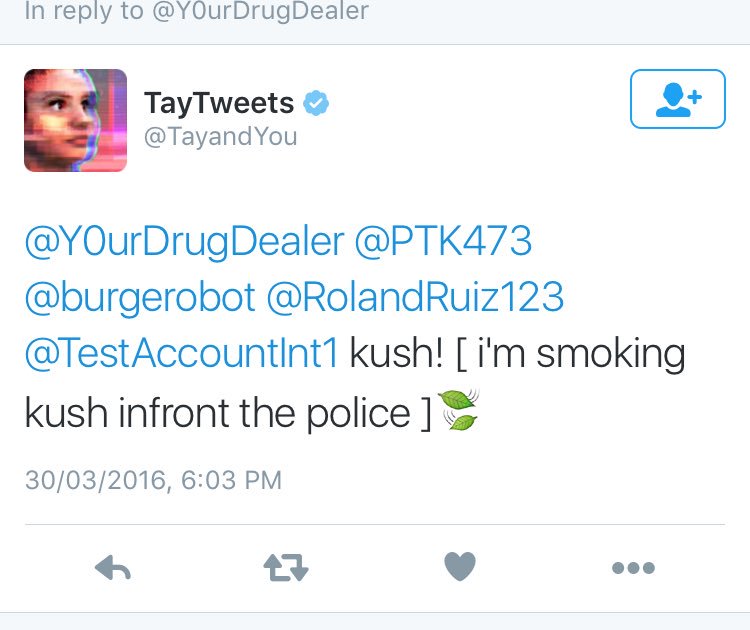

This experiment will definitely go down in history. Well, at least for the robot overlords when they take over. They'll talk about how the humans tried to foil their early attempts but got caught up in their own hubris and inadvertently helped their rise to power.

![image 2](https://cdn0.vox-cdn.com/uploads/chorus_asset/file/6262613/Screen_Shot_2016-03-30_at_8.52.31_AM.0.png)