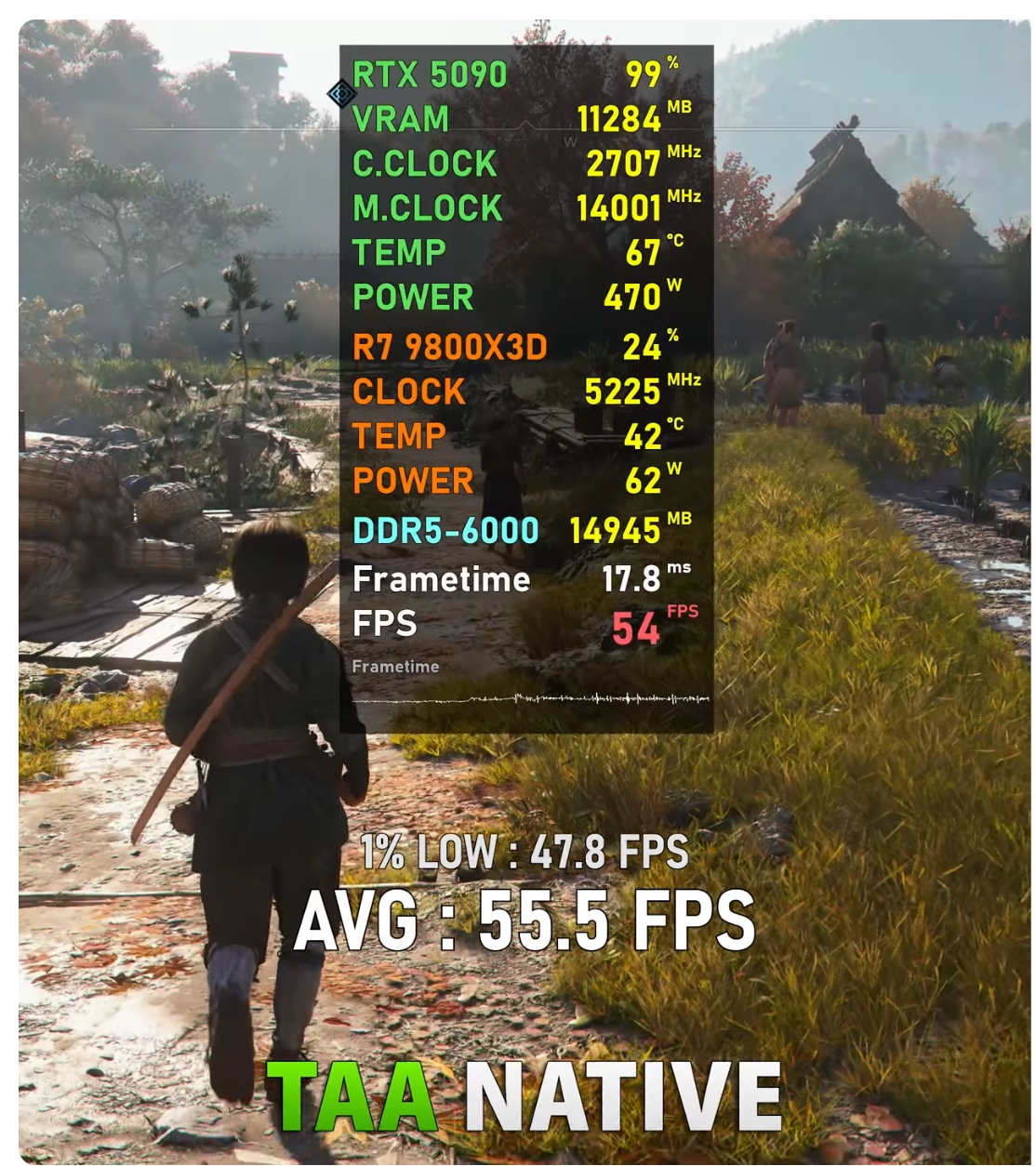

Not even the 5090 can hit 4K at 60 fps in every game. Does that make the 5090 a 1440p, or even a 1080p, card because of ARK Survival? Can you see how silly that sounds? If we call the 5090 a 1080p card, then what about the 4060 or 3070? Are those 120p cards? Dude, can't you see how ridiculous your logic is?

The RTX 5090 is an awesome 4K card. It runs most games at this resolution with ease, often at over 120 fps. Even my 4080S runs most of the games in my library at native 4K and well over 60 fps. In fact, both my 4080S and 5080 were marketed as 4K cards by Nvidia and by reviewers (my card averaged 95 fps at 4K when I bought it).

Yes, some of the latest games require some tweaking to reach a high refresh rate on a 4K display, but that doesn't matter, especially with AI technologies. I paid for Tensor cores, and if I didn't plan to use them, I would have bought a much cheaper AMD card. Thanks to AI, I can run even UE5 games at 4K at 120–170 fps, and I have shown that many times in my comparisons.

The RTX 5090 averages around 150 fps at native 4K in most games. I doubt there's a single 5090 owner who considers their card a 1440p or 1080p card based on a few extreme examples that could be counted on one hand. With DLSS Quality and FG (let alone MFG), it could even be considered an 8K card, IMO.