Gamer_By_Proxy

About to beat off

Waiting for the counter argument . . .

"Devs need to quit treating PC gamers like the red headed step child"

I don't think it's a "treating like a baby" thing, but more of a "it's easier" thing

They put in a few presets, and a lot of the quality-control work goes away.

In this era of backwards compatibility it's getting obnoxious. I sit here with an unopened copy of Xenoblade X for Switch and it's bothering me. Will it get patched for Switch 2? Will they want to charge me again for a new version? Or will I just be stuck playing at 30 fps?

What is stopping devs from giving console games simple graphics settings? I can play PC games from 15 years ago at 4k/ whatever frame rate without mods.

- Bloodborne is 1080p/30 fps on ps5

- RDR2 is 1080p/ 30 fps on ps5

- Smash Ultimate is 1080p on Switch 2

- Etc etc etc

Just bury the framerate/ res options in an advanced user menu or something. At best they are saying you're too dumb to know what these options mean. At worst they are doing it because they want to sell you the same games over and over. Waiting for patches is getting old and paying upgrade fees for devs to change an ini file is retarded. And in the case of something like Smash it's likely to never get patched because all those license holders would want a taste of the upgrade fee.

This one still hurts.

Spyro Reignited Trilogy & Crash Team Racing Nitro-Fueled still being limited to 30fps on current gen consoles when it's literally only a matter of changing a few values in an XML file is legitimately shameful. Console players should be causing a stink about it.

You thought of PC too when you read the OP, admit it.

In before PC warriors suggest you need a PC.

Oh too late.

But some of the replies to this thread have convinced me that console gamers are in fact babies and should be buying the same things over and over and paying for online.

Devs need to stop treating PC gamers like basement dwellers neckbearded virgins...

Waiting for the counter argument . . .

"Devs need to quit treating PC gamers like the red headed step child"

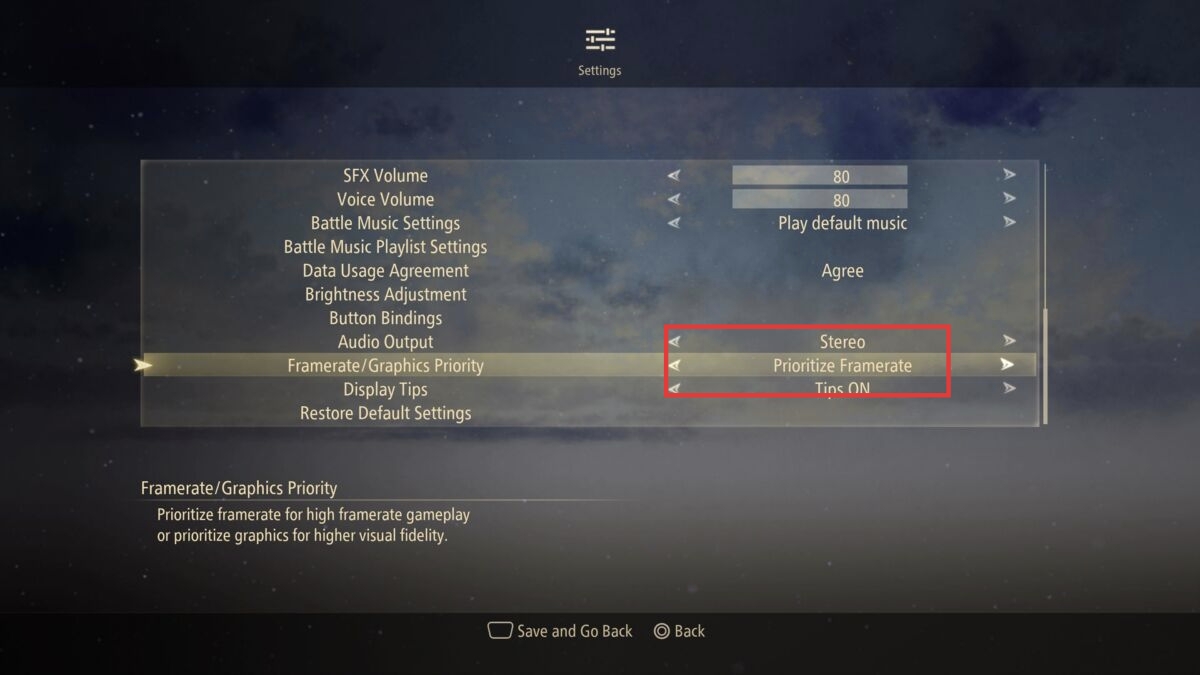

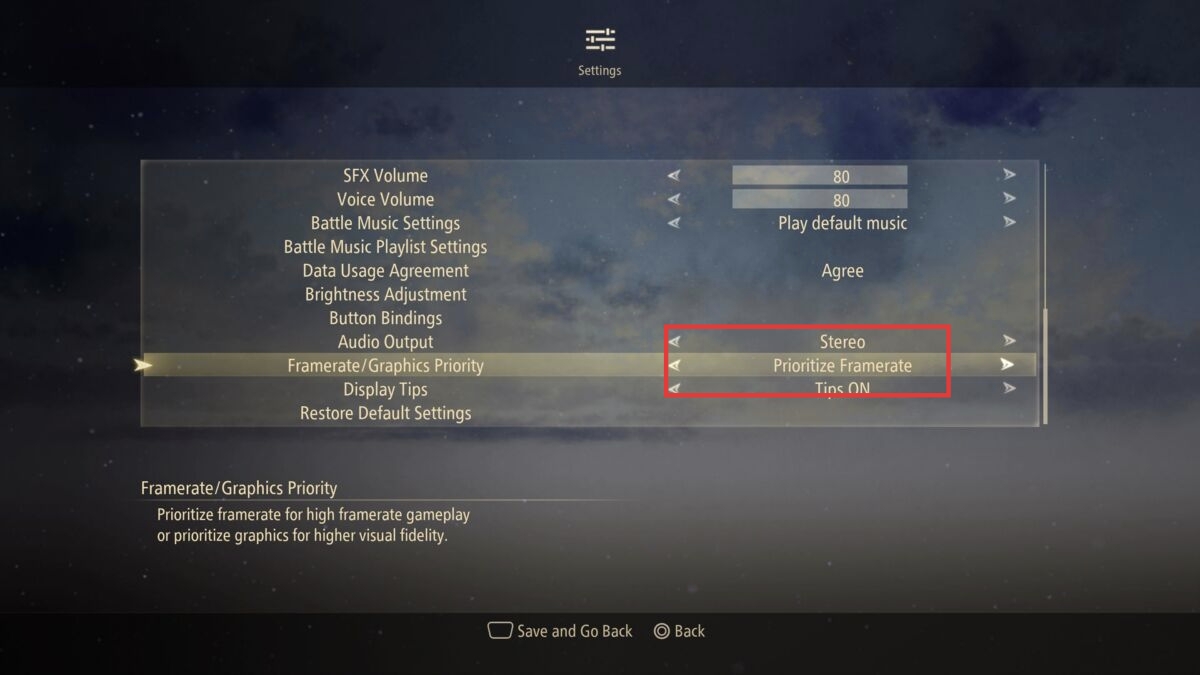

This requires implementation and testing

they don't have to target any spec. they just need to allow you to toggle between different FPS targets, and between different resolution targets... that would already be more than enough.

•1080p - 1440p - 2160p

•30fps - 60fps - 120fps

•RT On - RT Off

that's all we really need.
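For what it's worth, here is a rough sketch of what that three-toggle menu amounts to, and why "implementation and testing" still comes up. Everything in it is hypothetical (the `DisplaySettings` name, the option tuples, the helper function); it is not how any real console SDK exposes this, just the bullets above written out as data.

```python
from dataclasses import dataclass

# Hypothetical option lists, copied from the three bullets above.
RESOLUTIONS = (1080, 1440, 2160)   # vertical resolution in pixels
FPS_TARGETS = (30, 60, 120)        # frames per second


@dataclass(frozen=True)
class DisplaySettings:
    """One user-selected combination of the three toggles (illustrative only)."""
    resolution: int = 1080
    fps_target: int = 30
    ray_tracing: bool = False

    def __post_init__(self):
        # Reject anything that is not one of the offered presets.
        if self.resolution not in RESOLUTIONS:
            raise ValueError(f"unsupported resolution: {self.resolution}")
        if self.fps_target not in FPS_TARGETS:
            raise ValueError(f"unsupported fps target: {self.fps_target}")

    @property
    def frame_budget_ms(self) -> float:
        # Time available to render one frame at the chosen target.
        return 1000.0 / self.fps_target


def all_combinations():
    """Every combination a QA team would have to cover for these toggles."""
    return [
        DisplaySettings(res, fps, rt)
        for res in RESOLUTIONS
        for fps in FPS_TARGETS
        for rt in (False, True)
    ]
```

Three resolutions times three FPS targets times RT on/off is 18 combinations per game per console model, and the frame budget shrinks from about 33 ms at 30fps to about 8 ms at 120fps. Small menu, but not zero work.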

Sony actually does it decently well, even though their "unlocked fps" settings are not really unlocked... usually they have a sub-120fps target for some reason, when they should all target 120fps.

It's not always as easy as flipping a hidden toggle to unlock more performance. Chances are you won't get a stable 60fps, and some work may still be needed to make it properly smooth. Batman Arkham City Remaster is a good example. The game didn't run at a stable 60fps when it launched, so what did WB do? They capped it at 30fps through a patch rather than optimizing the remaster. You can still play the 60fps version using an unpatched disc release, but the framerate is all over the place and it definitely needed a lot of work.

There's also the case of being able to pick up and play something on the consoles without messing with the settings to find the optimal solution. I can agree that more games should have performance/quality modes, but I don't want to test individual settings to check if I'm going to gain or lose 5 fps.

The point of consoles is that the devs know everyone who owns the machine has the same specs.

This makes optimization easy, unlike in the PC market.

Now, the fact that devs are not optimizing their games to begin with, that's an entirely different conversation. Blame the devs for that.

Fiddling with graphical settings is already annoying enough on PC. I can't believe people are unironically demanding them for consoles.

Absolutely not, if I wanted to fiddle with settings I wouldn't play games on console. It's up to the developer to decide how to best present the game.

but they are guaranteed to work

Unless it's linked to game mechanics like regen or swing speed, setting framerate is a single line in the INI file.

I don't know what you're so worked up about OP. We all know that games that have locked framerates require a patch to unlock them. Many companies don't feel it's worth their time or money to make such patches for older games since it won't generate significant new sales. They'd rather release a remaster and charge for it. R* is famous for this. GTA V never even got a PS4 Pro patch.
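On the "linked to game mechanics like regen or swing speed" caveat above, here is a toy sketch of what goes wrong. The function names and numbers are made up for illustration and are not taken from any real game; the point is only the difference between adding a fixed amount every frame and scaling by elapsed time.

```python
def simulate_per_frame(fps, seconds, regen_per_frame=1.0):
    """Regen tied to the frame counter: more frames means more health."""
    health = 0.0
    for _ in range(fps * seconds):
        health += regen_per_frame
    return health


def simulate_delta_time(fps, seconds, regen_per_second=30.0):
    """Regen scaled by frame time: the result does not depend on fps."""
    dt = 1.0 / fps  # seconds elapsed per frame
    health = 0.0
    for _ in range(fps * seconds):
        health += regen_per_second * dt
    return health
```

With the per-frame version, two seconds at 30fps regenerates 60 health and two seconds at 60fps regenerates 120, so unlocking the framerate doubles the regen. With the delta-time version both come out at roughly 60. A game written the first way needs more than a one-line change to unlock safely.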

Tell me you don't understand how computers work without telling me you don't understand how computers work. You guys really think console hardware is some esoteric magic shit?

The options they put in console games are limited, but they are guaranteed to work. If you allow changing settings at will, it could cause unforeseen behavior including damaging the console.

This requires implementation and testing.

And as the majority of console players don't care, most developers find it impractical to spend resources on it.

Wanna tinker with things? There is a PC for you.

I'm the opposite. More options the better. Why limit yourself? Part of the fun of PC gaming is being able to enable extra graphics settings and turn down stuff so you can play on older or cheaper hardware.

Kind of goes against the whole plug and play ideal of consoles, though. I don't even like the current quality/performance toggles you get in some games.

Developers should just pick the intended settings, I don't want to be messing with menus and checking the impact on performance in something like Smash Bros. I hate having to do that on PC, always makes me think there's some setting I might have missed that makes the game smoother or better looking.

It's just frame rate settings.

I can't believe people are unironically demanding them for consoles.

"Closes" the thread full of hypocrisy.

Imagine if this sentiment extended to settings like joystick sensitivity, FOV, brightness/contrast, etc. Or does it, actually, in your case? Do you think the developer should be the final arbiter of the player's experience as relates to those elements, too?

If not, why draw an arbitrary line between those and graphics/performance settings? Why not give those who do want to fiddle with settings to optimize their experience the option to do so, while everyone else can remain content with the developer-chosen defaults?

I completely agree, having to toggle on and off those pesky performance modes the last 5 years killed my reason to live.

So don't. I never do this for PC games. Usually a full screen and motion blur toggle is all I need. Options aren't a bad thing.

I'm a PC gamer too. But I find that lately I really dislike going into the menus and figuring out the optimal way to run every single game.

I just need the devs to spend their precious time on optimizing the "Performance" option. No idea why I should be the one tinkering with all kinds of options until it becomes acceptable, that's not my job.

Henry Cavill knows a solution

Be more like Henry Cavill

PC is faster hardware. The pc version probably could afford to have a free camera at the time while the Ps2 version could not. (That's what my Wheaties analogy was supposed to represent lol.)

review scores are irrelevant.

anyone who played both versions at the time knows how much better the PC version felt to play.

GTA was always insanely overrated either way. like, those games took 3 iterations on console to finally get the brilliant innovation of... A FREE MOVING CAMERA... in an open world game nonetheless.

the gunplay was worse than that of most N64 games

Don't you know you need a nuclear fission degree to change resolution in the settings? That's what i heard from console guys...

It's not only trying to paint too fine a line in the first place by saying a game rated a 95 and a big hit could have been better, but it's also comparing apples to oranges in terms of platforms.

Not really... It's more like you bought the apple juice in a plastic bottle (PS4 game) and you enjoyed it for what it is, and now you want to move that juice from a plastic bottle (PS4) into a glass bottle (PS5) and you can't because the plastic bottle juice was not designed to change containers.

Basically you bought apple juice and now you're upset it doesn't taste like orange juice. Next time just buy the orange juice.

Absolutely saying what I said. You're talking PS2 and pc back in the day.

what in the actual fuck?

are you unironically insinuating that the lack of a free moving camera was a performance limitation?

you can not be serious... like... you can't...

that's just not possibly what I read just now. I must be hallucinating.

Gladly this is not a "written rule", so that's a tale pulled out of the ass, and at least 30, 60, 120fps options are more than welcome.

The appeal of a fucking console is that you get a game that is "as the developers intended" without fucking around in menus.

Sure they -could- put time into developing a 'maybe this will run on a box that allows 60fps' option... but then people who are inherently unpleasable will inevitably complain that it doesn't run at 120fps on future box + 2.

Your average console gamer just does not give a shit, they're too busy enjoying the game.

I'm flabbergasted you're trying to still hold onto this nothing burger argument that Michael Jordan could have been better if only.