LectureMaster

Monster Hunter Wilds - we can't recommend the PC version

Alex Battaglia takes on Monster Hunter Wilds on PC. Why do we keep having to mention the #stutterstruggle for PC releases?

Full article:

That's a surprise, given that our initial impressions of the game on PC were fairly positive. The game runs a lengthy shader compilation step on first launch, taking around six minutes on a Ryzen 7 9800X3D and more than 13 minutes on a Ryzen 5 3600. The graphics settings menu is also nicely designed, with fine-grained options, a VRAM meter, component-specific performance implications and preview images for particular settings.

However, there's some weirdness too. Before the game even starts, a pop-up prompts you to enable frame generation, even on low-end machines where frame generation doesn't really make sense. (Neither AMD nor Nvidia recommends using frame generation with a low base frame-rate, such as 30fps.) If you decline, the game makes sure to tell you that you can turn it on in the settings menu, which, from a reviewer's perspective, paints a poor picture of the game's expected performance.
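To put the frame-generation caveat in numbers, here's a minimal Python sketch built on a simplified model that is our own assumption, not AMD's or Nvidia's published guidance: frame generation multiplies the number of frames presented, but newly rendered game frames, and therefore the cadence at which your input shows up on screen, still arrive at the base rate. The function names are hypothetical, and the model ignores the extra latency that real frame-generation implementations add.

```python
def frame_time_ms(fps):
    """Interval between frames, in milliseconds, at a given frame-rate."""
    return 1000.0 / fps


def frame_gen_summary(base_fps, multiplier=2):
    """Simplified model (an assumption): frame generation multiplies the
    presented frames, while newly rendered game frames still arrive at
    base_fps. Ignores the extra latency real implementations add."""
    presented_fps = base_fps * multiplier
    return {
        "presented_fps": presented_fps,
        "presented_frame_time_ms": frame_time_ms(presented_fps),
        "rendered_frame_time_ms": frame_time_ms(base_fps),
    }


summary = frame_gen_summary(30)
# Prints: 60fps on the counter, new game frame every 33.3ms
print(f"{summary['presented_fps']}fps on the counter, "
      f"new game frame every {summary['rendered_frame_time_ms']:.1f}ms")
```

Under this model, a 30fps base reads as 60fps on a counter with a presented frame time of roughly 16.7ms, yet the game still produces a new frame only about every 33.3ms, which is why a low base frame-rate remains a poor starting point.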

Once past the pop-ups, I did what any casual user would: I accepted the game's auto-detected default settings and set DLSS to Balanced mode at 1440p on the RTX 4060. Loading into the game revealed more issues, with PS3-level texture quality in many shots and many textures that looked like they had loaded incorrectly: one character's white coat was turned multi-coloured by mosaic artefacts due to poorly configured texture compression.

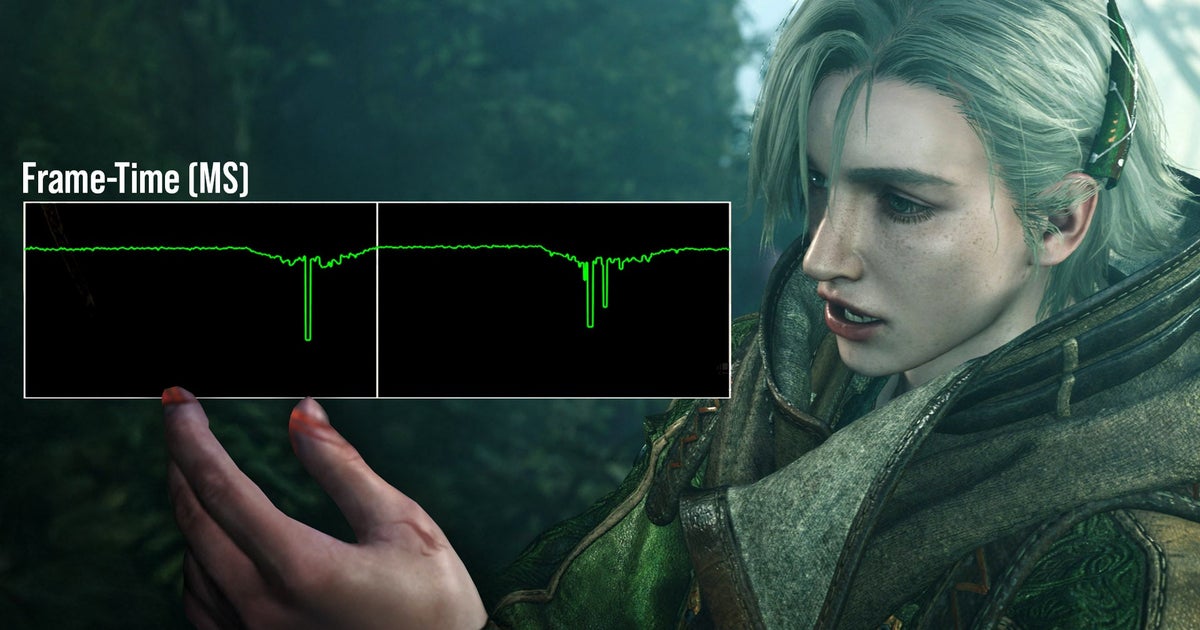

After gaining control of the character following the intro cutscenes, every turn of the camera brought noticeable stutters. This sort of stutter is often triggered by streaming textures into VRAM, but the VRAM meter in the settings menu indicated we were comfortably within budget, which makes it an unhelpful indicator for a casual user. Dropping texture quality from the default high to medium solved the camera-turn stutter on the RTX 4060 8GB, but I saw similar texture quality problems on the RTX 4070 12GB too. It's also worth noting that users of higher-end GPUs can download "highest" quality textures as separate DLC, but this wasn't available during our pre-release testing.

Back on the RTX 4060 with medium textures in place, the huge stutters are gone, but the results still aren't great. Texture quality is now reminiscent of games from the early 2000s, and the frame-rate still dips noticeably, with a hit to frame health, when standing in place and turning the camera. If we turn the camera extremely gradually, the sinusoidal rhythm we saw previously smooths out as frame health improves. This is a very odd performance characteristic in what is essentially an empty desert with tiled sand textures, and not something we expect to see in any game, let alone a triple-A release in the year of our Lord 2025.
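For readers who want to check their own captures for this kind of camera-turn hitching, here's a minimal Python sketch that flags frame-time spikes in a list of frame times in milliseconds, the sort of data that frame-time capture tools such as CapFrameX can log. The function names, the 30-frame window and the 2x-median threshold are illustrative assumptions; this is not how CapFrameX, RTSS or Digital Foundry compute their metrics, and 1% lows have several competing definitions. The capture below is synthetic, not measured data.

```python
from statistics import median


def find_stutters(frame_times_ms, window=30, threshold=2.0):
    """Return indices of frames slower than `threshold` times the median
    of the preceding `window` frames (illustrative values, not a standard)."""
    stutters = []
    for i in range(1, len(frame_times_ms)):
        recent = frame_times_ms[max(0, i - window):i]
        if frame_times_ms[i] > threshold * median(recent):
            stutters.append(i)
    return stutters


def one_percent_low_fps(frame_times_ms):
    """Frame-rate implied by the mean of the slowest 1% of frame times
    (one of several definitions in use). Expects a non-empty list."""
    slowest = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest) // 100)
    return 1000.0 / (sum(slowest[:count]) / count)


# Synthetic capture: a steady ~60fps (16.7ms) with two camera-turn spikes.
capture = [16.7] * 200
capture[50] = 60.0
capture[120] = 45.0

print(find_stutters(capture))  # [50, 120]
print(round(one_percent_low_fps(capture), 1))  # 19.0
```

Comparing each frame against a rolling median rather than a fixed target keeps the check meaningful at any base frame-rate, and the spike itself does not drag the median up for the frames that follow.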

I've seen similar behaviour before in games that exhibited occlusion culling issues on slower CPUs, but this game is running on a Ryzen 7 9800X3D. Otherwise, I've only seen this sort of issue when a game is doing something clearly suboptimal while streaming data onto the graphics card, and here the behaviour persists even with texture quality at its lowest setting. This could be a problem for the RTX 4060 in particular, but the 4070 shows similar issues, albeit with higher base frame-rates.

This is where I have to start theorising. I can't be 100 percent sure, but if I had to guess, I would say that this behaviour is due to DirectStorage GPU decompression. The game's files do include DirectStorage DLLs, so it's possible that the game is streaming (and potentially decompressing) textures on the GPU overly aggressively when the camera turns, despite the VRAM headroom shown by RTSS and CapFrameX. That would produce a frame-rate impact felt most strongly on compute-challenged cards like the RTX 4060.

This is all theoretical, of course, but the measured behaviour is enough for us to not recommend this game to anyone with a graphics card with 8GB of VRAM or less. Faced with a choice between high textures that stutter while still looking sub-par and medium textures that look even worse, neither option is one we can recommend. I also saw similar issues on the RTX 2070 Super, RX 5700 and Arc A770, with Intel's GPU in particular running extremely poorly, at around 20fps with textures failing to load, despite similar settings.

For higher-end GPUs, I would also advise caution. Playing through the introduction on the RTX 4070, I saw consistent stuttering even on the high texture setting, so it's logical to assume this could get even worse with the higher-resolution texture pack installed, an assumption backed up by results from the benchmark tool. Based on my measurements in the base game, I believe the stutters are not caused by running out of VRAM, but could instead be related to constant streaming and perhaps real-time decompression.

To sum up, owners of lower-end graphics cards with less VRAM should avoid Monster Hunter Wilds until these issues are rectified. Higher-end hardware can brute-force the game's shortcomings to some degree, but it's still hard to recommend such a flawed technical outing, or even to derive optimised settings for it. For now, we'll leave things there, but I hope we see some much-needed improvements before too long.