Buggy Loop

Gold Member

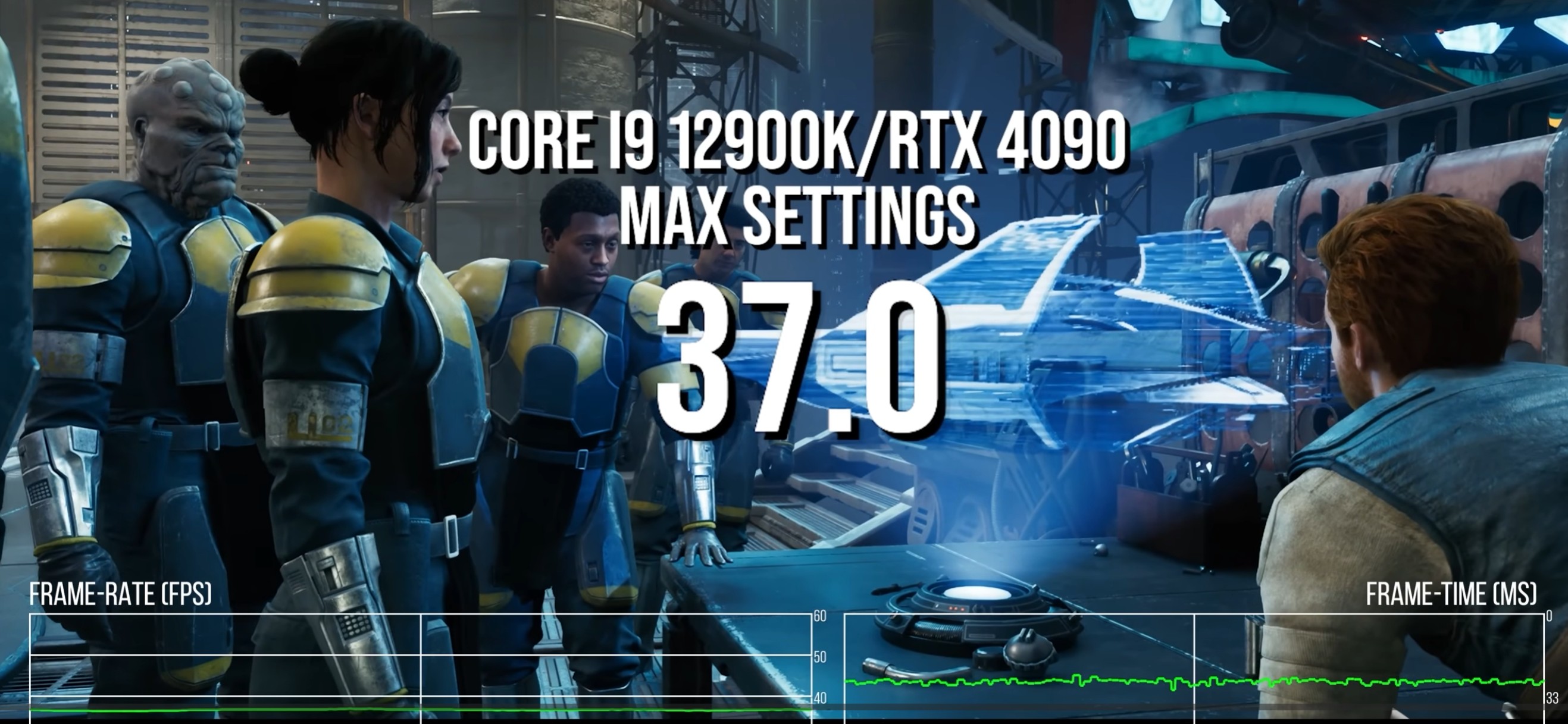

While looking into denoising with DLSS 4.5 in Unreal Engine 5 games, Alex made an intriguing discovery: Star Wars Jedi Survivor has custom console commands that ease the CPU burden and vastly reduce the camera and animation errors still seen in the game to this day. Alex believes these may be CPU optimisations for consoles that lie dormant in the PC build, but that can still be used. So is this a complete "fix" for this most troublesome of PC ports? As you might imagine, the reality is a little more nuanced.

When script kiddies can improve a game from an EA-backed studio. Fucking shame, Respawn. What a mess the Jedi games have been on PC.