Topher

Identifies as young

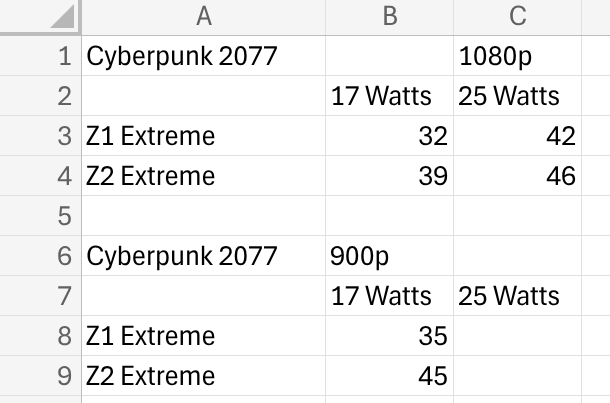

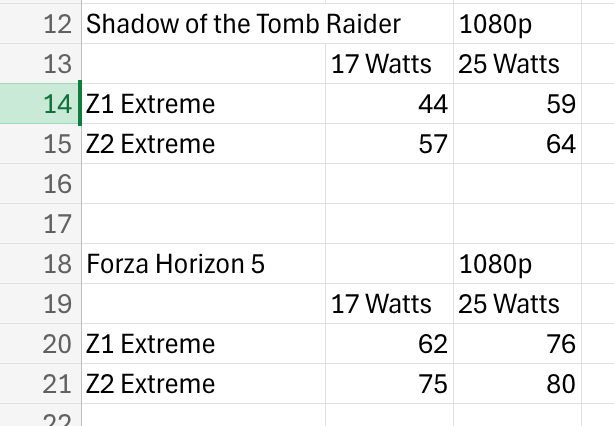

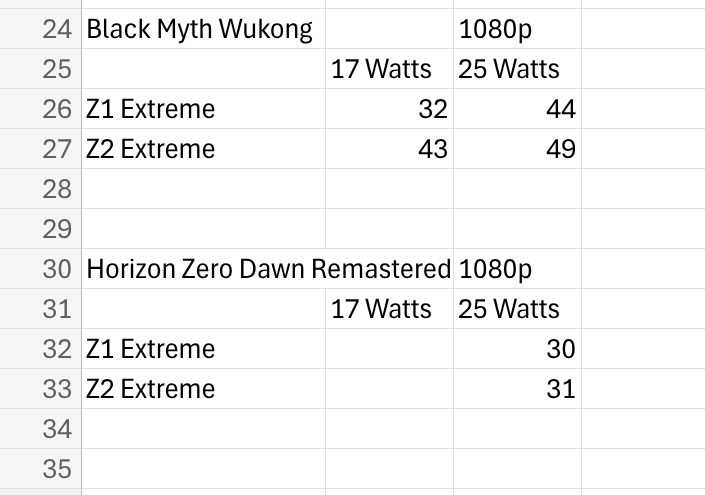

I summarized the testing below. The blank cells are tests he skipped.

Based on this, the only reason to get a Z2 Extreme is if you really want to maximize performance on battery, and even at 17 watts the gains aren't massive. At 25 watts the difference is very small, between 1 and 5 fps.