feynoob

Banned

What's your motherboard?

That's the most important thing for determining whether or not you have a viable upgrade path or if you'll need to do a new build from scratch.

MSI A320M/ac

Next gen. Time to upgrade ya shit or.........get a PS5.

But the PS5 version runs like shit, so....

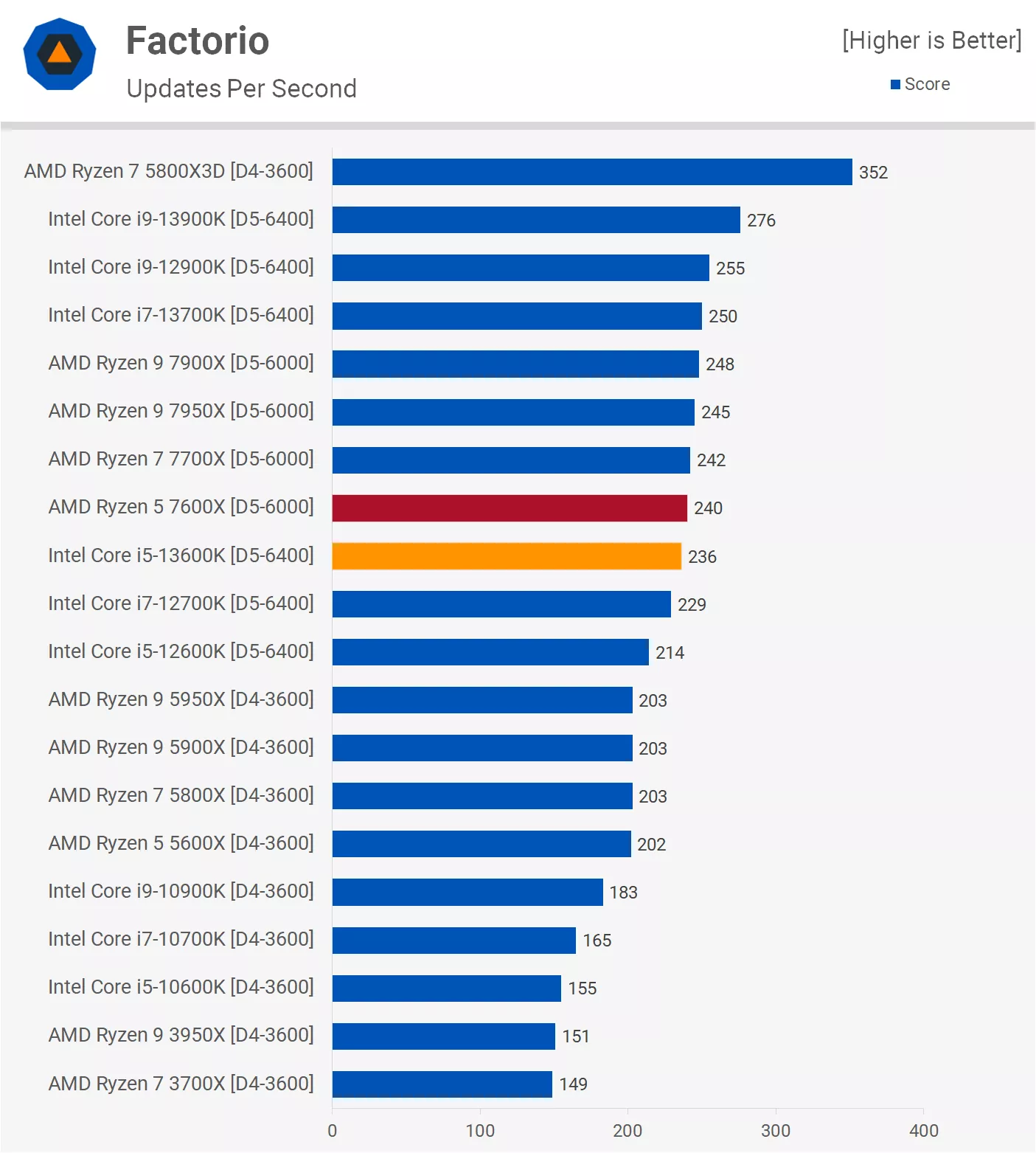

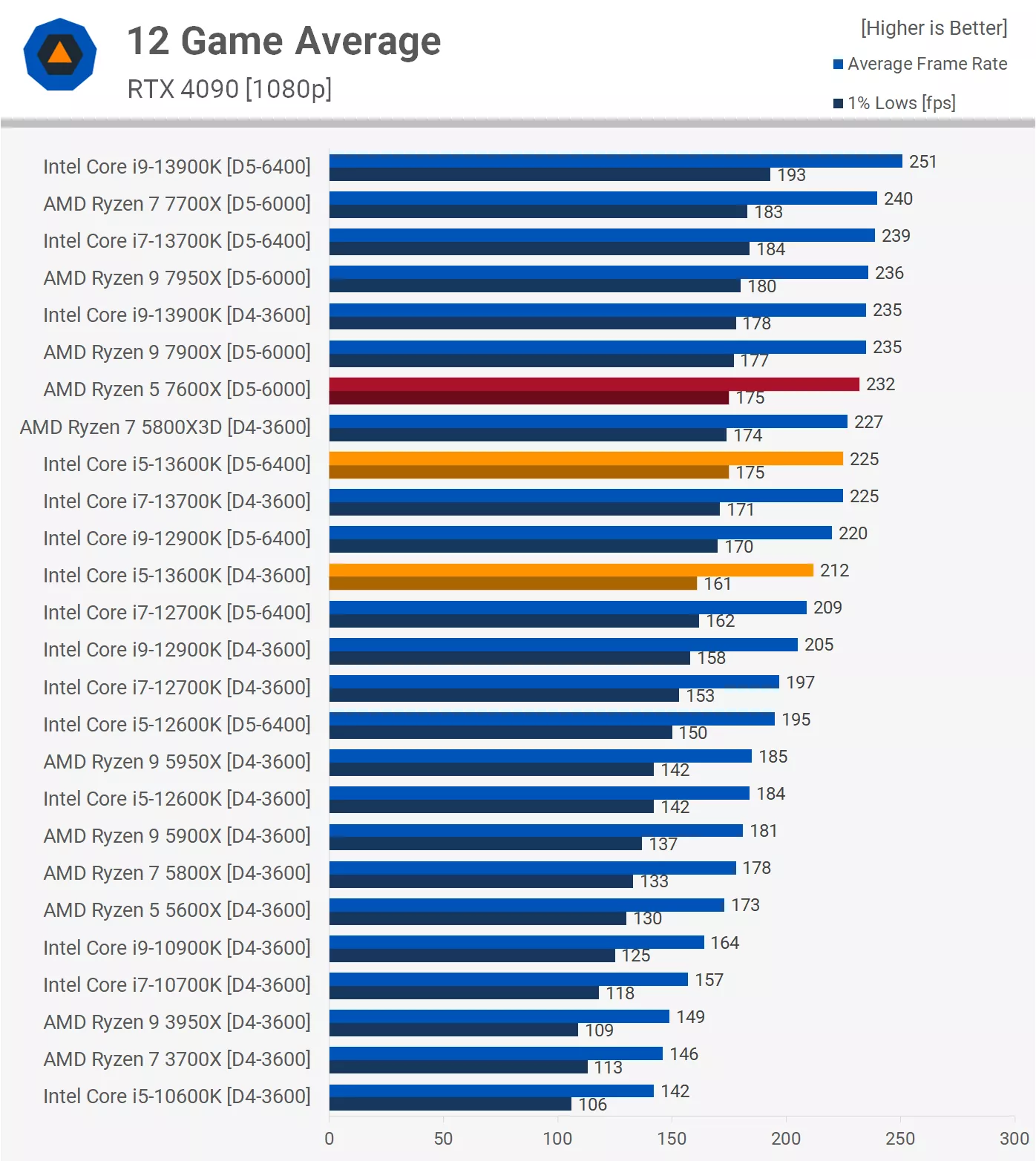

Best take in this thread when it comes to the number-of-cores discussion. IPC is king, and total CPU performance matters way more than the number of cores for gaming, since most games run the same with 6 cores (often even 4) enabled as with 8+, and some even run a tiny bit worse with HPET/SMT enabled than without it.

I've been effectively saying:

Once you are at 6 cores, the number of cores needed for gaming this generation won't matter as long as IPC and cache keep going up.

The 3600 was more than enough when it launched.

The 5600 was more than enough when it launched.

The 7600 was more than enough when it launched.

At no point since Ryzen 3000/10th generation have more than 6 cores proven to be a thing that's needed for high-end gaming.

And I'm still standing by those statements: this generation there won't be many games that actually load up more cores and see any sort of major benefit, assuming you are comparing similar IPC.

Obviously new generation parts are better than old generation parts; no one is denying that and it isn't something that needs to be stated.

But "in generation" 6 cores have been within 5% of their higher core brothers pretty much every time......X3D notwithstanding.

Games use a master thread and worker threads, and the master thread does most of the heavy lifting, so if your IPC is high enough, 6 cores will suffice even for high-level gaming.
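A rough way to put numbers on the master-thread argument is Amdahl's law. This is only an illustrative sketch: the 0.5 parallel fraction is an assumed value, not a measurement from any game, and `amdahl_speedup` is a hypothetical helper name.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core when only part of the work scales across cores."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Assumption for illustration: half of each frame's CPU work is stuck on the master thread.
ASSUMED_PARALLEL_FRACTION = 0.5

for cores in (4, 6, 8, 16):
    speedup = amdahl_speedup(ASSUMED_PARALLEL_FRACTION, cores)
    print(f"{cores:>2} cores: {speedup:.2f}x over one core")
```

Under that assumed split, going from 6 to 8 cores is worth under 4%, while anything that speeds up the serial part (IPC, cache) helps every frame. A game with a much more parallel workload would shift those numbers.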

If we are targeting 60 fps then even older generations of 6-core CPUs will be able to hold their own (the 10600K is still an absolutely stellar chip).

Once we reach 4K and really start getting GPU bound then those extra 10 cores matter even less.

When it comes to the 12th gen CPUs, the 12400 is a worse bin than the 126/7/9K, and that is where the advantage is really being made, not because they also have e-cores or more p-cores.

So if you are building a new system you can save money by buying the current cream-of-the-crop 6-core and enjoy 99% of the performance of the most expensive chip.

You are in generation, so if inexplicably that ~10% differential in 1% lows starts to bother you in however many years, then there is an upgrade path.

Or get whatever the current generation's 6-core CPU is.

Can't find an MSI model with that exact name but there's this Asrock one?

https://www.asrock.com/mb/AMD/A320Mac/index.asp#CPU

If it's that then with a bios update you'll have some very good CPU upgrade path options but you will need to check what the VRMs on your motherboard are capable of.

I'd start with a CPU and RAM upgrade, run some benchmarks to make sure your motherboard is up to the task and if it is then look at the GPU (and likely the power supply as well).

So it's 20% at Greenmangaming lol

Remember when entire publishers made stinks over this in the past? Not sure how to take it if Square is okay with that launch discount

I'm seeing it at full price? Also how are they selling it in the first place, I thought it was only supposed to be available on the actual launchers, like Steam?

They sell keys. Sign in with an account, it's 55.99

Still think this game surprises people sleeping on it.

Looking forward to all the smart-ass and sassy-attitude YT compilations of Frey this game will spawn.

I was only in the GPU threads unfortunately.

If you're going for the 13600K then you needn't worry too much due to the architectural changes.

However if you're going for one of the older AMD 6 core chips then I'm sure Black_Stride will pay for your upgrade when your games start stuttering

I got a 13600k.

It's that motherboard.

I definitely need a RAM upgrade. I am on 8GB of RAM as of now. Also my graphics card is an RX 570, which isn't sustainable at this point. It's hard to play GOW on it.

Would a RAM and GPU upgrade sustain me for a little bit, until I can upgrade from the Ryzen 3 3100?

I got a 13600k.

I guess that if I don't care about going over 60 frames at 4K I should be fine.

Came close to getting this. Then considered the 13700K instead because I was afraid it would start to not quite deliver a few years from now. Ended up getting the 13900K because I had the money and do a lot of other stuff that requires a beefy CPU.

I'm sure GHG would be okay to provide upgrade money for people who get bottlenecked at 40 FPS (see GKnights, A Plague Tale Requiem, Witcher 3 Novigrad and many more that will follow) with their shiny new 4070 combined with their almighty 8 core beast 3700x. Since he believes in generations, I guess if you go for a 3700x + 3070 build and keep your CPU around, that CPU should be able to reliably feed the new 4070. No? It's just one generation of GPU difference. I mean it WAS an 8 core expensive behemoth beast CPU that you should've picked over the 3600 if you wanted futureproofing and upgrade paths for your next GPU. Surely it should at least allow for 1 generation of GPU upgrade. Imagine the horrors when nearly 40% of the 4070 sits chilling at 1440p waiting for the CPU to render frames. Surely the 3700x should've made a difference over the puny shitty unusable 6 core POS that is called the 3600. If not, I'm sure compensation would be in order from the person who suggested that CPU over the 3600, since the extra money paid for the 3700x now practically has no meaning: the person in question will also have to upgrade to an altogether new CPU to keep the new 4070 beast fed. After all, having 10-15% better 1% lows and 5-10% more framerate here and there won't be able to fill that huge 40% GPU utilization gap that the 4070 would have with a 3700x. That is, if that ever happens, which is most likely to happen.
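For a sense of scale on that 40% figure, here is a back-of-the-envelope sketch. It assumes GPU utilization scales linearly with frame rate in a CPU-bound scene, which is a simplification, and `required_cpu_speedup` is a hypothetical helper, not something from a monitoring tool.

```python
def required_cpu_speedup(gpu_utilization: float) -> float:
    """How much faster the CPU side must get to saturate the GPU.

    Simplifying assumption: in a CPU-bound scene, GPU utilization rises
    linearly with the frame rate the CPU can deliver.
    """
    if not 0.0 < gpu_utilization <= 1.0:
        raise ValueError("gpu_utilization must be in (0, 1]")
    return 1.0 / gpu_utilization


# A GPU with 40% of its capacity idle is running at 60% utilization.
speedup = required_cpu_speedup(0.60)
print(f"CPU needs to deliver about {speedup - 1:.0%} more frames to keep the GPU fed")
```

Under that assumption the CPU would need to deliver roughly two thirds more frames, which is far beyond a 5-10% framerate edge between two chips of the same generation.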

Bitches be like "next gen, upgrade pc or get a ps5"

My brother in Christ the ps5 is equivalent to a rtx 2060 super and a 3700x

It's entirely on the port

13600K is the best bang for your buck high-end CPU right now.

If you keep using your 13900k in 2028 and do not upgrade or invest in a newer platform for video games by that time, I will award you a Neogaf gold. You can note this down if you want to. Legit, promise. If Neogaf, you or I exist by that time, that is.

Your CPU is heavily bottlenecking, even if just to reach 60 fps, have good frame pacing or good input lag... I know because I had to upgrade from a Ryzen 2200G to my current 5600x and that alone removed tons of input lag. I was then very GPU bottlenecked by a 1060 3GB, but playing Shadow of the Tomb Raider at around 40 fps felt way better; framerate wasn't much better but frame pacing and input lag were on another level... If you had a better CPU I'd say go for the GPU first, but in your case I'd rather upgrade the CPU first

Yes, very impressive

Doesn't it use FSR? The whole point of FSR is to run at lower resolutions and reconstruct. These DF-style analyses are irrelevant in a world with FSR/DLSS.

Didn't the 3600-3700 launch before the 30 series? You talk like they are the same gen but they aren't? The 5000 series CPUs and the 30 series GPUs launched the same year so that'd make them the same gen, no? You'd need to compare to the 5700 perhaps?

I know this because I built my 3600 + 2080s with 32gb ram in late 2019 (still going strong but looking to upgrade this year).

No, my post was satire. In my previous posts, I stated that Zen 3 was meant for Ampere. But they're adamant on thinking 3700x and 3080 is a popular match. I simply took a jab at them with that post. After all, they are the ones who think the 3700x would give leeway for future GPU upgrades (that's why my post is satirical, since the damn CPU could not even feed a 3080 EVEN at 4K to begin with in 2020; many ray traced games at 4K DLSS quality/balanced became heavily bottlenecked. They claim it was a popular match somehow, but Zen 2 was the cheapskate generation and most people simply did not invest in the Zen 2 hype, especially if they were chasing after a 2080ti/3080 and beyond. Sure, Ryzen 3600/3700x + 2060-2080/3060ti pairings were very popular, but few dared to combine those slow threaded CPUs with upper high end Ampere beasts. Most people went the safe route with Intel, waited for Zen 3, or simply upgraded to Zen 3. There are most likely thousands of disgruntled 3700x users who found huge solace and frametime quality in a cheap 5600x alternative for their super high end Ampere back then).

wait wait wait... 720p on ps5??

Is it true that this game was made by the same studio that worked on FFXV? Not even getting this on sale if that's the case.

Sadly yes.

The new 7700x is like twice as fast as the 3700x, which surprised me very much and questions my pc gaming alliance even more lol. Feels like I got this shitty cpu and platform just recently and it was actually July 2019!!!!!!

I can tell you, the cpu was good but that x570 was terrible at first and installing a new BIOS every fucking week was getting tiring fast.

1 minute cold boot coming from 20 seconds on a 2500k was not what I expected but they ironed it out after a year.

Is it time for a new platform, cpu and mobo?

Yes.

Will I upgrade? Fuck no

When the 136K starts to "not quite deliver" the 139K will also "not quite deliver".

But since you are doing other thread-heavy stuff with your CPU then it makes sense to get the most cores you can.

But of those thread-heavy things.....gaming is not one of them.

Considering that the 13900K can be up to 20% faster than the 13600K in gaming, I wouldn't be so smug with my comments.

And 12% faster on average in gaming with 10% higher 1% lows. Not worth the 70% price premium at all but the 13900K can be appreciably faster already. But I forgot that for you guys, those 10, 15, or 20% differences don't matter.

they matter if you're pushing for a 4090 instead of a 4080. i'd also match a 13900k and 4090. or 13700k with a 4080. and then, 13600k with a 4070ti and below.
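To put the "12% faster for a 70% price premium" figures in perspective, here is a quick sketch. It takes the two percentages from the post as given and normalises the 13600K to 1.0; `perf_per_dollar_ratio` is a hypothetical helper name, not part of any benchmark tool.

```python
def perf_per_dollar_ratio(perf_gain: float, price_premium: float) -> float:
    """Performance per dollar of the pricier chip relative to the cheaper one.

    Both arguments are fractions, e.g. 0.12 for 12% and 0.70 for 70%.
    """
    return (1.0 + perf_gain) / (1.0 + price_premium)


# Figures quoted in the thread: 12% faster on average, 70% more expensive.
average_case = perf_per_dollar_ratio(0.12, 0.70)
# Best case quoted in the thread: up to 20% faster.
best_case = perf_per_dollar_ratio(0.20, 0.70)

print(f"average case: {average_case:.2f} of the 13600K's performance per dollar")
print(f"best case:    {best_case:.2f} of the 13600K's performance per dollar")
```

On those quoted numbers the 13900K lands at roughly two thirds of the 13600K's performance per dollar on average, which is consistent with both positions in the thread: it is measurably faster, and it is poor value if gaming is all you do.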

i'd ditch the 3700x, really. you don't even need a mobo or platform change. a cheap 5600 wrecks it sadly. NVIDIA GPUs have serious driver overhead, which reshuffles threads constantly, causing internal bottlenecks on anything older than zen 3. if you want my honest opinion, none of the zen/zen+/zen 2 cpus were meant for GPUs that rely on CPU-bound driver scheduling. but here we are. of course naturally, this driver thing surfaced much later on, funny enough, to coincide with zen 3's release.

I mean look at this man. This is on a freaking 3070ti. Imagine the 3080 and horrors and ray tracing.

I know you like to play with super GPU bound settings at 4K and manage to feed your GPU, but you're an outlier, which I respect (I'm also an outlier, using a decrepit 2700 with a 3070, but here we are. I don't suggest my imbalanced pairing to others, because not everyone has your or my priorities), but I'm sure you would still benefit from a 5600x or 5800x. Most of the stutters or inconsistencies you experience OTHER than shader compilation stutters are most likely caused by a mismatched pair of CPU and GPU. The zen 2 CPUs are bound to have random stutters and hitches due to internal CCX latency stuff.

look at its frametimes, and this is without ray tracing (ray tracing is more catastrophic on zen 2 cpus)

or if you want your 8 core loyalty, go for a 5800x 3D and seal the deal for the generation.

i mean u already invested a lot on an expensive x570, might as well make use of it lol

this test is broken. i have no idea how he got those results. especially that shadow of the tomb raider CPU bound town benchmark sequence is super SUS. that place is super single thread bound which gives 5600x a huge advantage

believe whomever you want man. i don't trust that channel. you may not trust Odin's channel as well.

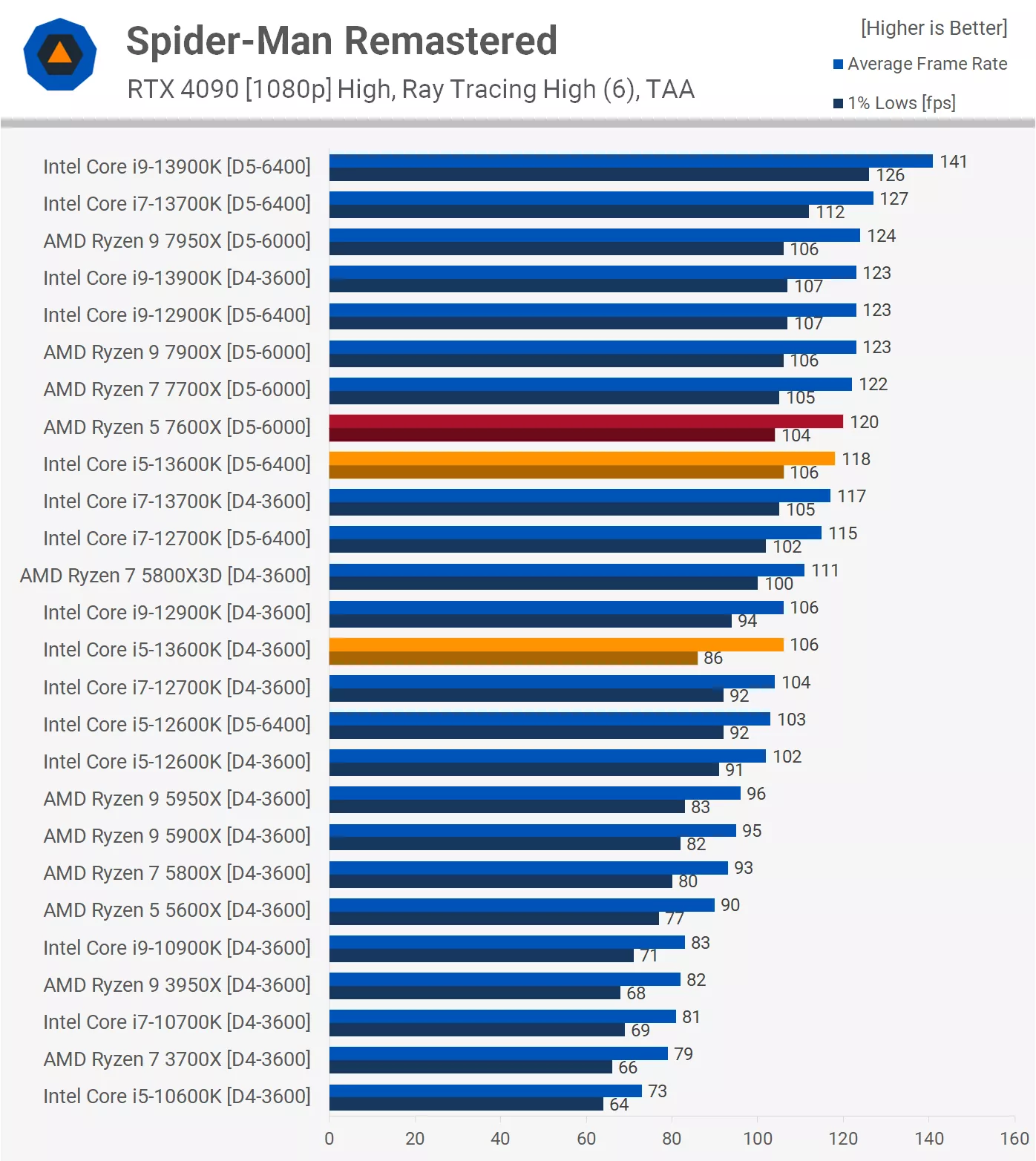

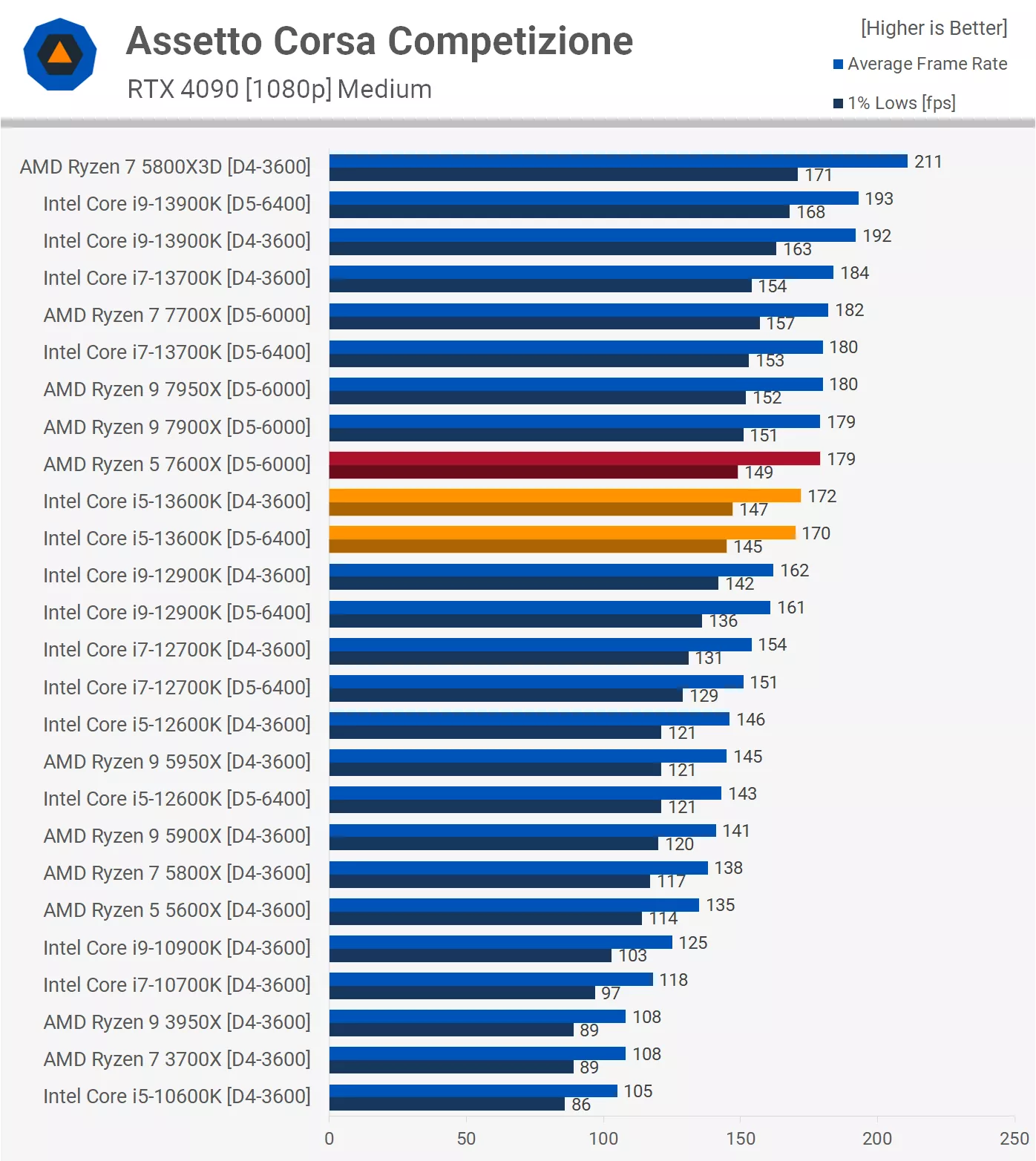

Meanwhile your own nitpicked graph shows the 6 core 7600X, the 8 core 7700X, the 12 core 7900X and the 16 core 7950X all imperceptibly within range of each other?

PC fanboys getting butthurt in this thread

at least they are not going to play a PS5 game on a resolution suitable for 720p TVs....


At least it will run at 60fps and not be 480p 20fps on a pc of equivalent value

are you telling me that PC is more expensive than PS5? No shit!! how did you come up with that conclusion by yourself?

1. The game drops as low as 720p on PS5

2. Do you know how the game runs on PC? Where are the benchmarks? Instead of garbage posts like these, think.

Think mark, think!

450euro ?

they matter if you're pushing for a 4090 instead of a 4080. i'd also match a 13900k and 4090. or 13700k with a 4080. and then, 13600k with a 4070ti and below.

but none of them will matter once you want to upgrade to a GPU that is 2x faster; namely 5080-5090

look at the table yourself. imagine you bought a super high end 10900k just 3 years earlier. how would that serve you with a 4090 upgrade? based on that graph, in CPU bound situations, you're losing nearly 53% performance compared to that. (and don't play the "it is 1080p, it does not matter!!" card. you used those tables to make the 13900k worthwhile to begin with, so that argument is out of the window)

say you went for a 3070 + 10900k build in 2020 (not 10600k, because per your beliefs, the 10900k should serve better for future upgrades). but now the 10900k also bottlenecks the 4070ti heavily (in this case, I'm referring to bottlenecked situations where the 4070ti is capable: Cyberpunk with ray tracing for example). what gives? neither 10600k nor 10900k matter for the new 4070ti, unless you play with niche graphical settings that put all the load on the GPU (which is not your run-of-the-mill PC user).

if you went for a 3090 and 10900k to start with, and use that rig for 5-7 years, it's all fine.

but you went for a 10900k and 3070 rig in the hopes of upgrading to a 4070ti later on? the 4070ti is already TOO damn fast for the damn 10900k. so in the end, the 10900k only mattered over the 10600k when you were choosing a 3090 over a 3080 or a 3080 over a 3070. but it didn't, it doesn't and it won't matter once you go up tiers, from 3090 to 4090, for example.

i'm not saying there is not a market for 13700k and 13900k. they matter when you claw out those final 15-25% of performance some GPUs can additionally provide, which is where the 4090 comes into play. but once you push an enormous 2-2.5x GPU performance increase by virtue of sheer advancement or aggressive settings/DLSS2, stuff tends to go haywire on unbalanced builds.

even the 5800x 3d bottlenecks the 4070ti in CPU intensive situations where the 4070ti is more than capable on the GPU side. not even zen 3 is meant for ADA LOVELACE.

The 5080-90 won't be twice as fast as their predecessors.

Meanwhile your own nitpicked graph shows the 6 core 7600X, the 8 core 7700X, the 12 core 7900X and the 16 core 7950X all imperceptibly within range of each other?

Something must be amiss, don't you think?

Maybe binning?

CCX latency?

And you still don't get it. The 13900K is still appreciably faster than the 13600K when you implied there was no difference. 20% in some scenarios is a substantial difference. Furthermore, you're still hung up on that 6-core bit, which isn't the point. 6-core isn't the disease, it's the symptom. The problem isn't the 6 cores, it's their placement in the market and what chiefly Intel does to justify the higher SKUs. Take the 9600K, which is an i5 part that always had hyperthreading but they decided to disable it to upsell the 9700K and 9900K. While 6-core 12 threads is fine, 6-core 6 threads isn't so good. Same for the 12400: a 6-core part but a much worse bin, resulting in it being a budget performer. Taking a look at the ACTUAL 6-core CPUs over the past 6 years shows us that they have aged worse than their bigger cousins, and that's not necessarily due to them being 6-core, but they have aged worse nonetheless.

4090....FHD...

I... like... I... wat.....

Is the difference as big even at 4k?

Way smaller but I honestly don't game at 4K. This is purely a CPU benchmark.