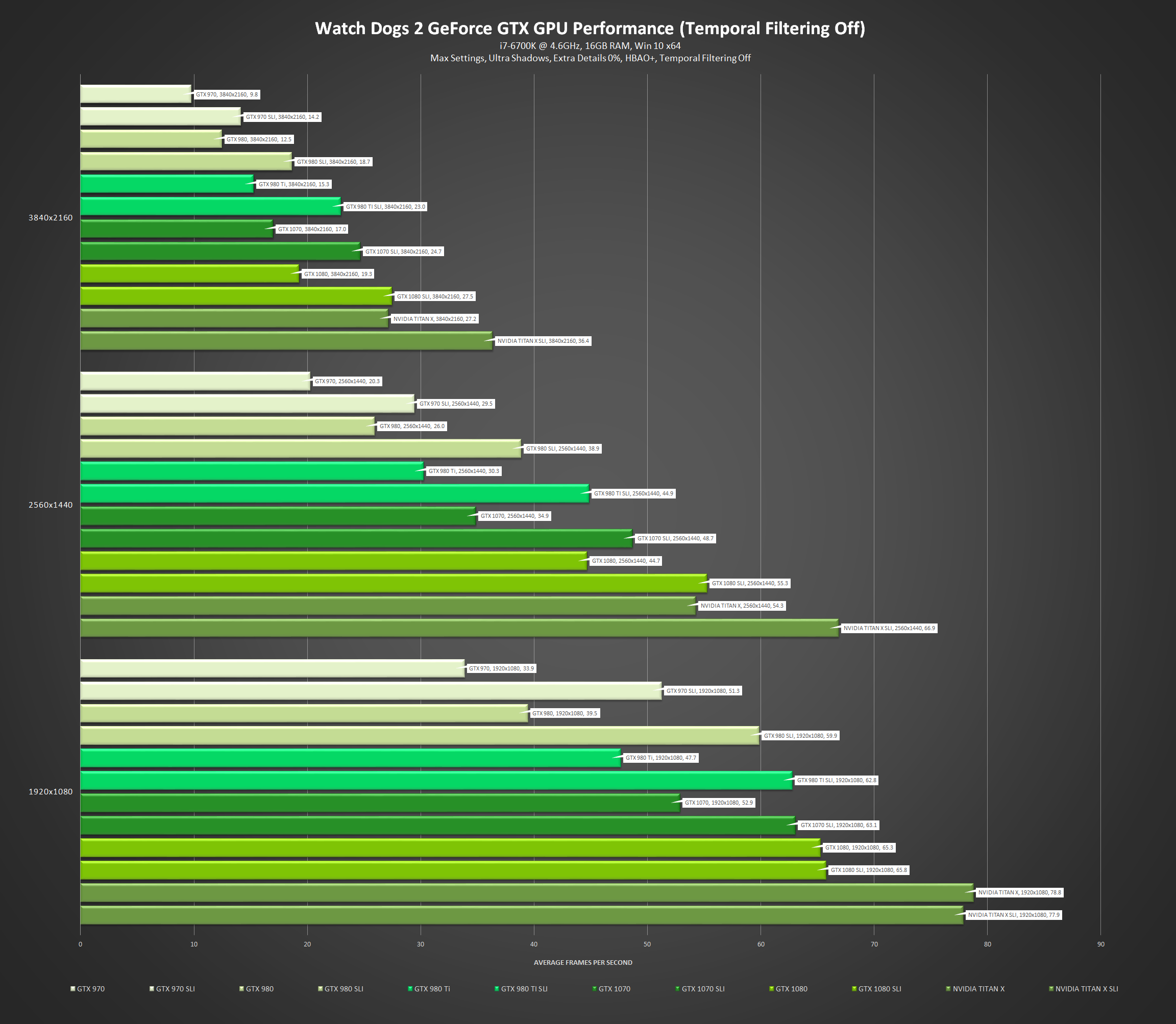

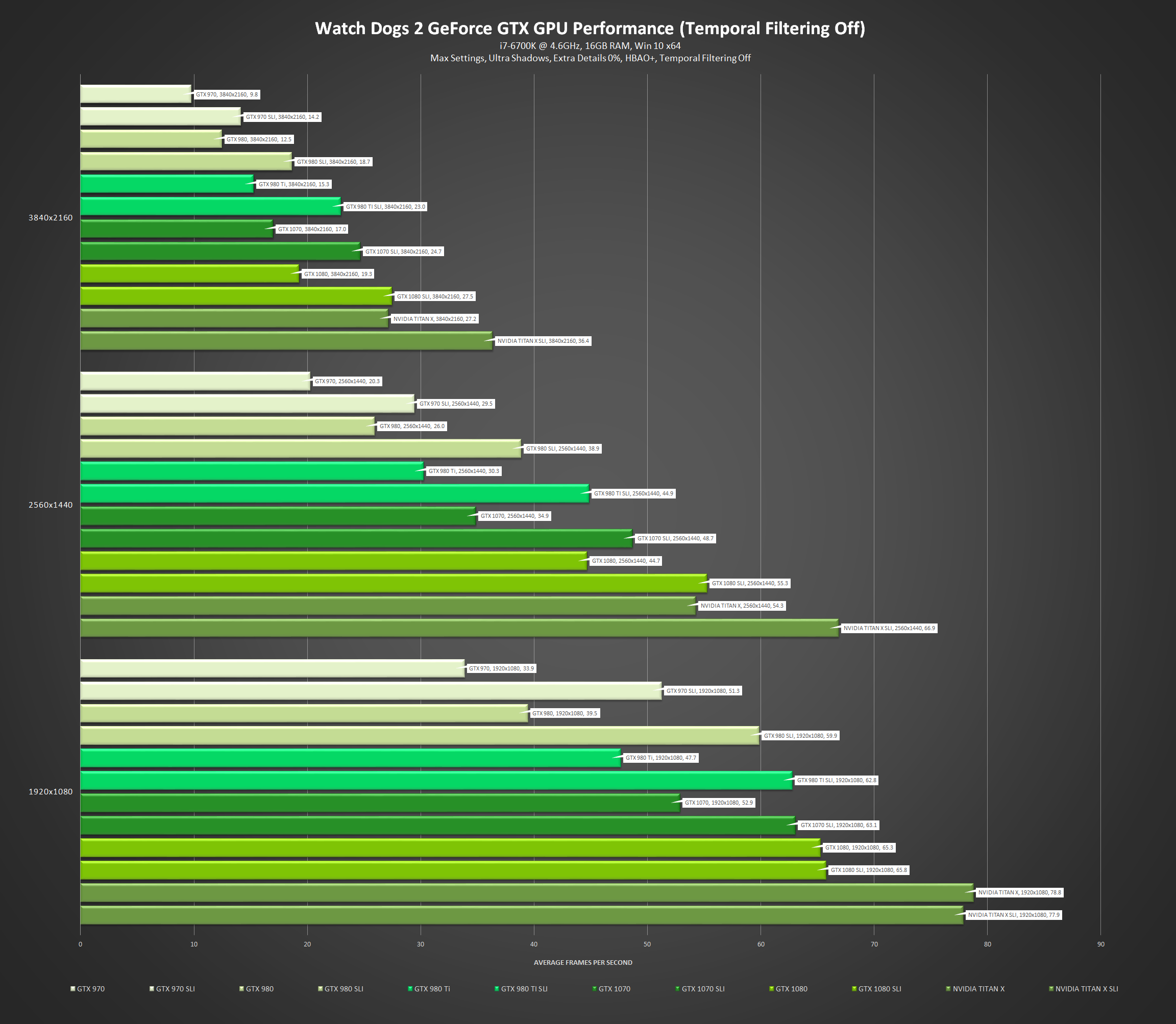

This is quite odd. According to NVIDIA, GTX 1080 SLI only averages 27.5 FPS at 4K. Could Very High vs. Ultra really make that much difference?


Question: does using Temporal Filtering at 1080p make the game too blurry?

Will I lose too much image quality by playing on the High preset instead of, for example, Very High? I have a slightly overclocked GTX 970 and would like to get close to 60 FPS at 1080p if possible.

I don't think this game is very VRAM-dependent: I'm using only half of my 8 GB and still getting drops to 50 FPS with certain settings.

Maybe I'm misunderstanding how these things work, though.

We will test at Ultra quality with HMSSAO and SMAA; we'll leave MSAA off for now, as this mode is heavy on any GPU. HMSSAO has been enabled for testing because this option is available on both AMD and Nvidia graphics cards. We (and you) should leave temporal filtering disabled, as it is pretty bad (half the image quality).

Checkerboarding on the PS4 Pro cuts the horizontal resolution in half, and the samples alternate each frame. I don't think MSAA is used? The Ubisoft paper says their temporal filtering results in half the samples of native resolution, which makes sense with MSAA 2x.

Try this (my 1070 settings):

1440p + 1.2 pixel density

- Geometry: Very High
- Extra Detail: 0%
- Terrain: Ultra
- Vegetation: Ultra
- Textures: Ultra
- AF: Ultra
- Shadows: Very High
- Headlight: 2 cars
- Water: High
- Reflections: High
- Screenspace Reflections: Off
- Fog: On (not encountered yet)
- DoF: On
- Motion Blur: On
- Bloom: On
- AO: HBAO+
- AA: SMAA

You could even go a bit higher, I guess.
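For reference, "1440p + 1.2 pixel density" means the game renders internally at more than 1440p. A quick sketch of the arithmetic, assuming the pixel density slider scales each axis (so 1.2 means 1.2 × 1.2 = 1.44× the pixels); if it scaled the total pixel count instead, the numbers would differ:

```python
def render_resolution(width, height, pixel_density):
    """Internal render size, ASSUMING pixel density scales each axis."""
    return round(width * pixel_density), round(height * pixel_density)


def pixel_ratio(a, b):
    """How many times more pixels resolution a has than resolution b."""
    return (a[0] * a[1]) / (b[0] * b[1])


internal = render_resolution(2560, 1440, 1.2)
print(internal)                             # (3072, 1728)
print(pixel_ratio(internal, (2560, 1440)))  # 1.44
print(pixel_ratio(internal, (3840, 2160)))  # 0.64
```

Under that assumption, this setup shades about 44% more pixels than plain 1440p and about two thirds of native 4K.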

The game runs beautifully for me: 1080p at 60 FPS, max settings with the texture pack. i7-4790K, 32 GB of DDR3 RAM, GTX 980 Ti.

My 970 can't hold a consistent 60 FPS even with everything on Low. In the open world, Low floats around 55 FPS and High around 45 FPS.

Hmm, I wonder if you're CPU-bound? What CPU do you have, and what's the speed of your RAM?

Here's the GTX 970 with an i7-6700K:

Watch Dogs 2 GTX 970 OC | 1080p Ultra - Very High & High SMAA | FRAME-RATE TEST

Man, VRAM requirements in PC games are climbing fast these days. 4GB is already low end, ffs.

I was thinking about getting a 6GB 1060, and now it sounds like a very bad idea for investment.

Do we know how to get Afterburner working with this yet?

Having only the FPS counter is killing me, lol.

All GPUs are "a very bad idea for investment".

So I used Origin to get the game, but it's going through Uplay. When I try to play, I get a message from Uplay saying this product can't be activated at this time.

Anyone having this same issue?


The 1060 is essentially a 1080p/60 card; 6 GB is more than adequate.

When you launch through Origin, you get a CD key that you enter in Uplay. After that, you can launch the game, and Origin will clean up the install files, freeing up HDD space.

After that you should be able to just start the game through Uplay.

No, it looks like we'll need an RTSS update to make the Afterburner (AB) overlay work in this one.

Yeah, Kaby Lake should hit retail in January.

Yeah, I had Extra Details at 5%, which caused frequent hitches; turning it off helped.

Averaging around 40-50 FPS, which is fine for me with G-Sync. I'll play around some more later when I get home.

I really should get around to buying a 6700K, but shouldn't the next gen be coming soonish? Hmmm.

But according to the benchmark above, the game uses more than 6 GB of VRAM even at 1080p.

I mean, at least back when 2GB cards were hot, they stayed capable of doing their job for a few years.

4GB cards were released barely a couple of years ago, and they're already obsolete. It's even worse for 6GB cards.


Firstly, this tech moves quickly and a couple of years is an age in GPU terms. You've been able to buy GPUs with 4GB since 2012 or so. Wanting some "standard" that means you don't have to spend money again is a hope beyond hope. Spend what you can afford and don't fucking worry about it. The second-hand GPU market is very healthy.

Secondly, 4GB GPUs (I guess you're talking about Maxwell 2 mainly) are far from obsolete. This is hyperbole. Over time, if you stick with the same hardware, you will have to turn down graphical features on new releases. This is progress.

Thirdly, you're looking at a benchmark that only shows average FPS for a brand-new game, set to maximum settings, with several cutting-edge features, and then concluding that 4GB is over. The TechPowerUp tests were run with the high-resolution texture pack, a feature that is recommended for GPUs with 6GB or more. Maybe just settle for High textures if you're using a GPU that's more than two years old. I know, it's the end of the world as we know it.

People's understanding of VRAM usage in game software is woefully basic. Well-coded software will use whatever memory is available. Unused VRAM serves no function at all, but that doesn't stop people freaking out when software uses all available video memory.

The amount of VRAM a game uses while running != the minimum amount of VRAM that game requires to run properly.
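To illustrate that point, here is a toy sketch, not how Watch Dogs 2 or any real engine manages memory: a hypothetical LRU texture cache (`TextureCache`, `simulate` and all the sizes are made up) that keeps textures resident until its budget is full. The "world" holds 4 GB of textures, but each frame only needs 1 GB of them.

```python
from collections import OrderedDict


class TextureCache:
    """Toy streaming cache (hypothetical): textures stay resident until the
    VRAM budget is full, then the least recently used ones are evicted."""

    def __init__(self, budget_mb):
        self.budget_mb = budget_mb
        self.resident = OrderedDict()  # texture id -> size in MB, LRU first
        self.used_mb = 0
        self.uploads = 0  # how many times a texture had to be streamed in

    def request(self, tex_id, size_mb):
        if tex_id in self.resident:
            self.resident.move_to_end(tex_id)  # mark as most recently used
            return
        # Evict least recently used textures until the new one fits.
        while self.used_mb + size_mb > self.budget_mb and self.resident:
            _, freed = self.resident.popitem(last=False)
            self.used_mb -= freed
        self.resident[tex_id] = size_mb
        self.used_mb += size_mb
        self.uploads += 1


def simulate(budget_mb, frames=200, n_textures=40, size_mb=100, window=10):
    """Player moves through a world of 40 x 100 MB textures (4 GB total);
    each frame needs only 10 of them (1 GB)."""
    cache = TextureCache(budget_mb)
    for f in range(frames):
        visible = [(f + i) % n_textures for i in range(window)]
        for t in visible:
            cache.request(t, size_mb)
        # Every texture this frame needs is resident, so the frame renders.
        assert all(t in cache.resident for t in visible)
    return cache


for budget in (8000, 3000, 1000):
    c = simulate(budget)
    print(f"budget {budget} MB: using {c.used_mb} MB, {c.uploads} uploads")
```

With the 8000 MB budget it ends up "using" all 4000 MB of textures; with 3000 MB or 1000 MB it fills whatever it is given, yet every frame still has what it needs. Real games are far more complicated: a smaller budget can force more streaming, which can show up as hitches.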

Calling BS on that TechPowerUp benchmark.

I have a 1080, and you only get close to 60 FPS indoors. The open world is closer to the mid-40s.

16 GB RAM, i5-4690K at stock clocks

Watch Dogs 2: PC graphics performance benchmark review

What does "checkerboarding" have to do with cutting the horizontal res in half? Was it confirmed to work this way? I think checkerboarding is exactly what it sounds like: a checkerboard pattern of quads alternating between frames. That would give a nice half-4K shading rate for the Pro's 2x PS4 FLOPS figure.
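A minimal sketch of that reading of checkerboarding, a checkerboard of quads whose phase flips every frame. The 2x2 quad size and the parity rule are assumptions chosen for illustration, not Sony's or Ubisoft's actual implementation; real checkerboard renderers also reconstruct the unshaded half from the previous frame's data, which is not modelled here.

```python
QUAD = 2  # assumed: shading decisions are made per 2x2 pixel quad


def is_shaded(qx, qy, frame):
    """Checkerboard: a quad is shaded when (qx + qy + frame) is even, so the
    pattern's phase flips every frame."""
    return (qx + qy + frame) % 2 == 0


def shaded_pixels(width, height, frame):
    """Number of pixels actually shaded on a given frame."""
    qw, qh = width // QUAD, height // QUAD
    quads = sum(1 for qy in range(qh) for qx in range(qw) if is_shaded(qx, qy, frame))
    return quads * QUAD * QUAD


def covered_over_two_frames(width, height):
    """True if every quad is shaded on either an even or an odd frame."""
    qw, qh = width // QUAD, height // QUAD
    return all(is_shaded(qx, qy, 0) or is_shaded(qx, qy, 1)
               for qy in range(qh) for qx in range(qw))


native = 3840 * 2160
print(shaded_pixels(3840, 2160, 0) / native)  # 0.5: half the 4K shading rate
print(covered_over_two_frames(3840, 2160))    # True
```

Each frame shades exactly half of the 4K pixels, and two consecutive frames together touch every quad.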


Way to give literally no indication of what settings you're running at, champ.

If you're running heavy non-PP AA, then maybe that's why.

Clearly implying I was using exactly the same settings/resolution as TechPowerUp, champ. Or maybe you could have viewed my post a single page back, where I did list my specs/settings. But who bothers to read anything but the first and last pages of a thread, right?

My guess is that their benchmark was the opening of the game only. Things drop considerably once you're in the open world.