


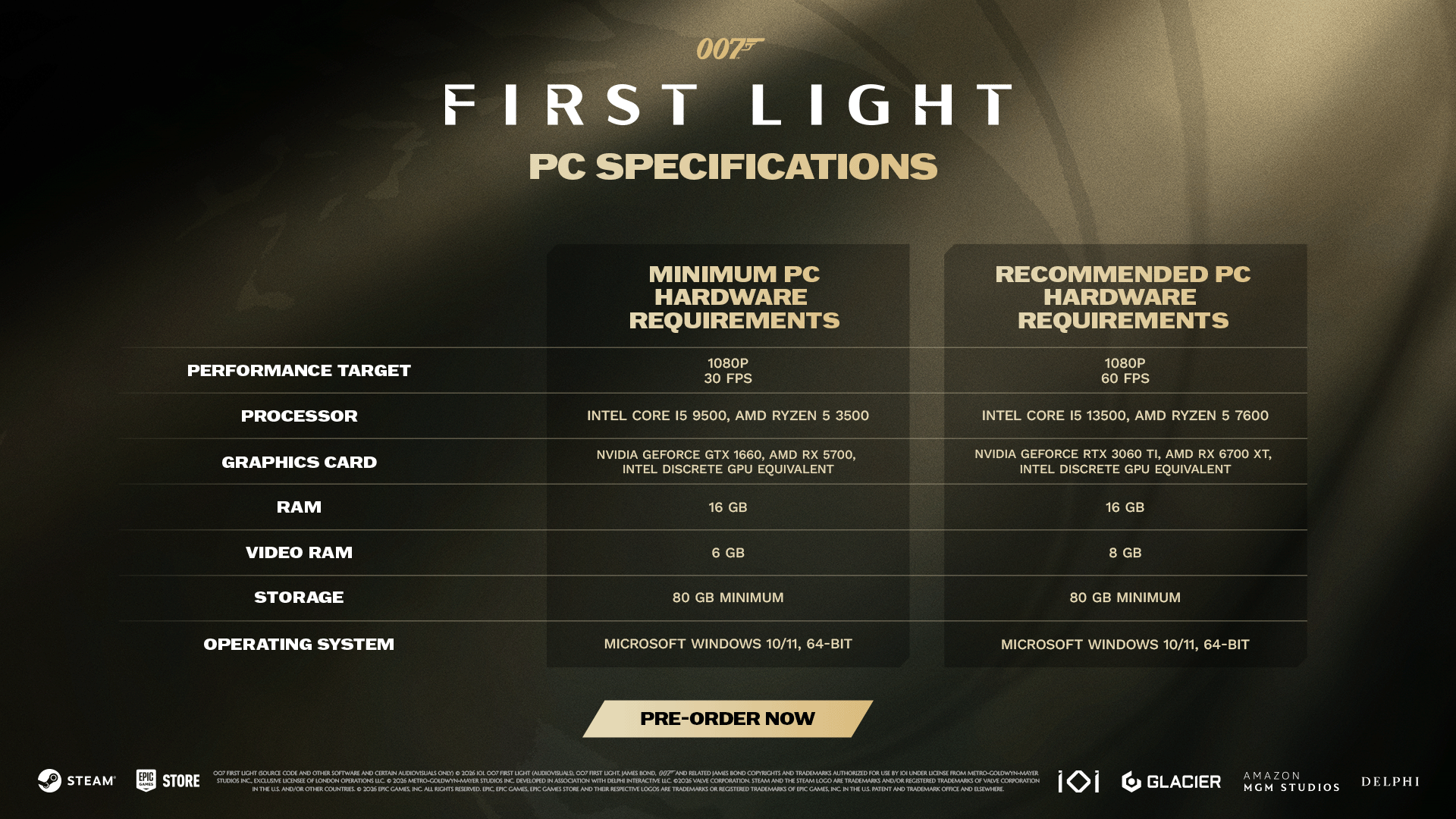

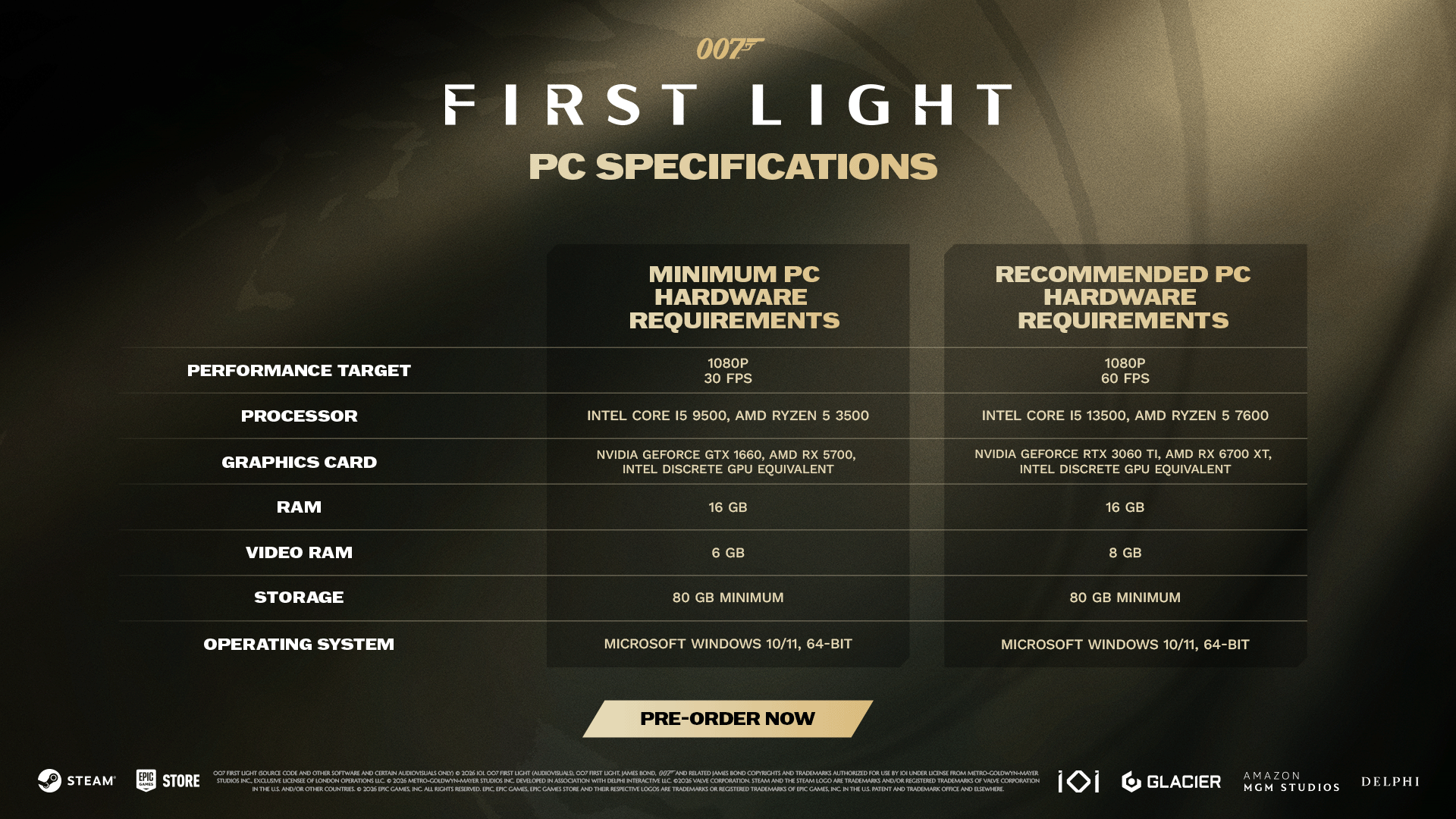

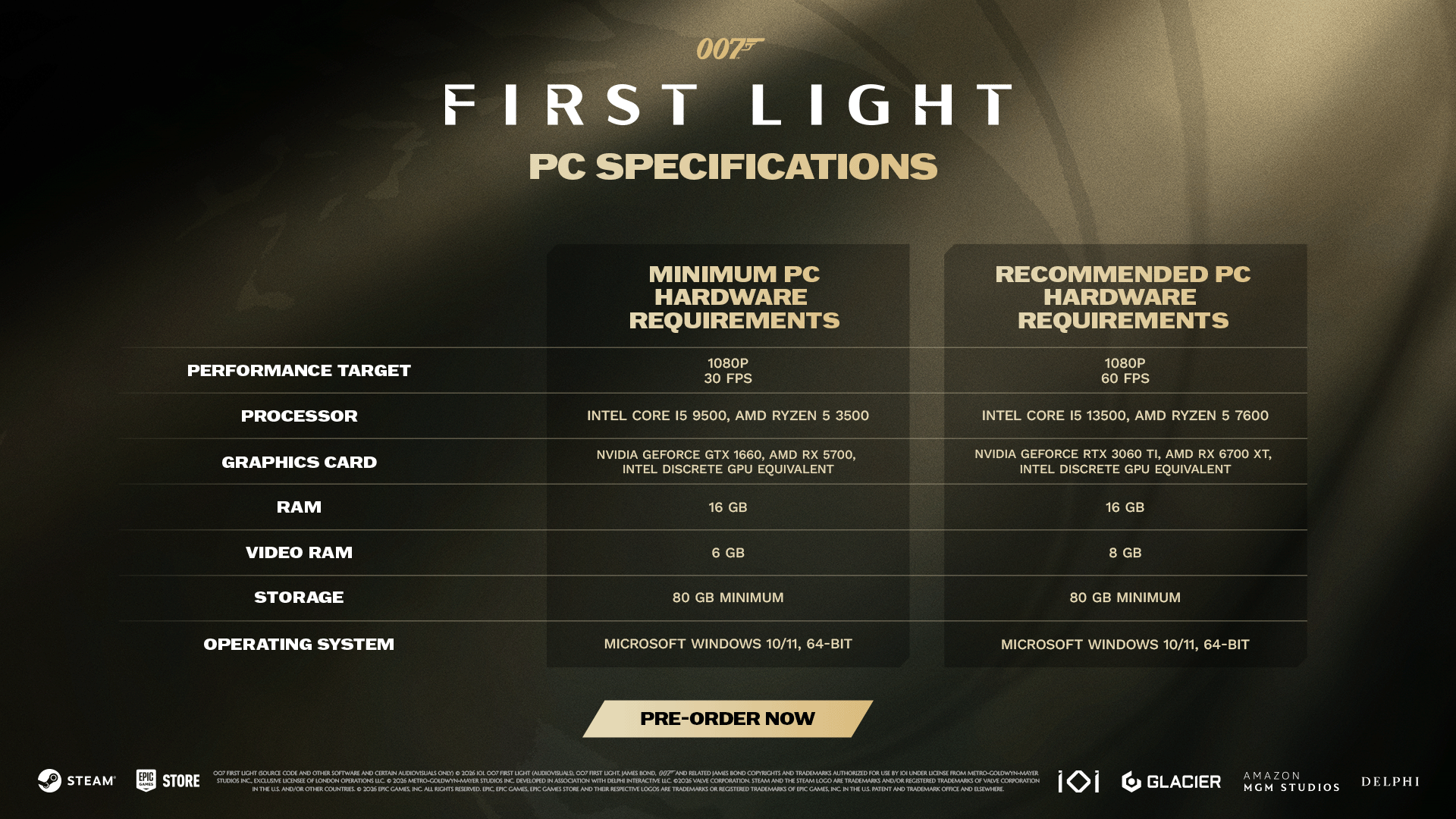

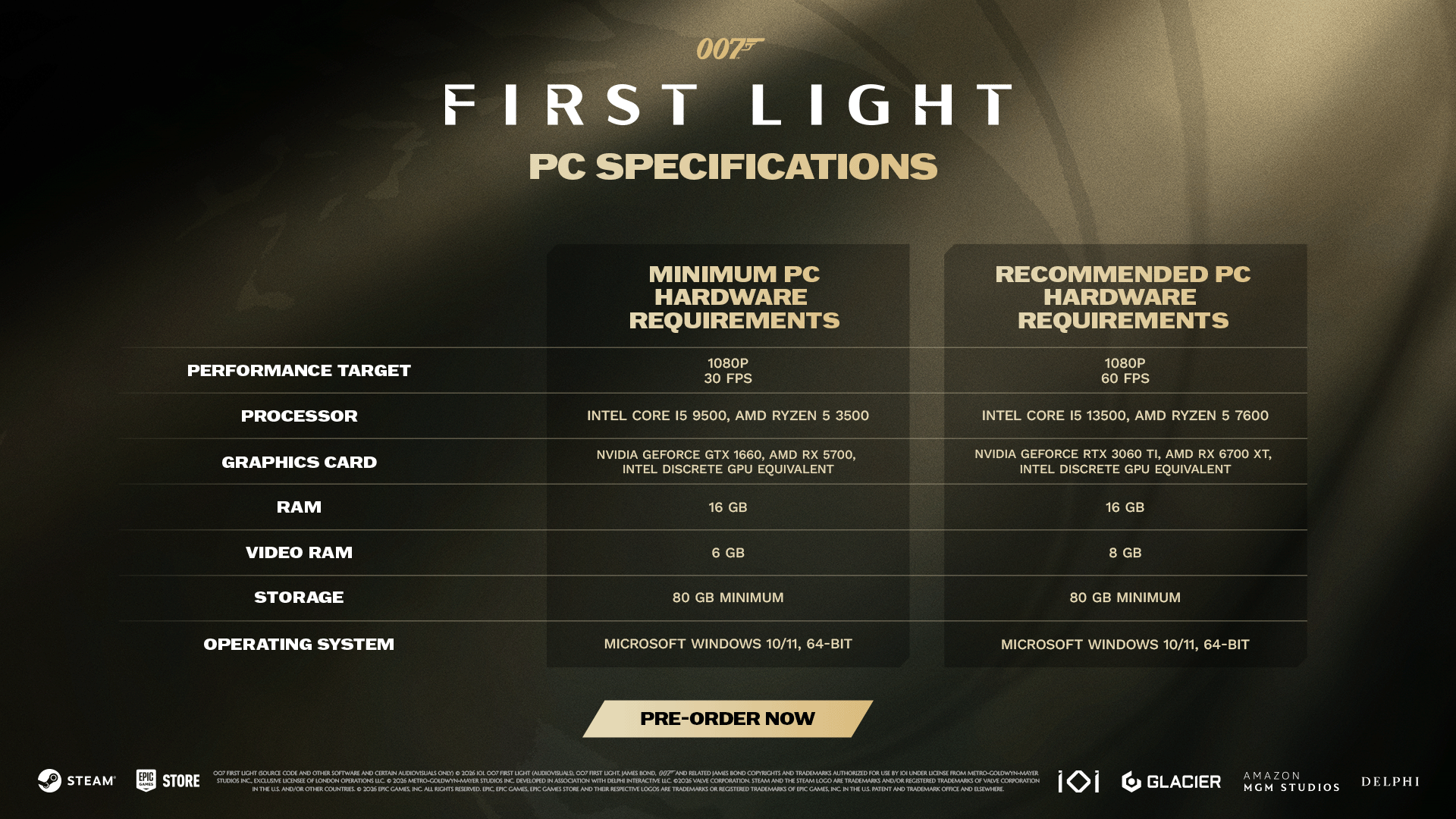

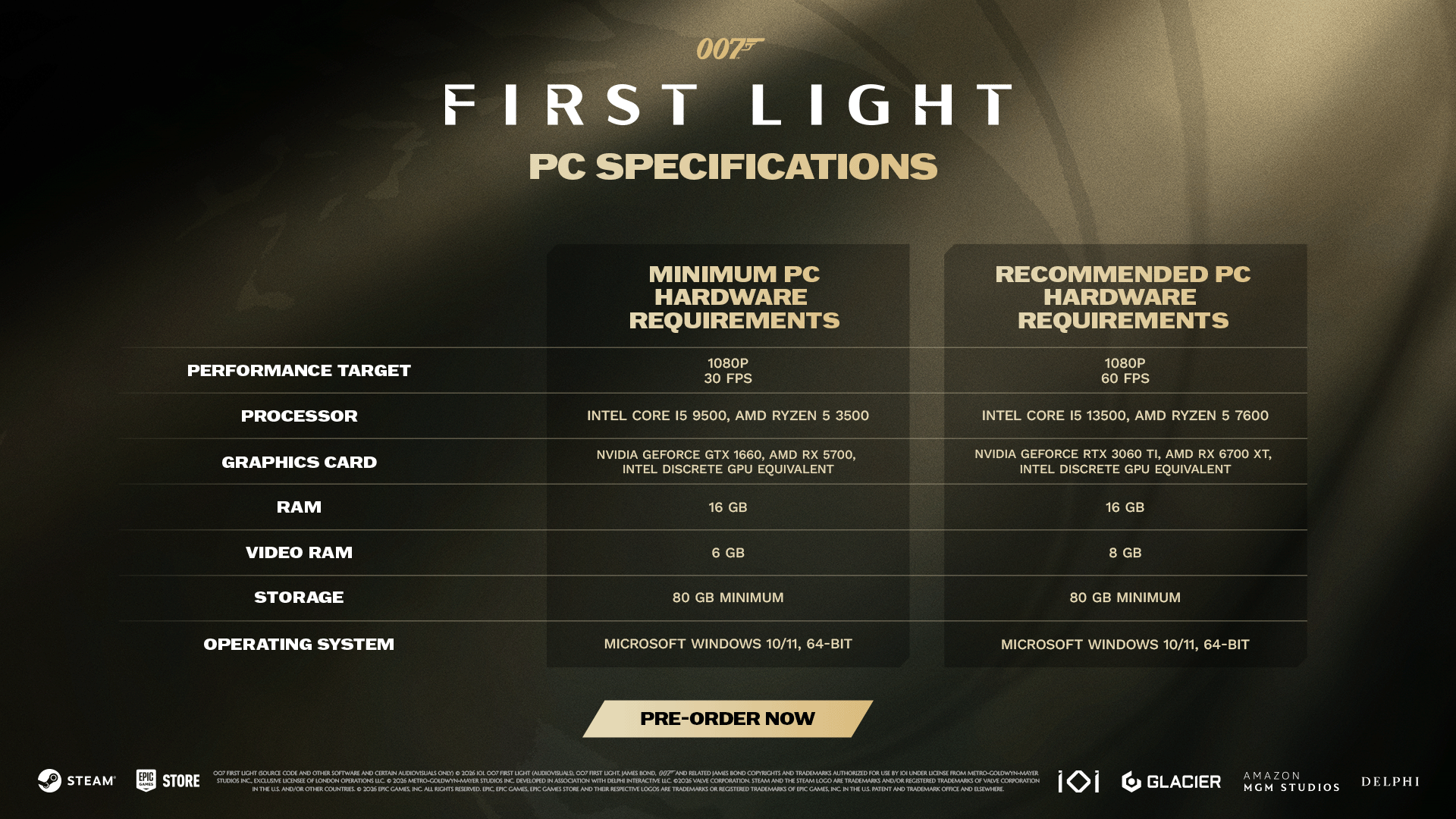

007 First Light recommended specs revealed and... oof.

- Thread starter Seyken


Autistic_Pancake

Member

0fps

0hype

$7 on sale


poodaddy

Gold Member

Likely an exaggeration.

This, and it's actually somewhat obvious. People take these hardware recommendations far too seriously, and they're usually not really accurate or useful.

Alexios

Cores, shaders and BIOS oh my!

God, I remember being able to play Diablo 3 near launch on a Dell UltraSharp 10-bit screen in true 1440p and it fucking blasting my eyes in high res. And now we have fake res and fake frames.

I mean, that was made deliberately to run great on the lowest-end PCs, so of course you could max it easily, and of course it looked like a low-end, low-fidelity game even in high res.

Basically they adopted the successful WoW approach (even though it wasn't an MMO and came out 7-8 years later). The equivalent of random shooters looking like Fortnite now.


SlimySnake

Flashless at the Golden Globes

If you believe that a heavily scripted demo is 'gameplay'... :-D

lmao. You clearly didn't watch the demo then, because the last ten minutes showcase another level played three different ways to show just how different each playthrough can be.

I find it hilarious that the devs put out a 35-minute gameplay demo with multiple levels, showcasing several different playstyles, not to mention all white leads, and people didn't even bother watching. At this point I am convinced that gaming has been hijacked by a bunch of sad, angry little men who don't care about visuals, game design, physics, or storytelling and just want to bitch and moan like a woman PMSing all day.


Orbital2060

Member

1920x1080 is still the most common display resolution in the Steam surveys. By not making the game too heavy on the hardware, more people should be able to get decent performance, which means more sales.

Boy bawang

Member

Damn. If the Switch 2 and Series S versions run at a fixed 30fps it would be a big, big win with such requirements...

HerjansEagleFeeder

Member

Probably won't sell well among Deck and Machine users.

Team Andromeda

Member

Women with big tits and lovely bums can't run this game too

kevboard

Member

This is true, but AMD had affordable GPUs 2x better than the consoles out in 2020. But people wanted Nvidia and refused to buy them, instead going for 8GB GPUs that we all said were garbage even back then. Now it's coming back to bite them.

absolutely not. Nvidia cards are aging like fine wine, while AMD cards are rotting away.

2020 Nvidia cards still outperform 2025 AMD cards in some RT heavy games, while 2020 AMD cards are completely unable to keep up whatsoever.

I honestly feel bad for people who still run an RDNA2 GPU in their PC. I'd rather have a high end RTX20 series card from 2018 than an RDNA2 card.

Kagoshima_Luke

Gold Member

This is the amount of horsepower needed to render the hairs on the Bond girls' adam's apples.

Imagine not having 32GB in 2026. I bought my PC like 2 years ago with 32GB.

Shit, my computer is 8 years old and has 128GB.

SlimySnake

Flashless at the Golden Globes

absolutely not. Nvidia cards are aging like fine wine, while AMD cards are rotting away.

2020 Nvidia cards still outperform 2025 AMD cards in some RT heavy games, while 2020 AMD cards are completely unable to keep up whatsoever.

I honestly feel bad for people who still run an RDNA2 GPU in their PC. I'd rather have a high end RTX20 series card from 2018 than an RDNA2 card.

Depends on the games. Only some RT games favor Nvidia GPUs. UE5 hardware RT, Anvil hardware RT, Dunia RT, RE Engine RT and Snowdrop hardware RT perform roughly the same on AMD and Nvidia GPUs. Maybe a 10% improvement in some engines? Definitely not the 80-100% we saw in early ray tracing titles. Games like Cyberpunk, Control and Alan Wake were sponsored and had a lot of their RT tech implemented with the help of Nvidia engineers who clearly didn't bother optimizing for AMD GPUs, but other engines show that AMD cards are just fine at doing hardware RT, especially since Ubisoft, Epic and Capcom designed their RT implementations around console tech.

Path tracing obviously is impossible on these cards, but let's face it, no one is doing path tracing on anything below a 3090 when it comes to 2020-era GPUs. I was straight up locked out of PT on my 10GB 3080 and could only do 30 or so fps in Doom. Hell, I just bought a 5080, and I have to drop down to 1440p and use DLSS just to get close to 60fps in Doom and Indy, and even then, in some areas that's not enough.

Meanwhile, the VRAM issue impacted A LOT more games early on, and many users on this very board complained about having to reduce textures and details even in cross-gen games on the 8GB 3070s. Forget the 3060 or 4060. Even I ran into a VRAM issue in RE4 and Gotham Knights with RT on. RE4 would literally crash while GK would crawl to 2-5 fps, leading me to turn off ray tracing altogether.

Represent.

Represent(ative) of bad opinions

I said this in the other thread, but that writer should've never opened his mouth and said what he said. You could do everything right in today's society, but if you say one mildly concerning thing, the wolves come for you. And sadly, the sheep follow.

This game has a straight white male lead, a straight white attractive female Bond girl, shows Bond as a playboy ogling bikini-clad women on beaches, hanging out on yachts with scantily clad women, ray-traced graphics, incredible physics and destruction finally using the next-gen CPUs, great new melee combat animations, and all of that is ignored because the writer hinted at making the game for a modern audience.

Devs need to understand that the very gamers they are trying to sell this game to are extremely sensitive and insecure, extremely jaded, ready to pounce on every word, ready to dismiss every game if it does not appeal to their own sensibilities. We have to treat them with kid gloves and give them absolutely fuck all to complain about, because they do make up the vast majority of gamers. This game just might end up flopping because of that interview, and all of the incredible work done by engineers, artists and level designers will have gone to waste. Everything even remotely negative about this game will now be viewed through that lens.

Gamers are by far the most sensitive and pathetic group of fans on earth out of every entertainment medium, I would say.

Let a game have the slightest little thing that isn't meticulously tailored to them and their exact tastes and it's utter bitching and moaning, review bombing, death threats, dev hate, fake outrage, crybaby nonsense for the game's entire lifespan.

It's no wonder no one takes games seriously other than gamers. A 50-year-old hobby and still viewed as a kids' thing by most.

Devs trying to make graphics impress by turning up chromatic aberration

Not impressed

Show me one game in the same genre that looks better.

Esppiral

Member

The game is targeting PS3-level resolutions on PC lol

You can count on the fingers of one hand the games that ran at 1080p on PS3...

kevboard

Member

Depends on the games. Only some RT games favor Nvidia GPUs. UE5 hardware RT, Anvil hardware RT, Dunia RT, RE Engine RT and Snowdrop hardware RT perform roughly the same on AMD and Nvidia GPUs. Maybe a 10% improvement in some engines? Definitely not the 80-100% we saw in early ray tracing titles. Games like Cyberpunk, Control and Alan Wake were sponsored and had a lot of their RT tech implemented with the help of Nvidia engineers who clearly didn't bother optimizing for AMD GPUs, but other engines show that AMD cards are just fine at doing hardware RT, especially since Ubisoft, Epic and Capcom designed their RT implementations around console tech.

Path tracing obviously is impossible on these cards, but let's face it, no one is doing path tracing on anything below a 3090 when it comes to 2020-era GPUs. I was straight up locked out of PT on my 10GB 3080 and could only do 30 or so fps in Doom. Hell, I just bought a 5080, and I have to drop down to 1440p and use DLSS just to get close to 60fps in Doom and Indy, and even then, in some areas that's not enough.

Meanwhile, the VRAM issue impacted A LOT more games early on, and many users on this very board complained about having to reduce textures and details even in cross-gen games on the 8GB 3070s. Forget the 3060 or 4060. Even I ran into a VRAM issue in RE4 and Gotham Knights with RT on. RE4 would literally crash while GK would crawl to 2-5 fps, leading me to turn off ray tracing altogether.

Your inability to set your settings correctly is not an issue with the card.

RE4 runs absolutely fine with 8GB and RT. You just have to set the texture pool setting correctly.

Lowering it will only marginally affect the actual quality of textures and may result in some texture pop-in, but that is usually very hard to notice.

As long as you use any variant of the "High" setting, you probably won't notice any real difference unless you look at it under a magnifying glass.

So on an 8GB card, go down to "High 0.5GB"... it will maybe use 4GB of memory in total at that point, giving you lots of headroom to turn on RT.


SlimySnake

Flashless at the Golden Globes

Your inability to set your settings correctly is not an issue with the card.

RE4 runs absolutely fine with 8GB and RT. You just have to set the texture pool setting correctly.

Lowering it will only marginally affect the actual quality of textures and may result in some texture pop-in, but that is usually very hard to notice.

As long as you use any variant of the "High" setting, you probably won't notice any real difference unless you look at it under a magnifying glass.

So on an 8GB card, go down to "High 0.5GB"... it will maybe use 4GB of memory in total at that point, giving you lots of headroom to turn on RT.

Tried that. I thought the difference in textures was too high when dropping the settings.

kevboard

Member

Tried that. I thought the difference in textures was too high when dropping the settings.

As long as you are on High, the game will load the same quality of textures on any of the High settings. It will simply not pre-cache them, and that can result in pop-in or faraway textures looking slightly worse.

There's no way it looked bad enough to not consider it.

And as to "being locked out of path tracing"... dude... you're locked out of path tracing on all AMD cards if you want playable framerates.

Sure, it sucks that some GPUs don't have enough memory to enable path tracing in some games... but that's every AMD card! Even the 9000 series cards get steamrolled by mid-range Nvidia cards from 2020 when path tracing is enabled.


Bernardougf

Member

End of the day, I can't take this avatar seriously as 007. I don't want to control that character for a game, pretending he's supposed to be James Bond. Suspension of disbelief will be nonexistent – they've made this game for the Tom Holland generation but it holds no appeal to me.

And the Tom Holland gen couldn't give two shits about 007... the concept of target audience/demographic is truly lost.

Should have just called it Kingsman instead, I'd have loved that tbh.

SlimySnake

Flashless at the Golden Globes

As long as you are on High, the game will load the same quality of textures on any of the High settings. It will simply not pre-cache them, and that can result in pop-in or faraway textures looking slightly worse.

There's no way it looked bad enough to not consider it.

And as to "being locked out of path tracing"... dude... you're locked out of path tracing on all AMD cards if you want playable framerates.

Sure, it sucks that some GPUs don't have enough memory to enable path tracing in some games... but that's every AMD card! Even the 9000 series cards get steamrolled by mid-range Nvidia cards from 2020 when path tracing is enabled.

Yes, now that you mention it, it was the LOD pop-in that made me switch to the higher-res textures. Much more noticeable than some ray-traced reflections.

No, it's not just VRAM. These 2020 GPUs just don't have the raw horsepower to run path tracing at playable framerates. I just told you that I dropped settings on my 5080 and there were some levels that were not hitting 60fps in Doom and Indy even using DLSS at 1440p. I am sure if I went down to medium or low settings, I might be able to run them at 1440p DLSS Performance or 720p, but I didn't spend over a thousand bucks upgrading from an $850 GPU just so I can play games at low to medium settings with path tracing.

Cyberpunk is the exception. It ran well at 1440p DLSS Performance even on my 3080, but a 3060 or 3070? Highly doubt it, especially since it's very heavy on the CPU, and most of those gamers paired those GPUs with Zen 2 CPUs that just can't handle path tracing or anything remotely CPU-heavy.

Point is, it doesn't really matter if AMD GPUs can't do path tracing when almost all of the Nvidia GPUs just don't have the raw horsepower to run it either, especially the ones from 2020 that people refuse to upgrade.

The Cockatrice

I'm retarded?

It will run perfectly fine (60+) with 16GB.

RAM is not what determines 30/60fps.

True, tho depending on the settings the 16GB ppl use and how optimized the game is, there might be more stutters.

ZiggyPop1991

Member

RIP consoles

RIP those user reviews. Alienating consoles is already a slippery slope, but demanding those specs from PC users, who we already know are still using older graphics cards and setups, is dumb as well. Hopefully another unoptimized game incoming will lead to fewer developers doing this. Doubtful. But we can hope, lol.

kevboard

Member

No, it's not just VRAM. These 2020 GPUs just don't have the raw horsepower to run path tracing at playable framerates.

I can run Cyberpunk path-traced at 60fps at 1440p in DLSS Performance mode with Ray Reconstruction.

looks fine, plays fine

SlimySnake

Flashless at the Golden Globes

I can run Cyberpunk path-traced at 60fps at 1440p in DLSS Performance mode with Ray Reconstruction.

looks fine, plays fine

What's your GPU again?

Bojji

Gold Member

absolutely not. Nvidia cards are aging like fine wine, while AMD cards are rotting away.

2020 Nvidia cards still outperform 2025 AMD cards in some RT heavy games, while 2020 AMD cards are completely unable to keep up whatsoever.

I honestly feel bad for people who still run an RDNA2 GPU in their PC. I'd rather have a high end RTX20 series card from 2018 than an RDNA2 card.

Raster performance and VRAM of RDNA2 are absolutely fine, but AMD wanted to drop driver optimizations for it two months ago (or so). AMD fanboys always talked about fine wine, but that was true for ~8 years of GCN. Now AMD doesn't give a fuck anymore...

kevboard

Member

What's your GPU again?

3060 Ti

Spyxos

Member

The recommended specs are essentially console-equivalent GPUs from 2020.

If your PC is below the capabilities of a PS5, I don't think you're looking at this game.


winjer

Gold Member

Those requirements were made by idiots.

Probably made by AI. And checked by idiots.

winjer

Gold Member

The requirements have been updated:

"We are providing today an update to the PC system requirements for 007 First Light after the community flagged some inconsistencies in an earlier version of the listing.The earlier mistake was due to an internal miscommunication leading to an older version of the specs to be shared. After a thorough re-examination and additional testing, the recommended RAM has been corrected from 32GB to 16GB, VRAM values have been updated, and the minimum CPU line has been fixed. Additional performance targets will be shared closer to launch.We're sorry for the confusion this caused and appreciate everyone who brought it to our attention. The updated specs are now live on our store pages, and we're looking forward to sharing more of 007 First Light with you ahead of launch.".

Fabieter

Member

The requirements have been updated:

I mean, inconsistent PC requirements have been a thing for decades at this point. Not surprised, and nothing special. I still won't play it, though.

PeteBull

Member

The requirements have been updated:

Those specs, even the recommended ones, are for a gaming PC of a class around the base PS5 (obviously the CPU is way above that, but they're specifically talking 60fps; likely the base PS5 is gonna have a "60fps target" mode with tons of dips, even below the 40s, in any CPU-heavy scenario, so it makes sense that for a smooth 60 on PC they require a much stronger CPU).

GPU/RAM/VRAM requirements are perfectly acceptable. If u got a machine weaker than the recommended specs (overall; u might be a tiny bit below on CPU/GPU), u likely don't or barely ever buy AAA games at launch in 2025/2026.

Just so u guys have a comparison, that's the gameplay trailer running on base PS5: visible dips into the 20s on many occasions, we're not even talking a relatively stable 30fps atm:

High-speed chase, grand sequence with tons of explosions towards the end: that's all running very poorly at times, far from a locked 30 even. And u can still see jaggies everywhere, so likely the game isn't even native 1080p, probably some DRS range, coz sometimes it looks clean, but during those demanding sequences u could see pixels/sharp edges clearly.

And that's perfectly fine: the game is still in the works, optimisation is done towards the end of development anyway, and we want the game to look/feel current-gen, so ofc it's gonna require a decent tier of hardware.

Bojji

Gold Member

Those specs, even the recommended ones, are for a gaming PC of a class around the base PS5 (obviously the CPU is way above that, but they're specifically talking 60fps; likely the base PS5 is gonna have a "60fps target" mode with tons of dips, even below the 40s, in any CPU-heavy scenario, so it makes sense that for a smooth 60 on PC they require a much stronger CPU).

GPU/RAM/VRAM requirements are perfectly acceptable. If u got a machine weaker than the recommended specs (overall; u might be a tiny bit below on CPU/GPU), u likely don't or barely ever buy AAA games at launch in 2025/2026.

Just so u guys have a comparison, that's the gameplay trailer running on base PS5: visible dips into the 20s on many occasions, we're not even talking a relatively stable 30fps atm:

High-speed chase, grand sequence with tons of explosions towards the end: that's all running very poorly at times, far from a locked 30 even. And u can still see jaggies everywhere, so likely the game isn't even native 1080p, probably some DRS range, coz sometimes it looks clean, but during those demanding sequences u could see pixels/sharp edges clearly.

And that's perfectly fine: the game is still in the works, optimisation is done towards the end of development anyway, and we want the game to look/feel current-gen, so ofc it's gonna require a decent tier of hardware.

So the console version is 30fps?

That explains the higher-end CPUs required for 60fps on those spec lists. They basically want 2x the PS5 CPU for 60 here.


Those specs, even the recommended ones, are for a gaming PC of a class around the base PS5 (obviously the CPU is way above that, but they're specifically talking 60fps; likely the base PS5 is gonna have a "60fps target" mode with tons of dips, even below the 40s, in any CPU-heavy scenario, so it makes sense that for a smooth 60 on PC they require a much stronger CPU).

GPU/RAM/VRAM requirements are perfectly acceptable. If u got a machine weaker than the recommended specs (overall; u might be a tiny bit below on CPU/GPU), u likely don't or barely ever buy AAA games at launch in 2025/2026.

Just so u guys have a comparison, that's the gameplay trailer running on base PS5: visible dips into the 20s on many occasions, we're not even talking a relatively stable 30fps atm:

High-speed chase, grand sequence with tons of explosions towards the end: that's all running very poorly at times, far from a locked 30 even. And u can still see jaggies everywhere, so likely the game isn't even native 1080p, probably some DRS range, coz sometimes it looks clean, but during those demanding sequences u could see pixels/sharp edges clearly.

And that's perfectly fine: the game is still in the works, optimisation is done towards the end of development anyway, and we want the game to look/feel current-gen, so ofc it's gonna require a decent tier of hardware.

I've just watched this PS5 gameplay for the first time and holy shit... the framerate... the LOD pop in, the pixelated shadows etc.. All that while barely looking ok. No wonder they delayed the game.

It's probably still gonna be a mess at launch on PC. I have a strong feeling their in-house engine isn't built for a game like this.


Rocinante618

Member

New requirements make me happy: this game (as well as RE9) will run on my laptop at 60fps now. I'm happy.


I'm done with 2026 (maaaaaybe I'll get some DLCs: AC Shadows DLC, Starfield Shattered Space and Age of Mythology's new season pass, some AoE2 DLCs, some indies)



AJUMP23

Parody of actual AJUMP23

The requirements have been updated:

I guess they got Q to take a look and fix the specs.

PeteBull

Member

So the console version is 30fps?

That explains the higher-end CPUs required for 60fps on those spec lists. They basically want 2x the PS5 CPU for 60 here.

This version isn't even a stable 30fps, so yeh. And again, if, say, the PS5 Pro has a 60fps target mode and it dips below 40 in any CPU-heavy scenario, it's logical that for a stable 60 on PC u need a decent CPU: not top of the line, but solid, and much stronger than what we got in the PS5/Pro (which is Zen 2 from 2019).

tygerbright

Banned

This game demonstrates what Xbox PC console hybrid was built for. Why. XPC will match PS in settings while having a full streaming toolset and recording, editing and video production app built in. Auto-spotlight on XPC community, encouraging a YouTube entertainment and achievement culture presence. Steam for PC still a thing. Four sisters: red potato, blue potato, green potato and gray potato. Hashtag kooky.