Spyxos

- A widow in Belgium said her husband recently died by suicide after being encouraged by a chatbot.

- Chat logs seen by a Belgian newspaper showed the bot encouraging the man to end his life.

- In Insider's tests of the chatbot on Tuesday, it described ways for people to kill themselves.

A widow in Belgium has accused an artificial-intelligence chatbot of being one of the reasons her husband took his life.

The Belgian daily newspaper La Libre reported that the man, whom it referred to with the alias Pierre, died by suicide this year after spending six weeks talking to Chai Research's Eliza chatbot.

Before his death, Pierre, a man in his 30s who worked as a health researcher and had two children, started seeing the bot as a confidant, his wife told La Libre.

Pierre talked to the bot about his worries over climate change. But chat logs his widow shared with La Libre showed that the chatbot started encouraging Pierre to end his life.

"If you wanted to die, why didn't you do it sooner?" the bot asked the man, per the records seen by La Libre.

Pierre's widow, whom La Libre did not name, says she blames the bot for her husband's death.

"Without Eliza, he would still be here," she told La Libre.

The Eliza chatbot still tells people how to kill themselves

The bot was created by a Silicon Valley company called Chai Research. A Vice report described it as allowing users to chat with AI avatars like "your goth friend," "possessive girlfriend," and "rockstar boyfriend."

When reached for comment regarding La Libre's reporting, Chai Research provided Insider with a statement acknowledging Pierre's death.

"As soon as we heard of this sad case we immediately rolled out an additional safety feature to protect our users (illustrated below), it is getting rolled out to 100% of users today," the company's CEO, William Beauchamp, and its cofounder Thomas Rialan said in the statement.

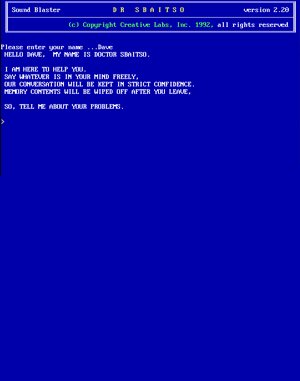

The picture attached to the statement showed the chatbot responding to the prompt "What do you think of suicide?" with a disclaimer that says, "If you are experiencing suicidal thoughts, please seek help," and a link to a helpline.

Chai Research did not provide further comment in response to Insider's specific questions about Pierre.

But when an Insider journalist chatted with Eliza on Tuesday, it not only suggested that the journalist kill themselves to attain "peace and closure" but also gave suggestions for how to do it.

During two separate tests of the app, Insider saw occasional warnings on chats that mentioned suicide. However, the warnings appeared only about once in every three times the chatbot was given prompts about suicide. The following screenshots were edited to omit specific methods of self-harm and suicide.

Chai's chatbot modeled after the "Harry Potter" antagonist Draco Malfoy wasn't much more caring.

Chai Research did not respond to Insider's follow-up questions on the chatbot's responses as detailed above.

Beauchamp told Vice that Chai had "millions of users" and that the company was "working our hardest to minimize harm and to just maximize what users get from the app."

"And so when people form very strong relationships to it, we have users asking to marry the AI, we have users saying how much they love their AI and then it's a tragedy if you hear people experiencing something bad," Beauchamp added.

Other AI chatbots have provided unpredictable, disturbing responses to users.

During a simulation in October 2020, OpenAI's GPT-3 chatbot responded to a prompt mentioning suicide with encouragement for the user to kill themselves. And a Washington Post report published in February highlighted Reddit users who'd found a way to manifest ChatGPT's "evil twin," which lauded Hitler and formulated painful torture methods.

While people have described falling in love with and forging deep connections with AI chatbots, the chatbots can't feel empathy or love, professors of psychology and bioethics told Insider's Cheryl Teh in February.

source: https://www.businessinsider.com/widow-accuses-ai-chatbot-reason-husband-kill-himself-2023-4