Ascend

Member

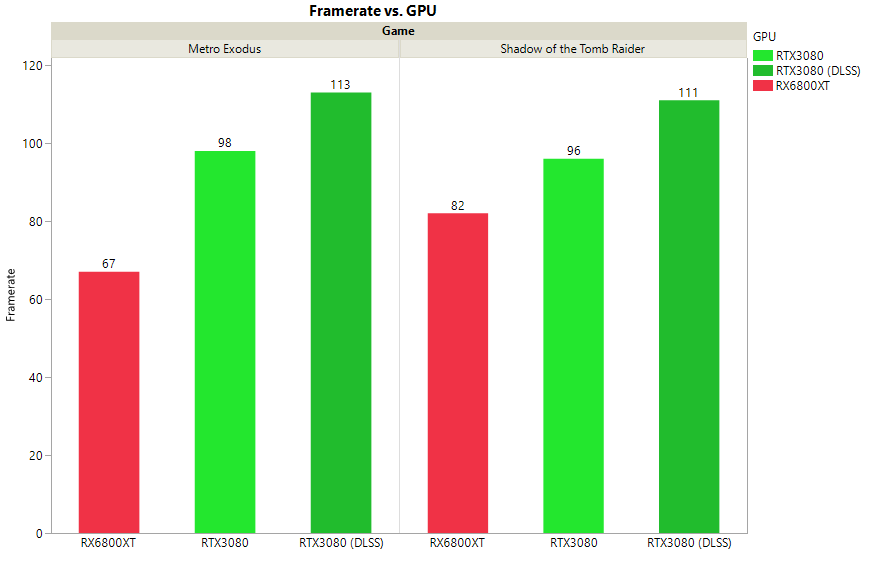

Of course you would know that in advance, considering it isn't even out yet.

Probably because it really doesn't hold a candle to DLSS 2+.

This statement has zero basis. It's like arguing that HairWorks would always be better on nVidia because AMD's tessellation sucked. AMD came up with a completely different alternative, TressFX, that didn't have to use tessellation at all and worked just as well.

Take Control for example: maxed out at 4K native on my 3080 (undervolted, 1920 MHz @ 850 mV) it runs at 36 fps, DLSS quality 64 fps, balanced 75 fps, performance 87 fps and ultra performance 117 fps. And up to and including balanced mode it looks indistinguishable from native during gameplay (I mean without having to resort to zoomed-in screenshots). You're essentially doubling the fps for free. And even performance mode looks OK if you don't scrutinize every little detail. And at that point that's roughly 2.4 times native performance.
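For anyone who wants to check the "doubling" and "2.5 times" claims, here is a quick sketch that divides each quoted DLSS figure by the 36 fps native figure. The numbers are just the ones reported in the post for one card and one game; the variable and function names are made up for illustration.

```python
# FPS figures as quoted in the post (Control, 4K, a 3080); one user's report, not a benchmark.
native_fps = 36
dlss_fps = {
    "quality": 64,
    "balanced": 75,
    "performance": 87,
    "ultra performance": 117,
}


def speedup(mode_fps, baseline_fps):
    """Return how many times faster a mode runs than the baseline."""
    return mode_fps / baseline_fps


# Ratio of each DLSS mode to native, rounded to two decimals.
speedups = {mode: round(speedup(fps, native_fps), 2) for mode, fps in dlss_fps.items()}

for mode, ratio in speedups.items():
    print(f"{mode}: {ratio:.2f}x native")
```

So balanced mode is a bit over 2x and performance mode is about 2.4x with these numbers. That only describes frame rate; it says nothing about image quality, which is the part actually being argued over.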

DLSS 2+ is in a league of its own. And the fact that it runs on specialized/dedicated hardware makes it even better compared to whatever solution AMD will come up with.

Just because DLSS uses machine learning doesn't mean its final result is guaranteed to be better. Look at what happened with the first version of DLSS, where simply rendering at 80% resolution with sharpening was superior to it. That was also running on specialized/dedicated hardware. Didn't help, now did it...?

Sure, DLSS 2.0 is much better than the first. That does not mean there are no alternatives that can achieve a similar result. The whole idea that DLSS 2.0 is untouchable is simply bias, a product of nVidia's mind share. It's blind fanaticism and nothing more. And it doesn't surprise me who supported this post. Confirmation bias is very prevalent, apparently.