AnythingBut

No building yet, as I have to get off to work in 40 mins, but I think work will be done with an extra bit of zest today.

Nice. Be interesting to see how you get on with overclocking later. Conflicting reports on the 1700 in that regard.

I could only get this motherboard after the Amazon shenanigans with pre-orders yesterday. I'll see how it goes and decide if I want/need a different one once they're more easily available.

I usually go with the best chipset and mobo, but I now tend to think a bit more economically.

Looking at the ASRock AB350 one currently - anything against the AB350 versus the X370 for average workstation/gaming usage?

Not in most gaming scenarios, no.

Are we expecting the 6-core Ryzen variant to perform significantly worse than the 8-core variant in gaming scenarios?

GPU load is at 99% throughout the video. That's a measure of GPU performance, not a CPU test.

You have to use a fast enough GPU setup, reduce the resolution, or reduce graphical options as much as necessary to prevent the GPU from ever hitting 99/100% if you are running a CPU test.
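To make that reasoning concrete, here is a toy model (a sketch, not any reviewer's actual method; every number in it is hypothetical). It assumes CPU and GPU work on consecutive frames overlap, so the slower of the two sets the frame time, and that GPU cost scales linearly with pixel count, which is only a rough approximation.

```python
"""Toy model of CPU- vs GPU-bound frame rates (all costs are hypothetical)."""

RESOLUTIONS = {
    "720p": 1280 * 720,
    "1080p": 1920 * 1080,
    "1440p": 2560 * 1440,
    "4K": 3840 * 2160,
}


def gpu_frame_ms(gpu_ms_at_1080p: float, resolution: str) -> float:
    """Assume GPU cost per frame scales linearly with pixel count (a simplification)."""
    return gpu_ms_at_1080p * RESOLUTIONS[resolution] / RESOLUTIONS["1080p"]


def fps(cpu_ms: float, gpu_ms: float) -> float:
    """With CPU and GPU work pipelined, the slower stage sets the frame time."""
    return 1000.0 / max(cpu_ms, gpu_ms)


if __name__ == "__main__":
    fast_cpu_ms, slow_cpu_ms = 5.0, 7.0  # hypothetical per-frame CPU cost
    gpu_ms_1080p = 6.0                   # hypothetical GPU cost at 1080p
    for res in RESOLUTIONS:
        g = gpu_frame_ms(gpu_ms_1080p, res)
        print(f"{res:>6}: fast CPU {fps(fast_cpu_ms, g):6.1f} fps, "
              f"slow CPU {fps(slow_cpu_ms, g):6.1f} fps")
```

With these made-up costs, the two CPUs post identical frame rates at 1440p and 4K, where the GPU sets the pace, and only separate at 1080p and 720p.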

It is incredibly frustrating that so many sites/youtube channels have no idea what they're doing when testing the gaming performance of a CPU - and worse, that AMD encouraged reviewers to set up GPU-bottlenecked tests.

http://www.gamersnexus.net/hwreview...review-premiere-blender-fps-benchmarks/page-7

Yeah, not even close.

This shit would be called out in a fucking instant if it were any other company. People just love the underdog, even when they use shit tactics like every other company.

Yeah, just watched the Linus Tech Tips video and all their games were running at 4K.

How is that an optimal way to test CPUs?

Really decent improvement by disabling SMT. Does that point to something that can be improved via software?

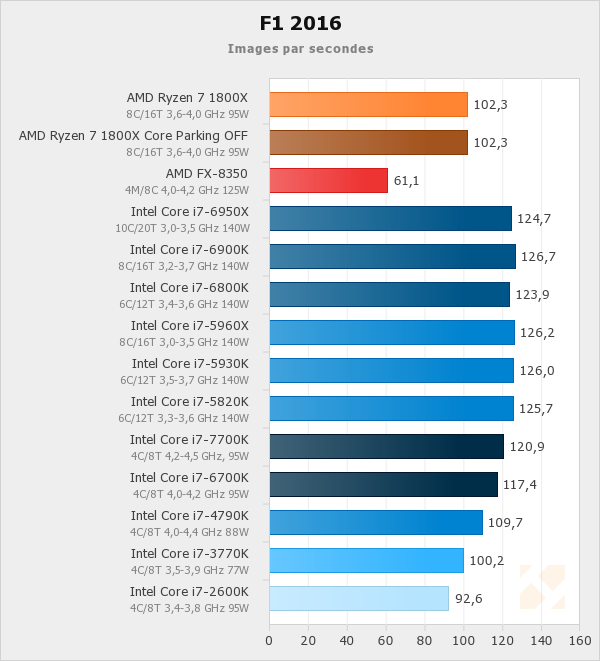

http://www.hardware.fr/articles/956-17/jeux-3d-project-cars-f1-2016.html

So what would be the Intel equivalent to a 1600X in terms of gaming performance?

Edit: OK, no benchmarks for the 1600X yet, I think...

The current data presented by AMD themselves has the 6-core clocked lower than the Ryzen 7 1700. Clock speed is already Ryzen's problem when it comes to gaming, so unless they make it possible to OC it to 4.5GHz+, it will be a bit problematic. Plus, Intel will have launched the Kaby Lake refresh (7740K) at that point; it's an easy fix for them to just spend $1 extra on the thermal interface under the IHS.

If you're referring to Joker's review, maybe turn on the sound. He explains why the GPU load is 99% in his 1080p tests: VSync off means GPU load will always show 99%. He discusses it further in his follow-up videos.

It is incredibly frustrating to see people trash reviews without any idea what's going on.

The 7740k and 7640k are coming for socket 2066, without iGPUs. They're not refreshes.

https://www.techpowerup.com/230474/...5-7640k-codenamed-kaby-lake-x-112w-tdp-no-igp

https://benchlife.info/intel-kaby-lake-x-with-core-i7-7740k-and-core-i5-7640k-x299-02072017/

Wonder what the pricing would be on those CPUs. I'd assume they'd be cheaper than we expect, considering they don't have iGPUs.

They are HEDTs (basically re-purposed Xeons), so they come with higher premiums than normal KBL-S. The motherboard alone will be much more expensive.

No, it is not; the 1600X is clocked at 3.6/4.0GHz.

It's optimal to use that review along with the others to build an overall picture of whether this CPU will suit your specific usage patterns. It's apparent this CPU sings when all the cores are lit up; the question is, does your usage light all the cores up? Or do the benefits of lighting all the cores up outweigh the times when you won't be doing that in your rig?

I doubt he said that, because it's wrong, as any hardware reviewer would know. If you're CPU-limited, you will not have 99% GPU usage, because your GPU is waiting on your CPU and thus isn't working at full speed.

Ouch, all this doom & gloom, and it's down to some board manufacturers having poor BIOSes.

So pick one. Either it's GPU-bound (as claimed) or it's CPU-bound (and then the tests do show CPU-bound results).

So which one is it? It can't be both.

He's wrong. VSync off does not mean GPU usage will always be pegged at 99%. I can take some screenshots to show you if you want.

You don't have to believe me, just watch the criticized reviews. There is even a 40-minute talk about what was tested, how, and why it makes sense.

Please tell me this is a hidden joke.

No, it's not the BIOS; it's whether or not you are testing in a GPU-limited scene.

AMD even asked reviewers to use GPU-limited scenes because it makes their CPUs look closer to Intel's.

Any good review should show both cases, 4K and 1080p (preferably with low settings). While 4K might give a more realistic idea of how the CPUs fare with current graphics hardware, 1080p gives a better idea of the future, since the graphics card is quite likely to be replaced before the CPU is, and graphics cards have been evolving nicely.

But the initial critique against him was that he is offering GPU-bound scenarios. If they are not GPU-bound, then by definition they will be CPU-bound scenarios.

You don't see the flaw in this methodology?

But they are GPU bound. If the GPU usage is fixed at 99, then they are by definition GPU bound. I really don't see what's hard to understand about this.

Gamersnexus talked about this in their YT review. AMD suggested running Ryzen benchmarks at 1440p, which is indeed a bit misleading.

(Gamersnexus YT review @ 18:45)

At 1080p the 7700K is ~34% faster than the 1800X (both at stock clocks).

At 1440p the 7700K is only ~10% faster.

Really strange, since they are still listed as a dual-channel design... hmm, I guess they are abandoning the old socket (or maybe the rumors are just false).

It's incredibly frustrating that you (and apparently this so-called "reviewer") have no idea how computers work.

If you are CPU-limited in a given gameplay scenario, then your GPU load will not be at 100%. This is completely irrespective of vertical synchronization.
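A minimal sketch of that point, using a toy model with hypothetical costs. It treats GPU utilization as the GPU's busy share of each frame; real monitoring tools report busy time over a sampling window, and real VSync with double buffering also snaps frame times to multiples of the refresh interval, which this ignores. Even so, it shows a CPU-limited frame leaving the GPU idle part of the time whether or not VSync is on.

```python
from typing import Optional


def gpu_utilization(cpu_ms: float, gpu_ms: float, vsync_hz: Optional[float] = None) -> float:
    """Fraction of each frame the GPU spends busy, in a pipelined toy model."""
    frame_ms = max(cpu_ms, gpu_ms)  # the slower stage sets the pace
    if vsync_hz is not None:
        # VSync can only stretch a frame to the refresh interval, never shorten it.
        frame_ms = max(frame_ms, 1000.0 / vsync_hz)
    return gpu_ms / frame_ms


print(gpu_utilization(cpu_ms=8.0, gpu_ms=4.0))               # CPU-limited, VSync off -> 0.5
print(gpu_utilization(cpu_ms=4.0, gpu_ms=8.0))               # GPU-limited, VSync off -> 1.0
print(gpu_utilization(cpu_ms=4.0, gpu_ms=8.0, vsync_hz=60))  # GPU-limited, capped at 60 Hz
```

Turning VSync off only removes the refresh-rate cap; it cannot push utilization above what the CPU's frame pacing allows.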

Seriously, these things should be the absolute minimum of basic knowledge needed to talk from a position of authority about a CPU, a GPU, or any hardware component (which is what a review is, or at least should be).

You can talk 40 hours about bullshit, that doesn't make it smell less.

What the picture clearly shows is that IPC on the current BIOS is lower, but there are more cores, so overall performance is considerably higher in software that can use all cores. The downside is that it's lower in software that can't.

The question here is whether we can expect devs to use more cores or whether they will stick with what they are currently doing. Bit of a chicken-and-egg scenario.
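That trade-off is roughly Amdahl's law. A minimal sketch, assuming a workload with a fixed fraction of perfectly parallel work and made-up relative per-core speeds (1.0 for a hypothetical 4-core, 0.85 for a hypothetical 8-core); these are not measured Ryzen or Intel figures, and SMT is ignored.

```python
def relative_throughput(parallel_fraction: float, cores: int, per_core_speed: float) -> float:
    """Amdahl's law with a per-core speed factor; 1.0 = one core at speed 1.0."""
    serial = 1.0 - parallel_fraction
    # Serial part runs on one core; the parallel part splits evenly across all cores.
    run_time = serial / per_core_speed + parallel_fraction / (per_core_speed * cores)
    return 1.0 / run_time


for p in (0.5, 0.95):
    quad = relative_throughput(p, cores=4, per_core_speed=1.0)   # hypothetical fast quad
    octo = relative_throughput(p, cores=8, per_core_speed=0.85)  # hypothetical slower octo
    print(f"parallel fraction {p:.2f}: 4 fast cores {quad:.2f}x, 8 slower cores {octo:.2f}x")
```

In this sketch the faster quad wins when half the work is serial, and the eight slower cores win when 95% of it is parallel, which is the chicken-and-egg question expressed in numbers.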

I wouldn't call it "a bit" misleading; it's a full-on attempt to fool consumers with a false picture of performance.

And it completely ignores that today's 1080p GPU performance is tomorrow's 1440p performance, as GPU power grows with each new generation.

Using their method, I could prove that FX is almost as good a gaming CPU as a Skylake i7.
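For a sense of scale on the 1080p-to-1440p point (assuming GPU load grows roughly with pixel count, which is only an approximation), the pixel ratios are:

$$
\frac{2560 \times 1440}{1920 \times 1080} = \frac{3\,686\,400}{2\,073\,600} = \frac{16}{9} \approx 1.78,
\qquad
\frac{3840 \times 2160}{1920 \times 1080} = 4
$$

So under that assumption, a future card would need roughly 1.78x today's pixel throughput to run 1440p at the frame rates a current card reaches at 1080p.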

It's okay, then they *are* GPU-bound. But what is the point of attacking the VSync angle, which WOULD produce (according to your own posts, for example) non-GPU-bound scenarios where we CAN see the CPU failing to send data to the GPU fast enough? That was my point. I am not arguing that the videos are good; I am arguing that it can't be wrong for TWO opposing reasons at the same time.

It's a talk with Gamersnexus about both their testing methods, not only a defense from Joker.

If 99% GPU usage is hit pretty much all the time in any tested game at 1080p, wouldn't that mean any test at 1080p is useless, since it's always GPU-bound?

Yes, when your purpose is testing CPU performance, any game scenario with a 99% GPU load is useless.

That's not really true though?

I paid around 350 for the 5820K in 2014. Now the Ryzen 7 1700 (never mind the X or the 1800) is 360.

I agree as to the significance of the WD2 results, but your remark about the 7700K doesn't hold true for frametimes, at Computerbase at least:

That said, Intel's 6- and 8-core CPUs show much better scaling.
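Averages can hide stutter, which is why frametime data matters here. A minimal sketch, assuming a plain list of per-frame render times in milliseconds; the two runs below are made-up data, not Computerbase's, and the nearest-rank 99th percentile is just one common way to summarize frametimes, not necessarily the method any particular site uses.

```python
import math
from typing import List


def average_fps(frame_times_ms: List[float]) -> float:
    """Frames rendered divided by total seconds elapsed."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def percentile_frame_time(frame_times_ms: List[float], pct: float = 99.0) -> float:
    """Nearest-rank percentile: pct% of frames took this long or less."""
    ordered = sorted(frame_times_ms)
    rank = math.ceil(pct * len(ordered) / 100.0)
    return ordered[max(rank, 1) - 1]


smooth = [10.0] * 100             # hypothetical: steady 10 ms frames
spiky = [9.0] * 90 + [19.0] * 10  # hypothetical: same average, with spikes

for name, run in (("smooth", smooth), ("spiky", spiky)):
    print(f"{name}: {average_fps(run):.1f} fps average, "
          f"99th percentile frame time {percentile_frame_time(run):.1f} ms")
```

Both runs average 100 fps, but the spiky one's 99th-percentile frame time is nearly twice as long.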

Essentially, yes. I could for example bust out a Bulldozer CPU, show you footage of it and a 7700K running a game at the same framerate (because they're both GPU bound at 99%) and claim that the Bulldozer was as good as the 7700K for gaming. Now we all know that's not true, because the methodology was flawed.

Edit: Same point, beaten by michaelius above.

Where do the differences come from, then? Why test at 1080p at all (that's what most people seem to want), if any modern game is apparently still heavily GPU-limited at 1080p, even with the fastest GPUs?

Also, isn't this then a test of which games are CPU-bound at all? Games that are really CPU-limited would show a drop in GPU usage.
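That is a reasonable check to run on logged data. A minimal sketch, assuming GPU utilization samples logged as fractions from 0 to 1 (e.g. exported from a monitoring tool). The 0.95 cutoff and the helper name are arbitrary, hypothetical choices, and a frame cap or VSync can also pull utilization down, so a low share is a hint rather than proof of a CPU limit.

```python
from typing import Sequence


def gpu_saturated_share(gpu_util_samples: Sequence[float], threshold: float = 0.95) -> float:
    """Fraction of samples where the GPU looks saturated (at or above threshold)."""
    if not gpu_util_samples:
        raise ValueError("need at least one utilization sample")
    saturated = sum(1 for u in gpu_util_samples if u >= threshold)
    return saturated / len(gpu_util_samples)


# Hypothetical log: mostly pegged, with a few dips where the CPU may be holding it back.
samples = [0.99, 0.99, 0.98, 0.80, 0.75]
print(gpu_saturated_share(samples))  # 0.6
```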

Slightly OT, but try a clean reinstall of XCOM 2 without the mods once.

I wouldn't just assume things, though, and there are cases where single-thread performance is highly important and actually limiting right now, but unfortunately most reviews don't even touch titles like XCOM 2. I've spent over 1000 hours on it, and it runs like a dog, and the only thing that'll help even slightly is high clocks and IPC. That's an actual reason to opt for the 7700K, not because GTA V runs at 150 vs. 180 FPS on your 60 Hz monitor with a Titan X at 1080p.