TC McQueen

Member

Well, someone apparently got a Ryzen R5 1400 early and made a video about it:

https://www.youtube.com/watch?v=DbDpMWo7XTk

Using all three here.

In what world does core performance scale linearly with clockspeeds?

Can I just OC my FX-6300 from 3.5GHz to 4.5GHz and get a hefty ~32% increase? Sign me up on that plane!

If this is your attempt at a joke then right on, it's about time you loosened up and that had me chuckling.

If not, in what parallel universe does CPU clockspeed + IPC correlate directly to FPS in games? This takes the biscuit in this thread for most 'out there' reasoning.

Is this thread really just arguing over irrelevant benchmarks? Firmware and support aren't there yet for Ryzen, so any comparison with Intel's more mature architecture isn't an apples-to-apples comparison. When Conroe first came out, the gaming performance didn't blow AMD out of the water and people kind of flipped; look how that turned out.

It is apples to apples for consumers whose purchasing decisions are made now and not later. AMD released the product, so they think it's mature enough for release.

Anyways, the difference between AMD and NVIDIA GPUs used with Ryzen was interesting.

I don't see the same type of advancements made with Kaby Lake one month after release.

It seems Nvidia is still reliant on a heavy single thread even for its DX12 driver, so it may be falling into the same typical scheduler-force-moved-me-across-CCXes performance grave as so many similar cases with Ryzen under Windows.

Pretty good video.

It's not irrelevant for people who are looking at a new build in the near future and want the best price/performance for today.

When an RX 470 is beating a GTX 1060 by 32% there is definitely something wrong. No card from that market segment should be CPU bottlenecked on a R7 1700 so the problem lies elsewhere.

Doesn't work like that when the CPU in question is already clocked at 3.6GHz.

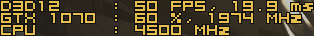

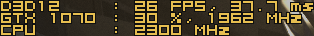

Well, that doesn't make any sense, since the i5-2500K I used for this test is a 3.3GHz processor at stock, overclocked to 4.5GHz, and then clocked down to 2.3GHz for this comparison.

Results ( avg fps/cpu load/gpu load )

AMD DX11: 128.4 fps CPU: 33% GPU: 71%

NVIDIA DX12: 143.9 fps CPU: 42% GPU: 67%

NVIDIA DX11: 161.7 fps CPU: 40% GPU: 80%

AMD DX12: 189.8 fps CPU: 49% GPU: 86%
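To put the four averages above side by side, here is a minimal Python sketch that turns them into relative differences. The FPS values are copied from the list; the dictionary layout and the `percent_faster` helper are only illustrative, and the comparison assumes all four runs used the same scene and settings, which the post does not state.

```python
# Average FPS figures copied from the results listed above.
results = {
    ("AMD", "DX11"): 128.4,
    ("NVIDIA", "DX12"): 143.9,
    ("NVIDIA", "DX11"): 161.7,
    ("AMD", "DX12"): 189.8,
}


def percent_faster(a: float, b: float) -> float:
    """Return how much faster `a` is than `b`, in percent (negative if slower)."""
    return (a / b - 1.0) * 100.0


# Vendor against vendor within the same API.
amd_vs_nvidia_dx12 = percent_faster(results[("AMD", "DX12")], results[("NVIDIA", "DX12")])
nvidia_vs_amd_dx11 = percent_faster(results[("NVIDIA", "DX11")], results[("AMD", "DX11")])

# DX12 against DX11 within the same vendor.
amd_dx12_gain = percent_faster(results[("AMD", "DX12")], results[("AMD", "DX11")])
nvidia_dx12_gain = percent_faster(results[("NVIDIA", "DX12")], results[("NVIDIA", "DX11")])

print(f"AMD vs NVIDIA (DX12): {amd_vs_nvidia_dx12:+.1f}%")
print(f"NVIDIA vs AMD (DX11): {nvidia_vs_amd_dx11:+.1f}%")
print(f"AMD DX12 vs DX11:     {amd_dx12_gain:+.1f}%")
print(f"NVIDIA DX12 vs DX11:  {nvidia_dx12_gain:+.1f}%")
```

Read this way, the AMD card gains roughly 48% going from DX11 to DX12 while the NVIDIA card loses about 11%, and the DX12 gap between the two works out to about 32%.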

Rise of the Tomb Raider (DX12) 720p high details:

Titan X: 40 min/105 avg/158 max

Fury X: 49 min/130 avg/230 max

same with AotS 720p high details and DX12:

Titan X: 62 fps

Fury X: 72 fps

So if I have an RX480 and am looking to get a new CPU, a Ryzen 5 is probably still the best bang for my buck? It's just when paired with an Nvidia GPU it's underperforming?

More like Nvidia is underperforming using DX12 on Ryzen, but yes.

https://www.youtube.com/watch?list=PL_sfYUCEg8Og_I4k7nL62IsMrJv5rFRa_&v=QBf2lvfKkxA

Shocking how the 'tech press' missed this. That's exactly what some of these sites should be investigating if Ryzen was throwing up some big anomalies. But in this culture of 'first' and impatience, all they cared about was getting their review out asap.

But even the late reviews were completely oblivious.

Another one with the same findings:

German magazine "C't" did some benchmarks with a Fury X, too. (and the 1800X)

https://www.reddit.com/r/Amd/commen...agazine_ct_did_a_test_with_a_fury_x/?sort=new

This is significant since you have to consider that the Fury X is about half the speed of a Titan X Pascal. The 720p results show that the Fury X/Ryzen combo is very close to the TXP in most of the games tested, not just in ROTTR or in DX12 only. The problem seems to affect DX11 too, since the Fury X is doing so well against the TXP in Shadow of Mordor and the other DX11 games. If they had used mGPU Fury X to simulate a TXP-equivalent AMD card, the Ryzen would have easily beaten the best Intel+TXP score in all the games tested. This is more confirmation that the Nvidia GPU driver is a major factor in poor Ryzen gaming performance.

Using his modus operandi dr_rus could easily argue it's actually Pascal's hardware design that's broken.

Nvidia's drivers have taken a nose-dive of late. DX12 performance is shocking in these games, and I reckon it's mostly software based but also hardware too.

This is getting off-topic, but I wouldn't be surprised if the cause is completely in software. Nvidia has for years (decades?) been using a platform-agnostic binary blob as its driver, which it then adapts to interface with its different hardware as well as the different platforms and graphics APIs. This is how they manage to support new APIs quite quickly, and because the driver can also implement graphics features in software, the resulting all-encompassing driver support without differences in features has been one of Nvidia's big selling points over the years. Now the issue with DX12/Vulkan and Ryzen is that those work best by exploiting multithreading, while Nvidia's unified driver blob worked perfectly well without heavy multithreading up to this point.

Interesting. Agnostic binary blob is also the best technical term I heard this year.

FYI, binary blob is a common term for proprietary, closed-source drivers under Linux (and by extension Android), and Nvidia is probably the best-known actor using one there (loved by gamers for its feature parity, frowned upon by those wanting a transparent system).

Vega vs 1080/1080 Ti in DX12/Vulkan is going to produce fireworks.

It seems to me AMD is going all-in with multi-threading. Vega + Ryzen could make for a killer combo for a gaming rig when the dust settles.

Yes, in much the same way Polaris vs. 1060 produced fireworks.

Wait.

LOL

If Vega can't even outperform a 1080 how's it going to take on the 1080 Ti?

yeah! because the rx480 is shit and loses in every game against the 1060!

OH WAIT

And it's funny you already have Vega benchmarks.

The RX 480, despite being the All-Hyped DX12 video card, at best matches the 1060, trading blows with it, losing some benchmarks and winning others.

So you'll forgive me if I'm less than interested in how the Vega performs when I'm already planning on buying a 1080 Ti.

I like Ryzen quite a bit for what it offers and this is a Ryzen thread so I don't even know why people are bringing Vega up in it. But the Radeon line has consistently been a day late and a dollar short and Vega is unlikely to change that reality.

Has Vega already been released? Link me to some reviews... I don't know how I missed the launch, because I've been anticipating the thing for so long...
The only thing that will be a dollar short is you after paying the Nvidia mark-up for your 1080 Ti. Vega will be cheaper at least.

What mark-up? Are there currently any competitive products with the 1080 Ti that I'm unaware of? When there's only one product in a category, there is no mark-up. It's either take it or leave it.

Sure, Vega will be cheaper. And it will perform less. So I'm not understanding what you mean by mark-up here. If it performs less, it ought to cost less.

Anyways, this is the Ryzen thread. So I've said all I will say about Vega here.

If you guys want a good explanation of the new AotS patch and the Ryzen improvements, here it is: https://twitter.com/FioraAeterna/status/847472586581712897

I have no idea about current 1080ti pricing but if there is an MSRP and retailers are selling it for above MSRP isn't that the definition of a markup?

If you want to make that argument I think you can only do that with something that doesn't have a set MSRP.

You said "Nvidia mark-up" implying Nvidia was overcharging for their products, not retailers. That's what he was arguing. On top of that, the people with the highest performing hardware always set the price where they want it. Remember how much the 7970 cost before the 680 dropped?

What mark-up? So you think they just about break even on those £830 1080 Tis? Come on dude.

When the 980 Ti came out, you could get them for £550. Now the new generation of that card costs £800. That's a pretty astonishing £250 mark-up in such a short space of time. There's no excuse for it.

980 Ti launched at $649 and the 1080 Ti is $699.
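The two sets of numbers in this exchange measure different things, which a quick calculation makes visible. This is only a sketch using the figures quoted by the posters: the dollar prices are the launch prices as stated in the thread, the pound prices are the street prices one poster recalls, and the `percent_increase` helper is illustrative.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the increase from `old` to `new` as a percentage of `old`."""
    return (new - old) / old * 100.0


# US launch prices as quoted in the thread: 980 Ti -> 1080 Ti.
usd_increase = percent_increase(649, 699)

# UK prices as quoted in the thread: 980 Ti -> 1080 Ti.
gbp_increase = percent_increase(550, 800)

print(f"USD launch price increase: {usd_increase:.1f}%")
print(f"GBP quoted price increase: {gbp_increase:.1f}%")
```

On these figures the US launch price rose by under 8%, while the quoted UK prices rose by about 45%, so part of the disagreement is about which pair of prices is being compared.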

A really interesting video that helps to explain some of the uneven game performance on Ryzen and why AMD's GPUs seem less affected by it:

AMD vs NV Drivers: A Brief History and Understanding Scheduling & CPU Overhead

Hopefully this is something that NVIDIA will be working to improve.

You don't even know that though. Nobody knows how Vega performs.