
Rentahamster

Rodent Whores

I like that dude's hair

SlimySnake

Flashless at the Golden Globes

Someone should use DLSS on his shirt. My eyes are bleeding.

amigastar

Member

I still don't know how this works, I'm still on a measly 1060.

Does DLSS need online to work?

As far as I know, no, it doesn't. The Super Sampling comes with the latest Nvidia driver releases and is only a few megabytes in size.

You need an RTX graphics card, though.

MrFunSocks

Banned

That's one stylish fella haha. Great presenter too.

DLSS is great, it's great seeing the evolution of it. My 3070 should last a loooooooooong time if DLSS adoption keeps increasing.


bender

What time is it?

Someone should use DLSS on his shirt. My eyes are bleeding.

I always knew my grandmother's curtains would one day become sentient.

marjo

Member

I like that dude's hair

Hair was pretty good, but shirt was next level.

SatansReverence

Hipster Princess

That's cool, but where can I download the file so I can give it a go?

SlimeGooGoo

Banned

.


Bonfires Down

Member

I am very happy that I bought a 2070 instead of a 1080/ti at the time.

Great Hair

Banned

Better means "let's increase bloom, hide everything under a super bright sun ..."

Sosokrates

Report me if I continue to console war

I still don't know how this works, I'm still on a measly 1060.

Does DLSS need online to work?

U need an RTX card.

rofif

Banned

That hair is crazy but the man is surprisingly smart looking in the eyes and well spoken.

His teeth are so perfect and he even smiles... in a corporation? :O

DLSS is so fucking amazing. This is a 4K screenshot with DLSS Quality. Absolutely zero shimmering. It looks almost like 8K ground truth.


Kenpachii

Member

It's great. Shimmering is the worst thing in games, especially on small details. Back in the day I would AA the game to shit and tank my performance hard as a result, or supersample + AA to get rid of it; all of them tanked performance hard.

DLSS now just fixes this shit and gives bonus performance. It's great.


Little Mac

Member

That guy reminds me of my grandma.

sertopico

Member

I can confirm, DLSS 2.3 works wonders. I've never had such a pristine image. There's of course some "pixel crawling"/shimmering/flickering, or whatever you wanna call it, on some edges when moving, but it's much less present than before. Incredible; I wonder what this tech could do if they keep improving it. This is 1440p DLSS Quality (the version used for CP is 2.3.4):

And this is 4K DLSS Balanced (from my LG CX; HDR is on, so the colors are a bit washed out).



SlimeGooGoo

Banned

.


01011001

Banned

I can confirm, this DLSS 2.3 works wonders. Never had such a pristine image, there's of course some "pixel crawling"/shimmering/flickering or whatever you wanna call it when moving on some edges, but it's much less present than before. Incredible, I wonder what this tech could do if they keep improving it. This is 1440p DLSS Quality (version used for CP is 2.3.4):

Yeah, I posted this a few weeks ago when I got my new PC and experienced DLSS in person for the first time:

Same settings, but one is running at a lower resolution with UE4 upscaling + TAA, while the other runs with DLSS.

You can see that I get basically the same framerate (even slightly better on the DLSS settings, 2 FPS to be exact), while the DLSS output completely shits on the classically upscaled version.

The DLSS version looks almost like native 1440p + TAA, while the traditionally upscaled version clearly looks below 1080p.

That's DLSS PERFORMANCE MODE btw, the LOWEST QUALITY setting you can choose in Ghostrunner.
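For reference, the DLSS modes mentioned in this thread (Quality, Balanced, Performance) mainly differ in the internal resolution the game renders at before DLSS reconstructs the output. Below is a minimal sketch, assuming the commonly cited per-axis scale factors (Quality about 0.667, Balanced about 0.58, Performance 0.5, Ultra Performance about 0.333); those factors are an assumption here, and individual games can use others.

```python
# Minimal sketch: internal render resolution for each DLSS quality mode.
# ASSUMPTION: commonly cited per-axis scale factors; individual games may differ.
DLSS_SCALE = {
    "Quality": 2 / 3,
    "Balanced": 0.58,
    "Performance": 0.5,
    "Ultra Performance": 1 / 3,
}


def internal_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Return the (width, height) rendered before DLSS upscales to out_w x out_h."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


if __name__ == "__main__":
    for (w, h), mode in [((2560, 1440), "Quality"),
                         ((2560, 1440), "Performance"),
                         ((3840, 2160), "Balanced")]:
        iw, ih = internal_resolution(w, h, mode)
        share = (iw * ih) / (w * h)
        print(f"{w}x{h} {mode}: renders {iw}x{ih} ({share:.0%} of the output pixels)")
```

Under these assumed factors, DLSS Performance at a 1440p output renders internally at 1280x720, a quarter of the output pixels, which would explain why its framerate lands close to a conventional lower-resolution upscale.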


sertopico

Member

Yup, it's crazy how effective this reconstruction technique is at upscaling the image while preserving the details at the same time. I was pretty skeptical at first, not to mention that the older versions weren't as good as the newer ones. Besides, they've improved DLSS Performance and Balanced quite a bit in the latest releases, since DLSS 2.2.x came out.


JonSnowball

Member

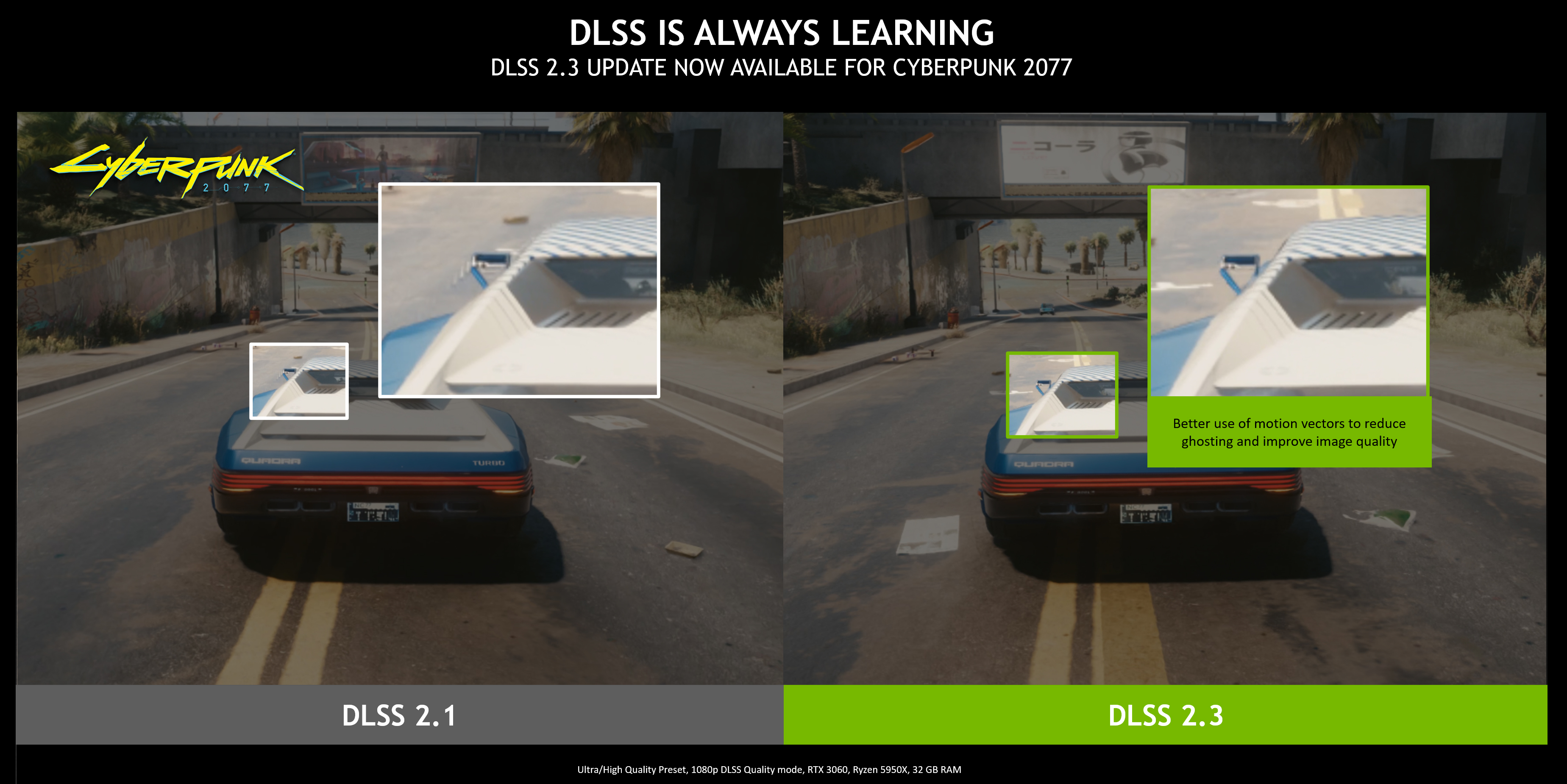

The ghosting in this image is gone, the previous DLSS image has artifacts surrounding the car. For a better example see the article here: https://www.nvidia.com/en-us/geforce/news/nvidia-image-scaler-dlss-rtx-november-2021-updates/Better means "lets increase bloom, hide everything under a super bright sun ..."

BusierDonkey

Member

This dude is rocking that hair, those glasses and that shirt and somehow not coming across as a clown.

I will buy your DLSS, sir. Well done.

Seriously though, DLSS is the shit. Even with the current version, Doom Eternal + RT with all the boxes ticked runs on my system at a rock-solid 120fps and somehow manages to look better than it does without DLSS. This new version seems to address the few issues in the prior version that I could probably only pick out with a microscope. I'm curious what the next big evolution of the technology, or 3.0, will be.

Microsoft needs to start using this in their big PC releases. I get that they have an arrangement with AMD on the console side of things, and some of their big games like Gears 5 are partnered releases, but that shouldn't affect the PC side, where there are better solutions to increase both performance and IQ and a lot of cards available (you know what I mean) that can take advantage of them. Gears and Forza could benefit greatly from it, and although Halo Infinite's MP beta runs super smooth, once the campaign and RT updates launch it would be a smart move to implement this if possible. Keep detail high while adding performance. Right now Horizon 5 has an AA issue where the high-contrast lighting around the edges of objects appears super aliased (even with 8x MSAA), which DLSS has been shown to mitigate. The game would also greatly benefit from being able to free up some power for framerate without dumping a bunch of resolution, for those who want it. Upcoming releases like Fable, Starfield, Forza Motorsport, etc. would all see a huge benefit from using this.

First off, both images have DLSS enabled. Second, look at the entire scene on both sides. The images aren't taken from the exact same position, and CP2077 has a moving skybox, so the clouds aren't in the same position either. The video is highlighting the ghosting in the previous version and how the new DLSS eliminates it entirely.


ThatOneGrunt

Member

I was finally able to snag a 3070 Ti (at retail, thank you), and I've been blown away by DLSS. I got it wanting to use it for VR, but learned that games have to implement it for it to be available. Still, I think it's going to be key for VR titles and I can't wait.

Also, a big bummer: once I got the card, I was really excited to play through Half-Life: Alyx again. I originally played it on a 1060. It did fine, but I had to make some graphical sacrifices. I got my 3070, plugged it in, got everything running, booted up the game, and my dumb Oculus CV1 won't work. It can't stay connected to my PC, and it's discontinued by Facebook, so I can't even order a replacement cable to see if that's the issue.


Knightime_X

Member

$5 says there will STILL be 30fps games even IF it's not CPU-bound.

Because someone out there fucking hates 60fps.


digital_ghost

I am a closet furry. Don't you judge me.

$5 says there will STILL be 30fps games even IF it's not CPU-bound.

Because someone out there fucking hates 60fps.

I hate 60 faps per second. That's how you break your jimmy.

01011001

Banned

StateofMajora

Banned

It's safe to say this is now the best upscaling method. HOWEVER, when people say it gives better results than native rendering, it's important to note that DLSS is still not as sharp as native due to its temporal nature, and even if ghosting is minimal, it's still present.

For those that value sharpness over smoothness, and for games that benefit more from sharpness, native rendering will always have its place.
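The "temporal nature" point can be illustrated with a toy version of the history blending that TAA-style techniques use: each new frame is mixed into an accumulated history, which smooths aliasing but leaves trails behind moving objects unless stale history is rejected. This is a minimal 1-D sketch, not NVIDIA's algorithm (DLSS 2.x replaces hand-tuned rules like these with a trained network). The scene, the blend weight `alpha` and the 3-pixel neighborhood clamp are illustrative assumptions, and real implementations also reproject history with motion vectors, which is omitted here.

```python
# Minimal 1-D sketch of TAA-style temporal accumulation (NOT NVIDIA's DLSS).
# ASSUMPTIONS: one row of pixels, no motion-vector reprojection, fixed blend weight.

def render(frame: int, width: int = 12) -> list[float]:
    """Hypothetical scene: a bright 1-pixel object moving right on a dark background."""
    row = [0.0] * width
    row[frame % width] = 1.0
    return row


def accumulate(frames: int, alpha: float = 0.1, clamp: bool = False) -> list[float]:
    """Blend each new frame into the history: h = (1 - alpha) * h + alpha * current."""
    history = render(0)
    for f in range(1, frames):
        current = render(f)
        blended = []
        for i, c in enumerate(current):
            h = history[i]
            if clamp:
                # Neighborhood clamp: history outside the min/max of the current
                # frame's 3-pixel neighborhood is treated as stale and pulled back.
                neighbors = current[max(0, i - 1): i + 2]
                h = min(max(h, min(neighbors)), max(neighbors))
            blended.append((1 - alpha) * h + alpha * c)
        history = blended
    return history


if __name__ == "__main__":
    print("no clamp:", [round(v, 3) for v in accumulate(5)])
    print("clamped: ", [round(v, 3) for v in accumulate(5, clamp=True)])
```

With these toy settings, the pixel where the object started still holds about 0.66 after five frames without the clamp, brighter than the object's current pixel (0.1): that is the ghost trail. With the clamp, everything more than one pixel behind the object drops to 0. The trade-off is that stronger rejection leaves less history to average, so more of the original aliasing comes back.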



StateofMajora

Banned

$5 says there will STILL be 30fps games even IF it's not CPU-bound.

Because someone out there fucking hates 60fps.

I don't think so; performance modes are quickly becoming standard, to the point where it might piss console players off if there's no 60fps option.

Worst case, we see developers focus on a 30fps mode while the 60fps option is significantly worse than it should be, i.e. 4K30 > 1080p60 with lower detail, à la Guardians of the Galaxy.
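Rough arithmetic behind the 4K30 vs. 1080p60 comparison: 4K has four times the pixels of 1080p, so even at half the framerate a 4K30 mode shades twice as many pixels per second as 1080p60. The sketch assumes GPU cost scales with pixels shaded per second, a deliberate simplification that ignores CPU limits, geometry work and fixed per-frame costs.

```python
# Back-of-the-envelope pixel throughput for 4K30 vs. 1080p60.
# ASSUMPTION: GPU cost scales with pixels shaded per second (a deliberate simplification).

def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels the GPU must produce each second at a given resolution and framerate."""
    return width * height * fps


uhd_30 = pixels_per_second(3840, 2160, 30)  # 4K at 30fps
fhd_60 = pixels_per_second(1920, 1080, 60)  # 1080p at 60fps

if __name__ == "__main__":
    print(f"4K30:    {uhd_30:,} pixels/s")
    print(f"1080p60: {fhd_60:,} pixels/s")
    print(f"4K30 / 1080p60 = {uhd_30 / fhd_60:.1f}x")
```

By that crude measure, a 1080p60 mode needs only half the pixel throughput of a 4K30 mode, so in principle it has headroom left over for detail, which is the intuition behind calling a 1080p60 mode with cut detail worse than it should be.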


Bulletbrain

Member

The dream is driver-level integrated DLSS and DLAA that one can just switch on per game without any constraints whatsoever. Get to it, NVIDIA.

IntentionalPun

Ask me about my wife's perfect butthole

For those that value sharpness over smoothness, and for games that benefit more from sharpness, native rendering will always have its place.

Or from those who can't stand shimmering and other edge artifacting.

01011001

Banned

It's safe to say this is now the best upscale method. HOWEVER, when people say it has better results than native rendering, it's important to note that dlss is still not as sharp as native due to its temporal nature, and even if ghosting is minimal ,it's still present.

For those that value sharpness over smoothness, and for games that benefit more from sharpness, native rendering will always have its place.

Yeah, but in modern times what we also often get is really blurry or simply ugly TAA, and DLSS can actually look sharper than some TAA solutions out there.

For example, anyone who has played Mirror's Edge Catalyst on PC knows how fucked up TAA can be. ME Catalyst would straight up look 10x sharper if you ran it with DLSS Performance mode instead of the TAA it uses.

I played it at 1440p, and that TAA literally makes the game look like you're playing at 900p (and you can't turn it off either).


StateofMajora

Banned

I'm talking about DLSS vs. native without AA, or with MSAA.

DLSS 2.3 is always going to look sharper than TAA, at least at the same native resolution.

I would like to compare DLSS to Insomniac's temporal injection, though, to see how close they are.

01011001

Banned


On PC, TAA is a sad reality though. Most games run on generic engines and use their respective TAA solution. Some of them are pretty good, some really shit.

If developers actually tried using better AA it would be a different story, but they usually don't.

And no AA is almost always out of the question in modern games, with high-frequency detail everywhere that will turn your game into an epilepsy fest with all the shimmering lol.

Even MSAA in Forza is really not enough to smooth out everything anymore, but of course it is sharp as fuck.
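The shimmering described above is aliasing: when texture or geometry detail is finer than a pixel, sampling it once per pixel gives results that flip as the view moves. A minimal 1-D sketch, assuming a hypothetical stripe texture with four stripes per pixel width, compares point sampling (no AA) with plain supersampling, the brute-force fix mentioned earlier in the thread.

```python
import math

# Minimal 1-D sketch of why sub-pixel detail shimmers without AA (illustration only).
# ASSUMPTION: a hypothetical stripe texture much finer than a pixel, panned sideways.
STRIPES_PER_PIXEL = 4


def texture(x: float) -> float:
    """Alternating white/black stripes, each 1/STRIPES_PER_PIXEL of a pixel wide."""
    return 1.0 if math.floor(x * STRIPES_PER_PIXEL) % 2 == 0 else 0.0


def point_sample(width: int, pan: float) -> list[float]:
    """No AA: one sample at each pixel centre."""
    return [texture(i + 0.5 + pan) for i in range(width)]


def supersample(width: int, pan: float, n: int = 16) -> list[float]:
    """Supersampling: average n evenly spaced samples across each pixel."""
    return [sum(texture(i + pan + (k + 0.5) / n) for k in range(n)) / n
            for i in range(width)]


if __name__ == "__main__":
    for pan in (0.0, 0.25):  # pan the view by a quarter of a pixel
        print(f"pan={pan}: no AA {point_sample(6, pan)}  SSAA {supersample(6, pan)}")
```

Panning by a quarter pixel flips the whole no-AA row between white and black, which reads as shimmer in motion, while the supersampled row stays at 0.5, the correct average. The catch is cost: this version shades 16 samples per pixel, which is why supersampling tanks performance and why cheaper approaches like MSAA, TAA and DLSS exist.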

Dream-Knife

Banned

As a Nintendo fan, this always interests me. Hoping the Switch 2 delivers.

Nintendo plans on using their own implementation, if their patents are any clue. I'd be really curious to see the difference this would make on their current games.

Well, if Nintendo's next console adopts DLSS, they will be able to sell their console much cheaper than the competition.

I guess it can't work like that.

The dream is driver-level integrated DLSS and DLAA that one can just switch on per game without any constraints whatsoever. Get to it, NVIDIA.

What is NVIDIA DLSS, and How Will It Make Ray Tracing Faster?

At NVIDIA's CES 2019 presentation, the company showed off a new technology called DLSS.

Here's the rub: the deep learning part of DLSS requires months of processing in NVIDIA's data centers before it can be applied to PC games. So for every new game that comes out, NVIDIA needs to run its gigantic GPU arrays for a long time in order to get DLSS ready.

Once the heavy lifting is done, NVIDIA will update its GPU drivers and enable DLSS on the new games, at which point the developer can enable it by default or allow it as a graphics option in the settings menu. Because the deep learning system has to look at the geometry and textures of each game individually to improve the performance of that specific game, there's no way around this "one game at a time" approach. It will get faster as NVIDIA improves it—possibly shaving the time down to weeks or days for one game—but at the moment it takes a while.

Rentahamster

Rodent Whores

I wonder if Nvidia could ever leverage the power of their customers' GPUs and offload some of the processing needed for their machine learning to people who want to opt in. Sort of like Folding@home.

StateofMajora

Banned

No AA is preferable to a poor TAA or FXAA. For sharp, colorful, high-contrast games like Mario Odyssey, no AA would be preferable to even good TAA. Although it would be nice if Nintendo at least used SMAA 1x (an evolution of MLAA, which was a lot less blurry than FXAA) or MSAA. Obviously, Mario Odyssey needs a higher resolution as well.

Just to clarify, DLSS is going to help Nintendo a lot: going from native 1080p to 4K DLSS is going to be huge and also save tons of performance. But hypothetically, if resources were vast, native 4K with no AA would be preferable to 4K DLSS for those sharp games.

For a game like Ratchet & Clank, where the colors are more muted, their amazing TAA upscaling works great.

On PC, you can pretty much turn any TAA off if you want.

Nobody should complain about Forza Horizon at 4K plus 4x MSAA lol; aliasing is very minimal and it's sharper than damn near any other console game atm.


Makoto-Yuki

Banned

I still don't know how this works, I'm still on a measly 1060.

Does DLSS need online to work?

I'm not 100% sure of the process, but with the first version of DLSS I think developers shared their game with Nvidia, who ran things like textures through their super duper powerful AI machine learning computers. So when DLSS was turned on, the game could render at, say, 720p (thus increasing performance) but display a clean image closer to native resolution. It's basically just AI upscaling.

Back then not a lot of games supported it, but with DLSS 2.0 any game can use it; the developer just needs to support it. There's no need to send the game off to Nvidia to run through their machines, because the AI has been trained well enough to work with any game.

DLSS is only supported on RTX cards because it needs the tensor cores to run the AI algorithm. Games are rendered on the CUDA cores, DLSS runs on the tensor cores, and ray tracing is accelerated by the separate RT cores. So no, you don't need to be online to use DLSS. It's not a cloud-based technology; it runs on the GPU itself. The developer just needs to build it into the game, and Nvidia includes it in a driver update.
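Part of how a 720p render can end up looking close to native is that temporal upscalers, TAA and DLSS 2.x alike, have the game shift its camera by a different sub-pixel offset (jitter) every frame, so consecutive low-resolution frames sample different points inside each pixel and can be combined using motion vectors. A Halton (2, 3) sequence is a common way to generate those offsets; assuming any particular game uses exactly this is an assumption, and `jitter_offsets` below is a hypothetical helper.

```python
# Minimal sketch: per-frame sub-pixel camera jitter from a Halton (2, 3) sequence.
# ASSUMPTION: illustrative only; real engines choose their own sequence and length.

def halton(index: int, base: int) -> float:
    """Radical inverse of `index` in `base`: a low-discrepancy value in [0, 1)."""
    fraction, result = 1.0, 0.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def jitter_offsets(count: int) -> list[tuple[float, float]]:
    """Hypothetical helper: (x, y) offsets in pixels, centred on the pixel (-0.5..0.5)."""
    # Start at index 1: index 0 maps to 0 in every base, i.e. a pixel corner.
    return [(halton(i, 2) - 0.5, halton(i, 3) - 0.5) for i in range(1, count + 1)]


if __name__ == "__main__":
    for frame, (dx, dy) in enumerate(jitter_offsets(8)):
        print(f"frame {frame}: shift camera by ({dx:+.3f}, {dy:+.3f}) px")
```

Over a few frames the offsets spread across the pixel instead of clustering, which is what lets the accumulated result recover detail that a single low-resolution frame does not contain.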

GymWolf

Member

As far as I know, no, it doesn't. The Super Sampling comes with the latest Nvidia driver releases and is only a few megabytes in size.

You need an RTX graphics card, though.

Uh?

GymWolf

Member

I like that dude's hair

Hair, glasses and shirt are battling over which one stands out more...