What in the hell is this pixel soup? And that's with frame gen starting from a 20~30 fps baseline.

Nope. Why would I? I have a full-fledged PC.

The only reason I would get a Switch 2 is Nintendo games. A nice-to-have if I have a flight, but 2 handhelds is too much.

Like I said, in the 15W envelope. Sure, you can force things and probably break the more modern games by doing so, but that's probably ages away from DF's Series S claims.

How come even old-ass games like Death Stranding act like this?

Kneecapping the CPU, I guess, will really not play well with Series S games that aren't cross-gen. Kind of like how I don't think the Switch 2 CPU can keep up with CPU-bottlenecked Series S games.

Yes, some games need more CPU power, while others play better with more GPU power. You can't max out both, but for a lot of Unreal games maxing out the GPU can help. Locking the GPU to 1.4 GHz solves the CPU issues in basically any game the Steam Deck can run, and a 1.4 TF RDNA 2 GPU and a 1.72 TF Ampere GPU will, on average, show similar results. Some games will of course heavily favour each as needed.
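For anyone following along, here is a rough sketch of where TF figures like these come from: peak FP32 throughput is shader lanes × 2 flops per clock (one fused multiply-add) × clock speed. The Steam Deck's 512 shaders (8 CUs × 64 lanes) is the published spec. The Switch 2 numbers (1536 CUDA cores at roughly 561 MHz in handheld mode) are an assumption taken from widely reported figures, not something stated in this thread.

```python
def peak_tflops(shader_lanes: int, clock_ghz: float) -> float:
    """Theoretical FP32 peak: each lane retires one FMA (2 flops) per clock."""
    return shader_lanes * 2 * clock_ghz / 1000.0

# Steam Deck: 8 CUs x 64 lanes, GPU locked to 1.4 GHz as described above.
steam_deck_tf = peak_tflops(512, 1.4)

# Switch 2 handheld: core count and clock are ASSUMED from press reports.
switch_2_tf = peak_tflops(1536, 0.561)

print(f"Steam Deck @ 1.4 GHz: {steam_deck_tf:.2f} TF")
print(f"Switch 2 handheld (assumed specs): {switch_2_tf:.2f} TF")
```

This is a paper number only; it says nothing about how well either architecture keeps those lanes busy.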

Wow, so a complete paradigm shift in architecture is not doing the old method of rendering as well as the cards that were dedicated to it.

In fact, the 9.75 TFlops 5700 XT is on the heels of the 10.8 TFlops 6650 XT with its 32MB Infinity Cache in average benchmarks.

The more games lean on the compute pipeline concurrently with ML + RT, or use things like mesh shaders, the more efficient Ampere becomes. It's made for occupancy and concurrency across a lot more than the raster pipeline.

The 5700 XT drops out of the equation with mesh shaders.

A 3060 (12.6 TFlops) effectively averages out to the same as a 6600 XT (10.6 TFlops), even on the 15-game average at AMD Unboxed.

"significantly faster" nnnaawwwww

The 6700 XT is significantly faster than the 3060. Not the 6600 XT. That was the claim I made. As you pointed out, a 6600 XT averaged across multiple games is equivalent to a 3060. Using actual, real-world clock speeds, the 6600 XT is about ~10.8 TF, the 3060 is ~13.8 TF, and the 6700 XT is about ~12.8 TF. Nvidia's advertised boost clocks are always extremely conservative. As you can see, Ampere is a bit behind because of the dual 32 compute nature of the architecture. Equivalent-TF RDNA 2 GPUs would be around 20-30 percent faster in regular, rasterised workloads. Naturally, the Nvidia GPUs are equal or faster when actually comparing GPUs in the same tier and not just looking at the raw TF number.
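To put a number on the "20-30 percent" claim, here is a minimal sketch using only the figures in this post: if a 13.8 TF 3060 and a 10.8 TF 6600 XT tie on average, the per-TF gap falls straight out. The function name and the `perf_a_over_b` parameter are made up for illustration.

```python
def per_tf_advantage(tf_a: float, tf_b: float, perf_a_over_b: float = 1.0) -> float:
    """Percent by which card B delivers more performance per TF than card A.

    perf_a_over_b is A's average frame rate divided by B's (1.0 means tied).
    """
    perf_per_tf_a = perf_a_over_b / tf_a
    perf_per_tf_b = 1.0 / tf_b
    return (perf_per_tf_b / perf_per_tf_a - 1.0) * 100.0

# 3060 (~13.8 TF) vs 6600 XT (~10.8 TF), treated as tied on average.
rdna2_edge = per_tf_advantage(13.8, 10.8)
print(f"RDNA 2 per-TF advantage in raster: {rdna2_edge:.0f}%")
```

With those inputs it lands at roughly 28%, inside the quoted range. It is only as good as the "tied on average" premise and the real-world clock estimates.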

It's an architecture made for raster/compute + ML + RT; its compute side is not flexed if you just go pure raster. Just like future AMD graphics cards that go with a neural shader pipeline will not be raster-only anymore, they'll have to share resources around.

Well, yes. Ampere is clearly better for RT and ML workloads, but this specific discussion is mostly focussed on the raster comparison.

What about newer games?

Even without RTX or AI upscaling

How come the 3070 Ti at 21.8 TFlops is on the heels of the 6800 XT's 20.74 TFlops?

Well, two reasons in this case.

1.) It is an RT game. Ampere>RDNA2 for any RT workload.

2.) The 3070 Ti is ~23 TF and the 6800 XT is 20.74 TF. That's an 11% advantage in TF for the 3070 Ti, and the 6800 XT still outperforms it by 7%. (As mentioned, Ampere's reported clock speeds are very conservative.)

Or even stranger, above a 7700 XT's 35.2 TFlops? The fuck is happening here with RDNA 2 beating the shit out of higher-TFlops RDNA 3?

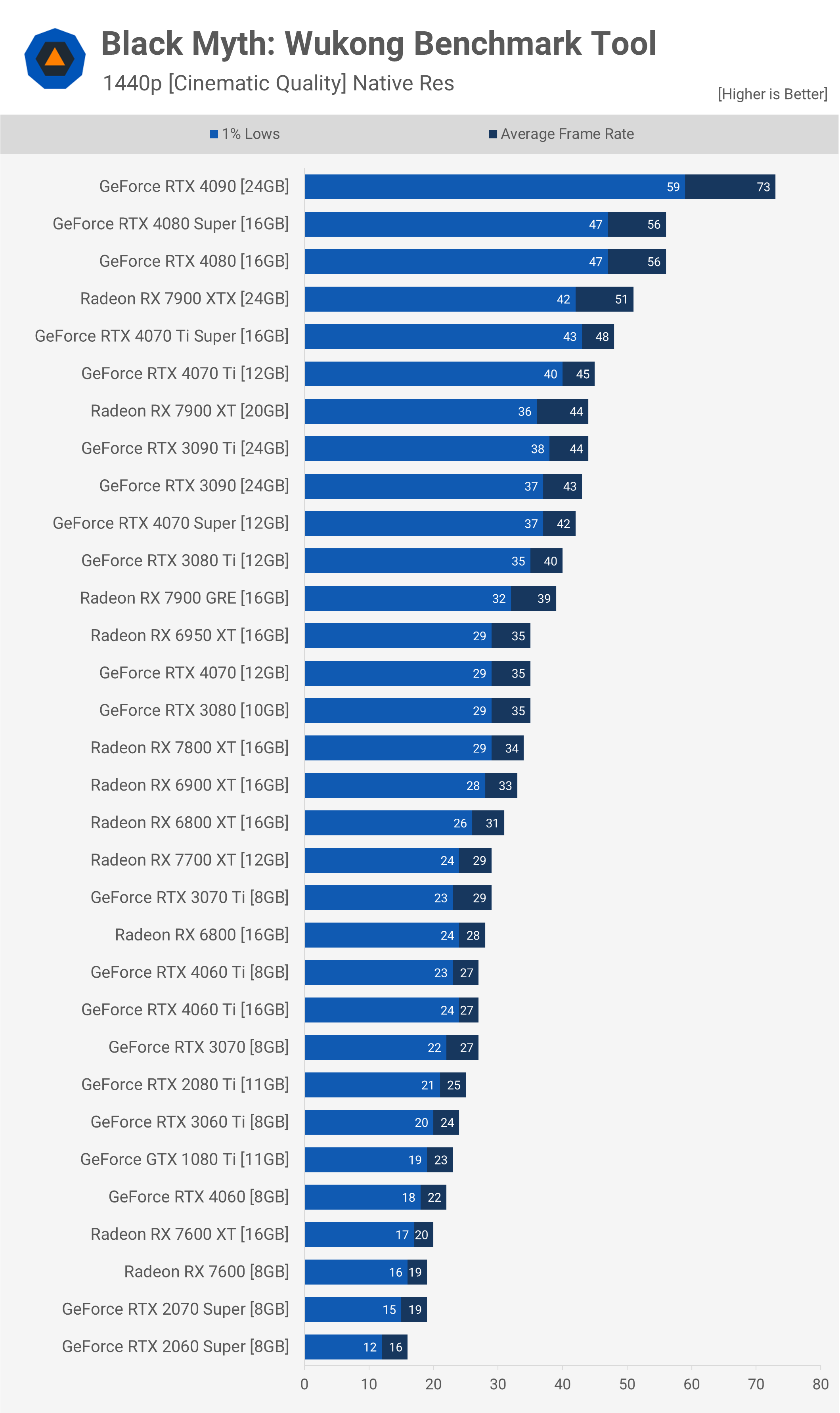

Black Myth Wukong

3070 Ti 21.8 TFlops is on the heels of the 6800 XT's 20.74 TFlops

The nonsense of RDNA 3 TFlops shows up again here against RDNA 2.

Some reasons again.

1.) The 7700 XT is not 35.2 TF, because VOPD is useless for any game, and not at all the same thing as Ampere's dual issue 32 architecture, which is an actual, usable TF number. Naturally, dual issue 32 compute doesn't magically double performance by itself due to a number of other factors, but it is infinitely more useful than VOPD. The actual 7700 XT TF number would be ~18.3 TF.

2.) Same thing as in Star Wars Outlaws for the 3070 Ti vs the 6800 XT, 11% advantage in TF, outperformed by 7% (14% if you use the lows).
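A quick sketch of how the 35.2 TF and ~18.3 TF figures relate, assuming the 7700 XT's published 3456 shaders (54 CUs × 64 lanes) and AMD's listed 2544 MHz boost clock. Neither spec is quoted in the thread, so treat both as assumptions. The marketing number counts every lane as dual-issuing via VOPD; dropping that factor halves it, and the ~18.3 TF figure then implies a real-world clock a bit above the advertised boost.

```python
SHADERS_7700XT = 3456          # assumed: 54 CUs x 64 lanes
ADVERTISED_BOOST_GHZ = 2.544   # assumed: AMD's listed boost clock

def tflops(lanes: int, clock_ghz: float, issue_width: int = 1) -> float:
    """Peak FP32 TF: lanes x 2 flops (FMA) x issue width x clock."""
    return lanes * 2 * issue_width * clock_ghz / 1000.0

dual_issue_tf = tflops(SHADERS_7700XT, ADVERTISED_BOOST_GHZ, issue_width=2)
single_issue_tf = tflops(SHADERS_7700XT, ADVERTISED_BOOST_GHZ)

# Clock needed to reach ~18.3 TF without counting dual issue.
implied_clock_ghz = 18.3 * 1000.0 / (SHADERS_7700XT * 2)

print(f"With VOPD counted:    {dual_issue_tf:.1f} TF")
print(f"Without VOPD:         {single_issue_tf:.1f} TF")
print(f"Clock behind 18.3 TF: {implied_clock_ghz:.2f} GHz")
```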

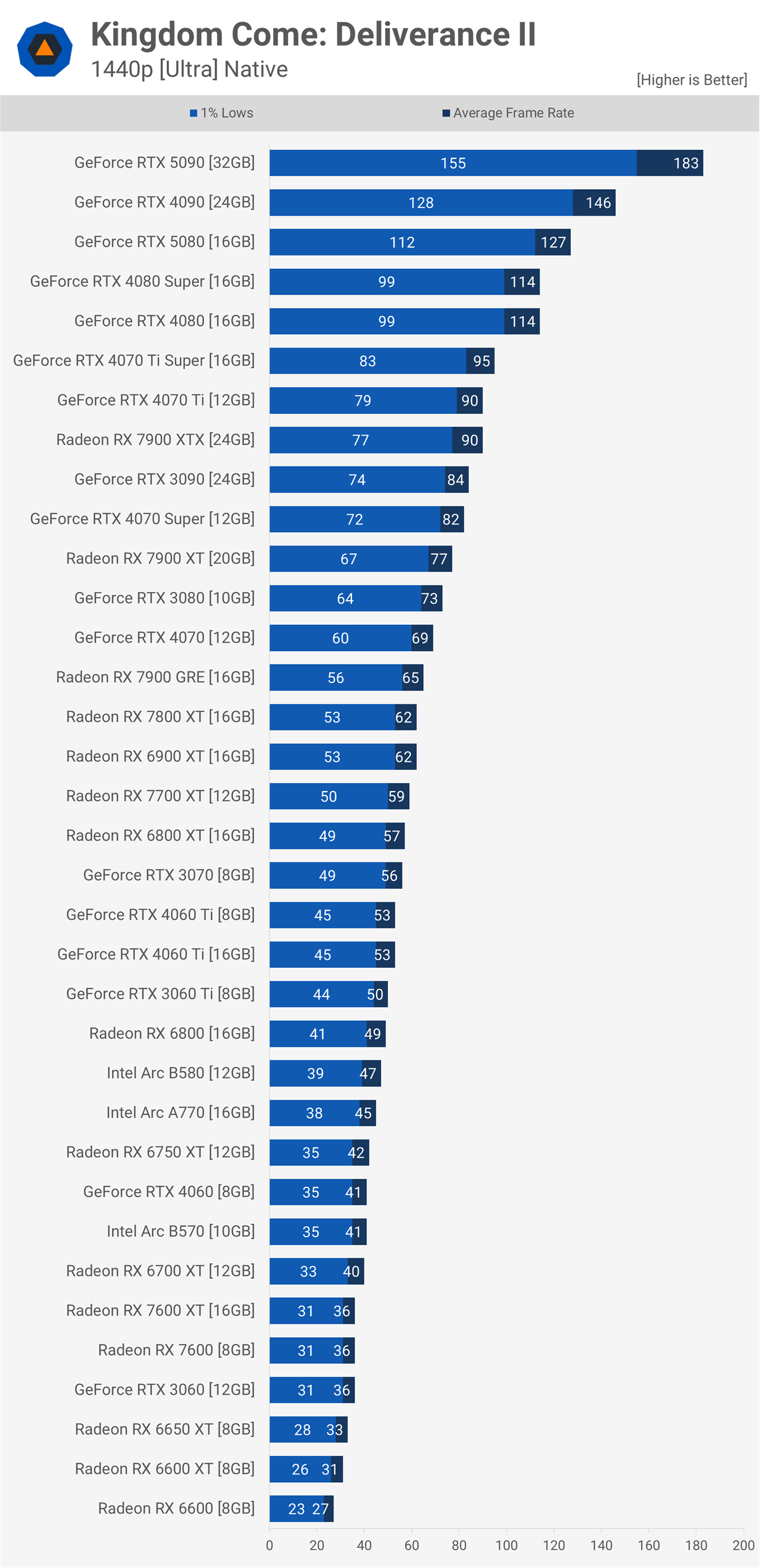

Why not KCD 2?

3060 Ti 16.2 TFlops at the same performance as the 6800 16.2 TFlops, hmmm.

The 3060 Ti is ~18.3 TF, the 6800 is ~16.9 TF. That's an 8% advantage in TF for Nvidia, but yes, AMD does not perform well in this title at all.

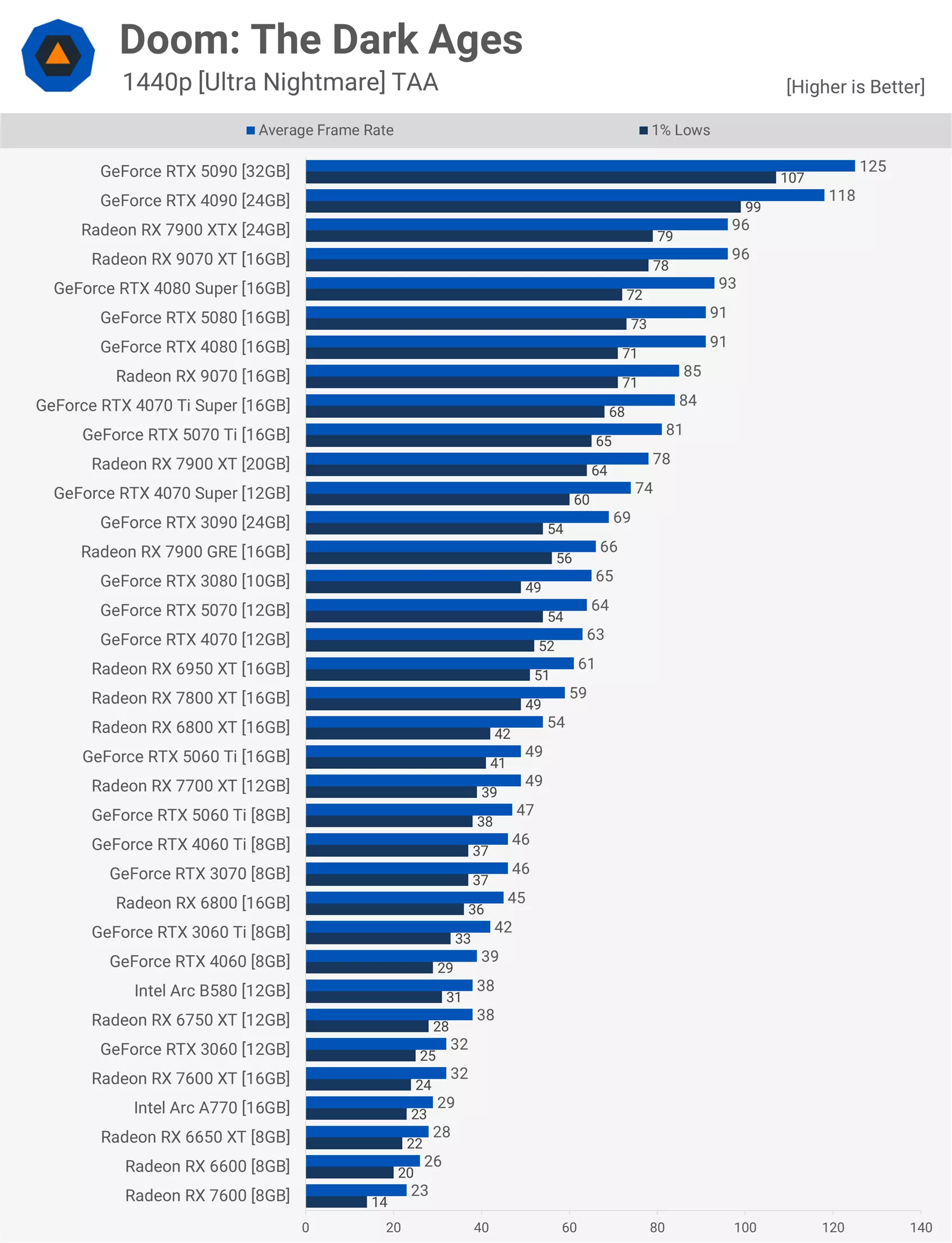

Let's see DOOM DA

3060 Ti 16.2 TFlops just a tad under the 6800 16.2 TFlops while the game showcases that AMD performs very well.

1.) RT game again.

2.) 8% TF advantage for Nvidia, and it still loses by 8%, even in this RT game.

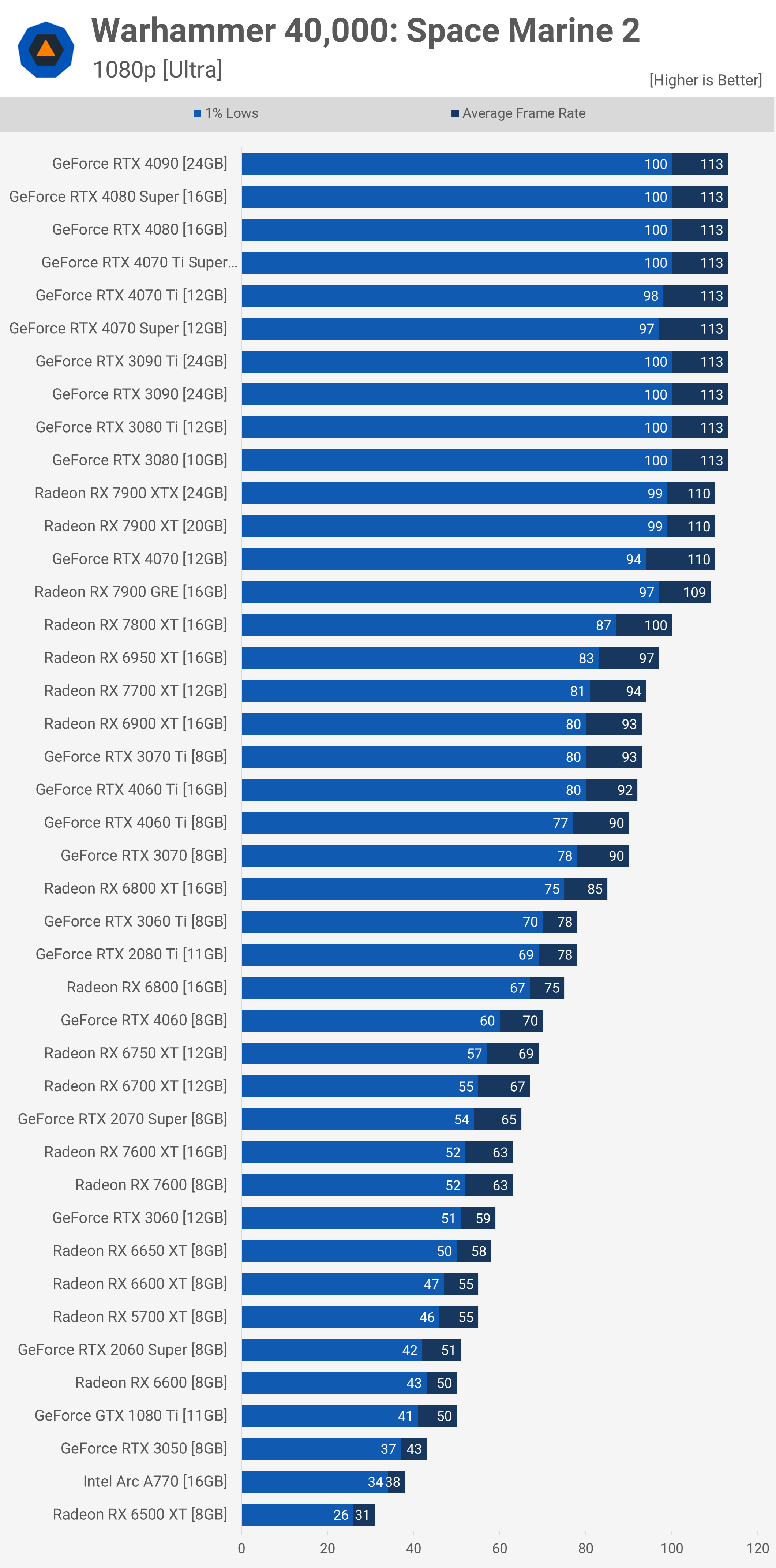

Warhammer 40k?

1080p here, 'cause clearly the 3070 Ti and 3060 Ti lose a chunk at 8GB.

3060 Ti 16.2 TFlops 4% higher than the 6800 16.2 TFlops

3070 Ti 21.8 TFlops 10% higher than the 6800 XT's 20.74 TFlops

3060 Ti 18.3 TFlops 8% higher than the 6800 16.9 TFlops (AMD loses by around 4%).

3070 Ti 23 TFlops 11% higher than the 6800 XT's 20.74 TFlops (AMD loses by around 9%)

Terrible showing for AMD here of course.

I basically picked the recent "GPU benchmarks" of modern games at TechSpot (AMD Unboxed)

I can use individual games as well.

3080 33.6 TFlops 47% higher than the 6900 XT's 22.86 TFlops (Nvidia loses by around 4%).

3080 33.6 TFlops 47% higher than the 6900 XT's 22.86 TFlops (Nvidia loses by around 14%).

3080 33.6 TFlops 47% higher than the 6900 XT's 22.86 TFlops (Nvidia loses by around 10%).

3080 33.6 TFlops 47% higher than the 6900 XT's 22.86 TFlops (Nvidia loses by around 13%).

Maybe some lower tier cards?

3060 13.8 TFlops 28% higher than the 6600 XT's 10.8 TFlops (Nvidia loses by around 4%).

3060 Ti 18.3 TFlops 43% higher than the 6700 XT's 12.8 TFlops (virtually tied).

3060 13.8 TFlops 28% higher than the 6600 XT's 10.8 TFlops (Nvidia wins by around 7%).

3060 Ti 18.3 TFlops 43% higher than the 6700 XT's 12.8 TFlops (Nvidia wins by around 7%).

3060 13.8 TFlops 28% higher than the 6600 XT's 10.8 TFlops (Nvidia wins by around 2%).

3060 Ti 18.3 TFlops 43% higher than the 6700 XT's 12.8 TFlops (virtually tied).

3060 13.8 TFlops 28% higher than the 6600 XT's 10.8 TFlops (virtually tied).

3060 Ti 18.3 TFlops 43% higher than the 6700 XT's 12.8 TFlops (Nvidia wins by 6%).
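If anyone wants to check the TF percentages in these comparisons, a minimal sketch: the TF values are the real-world-clock estimates quoted in the posts above, and the helper name is just for illustration.

```python
# (Nvidia TF, AMD TF) pairs as quoted in this thread.
pairs = {
    "3060 Ti vs 6800": (18.3, 16.9),
    "3070 Ti vs 6800 XT": (23.0, 20.74),
    "3080 vs 6900 XT": (33.6, 22.86),
    "3060 vs 6600 XT": (13.8, 10.8),
    "3060 Ti vs 6700 XT": (18.3, 12.8),
}

def tf_gap_percent(nvidia_tf: float, amd_tf: float) -> float:
    """Percent by which the Nvidia card's paper TF exceeds the AMD card's."""
    return (nvidia_tf / amd_tf - 1.0) * 100.0

gaps = {name: round(tf_gap_percent(*tf)) for name, tf in pairs.items()}

for name, gap in gaps.items():
    print(f"{name}: Nvidia +{gap}% TF")
```

The rounded gaps come out to 8, 11, 47, 28 and 43 percent, matching the figures used in the comparisons.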

Are games finally heading into the trend that the architecture paradigm shifts have set up since 2018, or is it Nvidia fine wine?

Or don't use individual games to try and compare performance? There is a reason averages are so useful.
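As a rough illustration of why averages help, here is a sketch that takes the per-game results listed in this thread and combines them with a geometric mean, which is the usual way to average performance ratios. It assumes "loses by around 4%" means Nvidia runs at 0.96× the AMD card and "virtually tied" means 1.00×; the thread does not spell that out, and four games is a tiny sample.

```python
from statistics import geometric_mean

# Nvidia frame rate relative to the AMD card, one entry per game
# (ASSUMED mapping: "loses by 4%" -> 0.96, "wins by 7%" -> 1.07, tied -> 1.00).
rtx3060_vs_6600xt = [0.96, 1.07, 1.02, 1.00]
rtx3060ti_vs_6700xt = [1.00, 1.07, 1.00, 1.06]

avg_3060 = geometric_mean(rtx3060_vs_6600xt)
avg_3060ti = geometric_mean(rtx3060ti_vs_6700xt)

print(f"3060 vs 6600 XT:    {(avg_3060 - 1) * 100:+.1f}% on average")
print(f"3060 Ti vs 6700 XT: {(avg_3060ti - 1) * 100:+.1f}% on average")
```

Single results swing from -4% to +7%, but averaged the 3060 ends up about 1% ahead of the 6600 XT and the 3060 Ti about 3% ahead of the 6700 XT, i.e. close to a tie in both cases.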

Now imagine it on NVApi. If people thought that Nvidia was unfair with DX11 because they had leeway to tweak around the API via drivers, imagine what their own API can do.

The advantages of a low-level API are well-known, thanks to the PS5. Most 3rd party games do indeed see a bump in comparison to equivalent PC hardware, but the difference is hardly that staggering, outside 1st party titles specifically tailored extensively around the architecture. Which does mean Nintendo 1st party games will look better than anything a PC handheld can do.

CPUs are more sensitive to latency than GPUs; it'll impact Zen 2 more than ARM by far. Chips and Cheese didn't have good things to say about the Van Gogh CPU paired with LPDDR5, let me tell you that.

I agree, hence why the CPU performance for the Nintendo Switch should be alright.

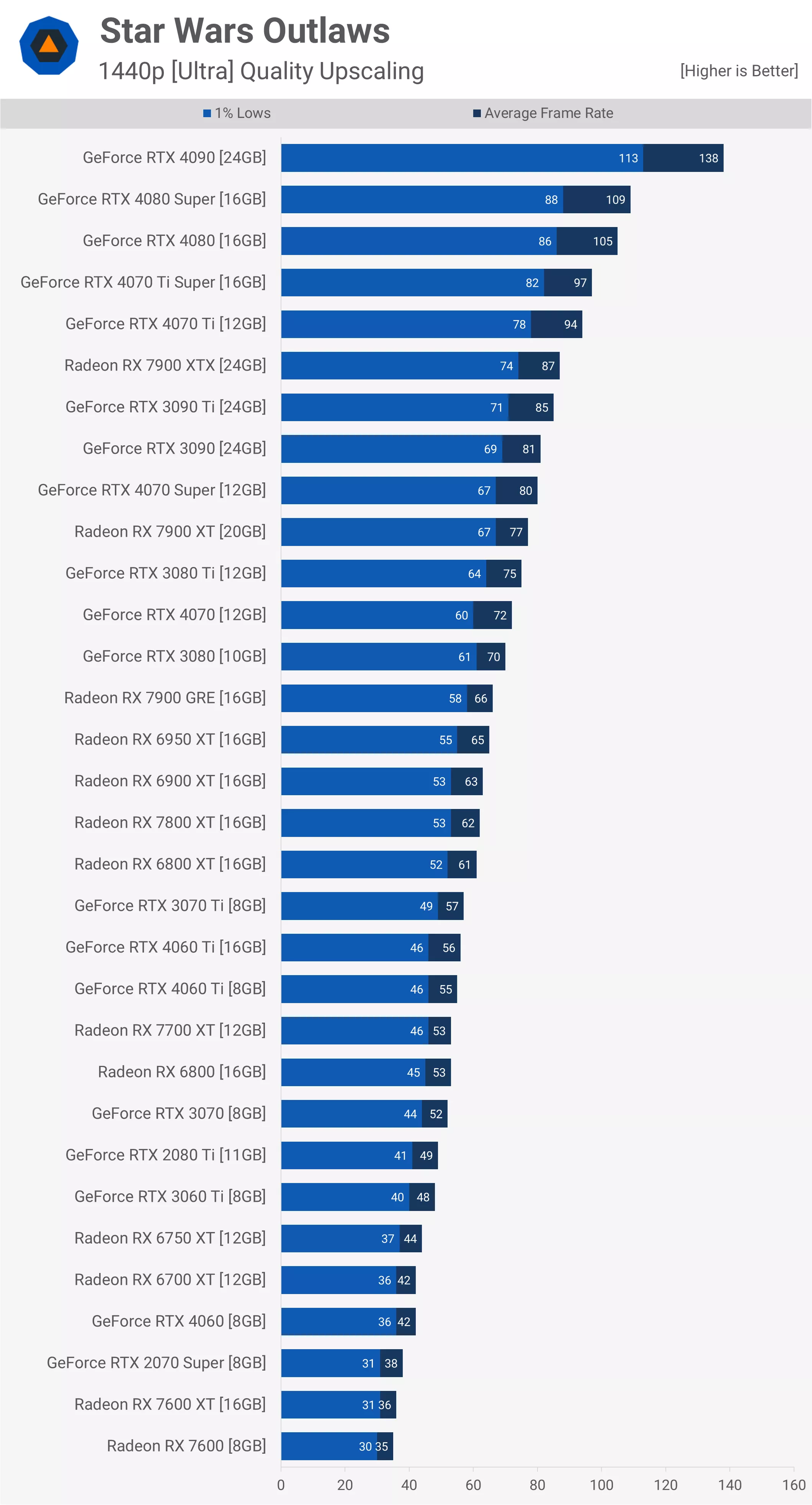

Star Wars Outlaws, you think?

If it's anywhere near the dogshit that it is on Steam Deck, Ubisoft will never release a game running like that on Switch 2. It's cute to tinker with games on a PC handheld, at your own risk and all, but selling a game on an actual console needs a minimum of decent performance, and it wasn't decent on Steam Deck, at all.

I went to the Nintendo event in Amsterdam, and DLSS is doing a lot of heavy lifting on Cyberpunk, for example. Native pixel counts look extremely low, so thank god for DLSS. If it wasn't for DLSS, I think the image quality on Steam Deck and the Switch 2 might be similar. Although, using native XeSS is possible on the Steam Deck, which makes it look quite a bit better, but that requires a lot of tweaking to get decent performance in Dogtown.