Plies

GAF's Nicest Lunch Thief and Nosiest Dildo Archeologist

You're taking things a little bit too far, don't you think?

But, as a German, does this make your eye twitch wildly?

Have some respect.

It's funny how cheap sci-fi productions always seem to contain some form of motocross gear.

U R SNOT E

This is how AI becomes the central nervous system of the whole world.

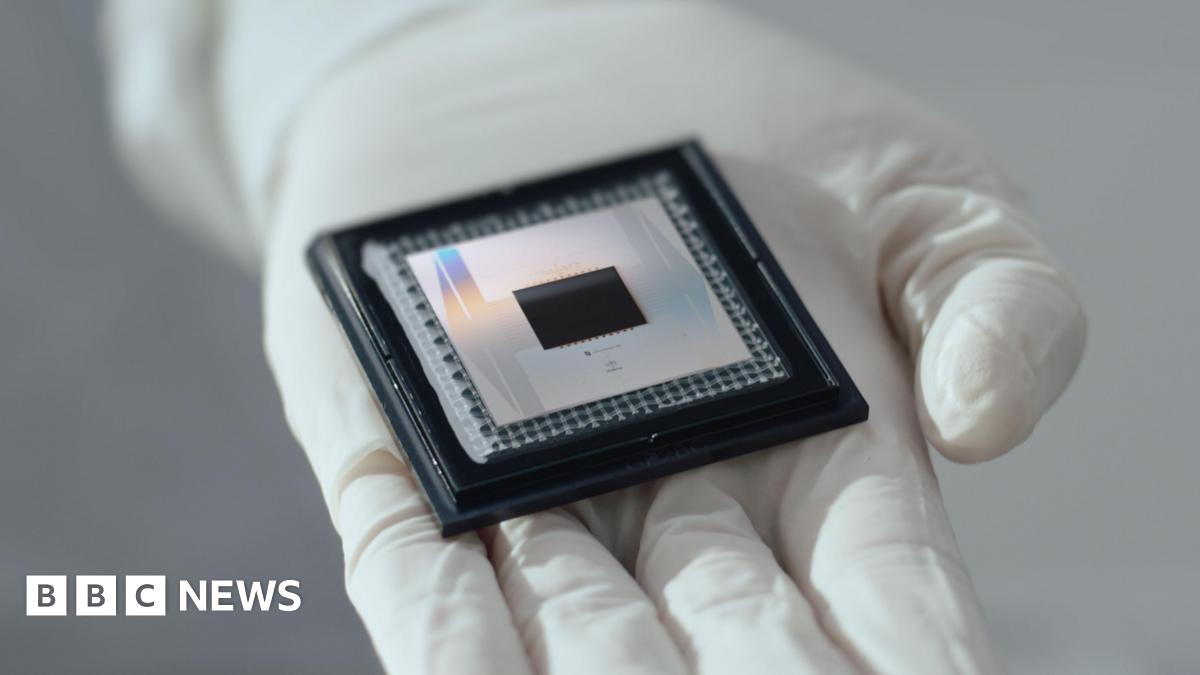

Google unveils 'mind-boggling' quantum computing chip

It solves in five minutes a problem computers now would need 10,000,000,000,000,000,000,000,000 years to work out, Google says. www.bbc.co.uk

What I find more fascinating is the error rate. So, if they run it 100 times they can get a bunch of different answers and the more they run it, the more likely it is there are errors? Kind of shoddy if true...

So they finally made a chip that's just as good as the human brain.

Digital computers work with 'bits', noughts and ones, 'a very crude approximation of reality'. But quantum computers use the qubit – the state of an atom – as a unit of computation. As we know from quantum theory, atoms can point up or down, but also spin: 'There are infinitely more states than just zeros and ones…

It is quite literally the birth of 'almost real' artificially intelligent researchers. A single Auto GPT setup on a QCPU could, in effect, simulate 10,000 human researchers working 24/7 for their entire lives and output the results every few seconds.

Uncertainty is literally the concept of a quantum computer.

Still seems like there is too much uncertainty/unreliability regarding quantum computers to get anything interesting done with them. That's my impression, at least.

It's a cool concept though.

I can't really recall my specific line of thought when I wrote that.

Uncertainty is literally the concept of a quantum computer.

We? You're on your own, buddy.

I like to think that we really are living in a simulation, and quantum computing is our way of exploiting our own code.

INSUFFICIENT DATA FOR MEANINGFUL ANSWER.

How can we reverse entropy?

Same as the guys making horseshoes in the 1700s.

So atoms are basically:

What do the researchers and lab assistants whose jobs might be on the line think of this?

Bet it still can't answer why women won't just say what they want

Dick. Respect. Enough Time To Shop.

Idiot.

Why? Because quantum computers are unimaginably more powerful than the digital sort.