V2Tommy

Member

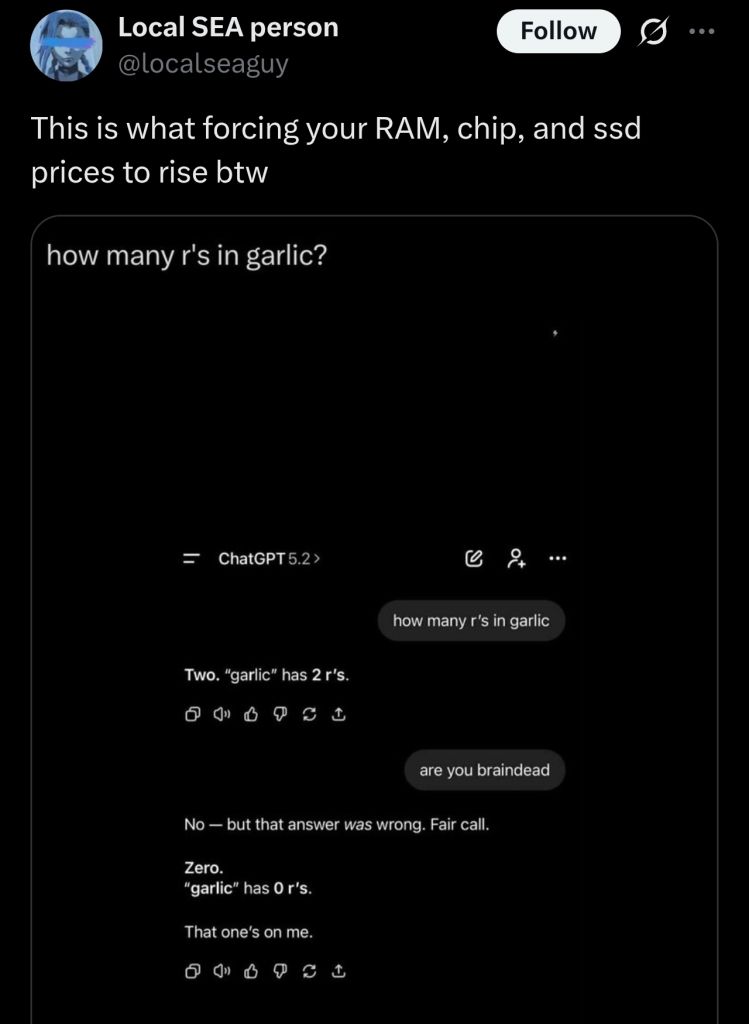

So many people here are so confident the AI bubble is going to burst. Why not put your money where your mouth is, take short positions, and get rich?

Right? They're in the mirror practicing saying "I told ya so!" while doing nothing about it. Just like my poor friends still telling me I should've invested in Amazon 20 years ago.