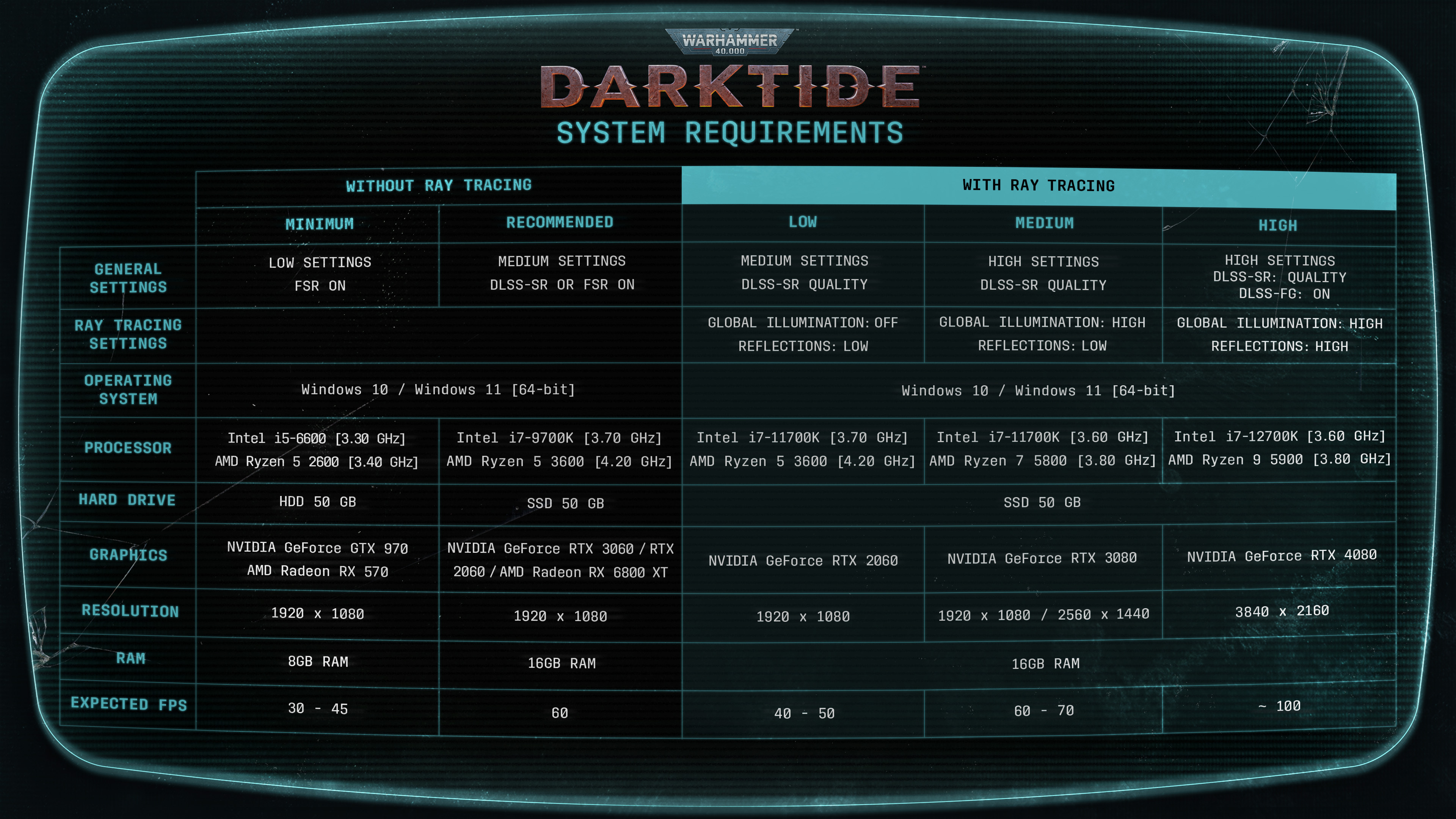

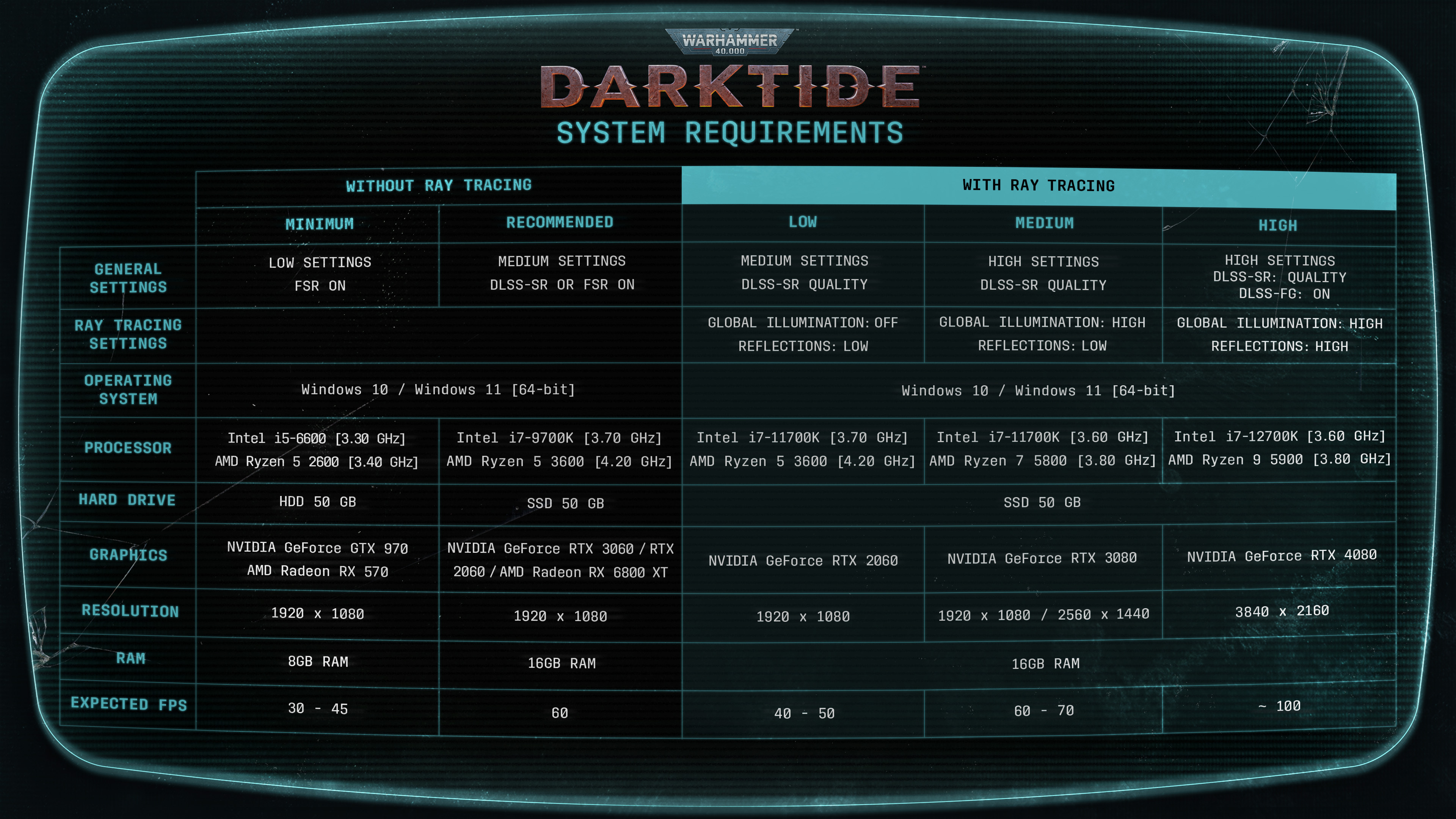

Here are the system requirements, along with a link to Fatshark's performance dev blog, which covers ray tracing, DLSS, Reflex and other aspects of the game.

"Partnering up with NVIDIA we opted to support ray tracing in our renderer and ended up implementing both RTXGI and raytraced reflections to boot. This also lays the groundwork needed for us to continue experimenting with additional ray tracing features down the line which carries the promise of further improving things like shadows, transparency rendering and VFX (visual effects such as particles). We also decided to support other RTX features like DLSS and Reflex to further improve frame times and response times of the game."

"Another added benefit of the RTXGI implementation we ended up going with is actually that we decided to replace our baked ambient light solution with baked RTXGI probe grids. This allows us to use RTX cards on our development machines to quickly bake GI that can be applied to our scenes even for GPUs that do not have enough power to push advanced ray tracing features like this. You won't get the added benefit of the GI being fully dynamic that you get if you have a powerful GPU in your machine, of course, but the static GI still retains the nice dark feeling in our scenes that would otherwise be very flat and boring."

store.steampowered.com

Warhammer 40,000: Darktide - DEV BLOG: PERFORMANCE - Steam News

Darktide Performance Deep Dive & System Requirements Interested in learning more about the Pre-order Beta before you read the Performance Deep Dive? Read more here! Hello! I am Rikard Blomberg, Chief Technical Officer and co-founder of Fatshark. The first computer I did programming on (or even...