It might be "quite a lot", but this is still a massive step towards bringing path tracing to real-time rendering (as opposed to merely interactive rendering).

If the cost of the technique scales linearly, it could become trivial within two GPU generations, especially as the techniques themselves are improving rapidly.

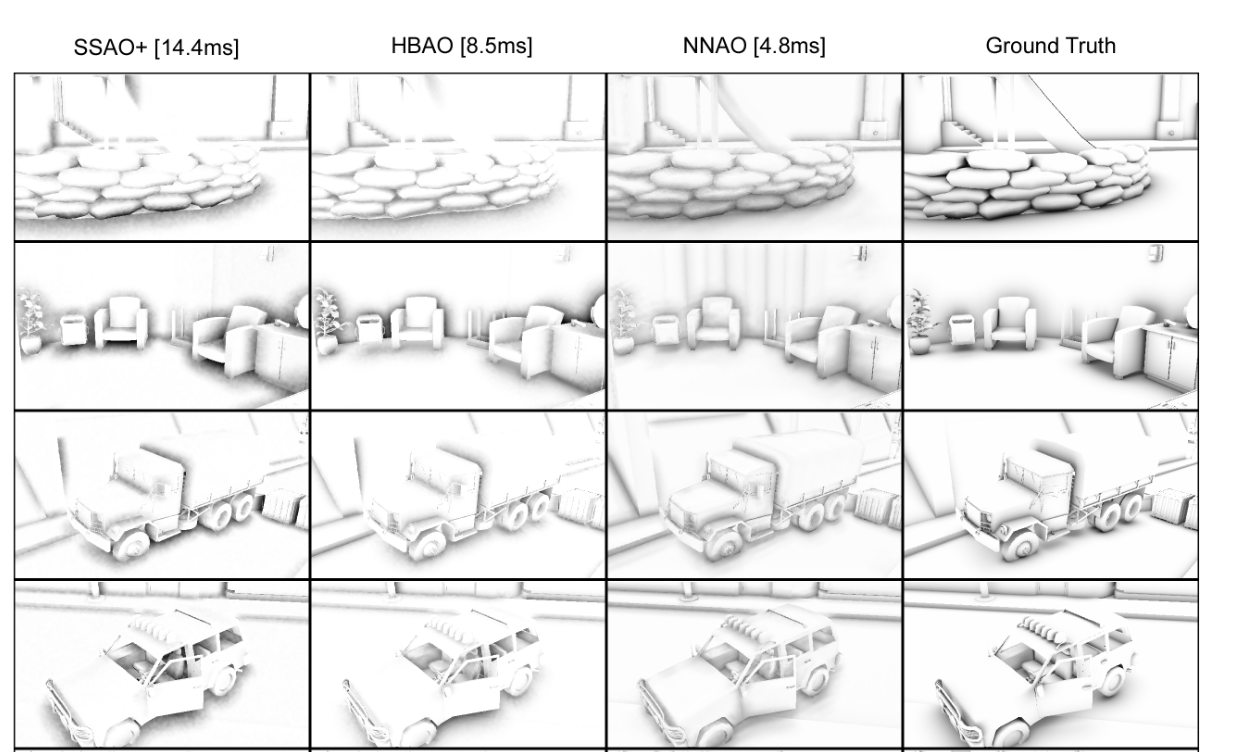

Another similar technique, published at nearly the same time, is this one. If there is something I find problematic, it is that the denoising process (especially in this latter technique, in my opinion) seems to lose a lot of material detail, making things look somewhat flat or overly smooth. This throws away one of the advantages of using path tracing in the first place, although it may simply be because the scenes themselves are not very detailed to begin with.