
Pagusas

Elden Member

RX XT X the naming is all over the place.

Is my 3700X still relevant, or did it become obsolete crap today?

Still a great CPU that will take you through all of next gen at 4k without issue.

Rentahamster

Rodent Whores

That looks very impressive. I can't wait to see the new Threadrippers.

Intel's "look at me" blog post from yesterday about Rocket Lake feels lame by comparison. The review embargoes for Ryzen 5000 can't be lifted fast enough.


Pagusas

Elden Member

That looks very impressive. I can't wait to see the new Threadrippers.

Intel's "look at me" blog post from yesterday about Rocket Lake feels lame by comparison. The review embargoes for Ryzen 5000 can't be lifted fast enough.

The one thing I will say for Intel is they don't have that much of a performance delta to make up for.

gunslikewhoa

Member

sexy

Thank you to Micro Center for always making CPUs their loss leader.


Pagusas

Elden Member

sexy

Thank you to Micro Center for always making CPUs their loss leader.

My favorite part of living in Dallas is finally having a Micro Center nearby.

The one thing I will say for Intel is they don't have that much of a performance delta to make up for.

While true, I am glad AMD is finally giving them some competition. I have felt no reason to upgrade my 2600K until now.

Pagusas

Elden Member

While true, I am glad AMD is finally giving them some competition. I have felt no reason to upgrade my 2600K until now.

Same; this is the first time I've felt any urge to upgrade my 6700K.

gunslikewhoa

Member

My favorite part of living in Dallas is finally having a Micro Center nearby.

Minor as it is, it would honestly make my list of pros and cons when deciding where to move.

Papacheeks

Banned

The one thing I will say for Intel is they don't have that much of a performance delta to make up for.

In single-threaded, no. In multi-threaded? They have some work to do on efficiency and performance.

gunslikewhoa

Member

So do you guys reckon the 3800X or 3900X will go down in price now?

Also, will the 3800X be good enough for gaming for around 3-4 years?

No and yes, imo.

Black_Stride

do not tempt fate do not contrain Wonder Woman's thighs do not do not

RX XT X the naming is all over the place.

Is my 3700X still relevant, or did it become obsolete crap today?

It pretty much justified whatever price you paid for it, with the 5800X coming in at 450 dollars.

Your generation is all but sorted now, until next-gen consoles or refreshes show up.

AMD goofed on the pricing there: either release a 5700X or drop the price by at least 50 dollars.

3700Xs can be had for under 300 dollars today... the 5800X is not 150 dollars better than a 3700X.

And it's definitely not in the ballpark of a 3900X.

There is no reason to buy the 5800X.

Gaming... it's not 150 bucks better than a 3700X.

Productivity... you can get a 3900X.

New features... yeah, about that.
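To put the "not 150 dollars better" point in numbers: a minimal sketch, assuming the 3700X at $300 (the upper bound of "under 300") and the 5800X at $450 as quoted above, that works out how much faster the 5800X would have to be just to match the 3700X on performance per dollar. The function name is purely illustrative.

```python
# Assumed prices taken from the posts above: 3700X "under 300" (treated as $300)
# and 5800X at $450. Real street prices will vary.
PRICE_3700X = 300.0
PRICE_5800X = 450.0


def breakeven_uplift(old_price: float, new_price: float) -> float:
    """Relative performance gain the pricier chip needs to match the cheaper
    chip's performance per dollar (0.5 means it must be 50% faster)."""
    return new_price / old_price - 1.0


premium = PRICE_5800X - PRICE_3700X
uplift = breakeven_uplift(PRICE_3700X, PRICE_5800X)

print(f"Price premium: ${premium:.0f}")
print(f"The 5800X must be {uplift:.0%} faster to match the 3700X on perf per dollar")
```

A 50% price premium needs a 50% performance gain just to break even on value, which is a much bigger jump than a single CPU generation usually delivers in games.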

Seems slower than the RTX 3080? I checked TechPowerUp for 4K benchmarks of Gears 5 and Borderlands 3 with the allegedly slowest 3080 (Zotac Trinity). Price will be the deciding factor, I guess.

TPU tests Borderlands 3 at Ultra on DX11, which gets higher frame rates than Badass on DX12, so it is not comparable.

Gears 5 depends on whether they use the built-in test or gameplay testing, because TechSpot (Hardware Unboxed) gets 72 fps for the 3080 FE in Gears.

Pagusas

Elden Member

Seems slower than the RTX 3080? I checked TechPowerUp for 4K benchmarks of Gears 5 and Borderlands 3 with the allegedly slowest 3080 (Zotac Trinity). Price will be the deciding factor, I guess.

How the hell did Zotac manage to be SLOWER than the FE???

Iorv3th

Member

My favorite part of living in Dallas is finally having a Micro Center nearby.

That's where I will be going to pick mine up on launch day.

Knitted Knight

Banned

Looking great. Strategy is sound.

PhoenixTank

Member

TPU tests Borderlands 3 at Ultra on DX11, which gets higher frame rates than Badass on DX12, so it is not comparable.

Indeed. Assuming AMD used the in-game benchmark for BL3, we can use Eurogamer's 3080 review as an acceptable-ish comparison: DX12/Badass/TAA.

They mention 65 above and to the right of the line chart but 60.9 as the mean (I think?) for the bar graph.

Honestly that is pretty good considering how comparatively strong the 3080's performance is at 4K vs 1080p & 1440p.

If Big Navi's CUs aren't similarly underutilized at lower resolutions this could turn out closer than rumours would suggest.

Way too early to take this with anything but a mine's worth of salt. Just one game. It's so much better to get apples to apples on a variety of games from one outlet when comparing (and then check against other outlets to avoid outliers).

Wait, isn't this the Zen 3 thread anyway?


LordOfChaos

Member

Oh shit, apparently people are receiving BIKE?

martino

Member

Indeed. Assuming AMD used the in-game benchmark for BL3, we can use Eurogamer's 3080 review as an acceptable-ish comparison: DX12/Badass/TAA.

They mention 65 above and to the right of the line chart but 60.9 as the mean (I think?) for the bar graph.

Honestly that is pretty good considering how comparatively strong the 3080's performance is at 4K vs 1080p & 1440p.

If Big Navi's CUs aren't similarly underutilized at lower resolutions this could turn out closer than rumours would suggest.

Way too early to take this with anything but a mine's worth of salt. Just one game. It's so much better to get apples to apples on a variety of games from one outlet when comparing (and then check against other outlets to avoid outliers).

Wait, isn't this the Zen 3 thread anyway?

Also, are those benches using a 5950X CPU? That can impact them, since it will be top of the game.

Unknown Soldier

Member

How the hell did Zotac manage to be SLOWER than the FE???

You're not familiar with Zotac, are you? This is normal for Zotac. Their stuff is cheap, sure, but never try to figure out why; you won't like the answer.

Ascend

Member

I'll say it again: just imagine Intel on the 10nm process if they're keeping up with AMD on 7nm. AMD at 5nm will get destroyed, as they are only now faster than Intel at 14nm.

Yeah. Keep imagining. Because that's the only place where that's happening.

The Skull

Member

I'll say it again: just imagine Intel on the 10nm process if they're keeping up with AMD on 7nm. AMD at 5nm will get destroyed, as they are only now faster than Intel at 14nm.

I'll take whatever you are smoking.

LordOfChaos

Member

JohnnyFootball

GerAlt-Right. Ciriously.

So do you guys reckon the 3800X or 3900X will go down in price now?

Also, will the 3800X be good enough for gaming for around 3-4 years?

It will be good. Whether it will have long legs like Intel processors have remains to be seen. Of course, Intel could blow the doors off if they have another Core 2 moment.


PhoenixTank

Member

Also, are those benches using a 5950X CPU? That can impact them, since it will be top of the game.

There should be absolutely minimal CPU bottleneck at 4K 60-70 fps, regardless of whether a 10900K or 5950X is used.

Seeing the presentation, I don't know. I mean, Intel is looking a lot better given I can get a deal on a 10700K combo right now and use cheaper memory. If I want to do a 5800X, I have to wait a month, use more expensive memory, and hope that they have enough volume to cover any scalping. (Which, given the PS5, XSX, 3080, and 3090, is not as certain as I would have thought a month ago.) Oh, and forget any deals until probably around January. I mean, something this new with this much hype at this time of year? I figure no deals and scalping. (You know, also assuming that they're not exaggerating their performance.)

ResilientBanana

Member

Yeah. Keep imagining. Because that's the only place where that's happening.

Just like how Big Navi was supposed to overtake the 3080? That is where my imagination is at.

Ascend

Member

Just like how Big Navi was supposed to overtake the 3080? That is where my imagination is at.

The performance shown is on par with an RTX 3080. And we don't know if it's the full die with zero CUs disabled...

SLESS

Member

Anyone in the know care to provide some insight into the possible benefits of the Ryzen 7 5800X (8 cores/16 threads) vs the Ryzen 5 5600X (6 cores/12 threads) specifically for gaming? They both have the same 32MB cache pool. The 5800X has 100 MHz higher base and boost clocks. Are the extra cores worth the extra money and the need to purchase a third-party cooler?

Rentahamster

Rodent Whores

Anyone in the know care to provide some insight into the possible benefits of the Ryzen 7 5800X (8 cores/16 threads) vs the Ryzen 5 5600X (6 cores/12 threads) specifically for gaming? They both have the same 32MB cache pool. The 5800X has 100 MHz higher base and boost clocks. Are the extra cores worth the extra money and the need to purchase a third-party cooler?

The Xbox and PS5 both have 8 core CPUs, so as next gen matures, I would imagine that most multiplat games would be designed with that in mind as the norm.

I don't see why you wouldn't want to go there unless you're really on a budget. In the short term, 6 cores is perfectly fine, but if you want to future-proof, 8 cores are safer.

supernova8

Banned

Also, don't forget they promise an FPS uplift in games because of Zen 3, so you have to add that to the 3080 too, as it will also get an uplift with those CPUs. So it seems the GPU stacks in somewhere between the 3070 and 3080, as expected. I wonder how RTX performance will be, though, and whether they have any kind of DLSS competitor.

Doesn't that CPU Zen 3 uplift generally only apply at lower resolutions like 1080p?

If they're showing at 4K then I'm expecting there won't be as much of a difference (hence why they chose to show games running at 1080p for the CPU and yet 4K for the GPU).

supernova8

Banned

The Xbox and PS5 both have 8 core CPUs, so as next gen matures, I would imagine that most multiplat games would be designed with that in mind as the norm.

I don't see why you wouldn't want to go there unless you're really on a budget. In the short term, 6 cores is perfectly fine, but if you want to future-proof, 8 cores are safer.

So basically:

6 core 12 thread - $300

8 core 16 thread - $450

12 core 24 thread - $550

Personally I would save up a bit longer and just get the 5900X.
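A quick price-per-core check is one way to see why saving up for the 5900X makes sense. This is a rough sketch that assumes AMD's announced launch prices ($299 for the 5600X, $449 for the 5800X, $549 for the 5900X) and ignores clocks, cache and cooler costs; street prices will differ.

```python
# Assumed launch prices (USD) for Ryzen 5000; core counts as discussed above.
chips = {
    "5600X": (6, 299),
    "5800X": (8, 449),
    "5900X": (12, 549),
}


def price_per_core(cores: int, price: float) -> float:
    """Dollars paid per physical core."""
    return price / cores


# Rank the chips from cheapest to most expensive per core.
ranking = sorted(chips, key=lambda name: price_per_core(*chips[name]))

for name in ranking:
    cores, price = chips[name]
    print(f"{name}: {cores} cores, ${price}, ${price_per_core(cores, price):.2f} per core")
```

On these assumed prices the 12-core part is the cheapest per core and the 8-core part the most expensive, which lines up with the "skip the 5800X" sentiment earlier in the thread. Per-core cost is only a proxy, though, since few games scale to 12 cores.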

I already have a 3900X, so I'm personally in no rush whatsoever to upgrade my CPU, but if I were on Zen+ instead of Zen 2 I might upgrade.

RNG

Member

Anyone in the know care to provide some insight into the possible benefits of the Ryzen 7 5800X (8 cores/16 threads) vs the Ryzen 5 5600X (6 cores/12 threads) specifically for gaming? They both have the same 32MB cache pool. The 5800X has 100 MHz higher base and boost clocks. Are the extra cores worth the extra money and the need to purchase a third-party cooler?

Like others have said, just save a bit more and get at least an 8-core CPU.

rnlval

Member

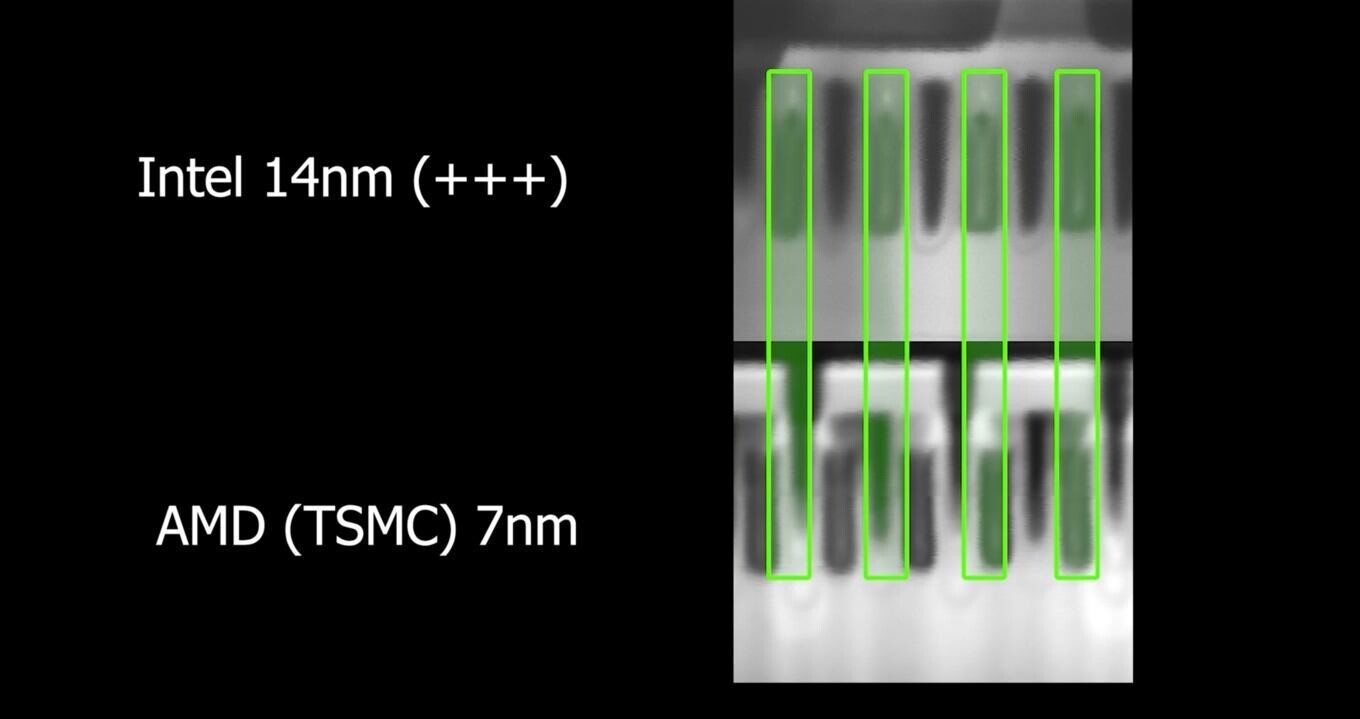

AMD is using a superior process, but it's not as far ahead as the naming implies (7 vs 14nm; yes, the 10xxx parts are 14nm).

As an examination of the chips revealed, AMD's transistors are 22×22 nm, while Intel's are 24×24 nm.

Intel 14 nm Node Compared to TSMC's 7 nm Node Using Scanning Electron Microscope

Currently, Intel's best silicon manufacturing process available to desktop users is their 14 nm node, specifically the 14 nm+++ variant, which features several enhancements so it can achieve higher frequencies and allow for faster gate switching. Compare that to AMD's best, a Ryzen 3000 series...

Specifically, Intel's "14 nm+++" (Coffee Lake generation) gate width is close to TSMC's 7nm (Zen 2 generation).

It's not wise to compare vendor A's nm PR marketing to vendor B's nm PR marketing.
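The "not as far ahead as the naming implies" point can be checked with simple arithmetic. A minimal sketch, treating each transistor as a square footprint with the side lengths quoted above (a simplification, since real cell layouts are not square), and comparing the measured area ratio with what "7nm vs 14nm" would suggest if the node names were literal feature sizes:

```python
# Footprint side lengths (nm) as quoted in the post above. Modelling a
# transistor as a square of that side is a simplifying assumption.
TSMC_7NM_SIDE = 22.0
INTEL_14NM_SIDE = 24.0


def square_area(side_nm: float) -> float:
    """Area in nm^2 of a square footprint with the given side length."""
    return side_nm * side_nm


measured_ratio = square_area(TSMC_7NM_SIDE) / square_area(INTEL_14NM_SIDE)

# If "7nm" and "14nm" were literal feature sizes, area would scale with the square.
naming_ratio = (7 / 14) ** 2

print(f"Measured: the TSMC footprint is {measured_ratio:.0%} of Intel's")
print(f"Node names would imply: {naming_ratio:.0%}")
```

Taken literally, the names suggest roughly a 4x density gap, while the quoted measurements work out to only about a 16% smaller footprint.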

CuNi

Member

Doesn't that CPU Zen 3 uplift generally only apply at lower resolutions like 1080p?

If they're showing at 4K then I'm expecting there won't be as much of a difference (hence why they chose to show games running at 1080p for the CPU and yet 4K for the GPU).

Depends on the game.

AFAIK, for example, AC: Odyssey has a 10 fps difference between an i9-10900K and a 3900X on an RTX 3080.

So it really comes down to how the rendering pipeline is made. Many games will show no difference because, as you said, you should be GPU-bound, but unfortunately there are also plenty of games out there that are to this day not very well made and will sadly benefit from a faster CPU even at 4K.

Edit: Nonetheless, RDNA2 looks promising. I think it will come down to how AMD prices them. If they price it $100 cheaper, it could really disrupt 3070 sales. If it's priced at $699 like the 3080, then I think it won't do as well as it could, because the 3080 will still outperform it and offer RTX/DLSS on top.


supernova8

Banned

Depends on the game.

AFAIK, for example, AC: Odyssey has a 10 fps difference between an i9-10900K and a 3900X on an RTX 3080.

So it really comes down to how the rendering pipeline is made. Many games will show no difference because, as you said, you should be GPU-bound, but unfortunately there are also plenty of games out there that are to this day not very well made and will sadly benefit from a faster CPU even at 4K.

Yeah, that's a good point. Of course AMD, Intel and NVIDIA will all cherry-pick games and applications that show them in a good light.

What's interesting is that AMD essentially had to refine 7nm to beat Intel's 14nm(++++++++++++++++++++++++++++++++++++++++++). If you think of it that way, then when Intel moves to a smaller node they may start to rip Ryzen to pieces. AMD might pull some more rabbits out of the hat in the meantime, but I would probably respect AMD more if they beat NVIDIA rather than Intel.

Beating Intel at this point is like beating a has-been one-legged guy in a 100m sprint, whereas beating NVIDIA is basically beating Usain Bolt.

smbu2000

Member

So it looks like the 5000 series is only slightly better than a 10900K in gaming? That's pretty disappointing.

Disappointing? Look at how the 3000 series compared to the 10900K in gaming, and then look at the 5000 series. The 5000 series is a massive increase in performance and overtakes Intel in the only area where they were leading, as AMD leads in pretty much everything else. Now Intel doesn't even have "best gaming performance" to fall back on.

The price increase is a bit rough, but Zen 3 is better than Intel in every other metric.

SLESS

Member

The Xbox and PS5 both have 8 core CPUs, so as next gen matures, I would imagine that most multiplat games would be designed with that in mind as the norm.

I don't see why you wouldn't want to go there unless you're really on a budget. In the short term, 6 cores is perfectly fine, but if you want to future-proof, 8 cores are safer.

Like others have said, just save a bit more and get at least an 8-core CPU.

Thank you both, this makes sense. I will get the 8-core.

sxodan

Member

I'll say it again: just imagine Intel on the 10nm process if they're keeping up with AMD on 7nm. AMD at 5nm will get destroyed, as they are only now faster than Intel at 14nm.

That's not how it works, buddy. There's a reason why Intel has been stuck on 14nm for so long.


CuNi

Member

Yeah, that's a good point. Of course AMD, Intel and NVIDIA will all cherry-pick games and applications that show them in a good light.

What's interesting is that AMD essentially had to refine 7nm to beat Intel's 14nm(++++++++++++++++++++++++++++++++++++++++++). If you think of it that way, then when Intel moves to a smaller node they may start to rip Ryzen to pieces. AMD might pull some more rabbits out of the hat in the meantime, but I would probably respect AMD more if they beat NVIDIA rather than Intel.

Beating Intel at this point is like beating a has-been one-legged guy in a 100m sprint, whereas beating NVIDIA is basically beating Usain Bolt.

As far as I understand, those "nm" numbers are only marketing by now. They began as the actual node size, but since then they have mostly been used to show progress. Nothing in those CPUs really measures 14nm or 7nm.

Also note that AMD uses TSMC 7nm while Intel uses its own fabs. TSMC 7nm is only slightly smaller than Intel 14nm, but size alone obviously is not everything. Intel's problem is that they were too ambitious with 10nm, which is why they ran into such big problems.

AMD is in front and Intel will be behind for a while now. Intel's next chips are slated for either December 2020 or, more likely, 1H 2021. 2H 2021 will mark the Zen 4 launch with DDR5 etc., so Intel is now playing catch-up with AMD for the foreseeable future.

AMD vs NVIDIA is completely the other way around, as you said. AMD is facing a competitor there who did not cripple itself with overconfidence and is still dominating the GPU market aggressively to this day.

The fact that NVIDIA bought ARM is not going to make things easier for AMD there either.

That's not how it works, buddy. There's a reason why Intel has been stuck on 14nm for so long.

Yes, because AMD is using TSMC and Intel is using its own fabs.

Intel 14nm is, as you can clearly see, quite competitive with TSMC 7nm. Intel 10nm will probably outperform TSMC 7nm, and so on.

There are multiple reasons why Intel is stuck on 14nm. For one, let's not deny it, they took their leading spot way too much for granted, and secondly they set the bar way too high for their 10nm process.

The only problem for Intel as of now is that AMD is leading. It used to be AMD releasing a stronger CPU just for Intel to swoop in and make it obsolete again after 2-3 months. Now Intel is not expected to be really competitive until 2022/2023 at the earliest, so it will be Intel showing new CPUs just for AMD to swoop in and win the crown again.

If Intel skips its 10nm and goes straight to Intel 7nm, I wouldn't be so sure about AMD being the king anymore.


mitchman

Gold Member

Doesn't that CPU Zen 3 uplift generally only apply at lower resolutions like 1080p?

If they're showing at 4K then I'm expecting there won't be as much of a difference (hence why they chose to show games running at 1080p for the CPU and yet 4K for the GPU).

Some games are very CPU-bound even at higher resolutions, e.g. Flight Sim 2020. In general I agree with you, but it's not guaranteed to apply to all games.

llien

Banned

Yeah, that's a good point. Of course AMD, Intel and NVIDIA will all cherry-pick games and applications that show them in a good light.

AMD has been pretty decent on that front.

They were showing figures for a wide range of games.

On their slides, 5% looks like 5%, unlike on NV slides.

Also, when AMD says it is X%, it is more or less X%, unlike team green.

Alexios

Cores, shaders and BIOS oh my!

Disappointing? Look at how the 3000 series compared to the 10900K in gaming, and then look at the 5000 series. The 5000 series is a massive increase in performance and overtakes Intel in the only area where they were leading, as AMD leads in pretty much everything else. Now Intel doesn't even have "best gaming performance" to fall back on.

The price increase is a bit rough, but Zen 3 is better than Intel in every other metric.

Did our unbiased Leo make an "AMD, New King in Gaming" thread then? No? But why?

I'd wait for potential price cuts to match any coming from Intel, though. They have room: as Linus explains, they actually priced their models a bit higher than Intel's equivalent offerings this time, and I'm sure Intel will try to look more attractive by lowering prices further.


Armorian

Banned

I love that he was happy that Intel was still "winning" with 2015 cores.