winjer


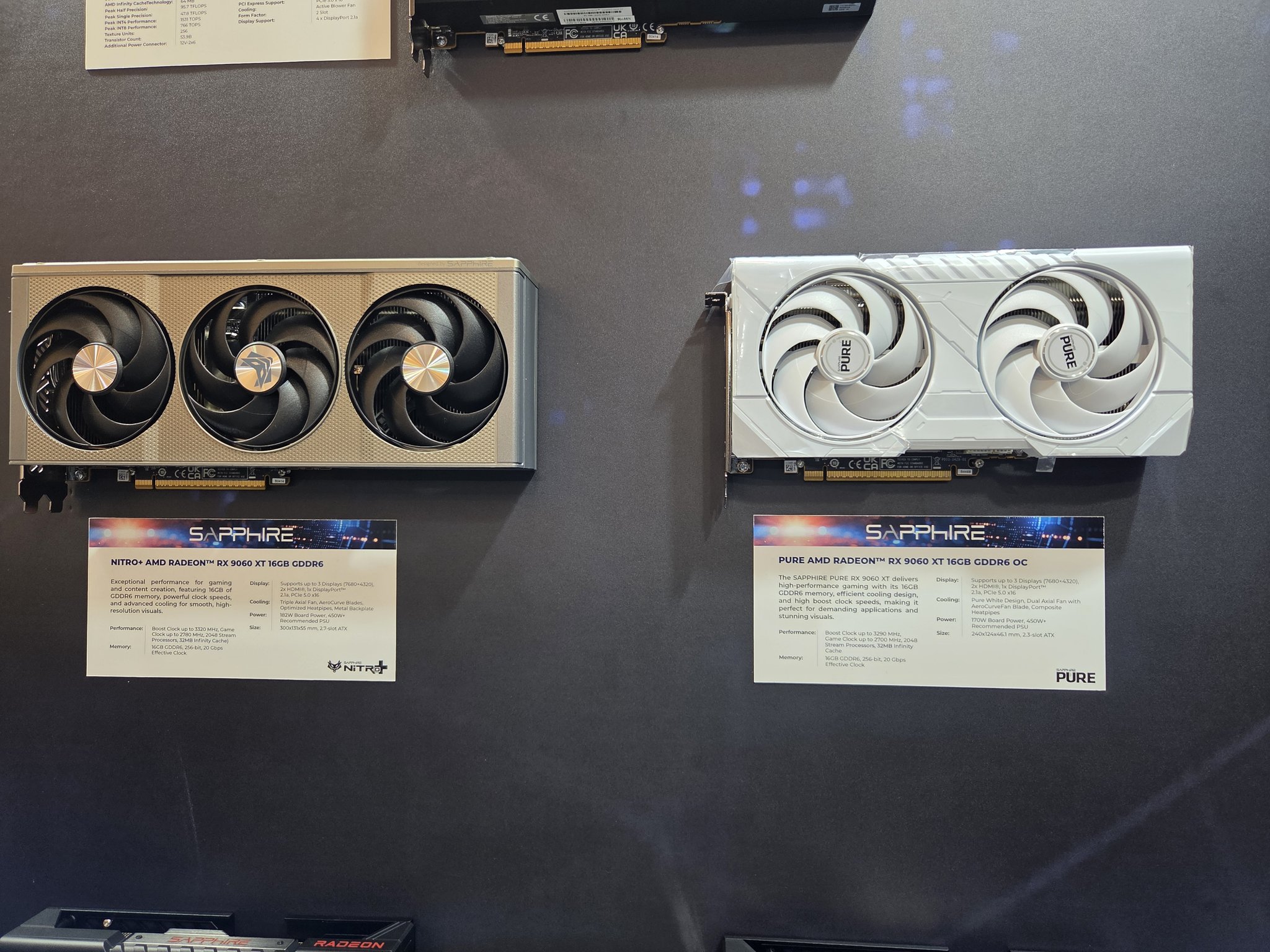

Just like the RTX 5060 Ti, the Radeon RX 9060 XT will come in two memory configurations, 8GB and 16GB. The difference from GeForce is that AMD is sticking with GDDR6 memory at a data rate of 20 Gbps.
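The 20 Gbps figure is a per-pin data rate, so total bandwidth also depends on the memory bus width, which the post does not state. The sketch below assumes a 128-bit interface for Navi 44; both that width and the helper name `bandwidth_gbs` are assumptions for illustration only.

```python
# Peak memory bandwidth from per-pin data rate and bus width.
# ASSUMPTION: 128-bit bus for Navi 44; the post only gives the 20 Gbps data rate.

def bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Return peak bandwidth in GB/s: Gbps per pin times pin count, divided by 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8

ASSUMED_BUS_WIDTH_BITS = 128  # hypothetical value for this sketch
print(f"{bandwidth_gbs(20, ASSUMED_BUS_WIDTH_BITS):.0f} GB/s")  # → 320 GB/s
```

If the actual bus width turns out to be different, only the `ASSUMED_BUS_WIDTH_BITS` constant needs to change.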

Based on the most recent information we have from AMD board partners, the RX 9060 XT will launch with 2048 Stream Processors. This is no surprise, since the card was expected to use the Navi 44 GPU, which has half the core count of Navi 48.

We also have an update on clocks, and it looks very interesting. First, a reminder: the RX 7600 XT, the predecessor to the RX 9060 XT, used the Navi 33 XT GPU with a game clock of 2470 MHz and a boost clock of 2755 MHz. The RDNA4 successor will clock much higher. According to our information, the RX 9060 XT will ship with a 2620 MHz game clock and a 3230 MHz boost clock. But that's not all: we also learned that some OC variants will boost to 3.3 GHz.
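To put "much higher clocks" into numbers, here is a small calculation of the uplift over the RX 7600 XT using only the MHz figures quoted above. The dictionary layout and the `uplift_pct` helper are illustrative choices, not anything from the source.

```python
# Clock uplift of the rumored RX 9060 XT over the RX 7600 XT, using the quoted MHz figures.
RX_7600_XT = {"game": 2470, "boost": 2755}
RX_9060_XT = {"game": 2620, "boost": 3230}
OC_BOOST_MHZ = 3300  # "3.3 GHz boost" reported for some OC variants

def uplift_pct(new_mhz: float, old_mhz: float) -> float:
    """Percentage increase of new_mhz over old_mhz."""
    return (new_mhz / old_mhz - 1) * 100

for kind in ("game", "boost"):
    print(f"{kind}: +{uplift_pct(RX_9060_XT[kind], RX_7600_XT[kind]):.1f}%")
print(f"OC boost: +{uplift_pct(OC_BOOST_MHZ, RX_7600_XT['boost']):.1f}%")
# → game: +6.1%
# → boost: +17.2%
# → OC boost: +19.8%
```

So the jump is modest at game clock (about 6%) and much larger at boost clock (about 17%, or about 20% for the 3.3 GHz OC models).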

The RX 9060 XT will require at least a 500W power supply, and some models will need 550W or more. We have yet to hear of an RX 9060 XT with a 16-pin power connector, as most specs we have seen are for 8-pin variants. It's also worth adding that the RX 9060 XT will have three display connectors rather than four like the RX 9070 series. This is likely due to limitations of Navi 44.

That works out to about 26 TFLOPS, counting VOPD dual issue.
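A rough check of the 26 TFLOPS figure: peak FP32 throughput is shader count × 2 (an FMA counts as a multiply plus an add) × 2 (VOPD dual issue) × clock. The sketch assumes the figure was computed at the 3230 MHz boost clock; the helper name `peak_fp32_tflops` is hypothetical, and this is a theoretical peak that games rarely sustain.

```python
# Peak FP32 throughput estimate: shaders x 2 ops per FMA x issue factor x clock.
# ASSUMPTION: the ~26 TFLOPS figure uses the 3230 MHz boost clock and treats
# VOPD as doubling per-clock FP32 issue; this is a theoretical peak only.

def peak_fp32_tflops(shaders: int, clock_mhz: float, dual_issue: bool = True) -> float:
    """Return theoretical peak FP32 TFLOPS."""
    ops_per_clock = shaders * 2 * (2 if dual_issue else 1)  # FMA = multiply + add
    return ops_per_clock * clock_mhz * 1e6 / 1e12

print(f"{peak_fp32_tflops(2048, 3230):.2f} TFLOPS with VOPD")                      # → 26.46
print(f"{peak_fp32_tflops(2048, 3230, dual_issue=False):.2f} TFLOPS without VOPD")  # → 13.23
print(f"{peak_fp32_tflops(2048, 3300):.2f} TFLOPS at a 3.3 GHz OC boost")           # → 27.03
```

Without VOPD the same card would be quoted at roughly half that, about 13 TFLOPS, which is why the dual-issue caveat matters when comparing against older cards.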
