sncvsrtoip

Member

I still think that if the PS6 is $650 and the next Xbox is $1k but around 50% faster than the PS6, and it has the Steam catalog (so Sony games too), it will be interesting.

I'm familiar with that DLSS3 performance mode table, but can you actually claim that it is representative of the contiguous processing time from a native frame being in VRAM to being DLSS3 upscaled and flipped to the framebuffer for display, in the way Cerny's PS5 Pro technical presentation can actually be validated for PSSR's contiguous journey from unified RAM to being flipped to the framebuffer?

What are you talking about? The cost of PSSR is similar to FSR2 or a bit higher, nothing new. It's not doing what Reflex does on PC (cutting latency at the game level).

@Xyphie already posted the cost of DLSS:

And when it comes to competitive gaming, many console games are locked to 60fps, or 120Hz at best, and in that mode they rarely even use PSSR. On PC you can play them at 240+ fps, so what latency would that have?
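For a rough sense of scale, here is a minimal sketch of the per-frame time budget at the frame rates mentioned (60fps, 120Hz and 240+ fps). It only converts fps to milliseconds per frame; it does not model input sampling, render queue depth or display scan-out, which all add to end-to-end latency on top of this.

```python
def frame_time_ms(fps: float) -> float:
    """Return the time budget per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Console locks (60/120) vs. a high-refresh PC target (240).
    for fps in (60, 120, 240):
        print(f"{fps:>3} fps -> {frame_time_ms(fps):.2f} ms per frame")
```

This prints 16.67 ms, 8.33 ms and 4.17 ms per frame respectively.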

It's definitely not just cosmetic; it frees up RAM and improves power efficiency.

Isn't the Xbox mode just a visual skin to hide the desktop mode? I know MS were planning to build a completely gaming-focused hybrid OS at one point, but I'm not sure they're there yet.

Been like that for years here. And I even ignored PlayStation for a while, during the PS4 era. The Xbox brand feels extremely damaged at this point. Its whole identity has strayed too far from what it used to be during the OG Xbox era.

PlayStation

Switch

PC

That's gaming from here on.

I'm familiar with that DLSS3 performance mode table, but can you actually claim that it is representative of the contiguous processing time from a native frame being in VRAM to being DLSS3 upscaled and flipped to the framebuffer for display, in the way Cerny's PS5 Pro technical presentation can actually be validated for PSSR's contiguous journey from unified RAM to being flipped to the framebuffer?

We don't even know from that table whether it includes the VRAM-to-GPU-cache transfer latency for the tensor image, or whether it is just a calculation of how many operations per pixel DLSS3 performance mode does, then computed against each card's real-world TOPS throughput based on reference clocks, tensor units, etc.
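To illustrate the second possibility, a purely synthetic per-card figure could be derived by dividing an assumed operation count by peak tensor throughput. This is a hypothetical sketch: `ops_per_pixel` and `tops` are placeholder inputs, not published DLSS numbers, and by construction the estimate contains no memory transfer, cache miss or scheduling cost, which is exactly the part being questioned.

```python
def synthetic_upscale_cost_ms(ops_per_pixel: float, width: int, height: int, tops: float) -> float:
    """Compute-bound estimate: total operations divided by peak throughput.

    ops_per_pixel and tops are hypothetical inputs; memory traffic and
    cache behaviour are deliberately ignored.
    """
    total_ops = ops_per_pixel * width * height
    seconds = total_ops / (tops * 1e12)  # 1 TOPS = 10^12 operations per second
    return seconds * 1000.0


if __name__ == "__main__":
    # Placeholder numbers only, for a 3840x2160 output.
    print(f"{synthetic_upscale_cost_ms(10_000, 3840, 2160, 100):.3f} ms")
```

A figure produced this way scales exactly inversely with TOPS from card to card, which is the kind of tidy scaling that would hint a table is computed rather than measured.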

Do we even have a real-world example of an identical game scene rendered at native 1080p without DLSS3, then with identical settings but with DLSS3 performance mode (without frame gen) outputting at 3840x2160, where the frame-rate dip equals the native render frame time plus the value in the table?

So taking the RTX 2080 Ti as an example, with a game scene at native 1080p 57fps, would the identical DLSS3 performance mode output at 4K be 1 / (1/57 s + 1.26 ms) = 1 / (17.54 ms + 1.26 ms) ≈ 53fps at 2160p?
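The arithmetic in that question can be sketched as follows, assuming the upscaling cost is a fixed serial addition to the native frame time (the simple model the question is testing, not a claim about how DLSS actually schedules its work). The 1.26 ms has to be converted to seconds before it is added to 1/57 s.

```python
def fps_with_added_cost(native_fps: float, added_ms: float) -> float:
    """Frame rate after adding a fixed per-frame cost (ms) to the native frame time."""
    native_frame_time_s = 1.0 / native_fps
    return 1.0 / (native_frame_time_s + added_ms / 1000.0)


if __name__ == "__main__":
    # 2080 Ti example from the question: native 1080p at 57fps plus 1.26 ms.
    print(f"{fps_with_added_cost(57, 1.26):.1f} fps")  # 53.2
```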

I highly doubt it, as those table numbers look synthetic from the way they scale, and even if true, they would vary with VRAM/GPU cache contention or misses. That's unlike PSSR, where the PS5 Pro CUs have dedicated instructions to commandeer the CUs and register memory for executing PSSR immediately.

Given that frame gen is even more prevalent in DLSS4, I suspect latency hiding is even greater in DLSS4, since the new algorithm processes the image details together holistically (implying greater data residency and GPU cache use) rather than in DLSS3's individual filter passes. But as Nvidia's documentation is so opaque compared to Cerny's PSSR info, I'm happy to be proved wrong and to actually see DLSS latency validated computationally and in real-world games.

Yeah. That was the magic of the 360 era.

There's no edge. Their exclusives have lost their power as titles and as IP, not to mention losing their exclusivity. There was something mysterious, edgy and "Americana" about the Xbox for me, as a European.

Xbox One X vs PS4 Pro. Which one was more successful?

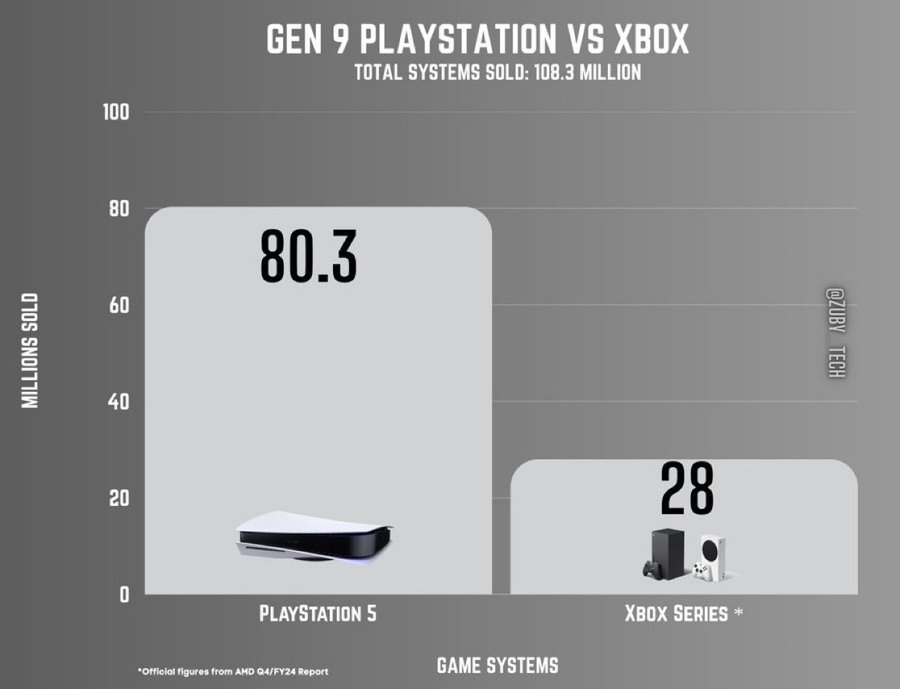

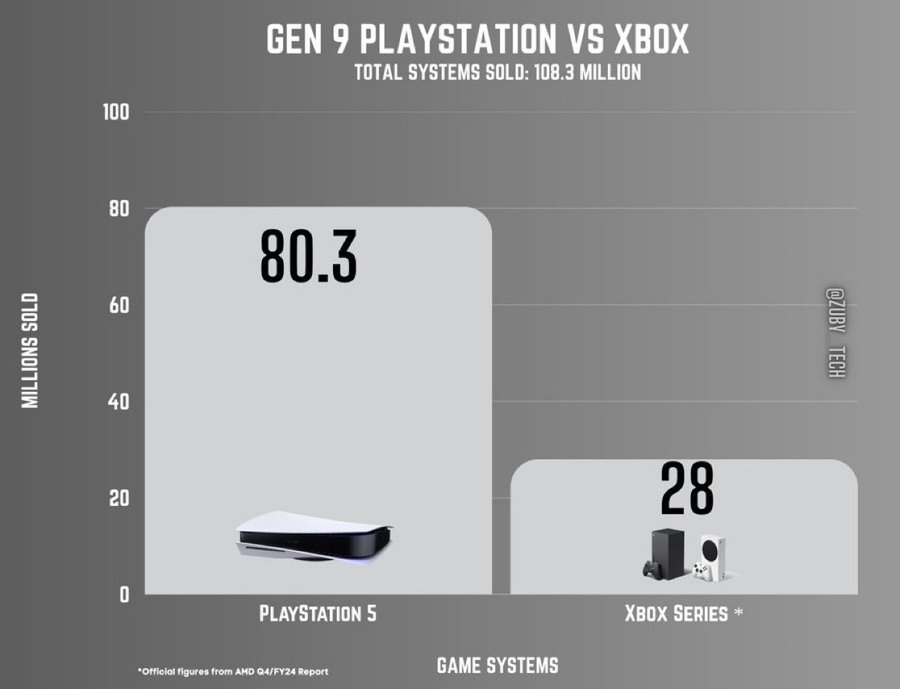

Taking everything into account over the past five years, even 28 million seems unrealistic. If we found out the real sales numbers, 15-20 million wouldn't shock me.

That the difference in favor of the PS6 will be even greater (if there is an Xbox console...)

All your images are showing "content not viewable in your region" (the UK), but is that a yes or no to the specific question I asked, which I've requoted?

I can give you data. 1920x1080 TAA, 3840x2160 TAA, and 3840x2160 with DLSS Performance (1080p internal), all with Reflex off:

And for H:FW:

This is latency measurement - not frametime:

And Nvidia probably only measured the algorithm's cost. I doubt they added the cost of post-processing (or the HUD), which now has to render at 4K instead of 1080p.
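A rough way to see why that matters: if a post-processing pass costs roughly the same per pixel regardless of resolution (a simplifying assumption; real passes don't scale perfectly linearly), moving it from 1080p to 4K output multiplies its cost by the pixel ratio, which is exactly 4. The 0.5 ms input below is a made-up placeholder.

```python
def pixel_ratio(src: tuple[int, int] = (1920, 1080), dst: tuple[int, int] = (3840, 2160)) -> float:
    """Ratio of output pixels to input pixels."""
    return (dst[0] * dst[1]) / (src[0] * src[1])


def scaled_post_cost_ms(cost_at_1080p_ms: float) -> float:
    """Scale a 1080p post-processing cost to 4K, assuming cost is linear in pixel count."""
    return cost_at_1080p_ms * pixel_ratio()


if __name__ == "__main__":
    print(pixel_ratio())             # 4.0
    print(scaled_post_cost_ms(0.5))  # 2.0 (hypothetical 0.5 ms pass at 1080p)
```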

Edit: Reflex off vs. on (native 4K):


They've been selling to the fullest in the pandemic years. There is no way Xbox Series is below 30m.

They were mostly selling Series S consoles due to Sony having manufacturing issues for a good while. A lot of people just wanted something to tide them over until they could secure a PS5.

Series S is also an Xbox (like my iPhone lol). I agree that the Series X sales must be around twenty-five units sold.

I still can't see them anywhere close to 30. More like 20.

I did mean both together, combined sales.

Then agree to disagree. I'm team 30:

There is no next Xbox even if there is a next Xbox.

Except there will be no next Xbox, according to the latest rumors.

and it really makes no technical sense… You could make the case in 2027 for a console built on a 2nm process with dedicated AI cores, 24GB of faster GDDR6 RAM (~768-864 GB/s, with lower latency thanks to better fab nodes and improved controllers) and a 10GB/s+ SSD, targeting $350-$450, and still have a bigger leap.

You end up with games having sharper textures, more defined shadows and higher overall resolution on that console.
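For reference, here is one configuration that lands exactly on that ~768-864 GB/s range. It assumes (the post doesn't say) twelve 2GB GDDR6 chips on a 384-bit bus for 24GB, running at 16-18 Gbps per pin. Peak bandwidth is the bus width in bytes times the per-pin data rate.

```python
def gddr_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: (bus width / 8 bytes) x per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


if __name__ == "__main__":
    # Hypothetical layout: 12 x 32-bit GDDR6 chips (2GB each) = 384-bit bus, 24GB.
    for rate in (16, 18):
        print(f"384-bit @ {rate} Gbps -> {gddr_bandwidth_gbs(384, rate):.0f} GB/s")
```

This prints 768 GB/s and 864 GB/s.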

Things 95% of the target audience doesn't even notice or care about.

The original Xbox was an even greater example. It still didn't matter in the end.

Xbox One X demonstrated that power isn't the be-all and end-all for end users.


Man, I loved the Xbox One X. Give me that again and I'm all over it.


I changed hosting site:

I am deep into the PS ecosystem and don't plan to game on a high-refresh-rate PC monitor. The PS6 should be able to push upscaled 4K at >=60fps with RT on. So it's PS6.

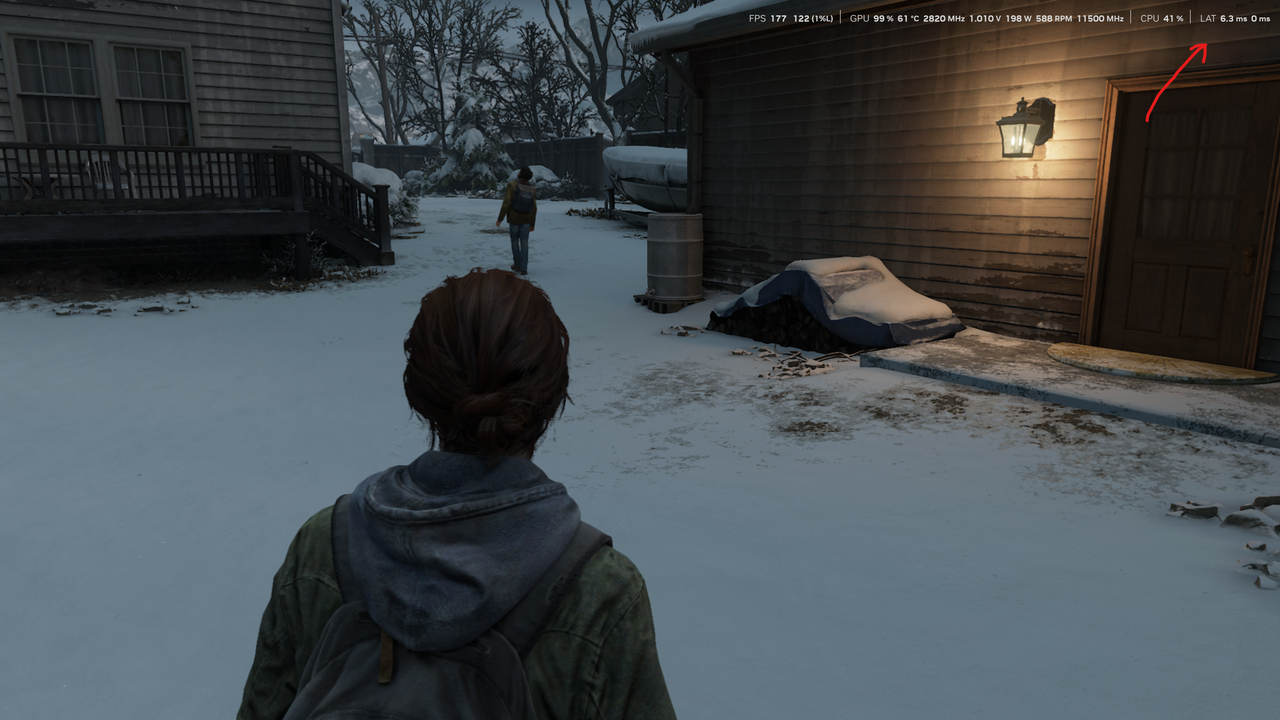

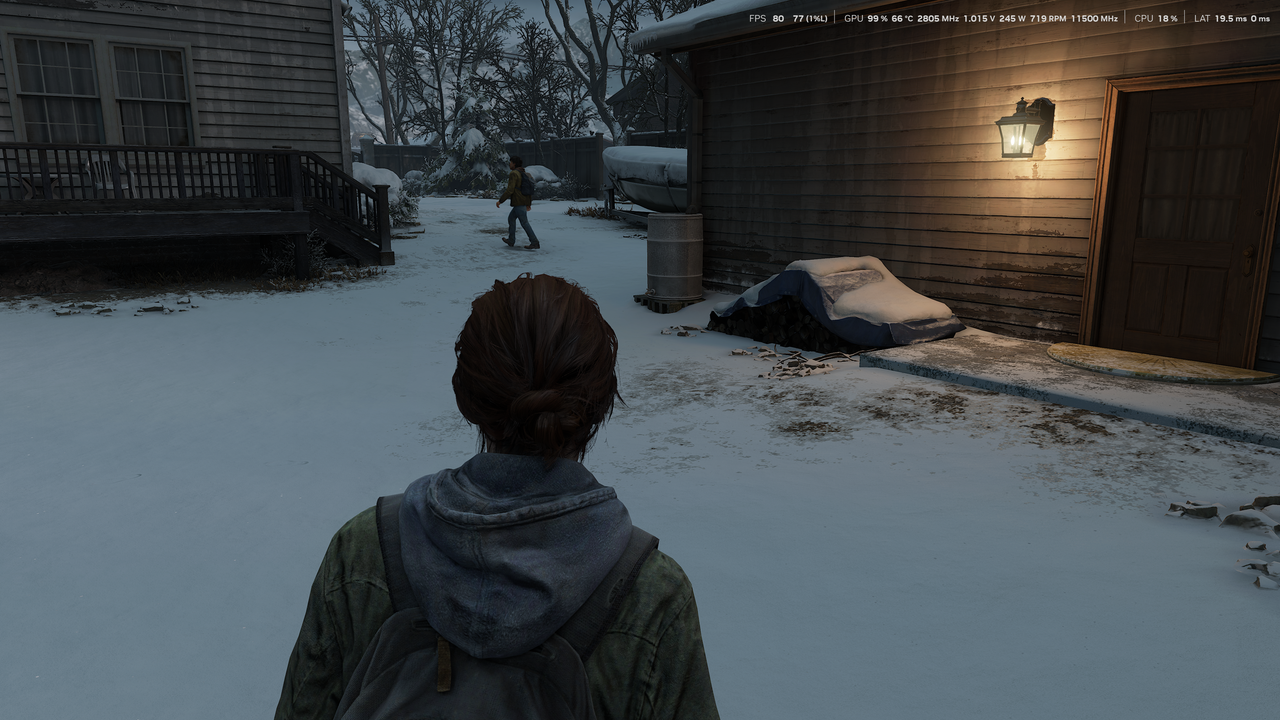

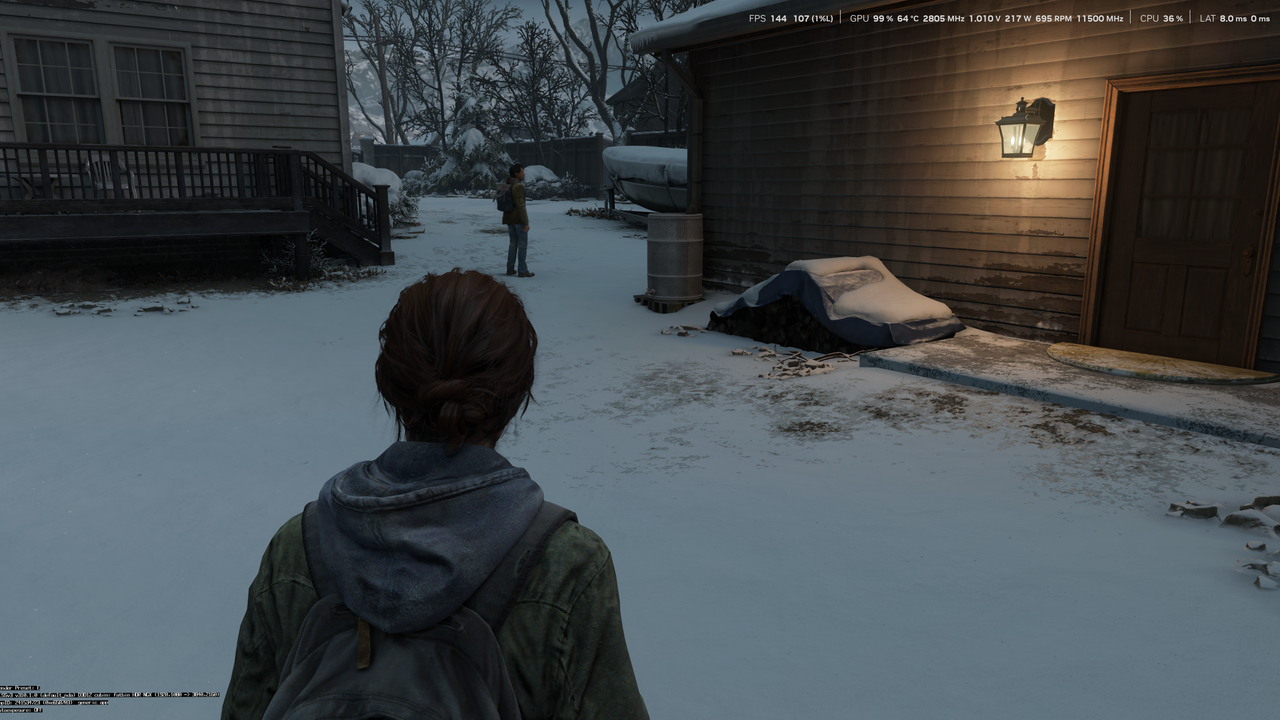

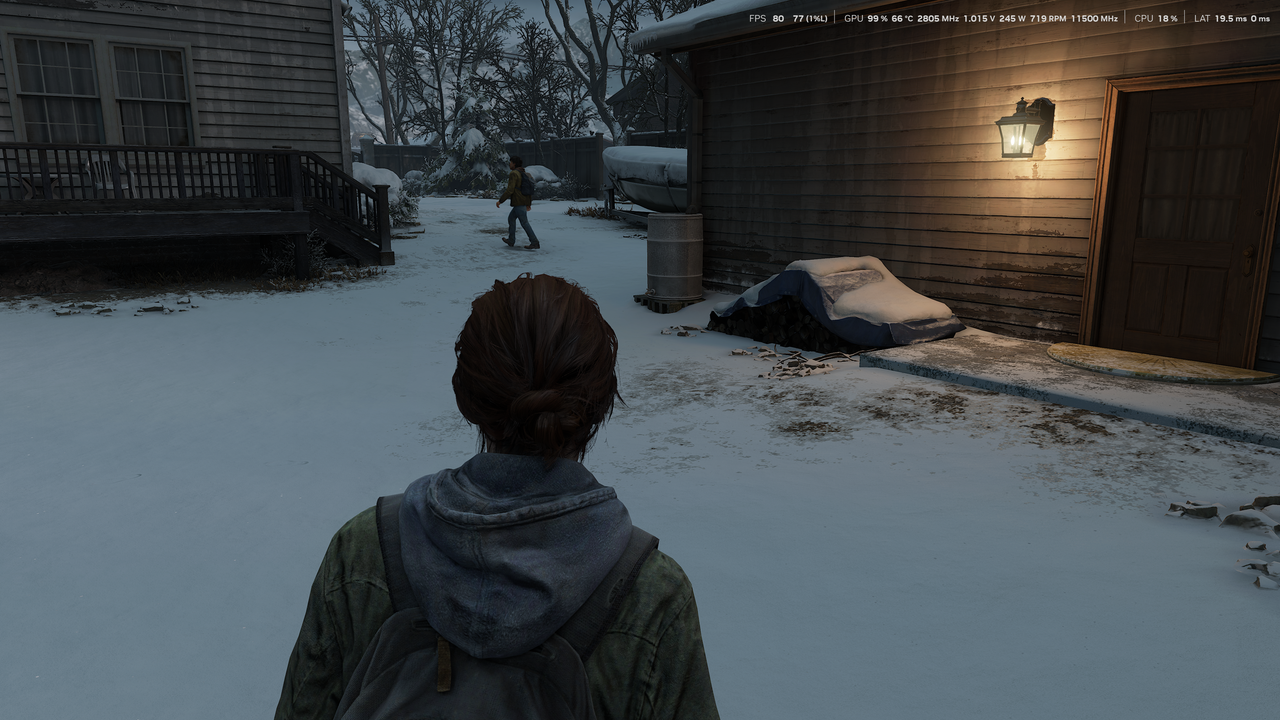

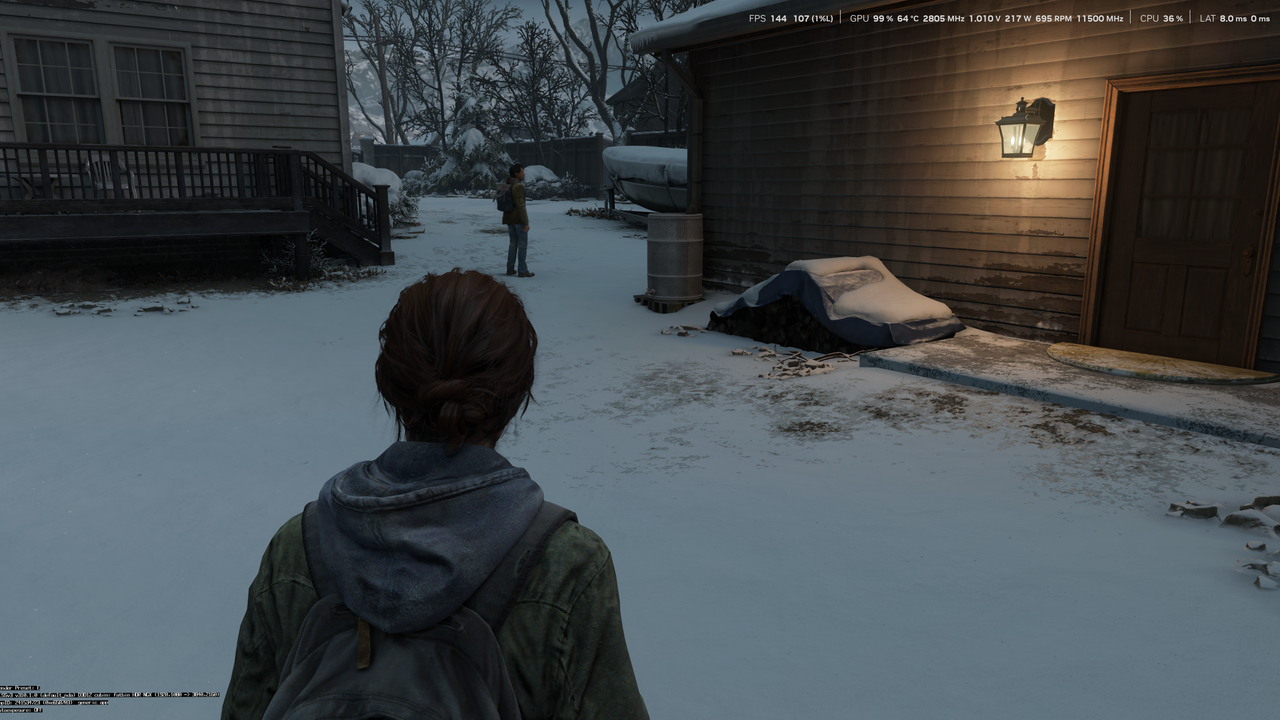

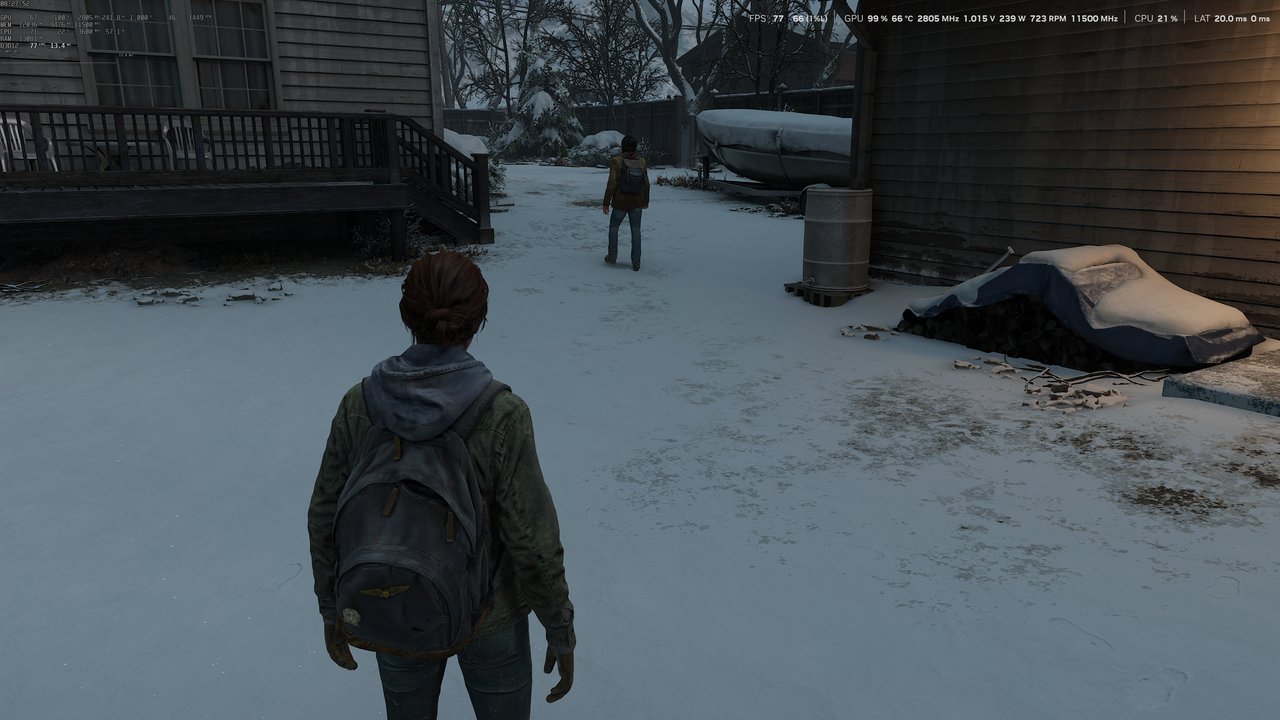

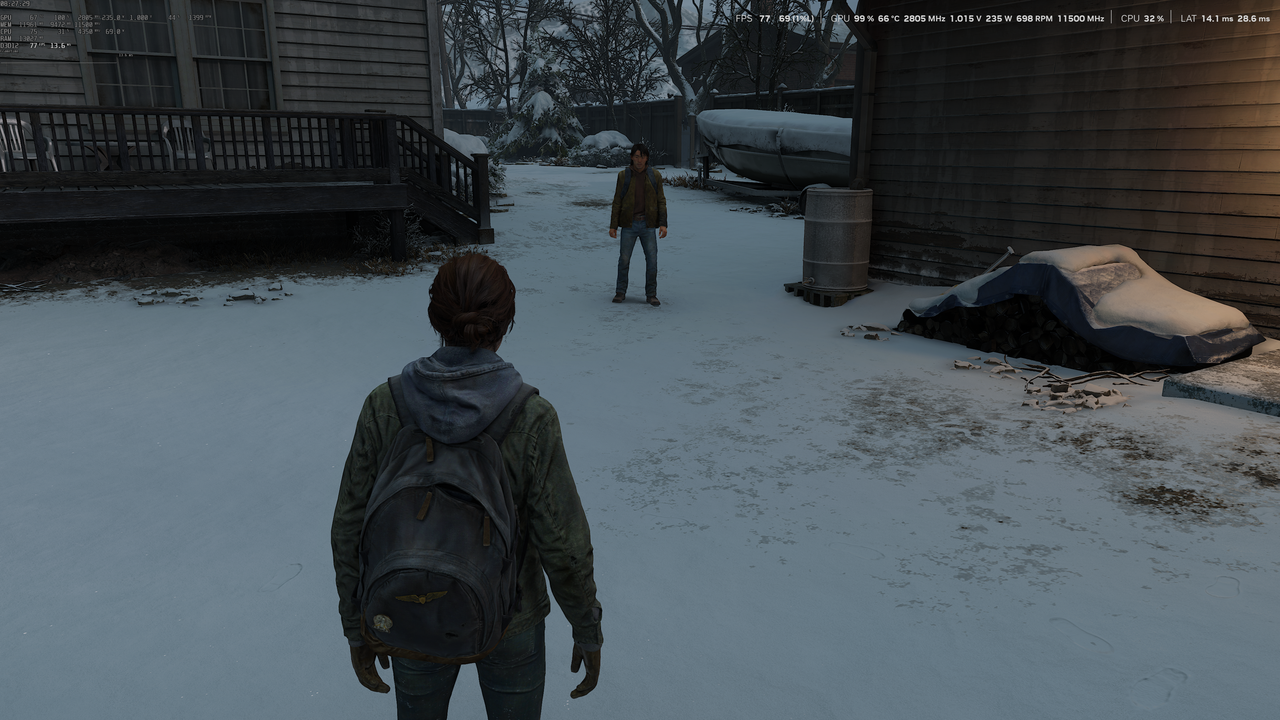

I can give you data. 1920x1080 TAA, 3840x2160 TAA, 3840x2160 with DLSS Performance (1080p), all with Reflex off:

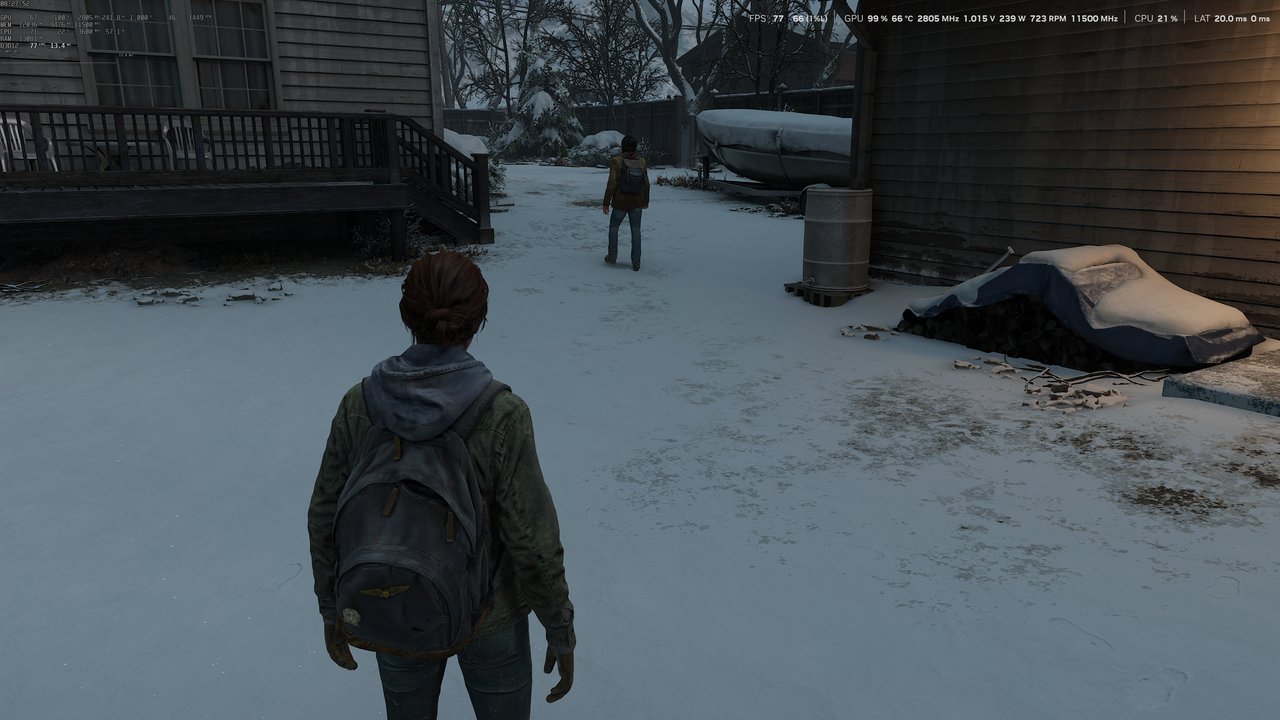


// Assumption: TAA's motion vectors are calculated and supplied to the DLSS3 process, and the other parts of a TAA-like history blend are done by Nvidia's solution too, so we can treat the DLSS processing as containing a full TAA pass. That actually favours the DLSS calculation, given the TAA pass is typically lighter after ML-AI inferencing.

What card is that on? Is it a 2080 Ti with the 1.26ms DLSS3 processing time? And are dynamic resolution and frame gen disabled?

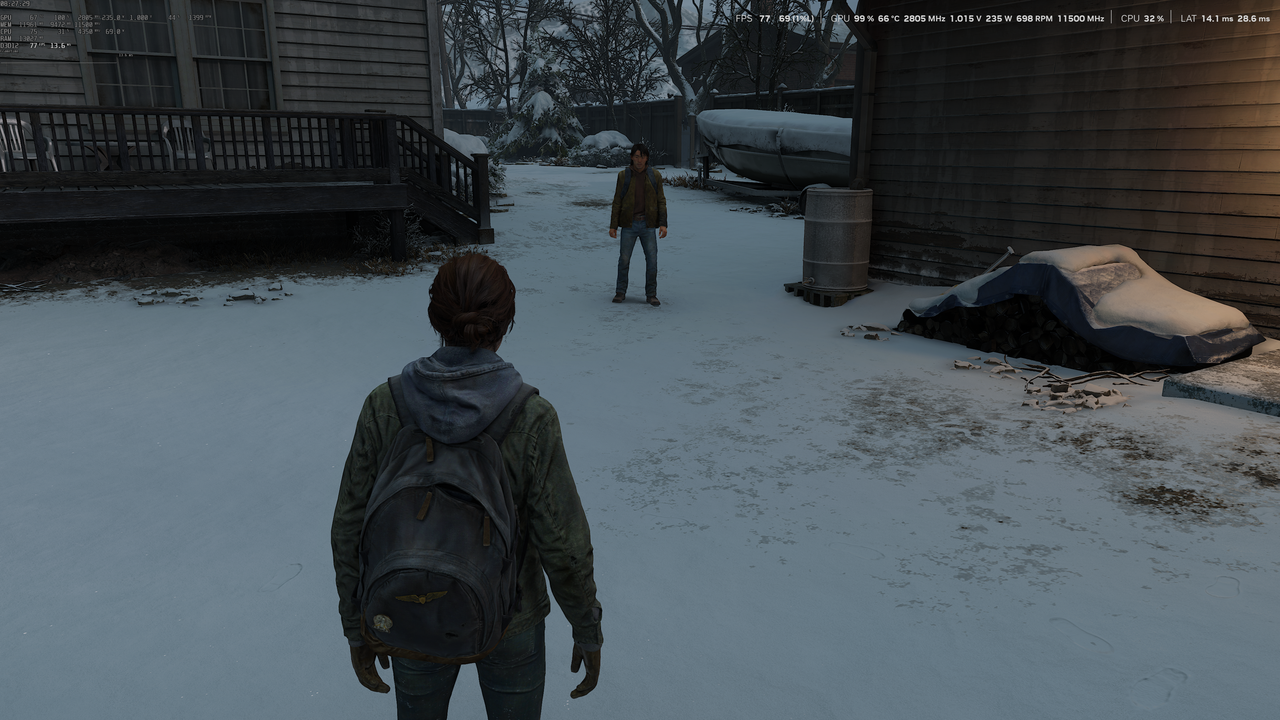

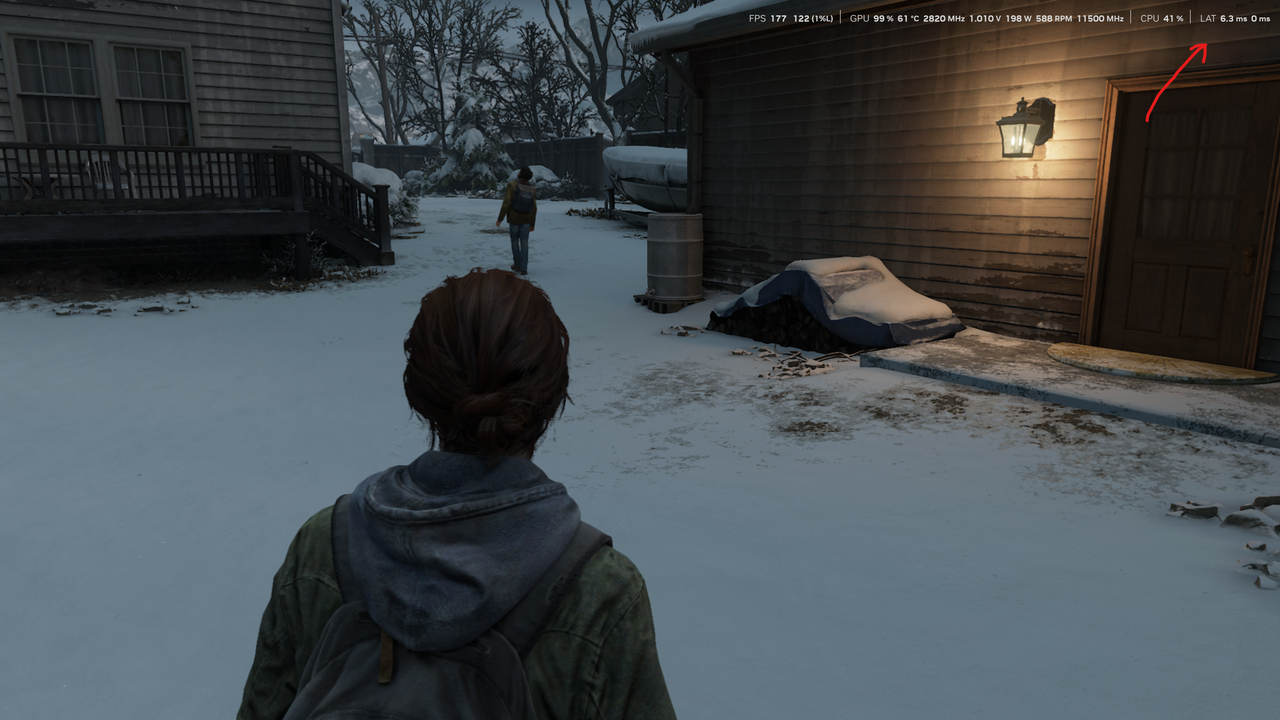

From the first set of Last of Us 2 (a PS4 game) images, using the first and last images' fps values, i.e. comparing TAA 1080p 177fps (DLSS3 off) to 2160p 144fps (DLSS3 on), the DLSS3 processing difference is 1/144 s - 1/177 s ≈ 1.295ms, which is pretty close to the quoted value for a 2080 Ti, if a smidge over.

The HFW (a PS5 game) second set of images, TAA 1080p 127fps vs. TAA+DLSS3 2160p 103fps, gives 1/103 s - 1/127 s ≈ 1.83ms, effectively 2ms, which is double the cost of PSSR.
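Both of those figures come from converting each frame rate to a frame time and taking the difference. A minimal sketch of that calculation is below; note that it attributes the whole difference to the upscaler, so anything else that gets more expensive at 4K output (post-processing, HUD) is included in the number too.

```python
def added_cost_ms(fps_before: float, fps_after: float) -> float:
    """Per-frame cost difference (ms) implied by a drop from fps_before to fps_after."""
    return (1.0 / fps_after - 1.0 / fps_before) * 1000.0


if __name__ == "__main__":
    print(f"Last of Us 2: {added_cost_ms(177, 144):.3f} ms")  # 1.295
    print(f"HFW:          {added_cost_ms(127, 103):.3f} ms")  # 1.835
```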

So if you aren't on a 2080 Ti but on a 4080 or 4090, that processing cost is effectively 2-4 times more than the processing cost quoted in that table, and from those results it looks like the higher the fidelity of the underlying game, the higher the processing time of the DLSS ML inferencing component.