PaintTinJr

Member

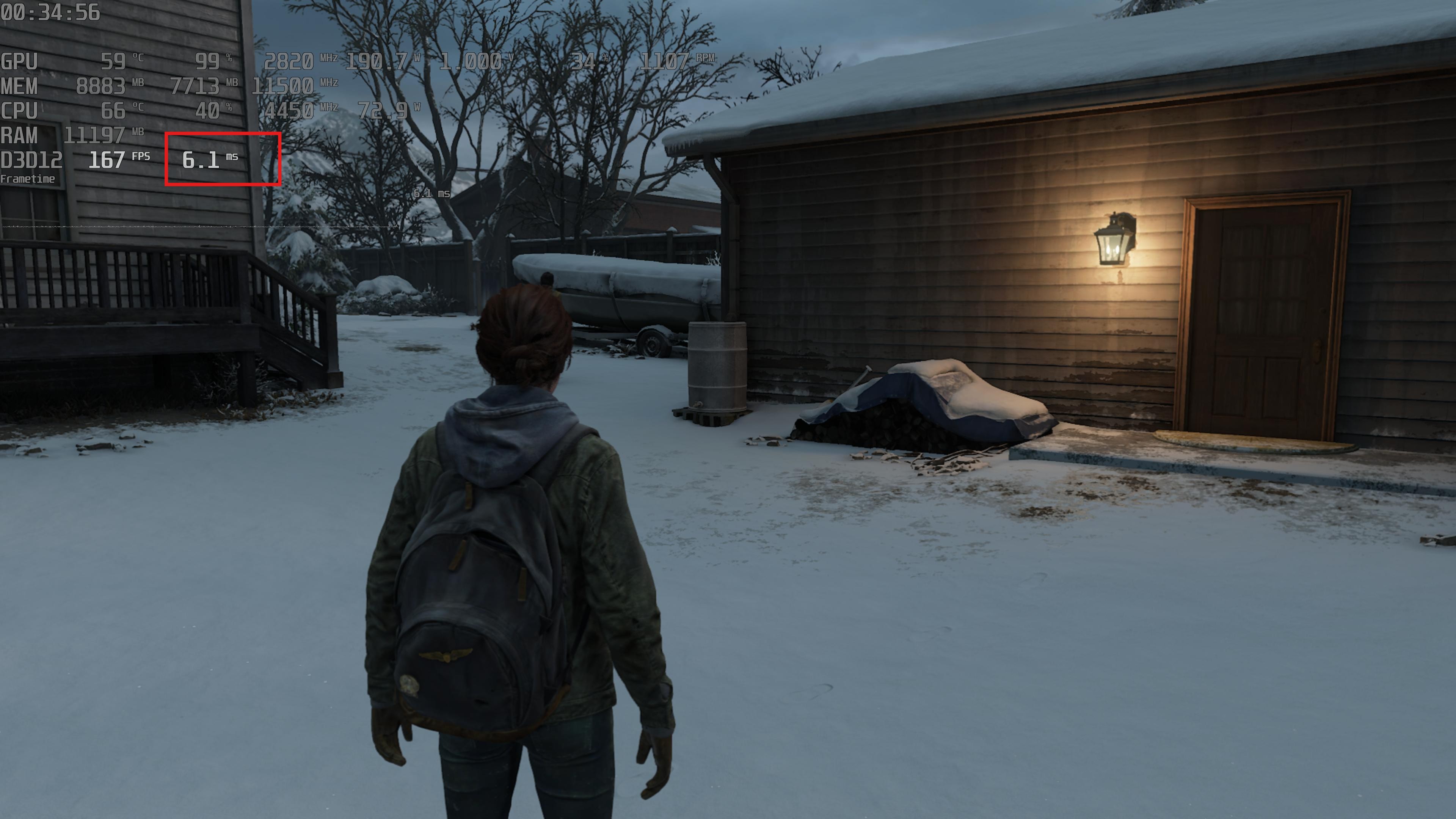

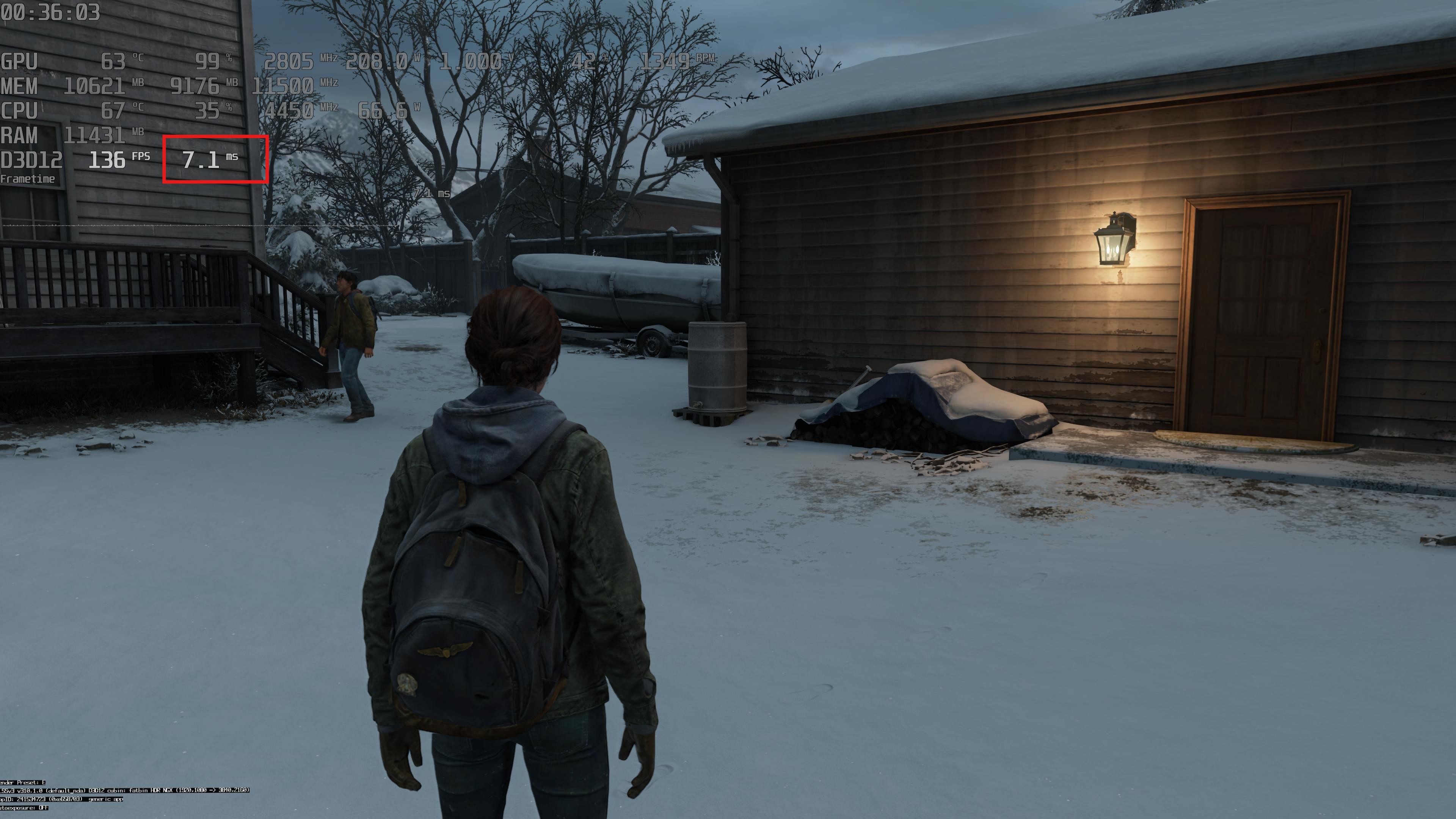

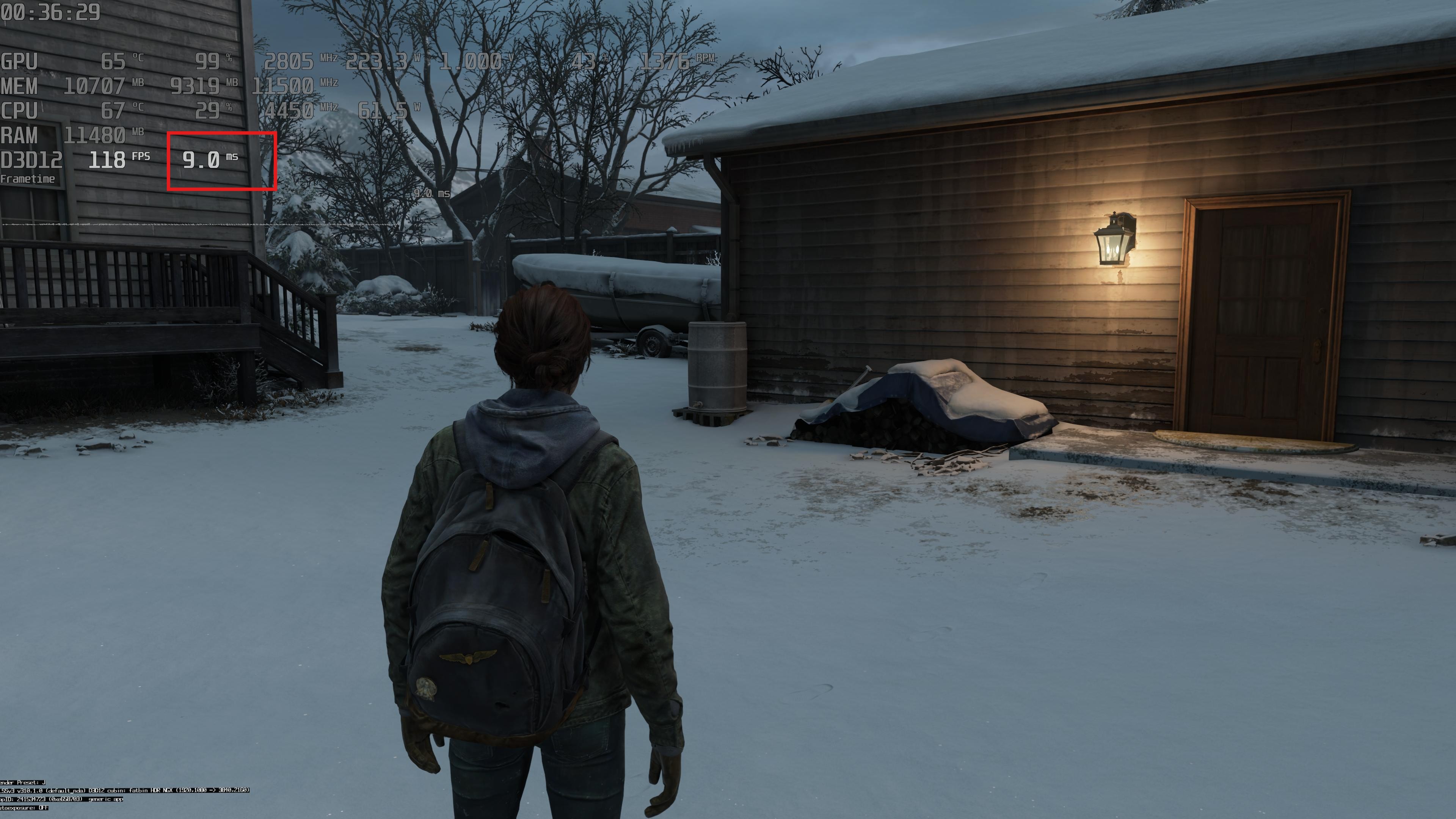

The column I was comparing in Nvidia's table is supposed to represent taking a native 1080p source and producing the DLSS3 4k equivalent, and that is the cost of the inferencing alone, not the calculation of the motion vectors or the TAA-style history blend pass. Assuming you haven't altered any settings, the native render should be the same workload for the TAA pass and the TAA+DLSS pass, or if anything lighter for the DLSS pass, which gives it even more latitude to under-calculate the post-frame-render (processing) latency. 4070ti super.

You have to remember that the graph (I think) is missing all the stuff that, even with the internal geometry resolution being much lower, still has to be rendered at native 4k (or lower, but still higher than 1080p).

This cost is missing from the DLSS table, and I'm sure it's also missing for PSSR, because it's game dependent. Every game will have different things: shadow maps, alphas (smoke, fire), HUD, volumetric lights, DOF etc.

People often forget about this and expect the same performance from 4k DLSS Performance mode as from native 1080p. This has to be added to the cost of reconstruction.
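
To make that concrete, here is a rough sketch of the arithmetic. Every number in it is a made-up placeholder, not a measurement from any game, GPU or from the Nvidia table, and the function and pass names are hypothetical; the only point is that the output-resolution passes sit on top of the internal render and the reconstruction cost.

```python
def frame_time_ms(internal_render_ms, output_res_passes_ms, upscale_ms):
    """Total frame time: internal render + passes done at output res + upscaler.

    All inputs are in milliseconds. The breakdown is an illustrative
    assumption, not how any particular engine reports its timings.
    """
    return internal_render_ms + sum(output_res_passes_ms.values()) + upscale_ms


# Placeholder numbers only (hypothetical, chosen to keep the sums simple).
internal_1080p_ms = 8.0

# Native 1080p: no passes at a higher resolution, no upscaler.
native_1080p = frame_time_ms(internal_1080p_ms, {}, 0.0)

# 4k output from a 1080p internal render: same internal render, plus the
# game-dependent passes that still run at (or near) output resolution,
# plus the reconstruction cost itself.
extra_passes_ms = {"hud": 0.5, "alpha_effects": 1.0, "dof": 0.75}
upscaled_4k = frame_time_ms(internal_1080p_ms, extra_passes_ms, 1.0)

print(f"native 1080p: {native_1080p} ms")
print(f"4k upscaled:  {upscaled_4k} ms")
print(f"difference:   {upscaled_4k - native_1080p} ms")
```

With these placeholders only 1.0 ms of the gap is the upscaler; the rest comes from passes that a reconstruction-cost table would not include.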

I also focused on latency here because this is what you were talking about; pure cost in frametime is a different thing.

The latency numbers you are looking at don't mean anything outside your own setup, with your TV's latency. On an unpredicted new frame change, which happens in games all the time, the latency is the time it takes to render, upscale and present that brand new frame. The latency I am talking about is the upscale processing latency, after the native frame rendering finishes. In that situation, PSSR's fixed ~1ms is less processing latency for the upscale than DLSS3's, and definitely less than DLSS4's on similar TOPS, and even on more TOPS going by your 4070 ti results.