We got some questions from the community on DLSS 4.5 Super Resolution and wanted to provide a few points of clarification.

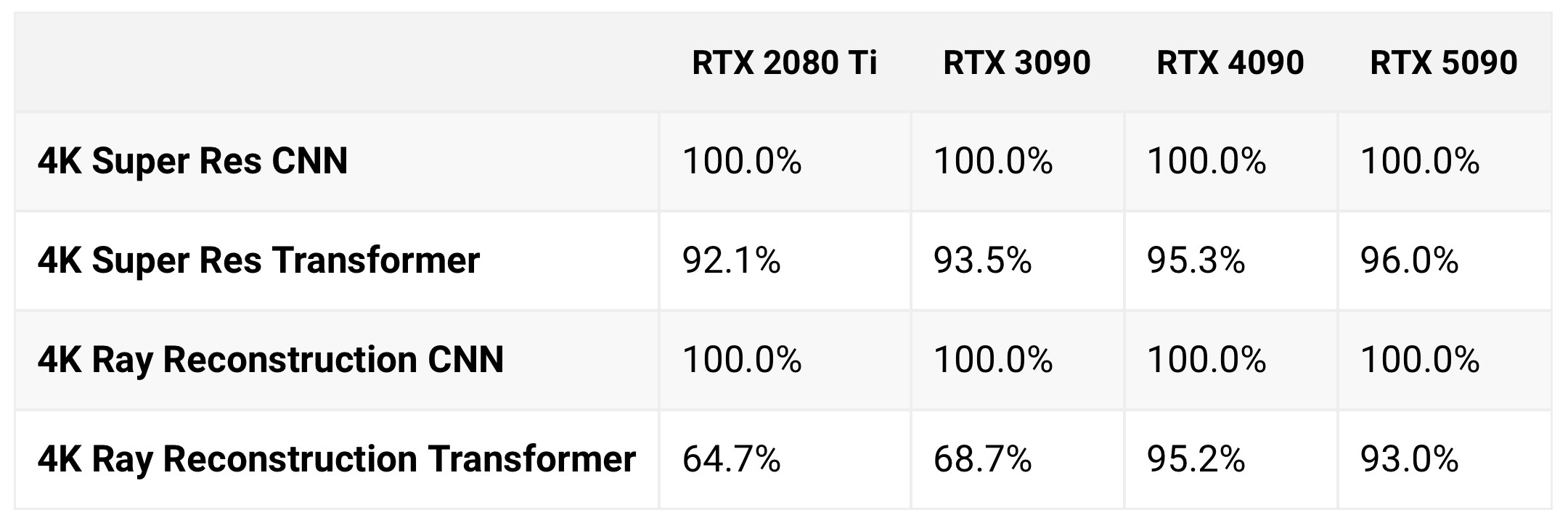

DLSS 4.5 Super Resolution features a 2nd generation Transformer model that improves lighting accuracy, reduces ghosting, and improves temporal stability. The new model delivers this image quality improvement through expanded training, algorithmic enhancements, and 5x the raw compute. To minimize the performance impact of the heavier model, DLSS 4.5 Super Resolution uses FP8 precision, which is hardware-accelerated on RTX 40 and 50 Series.

Since RTX 20 and 30 Series GPUs don't support FP8, they will see a larger performance impact than newer hardware. Users of these cards may prefer to remain on the existing Model K (DLSS 4.0) preset for higher FPS.

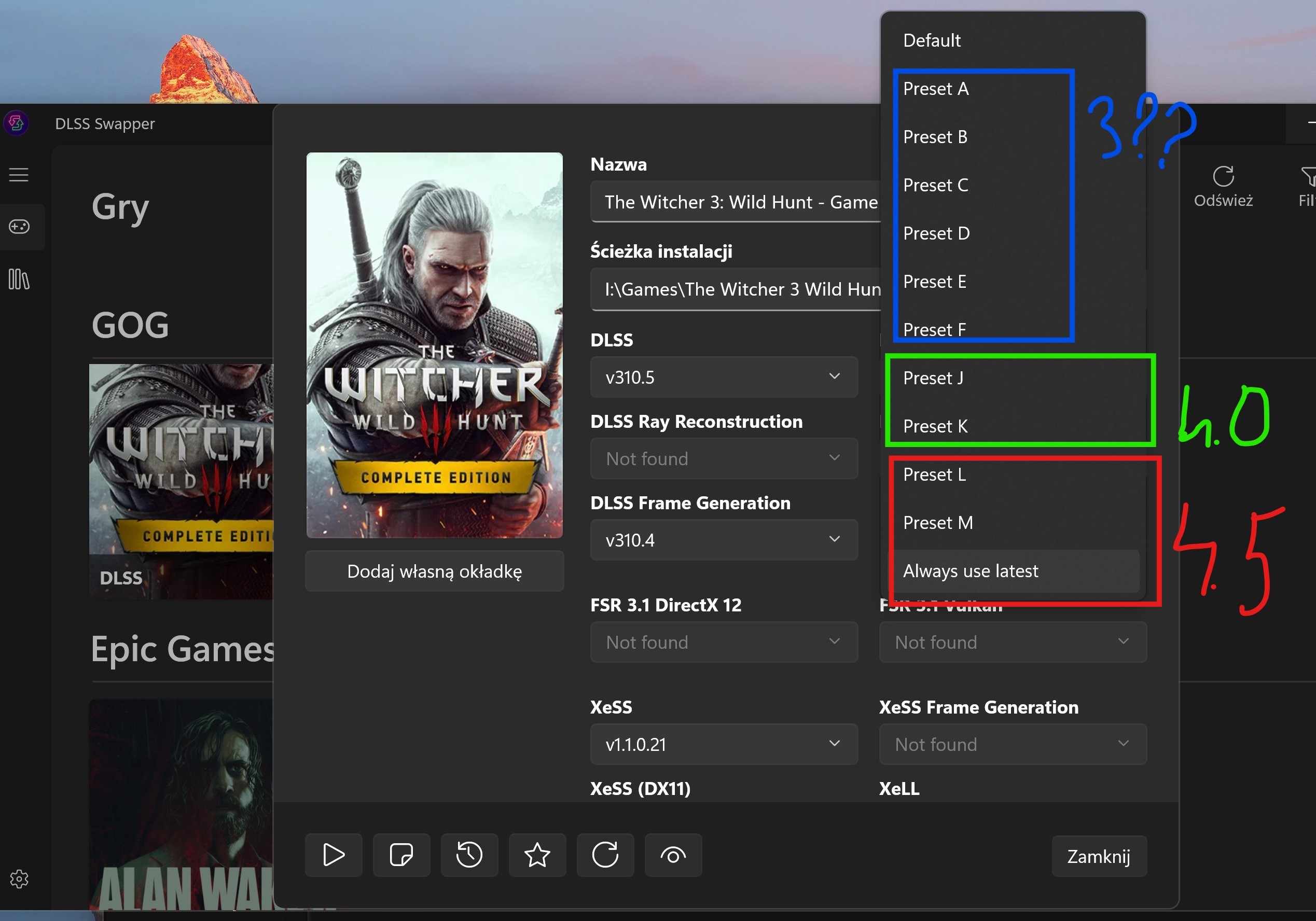

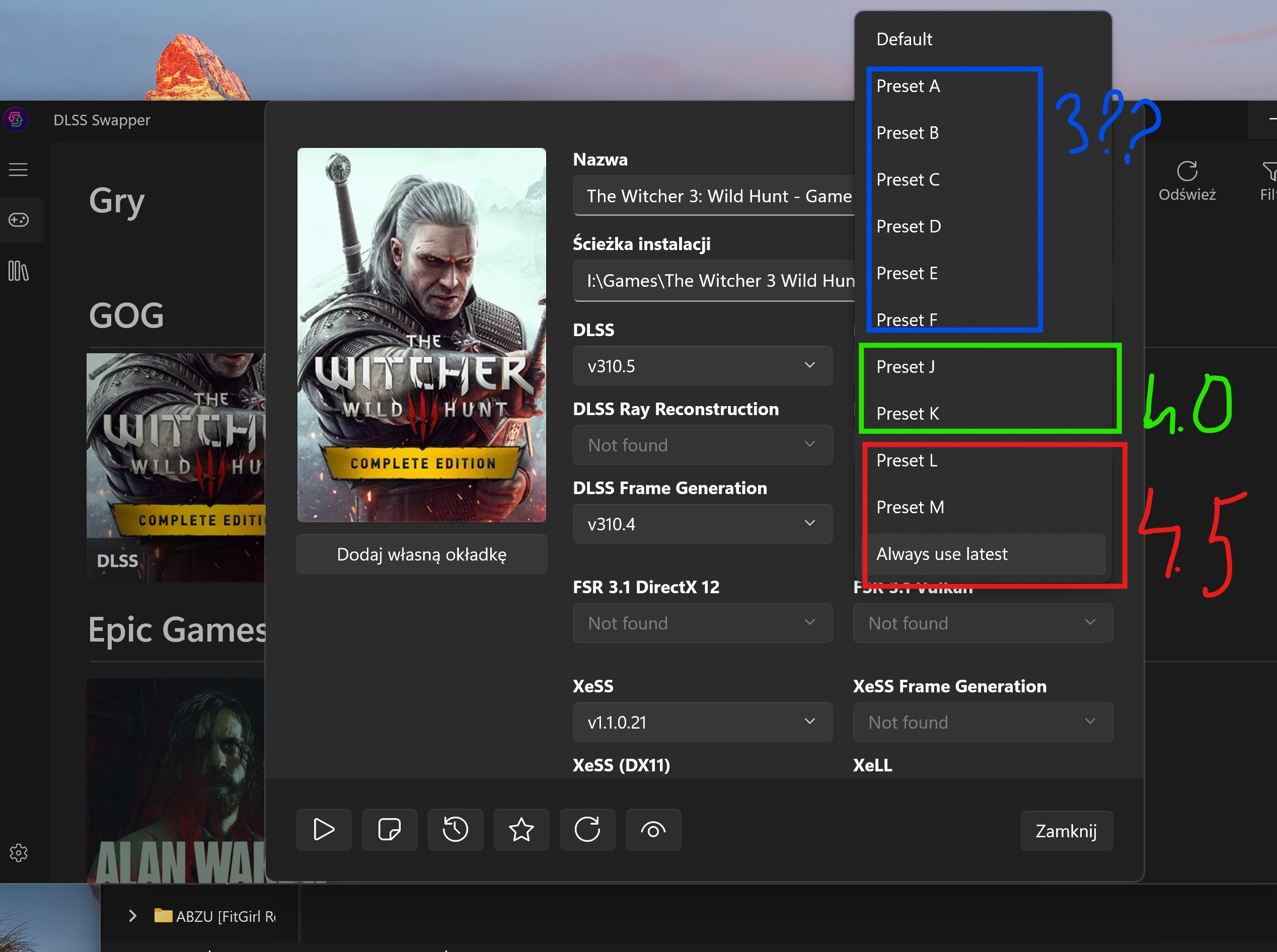

DLSS 4.5 Super Resolution adds support for two new presets:

- Model M: optimized and recommended for DLSS Super Resolution Performance mode.

- Model L: optimized and recommended for 4K DLSS Super Resolution Ultra Performance mode.

Models M and L are also supported in the DLSS Super Resolution Quality and Balanced modes and in DLAA, but users will see the best quality vs. performance benefits in Performance and Ultra Performance modes. Additionally, Ray Reconstruction has not been updated to the 2nd generation Transformer architecture, so these benefits apply to Super Resolution only.
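For anyone who finds it easier to read the guidance above as a lookup, here is a minimal sketch in Python. It is not an NVIDIA API: the function name `suggested_preset`, its parameters, and the `prefer_fps` flag are all hypothetical and exist only to illustrate the recommendations in this post. Returning `None` simply means the post gives no specific recommendation for that combination.

```python
from typing import Optional

# GPU series that accelerate FP8, per the post (RTX 40 and 50 Series).
FP8_SERIES = {40, 50}


def suggested_preset(
    mode: str,
    gpu_series: int,
    output_is_4k: bool = False,
    prefer_fps: bool = True,
) -> Optional[str]:
    """Hypothetical helper that restates the post's preset guidance.

    mode: DLSS Super Resolution mode name, e.g. "Performance".
    gpu_series: RTX series number (20, 30, 40, or 50).
    output_is_4k: whether the output resolution is 4K.
    prefer_fps: assumption of this sketch; models the note that RTX 20/30
        users may prefer Model K for higher FPS.
    """
    normalized = mode.strip().lower()

    # RTX 20/30 lack FP8, so the heavier models cost more there;
    # users who prioritize FPS may stay on Model K (DLSS 4.0).
    if gpu_series not in FP8_SERIES and prefer_fps:
        return "K"

    # Model M: optimized and recommended for Performance mode.
    if normalized == "performance":
        return "M"

    # Model L: optimized and recommended for 4K Ultra Performance mode.
    if normalized == "ultra performance" and output_is_4k:
        return "L"

    # Quality, Balanced, DLAA, and non-4K Ultra Performance: M and L are
    # supported, but the post does not single out a recommendation.
    return None
```

For example, `suggested_preset("Performance", 50)` yields `"M"`, while `suggested_preset("Performance", 30)` yields `"K"` unless `prefer_fps=False` is passed. Whichever preset you choose, the overlay statistics view remains the way to confirm which model is actually running.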

To verify that the intended model is enabled, turn on the NVIDIA app overlay statistics view via Alt+Z > Statistics > Statistics View > DLSS.

We look forward to hearing your feedback on the new updates!