NeOak

Member

Seeing as AMD is one of the developers of HBM, you can bet your ass they will.

Yup. Based on that SK Hynix ppt, August/September 2016 at the earliest.

AMD might also get first dibs on HBM2.

Unlike NVIDIA lawl

Is AMD going to use this architecture the next time they move to a new process? From what I understand, nVidia's Pascal could be launching within the next 6-8 months, and it seems like they would have a significant advantage over AMD with a new architecture, manufacturing process, and HBM2.

I will bet my left bollock that Pascal is not coming in 8 months on HBM2's production timeline alone.

How so? If anything, the increasing frametime variance at higher resolutions (which is apparent across most sites who do frametime analysis) would indicate the opposite.

AMD loyalists grasping. Wait for new drivers. Wait for DX12. It goes on and on.

It seemingly performs better in FPS, but worse in frametime variance. This could be explained by (a) more memory bandwidth being required and less CPU driver overhead induced at higher resolutions, leading to better overall performance, but (b) the total memory requirements going up and in some games exceeding the available memory, leading to intermittent microstutter as assets are swapped over PCIe.

That doesn't necessarily indicate being limited by available memory.

Generally we see the Fury X performing better at 4K than at 1080p relative to the 980 Ti. This suggests that having 2 GB less memory than the 980 Ti is not a disadvantage.

Yes. Yes they do. Always.

The Gigabyte G1 Gaming series of cards look like this. That's 2x dual-link DVI, 3x DisplayPort, and 1x HDMI 2.0. It doesn't matter WHAT you own, even if you are somehow still connecting to an analog monitor over VGA, one of those DVI's is actually a DVI-I and can output to VGA which is quite amazing. It can output to all 3 DP connectors at once, both DVI's at once, or anything on the right side of the panel plus something on the left for a total of 4 displays. The way the left side works is, it has an internal TMDS transmitter that can either direct signal to the single DVI on the top left or the 2 DP's below it. THIS is the kind of connector diversity a $650 flagship video card should have on it. If you own it, this card can drive it. Period. Too bad AMD never heard of such a thing it seems.

As an example of VRAM stuttering of a similar variety, load up heavy VRAM games on a 970. They will in general have a good avg framerate, but the minimum framerates and the qualitative experience will be less enjoyable.

I am currently extremely confused as to who, and what situation, this card is designed for... the memory bandwidth advantage does not seem to be pulling much weight, if any.

Yep, certainly the figures look rather weird... it will be interesting to see if DX12/Vulkan have any effect on those figures.

I also wonder if this card might be more suited to VR, as that's a kind of quirky performance area.

This really is the finest air cooled card out there right now. You forgot to mention how it clocks faster than any other air 980Ti or Titan X. Unless Fury does something magical in the next month I'm so gonna get one.

Brent Justice said: We've had some time now to do some preliminary overclocking with the AMD Radeon R9 Fury X. We have found that you can control the speed of the fan on the radiator with MSI Afterburner. Turning it up to 100% fan speed keeps the GPU much cooler when attempting to overclock, and isn't that loud.

For example, during our overclocking attempts the GPU was at 37c at full-load removing temperature as a factor holding back overclocking. We also found out that you will not be able to overclock HBM, it is at 500MHz and will stay at 500MHz. You will only be able to overclock the GPU. Currently, there is no way to unlock voltage modification of the GPU.

In our testing we found that the GPU hard locked in gaming at 1150MHz 100% fan speed 37c. Moving down to 1140MHz we were able to play The Witcher 3 without crashing so far. This is with the fan at 100% and 37c degree GPU. So far, 1140MHz seems to be stable, but we have not tested other games nor tested the overclock for a prolonged amount of time.

More testing needs to be done, but our preliminary testing seems to indicate 1130-1140MHz may be the overclock. This is about a 70-80MHz overclock over stock speed of 1050MHz. That is a rather small increase in overclock and doesn't really amount to any gameplay experience or noteworthy performance improvements.

We have at least learned that temperature is not the factor holding the overclock back, at 37c there was a lot of headroom with the liquid cooling system. There are other factors holding the overclock back, one of which may be voltage.

How does it have HDMI 2.0? There are no active DP 1.2 to HDMI 2.0 converters out there yet. Just curious.

You're right, there aren't yet.

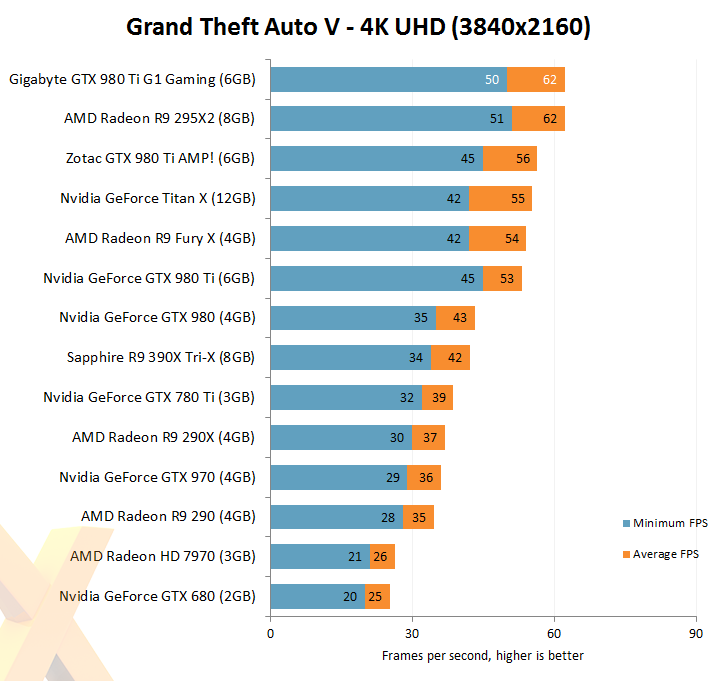

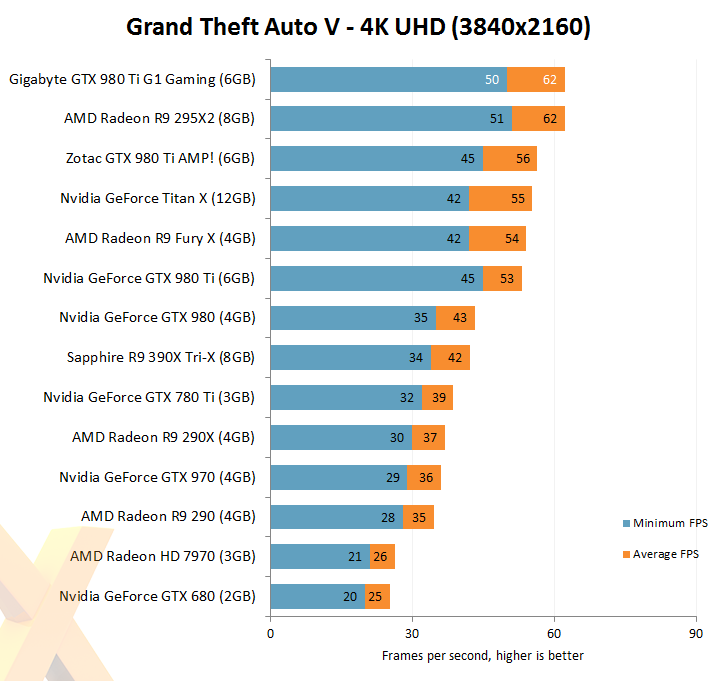

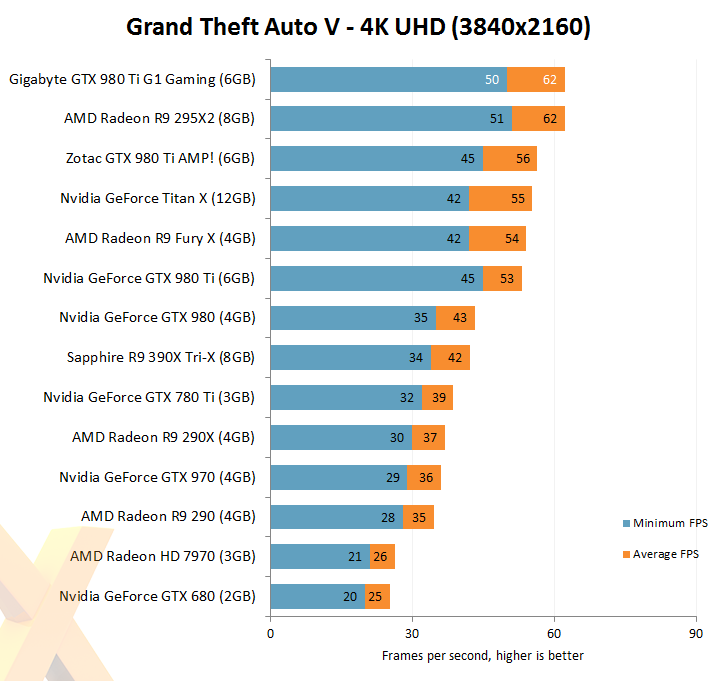

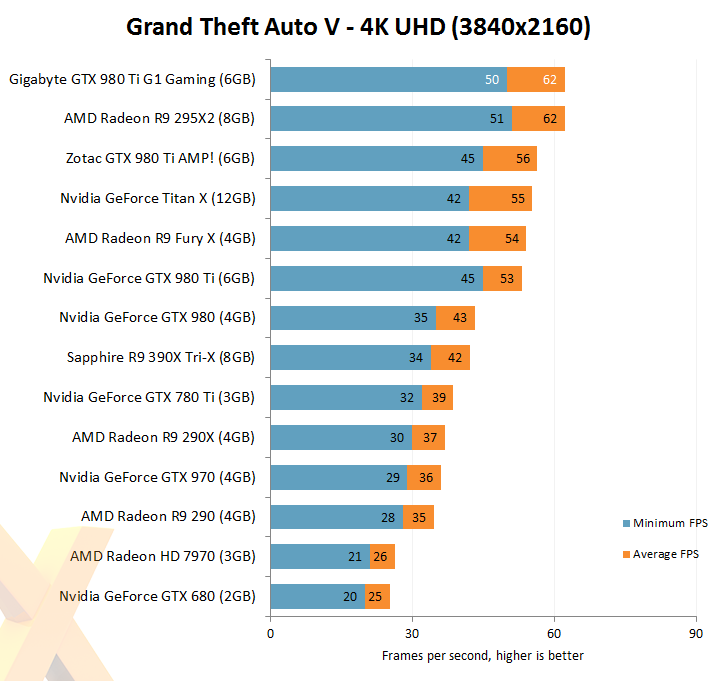

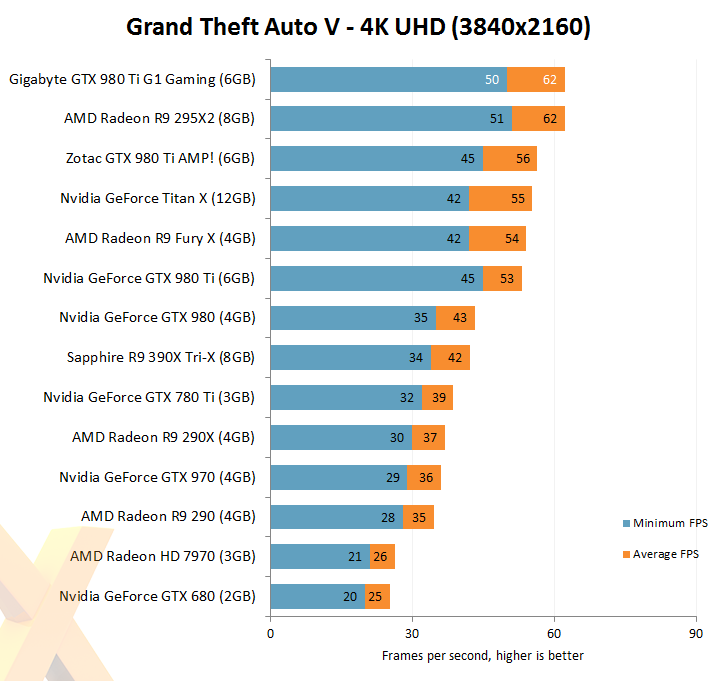

Damn. Seeing this just confirms that I don't need an upgrade yet. The 290X is still powerful enough.

Here's a fun review, the Gigabyte G1 Gaming 980 Ti reviewed AFTER the launch of Fury X by Hexus. So it's an example of a Fury X going against a factory overclocked 980 Ti.

http://hexus.net/tech/reviews/graphics/84284-gigabyte-geforce-gtx-980-ti-g1-gaming-6gb/

The Fury X gets humiliated by an overclocked 980 Ti. It's actually really ugly to witness how badly the Fury X fares against a card which comes from the factory running at the clock speeds the G1 Gaming has.

Computerbase, awesome as they are, tested both a press sample and a retail card. They noted coil whine on both, but the press sample was actually worse.

I just went over to my friend's place; he just got his Fury X. It is anything but quiet: it has worse coil whine than 970s, 670s, and $45 PSUs, and the fan is actually loud. It also didn't perform on par with my overclocked 980 or his 980 Ti, and he's so upset he's returning it.

After further research we found a video by JayzTwoCents. We came to the conclusion that the reviewers got golden samples that were also silicon lottery chips, because my friend had the same experience as Jay. If you were an official reviewer, AMD must have sent a pre-okayed card that was binned and checked, or maybe even improved, for coil whine, and for some reason ours are not as quiet as all these reviews say. Also, not only were we able to get a 980 Ti to perform better, we were both able to get overclocked 980s to perform better.

Did AMD cherry-pick samples for their reviews? Both my friend and Jay got different results from the reviewers.

http://www.youtube.com/watch?v=iEwLtqbBw90

Humiliated by 5 frames?

So how does this card work with HDMI TVs? Do I need an adapter?

Computerbase really have the second-best GPU reviews right now, after Tech Report, IMHO. Better in some regards actually (e.g. larger selection of games, more in-depth noise/temp testing).

That's a reviewer I've never heard of; I'll have to check it out.

Do you know how to do math?

Min FPS: 50 - 42 = 8 fps, and 8 fps / 50 fps = 16%, so the G1 beats the Fury X by 16% on min FPS (measured against the G1's 50 fps).
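For anyone checking the math, here is a minimal sketch using only the 50 and 42 fps minimums quoted above. The function name `percent_gap` is made up for illustration. The point is that the size of the gap depends on which card you divide by.

```python
def percent_gap(faster: float, slower: float) -> tuple[float, float]:
    """Return (deficit of the slower card, lead of the faster card), both in percent."""
    diff = faster - slower
    deficit = diff / faster * 100  # gap relative to the faster card's figure
    lead = diff / slower * 100     # gap relative to the slower card's figure
    return deficit, lead


# Min FPS figures taken from the post above: G1 Gaming = 50, Fury X = 42.
deficit, lead = percent_gap(50, 42)
print(f"Fury X trails the G1 by {deficit:.1f}%")
print(f"G1 leads the Fury X by {lead:.1f}%")
```

So the same 8 fps gap reads as 16% or roughly 19% depending on the baseline, which is worth keeping in mind when two people quote different percentages for the same chart.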

Fury X has a single HDMI 1.4 output which can output 1080p/60Hz or 4K/30Hz.
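A rough sketch of why HDMI 1.4 tops out at 4K/30Hz, assuming 8-bit colour, the standard CTA-861 total raster sizes (active pixels plus blanking), and the commonly cited maximum TMDS clocks of 340 MHz for HDMI 1.4 and 600 MHz for HDMI 2.0. The names `MODES`, `pixel_clock_mhz` and `fits` are just for illustration.

```python
# Maximum TMDS clock per link generation, in MHz (assumed: 8-bit colour, no chroma subsampling).
HDMI_1_4_MAX_MHZ = 340.0
HDMI_2_0_MAX_MHZ = 600.0

# (total horizontal pixels, total vertical lines, refresh rate) including blanking.
MODES = {
    "1080p/60Hz": (2200, 1125, 60),
    "4K/30Hz": (4400, 2250, 30),
    "4K/60Hz": (4400, 2250, 60),
}


def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: int) -> float:
    """Pixel clock needed to scan out one full raster per refresh, in MHz."""
    return h_total * v_total * refresh_hz / 1e6


def fits(mode: str, link_max_mhz: float) -> bool:
    """True if the mode's pixel clock is within the link's maximum clock."""
    return pixel_clock_mhz(*MODES[mode]) <= link_max_mhz


for mode, timing in MODES.items():
    clock = pixel_clock_mhz(*timing)
    print(f"{mode}: {clock:.1f} MHz, "
          f"HDMI 1.4: {fits(mode, HDMI_1_4_MAX_MHZ)}, "
          f"HDMI 2.0: {fits(mode, HDMI_2_0_MAX_MHZ)}")
```

Under those assumptions 4K/60Hz needs about 594 MHz, which is past what an HDMI 1.4 port can carry but inside HDMI 2.0's limit.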

If you look at both the relative increase in FPS and the fact that it is in 4K, then I would assume he is right.

Hyperbole much? "Humiliated"? Are you being serious or just trolling?

People still focus too much on FPS. The real issue ("humiliation" if you want) with Fury X's performance right now is consistency:

...exactly. That's the real issue.

But I suppose all of this will be history once we have FreeSync in pretty much every future (*cough*) monitor in existence, no?

Variable refresh rates don't help much with inconsistent frametimes. I mean, at least you won't have interference effects between the framerate and presentation rate, but it will still not be fluid if every other frame is delivered much more slowly.
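To illustrate the point about every other frame arriving late, here is a small sketch with two made-up frametime traces (hypothetical numbers, not benchmark data). Both average exactly the same FPS, but one of them alternates between fast and slow frames.

```python
from statistics import pstdev


def avg_fps(frametimes_ms):
    """Average FPS over a run: frames rendered divided by elapsed seconds."""
    return 1000 * len(frametimes_ms) / sum(frametimes_ms)


# Hypothetical frametime traces in milliseconds (illustrative only).
steady = [20] * 10           # every frame takes 20 ms
alternating = [10, 30] * 5   # every other frame takes three times as long

for name, trace in (("steady", steady), ("alternating", alternating)):
    print(f"{name}: {avg_fps(trace):.0f} fps avg, "
          f"worst frame {max(trace)} ms, "
          f"frametime std dev {pstdev(trace):.1f} ms")
```

Both traces report 50 fps on an FPS chart, yet the alternating one has a 30 ms worst frame and a 10 ms standard deviation. A variable refresh display would show each frame as soon as it is ready, but the uneven pacing itself is still there.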

How much is that factory overclocked 980ti compared to the FuryX? $100-150 more?

Dude, I can see why you get called out in AMD threads. You're a bit much and on my ignore list now.

I'm not sure whether you are serious, but I think that the stutters would still be pretty annoying even with a FreeSync solution.

Thanks for the explanation. Yes, seems logical. Things are looking even worse.

In Switzerland, the Gigabyte Gaming is only 20 CHF more expensive than the Fury X.

The EVGA GTX 980 Ti is 30 CHF less expensive.

Durante, is there any possibility for AMD to compare favourably to the 980 Ti at this point?

As I said much earlier in the thread, it already competes pretty well in noise, temperature, and FPS at 4k.

If they lower the price a bit and fix the frametime variance in software (if that is possible) it could be a valid choice for 4k gaming. Both of these really need to happen though before I'd suggest it to anyone (unless someone really needs this particular form factor).

This son of a bitch card. Gotta go return it because of faulty DP 1.2 ports (runs fine at DP 1.1 spec, but I ain't got time for 30Hz)... keeps getting sold out (replacement waiting on order), so I've been using my old Radeon card as my main for the last week and a half.

Fuckin' bummed I made the switch to Nvidia.

Curious, are you getting black screens at Windows logon, and sometimes a "no input source" black screen anywhere, with DP?

What I find interesting is how the 295X holds up. Dual card, sure but it's putting in work.

You can get a stock Ti to clock at those speeds pretty easily, though. In fact you can overclock that card another 10-15% on top of what it's already clocked at.