Senua


Looks like Moore's Law was right the entire time too. A lot of people were down on his info for the longest time.

He was wrong as fuck about Ampere


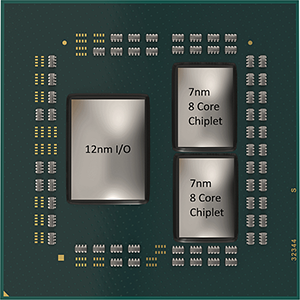

This is the first iteration. A really nice foundation to build off of. People took notice with Zen. Zen 2 was when the 'switch' started. Zen 3 is domination.

I think the Radeon launch success/failure will hinge on the reviewers.

Think back to Ryzen: it only seemed to get popular after a variety of YouTubers started switching to Ryzen for their own actual builds. Up until that point, most of them were like "yeah ryzen is great! (but I'm still using an i7/i9)", and it all felt a bit hypocritical: encouraging people to buy X while using Y because they want that sweet, sweet affiliate cut. I get it, but yeah, it's obviously more reassuring if they use it themselves without being forced.

The real proof of the pudding will be whether influencers actually start using Radeon themselves.

Where are the games that use the DXR API instead of proprietary green crap?

Where are the ray tracing performance benchmarks?

Yeah.

Pretty sure most games, at least higher-budget titles, are going to have RT going forward.

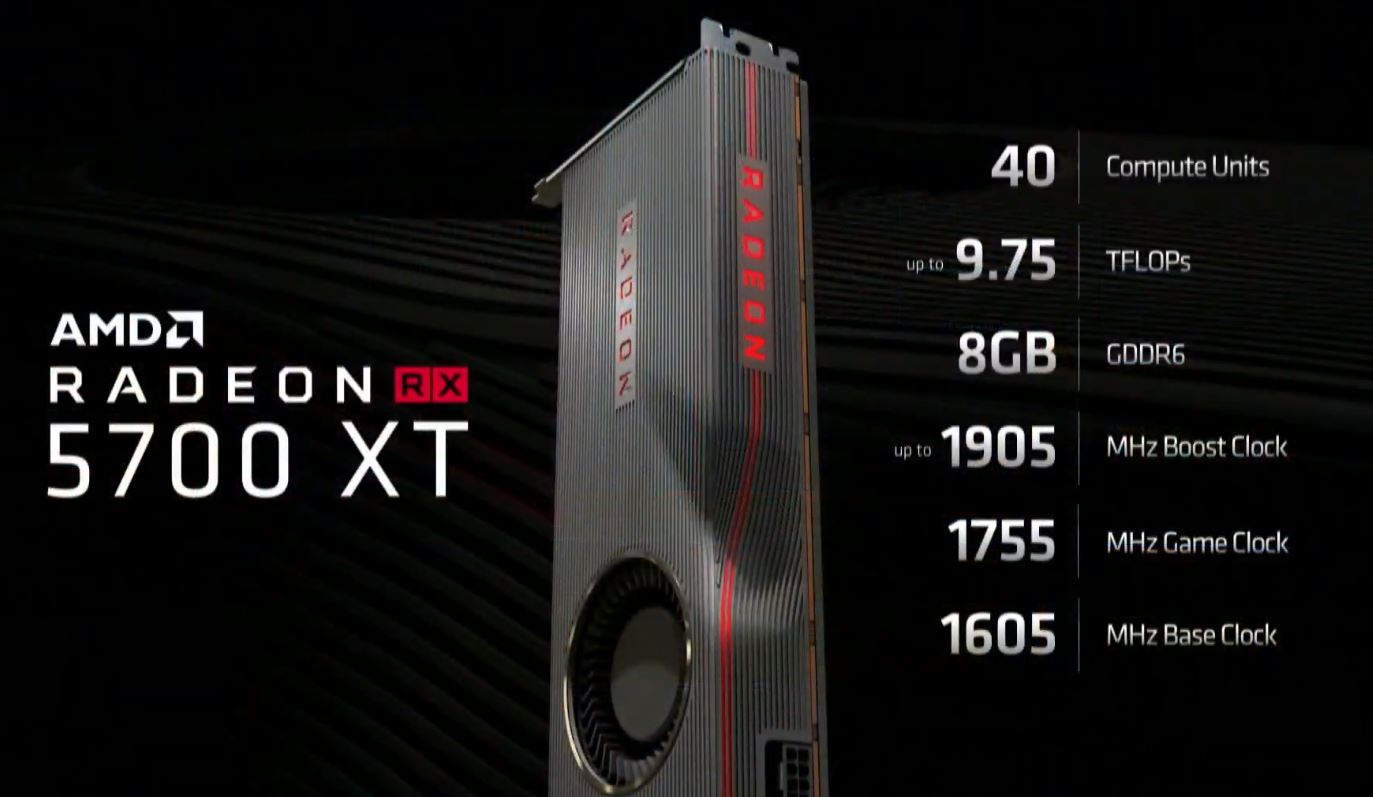

Giving the PS5 the benefit of the doubt and using the upper end of 2015 MHz, the PS5's actual sustained TFLOP count would be 9.28 TFLOPS.
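For reference, peak FP32 throughput on RDNA-style GPUs is usually computed as CUs × 64 shaders per CU × 2 FLOPs per clock (one fused multiply-add) × clock. The sketch below assumes that formula and the PS5's public spec of 36 CUs. At 2.015 GHz it gives 9,285 GFLOPS (the ~9.28 TFLOPS above, 9.29 if rounded rather than truncated), and at Sony's 2.23 GHz cap it reproduces the official 10.28 TFLOPS. It is a peak-throughput estimate, not a measure of real game performance.

```python
def fp32_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPS for an RDNA-style GPU.

    Assumes 64 shaders (stream processors) per CU and 2 FLOPs per
    shader per clock (one fused multiply-add).
    """
    shaders_per_cu = 64
    flops_per_clock = 2
    gflops = compute_units * shaders_per_cu * flops_per_clock * clock_ghz
    return gflops / 1000.0


PS5_CUS = 36  # public PS5 GPU spec

for label, clock in [("Sony's 2.23 GHz cap", 2.23),
                     ("2.015 GHz sustained (thread's assumption)", 2.015)]:
    print(f"{label}: {fp32_tflops(PS5_CUS, clock):.3f} TFLOPS")
```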

Still a bit too early to know this for a fact, but the facts that we have now are definitely pointing STRONGLY in this direction.

Good analysis from Buildzoid as usual

That's fair. Everything I'm saying is still early and subject to change. We won't truly KNOW until the GPUs are out in the wild and tested.

But at this point, IMO, it looks like all of those sky high clocks are just fantasy.

Today, AMD announced their new GPUs with game clocks (sustained performance) of 1.8 to 2.0 GHz. This is NORMAL: GPUs have been able to hit these frequencies and hold them for years.

2.1 GHz has been the upper end, but possible.

2.2 GHz: I don't think there has been ANY GPU made so far that can sustain this clock. Even a watercooled GPU can't quite do it.

So when we hear rumors of RDNA2 GPUs that can run at 2.2 to 2.5 GHz, that represents a range that so far has only been possible with liquid nitrogen.

I'm not saying these clocks are impossible to hit without LN2 (clocks get faster over time, and eventually we'll get there). But I am saying that if a GPU says 2.4 GHz on the spec sheet but in reality can only blip up to that frequency for a few milliseconds, that just doesn't count for anything IMO.

If Sony's RT is indeed the same RDNA2 implementation, then it's DOA. It's 100% dependent on CU count, as there is 1 Ray Accelerator per CU. That would give the XSX a roughly 45% advantage (52 vs 36 CUs), although the XSX runs at slower clocks, which shrinks the advantage overall. Still, I'd guess 30% or more.

Links?

True, but for RT it’s still a tangible difference, as RT is power heavy.

If those CUs run >20% more cycles in the same time budget, it's exactly the same difference as with TFLOPs.
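A rough way to put numbers on this Series X vs PS5 RT argument, assuming (as a simplification) that RT throughput scales linearly with Ray Accelerator count (one per CU) times clock. The CU counts and clocks are the public specs (Series X: 52 CUs at a fixed 1.825 GHz; PS5: 36 CUs at up to 2.23 GHz), and the 2.015 GHz case is the sustained clock assumed earlier in the thread. This is a back-of-the-envelope model, not a benchmark.

```python
def rt_throughput(compute_units: int, clock_ghz: float) -> float:
    """Relative ray-tracing throughput under a simple linear model:
    one Ray Accelerator per CU, each doing work proportional to clock."""
    return compute_units * clock_ghz


# Public specs: Series X runs 52 CUs at a fixed 1.825 GHz; PS5 has 36 CUs.
XSX_CUS, XSX_CLOCK_GHZ = 52, 1.825
PS5_CUS = 36

scenarios = {
    "CU count only (equal clocks)": XSX_CLOCK_GHZ,
    "PS5 at its 2.23 GHz cap": 2.23,
    "PS5 sustaining 2.015 GHz (thread's assumption)": 2.015,
}

xsx = rt_throughput(XSX_CUS, XSX_CLOCK_GHZ)
for label, ps5_clock in scenarios.items():
    # Advantage expressed as the fraction by which Series X exceeds PS5.
    advantage = xsx / rt_throughput(PS5_CUS, ps5_clock) - 1
    print(f"{label}: Series X ahead by {advantage:.1%}")
```

Under this model the gap is about 44% on CU count alone, shrinks to about 18% if the PS5 holds its 2.23 GHz cap (the same ratio as the TFLOPS figures), and only reaches the 30%+ range if the PS5 really sustains closer to 2.015 GHz.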

AMD's own footnote:

Boost Clock Frequency is the maximum frequency achievable on the GPU running a bursty workload. Boost clock achievability, frequency, and sustainability will vary based on several factors, including but not limited to: thermal conditions and variation in applications and workloads. GD-151

‘Game Frequency’ is the expected GPU clock when running typical gaming applications, set to typical TGP (Total Graphics Power). Actual individual game clock results may vary. GD-147


Did AMD say AIB partner cards are launching on Nov. 18, or just AMD editions? Any indication of when AIB partners are allowed to unveil their cards and specs?

Yeah, you mentioned it, but frequency plays a part.

The comparison is pretty much 1:1 on the 6000 series cards because clock speeds are similar across the board; however, the PS5's GPU clock is higher, which makes a direct comparison more difficult. That said, the Series X should have an advantage here if Microsoft can get their SDK in order.

Wait for AIB card announcements.

All the leaks we had in the last couple of weeks originated from AIBs or people who managed to get their hands on AIB samples.

AMD reference cards almost always clock in lower than what the AIBs manage to squeeze out.

Since when is Nvidia developing games? It's up to devs to use these features, and as an Nvidia owner right now, I don't care how it runs on AMD. I just want good-looking, well-running games.

My favorite part about this video is when he goes over the Infinity Cache and immediately wonders what bullshit graphical feature Nvidia might come up with that intentionally tanks performance on the AMD cards.

You're welcome to answer this at a later point:

What you said is complete nonsense. You just threw a bunch of technology buzzwords at me.

You could write a decent Star Trek episode: "We'll channel the tachyons through the deflector array to charge the Klingon time crystals."

Why don't you drink some valerian or something?

I think anyone still believing in/hoping for the 2.5 GHz clock is setting himself up for a huge disappointment.

Oh, c'mon. Nvidia has played dirty for as long as any of us can remember, like when they forced Ubisoft to remove DirectX 10.1 from Assassin's Creed 1 to make it compliant with some stupid "The Way It's Meant to Be Played" thing, or something like that, just because it was running way better on AMD hardware. The list is immense: the tessellation fiasco, the PhysX launch and so on. They always do something to sucker-punch AMD and everybody knows it, even if it's heavily protected by NDAs.

You are just overestimating your 3900X.



Nvidia better not take it lightly. AMD will only get better. Crazy to think we have Intel joining the fray as well.

It says that RTX 3000 series will be available to buy on 29th of October, like, as if I care anymore

You can order, pay and expect to get it; they just won't ship anything.

"It's OK Anna, our drivers are still better"

chuckled at this bit lol

I've got zero issues with Nvidia partnering with different game devs so they implement either new graphical options developed in-house by Nvidia or features like Ansel, as long as it's just to make its cards more attractive to consumers by virtue of those additions. I don't like it when Nvidia does these partnerships and then uses them to harm its competitor, like locking GPU-accelerated PhysX to Nvidia cards for a long time for no non-bullshit reason. On that note, I'm really curious whether the AMD cards that support ray tracing are going to be able to play the current crop of games that support it through RTX without it having to be patched in by the developer.

AMD got good cards but failed in the most important aspect... the price.

The RX 6800 should be competing with the RTX 3070 in the price range, instead it's 80-100€ more.

Greedy AMD.

Well, look at it like this: you can buy a 3070 for $500 with 8GB VRAM, or for just $80 more you can get an extra 10-15% performance along with double the VRAM at 16GB.

The 6800 makes the 3070 completely redundant. The 3070 can't compete on performance and has only half the VRAM, all for an $80 saving? I think it would have been better if AMD had priced the 6800 at $550 to really bring the heat, but $580 is still reasonable.

Why would someone buy a 3070 knowing it has only 8GB VRAM in 2020/2021 when for only $80 more you get double VRAM and go up almost an entire tier in performance?

Now that the dust has settled, something to note about the 6900XT is that the benchmarks had SAM + Rage Mode enabled.

So Rage Mode is a better version of automatic overclocking, and it can be done in the Radeon control panel without voiding the warranty.

This means it should be a conservative OC compared to an AIB factory OC or a manual OC of the reference design, though it will probably increase the TBP by some small amount. It's still likely behind the 3090 in TBP, and it's nice that everyday gamers can use this feature to gain a few extra % of performance essentially for "free", without needing to know how to OC their card.

The SAM stuff seems like a really cool synergy feature. Granted, this will depend on things like RAM speed/quality, your mobo, and which tier of Ryzen chip you have, and as we know it's only supported on Ryzen 5000 series CPUs and seemingly 500-series mobos, but it seems to give a nice performance boost pretty much for free.

If you remove these two features, the 6900XT is slightly behind the 3090, unlike in the official slides, so this will likely show up in third-party benchmarks/reviews. Just to set expectations correctly.

Granted, the fact that it can nip at the heels of 3090-level performance for $500 less is pretty amazing. What's even crazier is that it does so with 50 fewer watts of power draw than the 3090.

These cards (6000 series) seem to have a lot of OC headroom. You might ask: "Well, why didn't AMD just do what Nvidia did and push the clocks/TBP close to max for that extra performance?"

Well, it seems that AMD wanted to stay under a 300W power draw target. They wanted the efficiency crown, and they likely designed the reference models around the properties of their reference cooler, which might end up running hot and loud once you go above 300W.

So if you can push these cards up to 3090 levels of power draw with an OC, they would likely pretty much match it. It will be interesting to compare the top OC'd AIB 3090 to the top OC'd 6900XT (once AIB partners are allowed to make them). The 6900XT might end up pulling ahead if there is as much OC headroom as implied.

Plus if you do happen to have the latest Ryzen/MOBO in your rig too you can gain a nice bit of extra performance for free with this cool new SAM stuff.

I wonder how much extra perf Rage Mode would give on an already aggressively OC'd AIB model?

Anyway, really interesting times ahead of us! Just don't expect a 6900XT without Rage Mode+SAM to match the 3090; without those enabled it will most likely be a tiny bit behind.

So I guess you would consider RDNA2 to really be more like a Zen Plus rather than a Zen 2 equivalent in GPUs? If so, that means RDNA3 will be the Zen 2 moment.


+16% on price is not too much to ask for +18% performance and double the VRAM.
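To make the value argument concrete, here is a small sketch of relative performance per dollar using the $500 (RTX 3070) and $580 (RX 6800) prices and the +10%, +15% and +18% uplift figures quoted in this thread. The uplift numbers are posters' estimates, not measured results, and the function name is just illustrative. A ratio above 1.0 means the 6800 delivers more performance per dollar than the 3070.

```python
def relative_perf_per_dollar(price_a: float, price_b: float, perf_gain_b: float) -> float:
    """Perf-per-dollar of card B relative to card A (1.0 = parity).

    perf_gain_b is B's performance uplift over A, e.g. 0.18 for +18%.
    """
    return (1 + perf_gain_b) / (price_b / price_a)


RTX_3070_USD = 500  # prices as quoted in the thread
RX_6800_USD = 580

premium = RX_6800_USD / RTX_3070_USD - 1
print(f"price premium: {premium:.0%}")

for gain in (0.10, 0.15, 0.18):
    ratio = relative_perf_per_dollar(RTX_3070_USD, RX_6800_USD, gain)
    print(f"+{gain:.0%} perf -> {ratio:.3f}x the 3070's perf per dollar")
```

At +18% the 6800 comes out slightly ahead (about 1.017x); at +10-15% it lands just under parity, before counting the extra 8GB of VRAM.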

RDNA1 was meh: much better than its GCN predecessors, but still far behind the competition.

When people try to tell you how great DLSS is, remember it's hype:

I was impressed by how the upscale in Death Stranding looks from 240p and up. 240p+DLSS is of course not a real use case, and the differences become quite underwhelming at higher resolutions. In your example, some lines look downright blurry when they shouldn't be. Pass.

Remember his PS5 upclock claims?


As one would expect from TAA: a blurrier picture.

Is that from DLSS 2.0?

Yes, quality mode, WD: Legion.

Btw, nobody has talked about VR performance yet, right? I wonder how the 6800XT will compare to the 3080 in a VR environment.

Don't care about ray tracing. I saw the Watch Dogs video and there was barely any difference.

Will buy the 6800XT card.
