ZywyPL

Banned

Was it though?

Granted they did not release a card to compete at the high end, I'll give you that, but the 5700XT launched at the same price as the 2060 (non super I think?) with performance on par with a 2070 for a much cheaper price.

This actually forced Nvidia to release super refreshes and drop the price.

Recently Hardware Unboxed released a video benchmarking the 5700XT with the most recent drivers (September 2020 drivers).

In this video the 5700XT gains additional performance and matches the 2070 Super across the benchmark suite. The fine wine effect is real.

I think that was a fairly impressive first step given how badly AMD's previous GPUs were performing. And don't try to rewrite history, it was incredibly competitive with its equivalent Nvidia tier GPU. You can look at the reviews at the time to see that. Not only that, but in a little over a year from release it has gained additional performance to compete almost perfectly with the 2070 Super. I would say that is pretty impressive.

Yeah, but people who wanted that level of rasterization-only performance had already bought a 1070/Ti or 1080/Ti years earlier, and many of them didn't look at Turing GPUs either, since the rasterization performance was on par with Pascal while the RT performance was seriously lacking. For anyone with 5-7 year old hardware who was really forced to upgrade in that period, the RX 5700/XT were good value, but for everyone else it was simply LTTP.

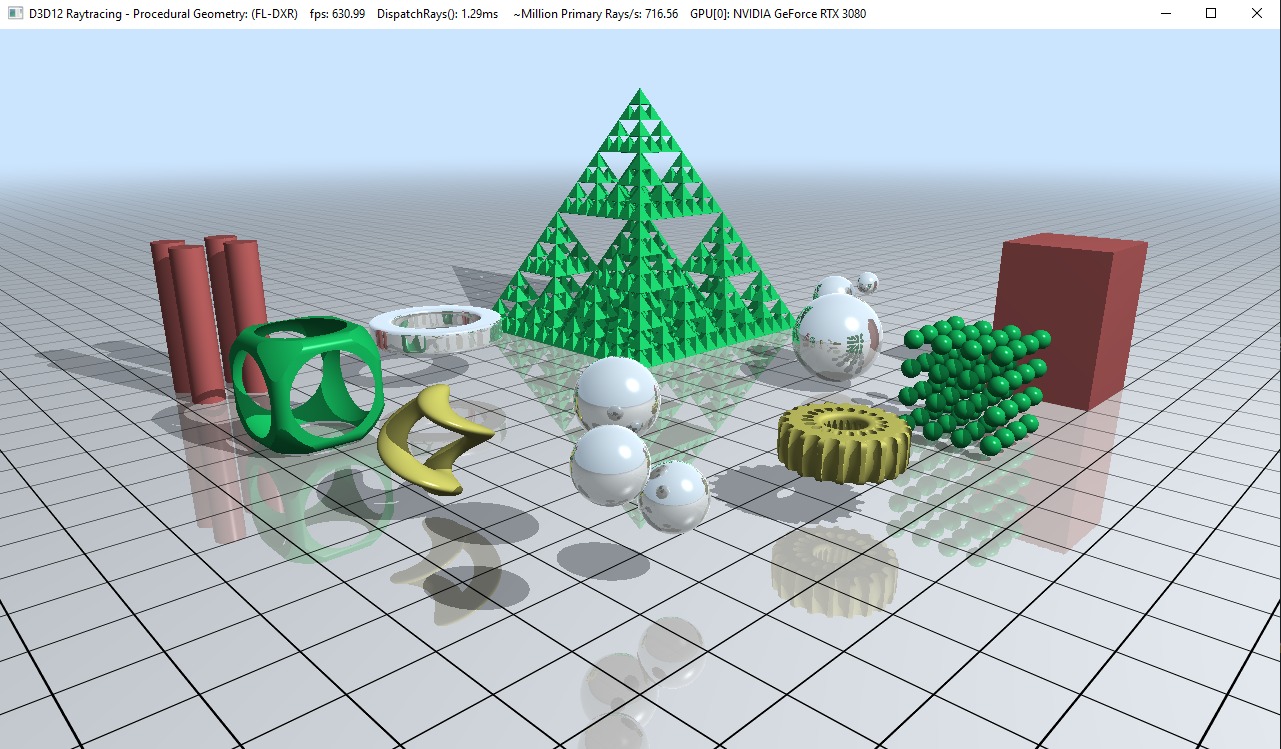

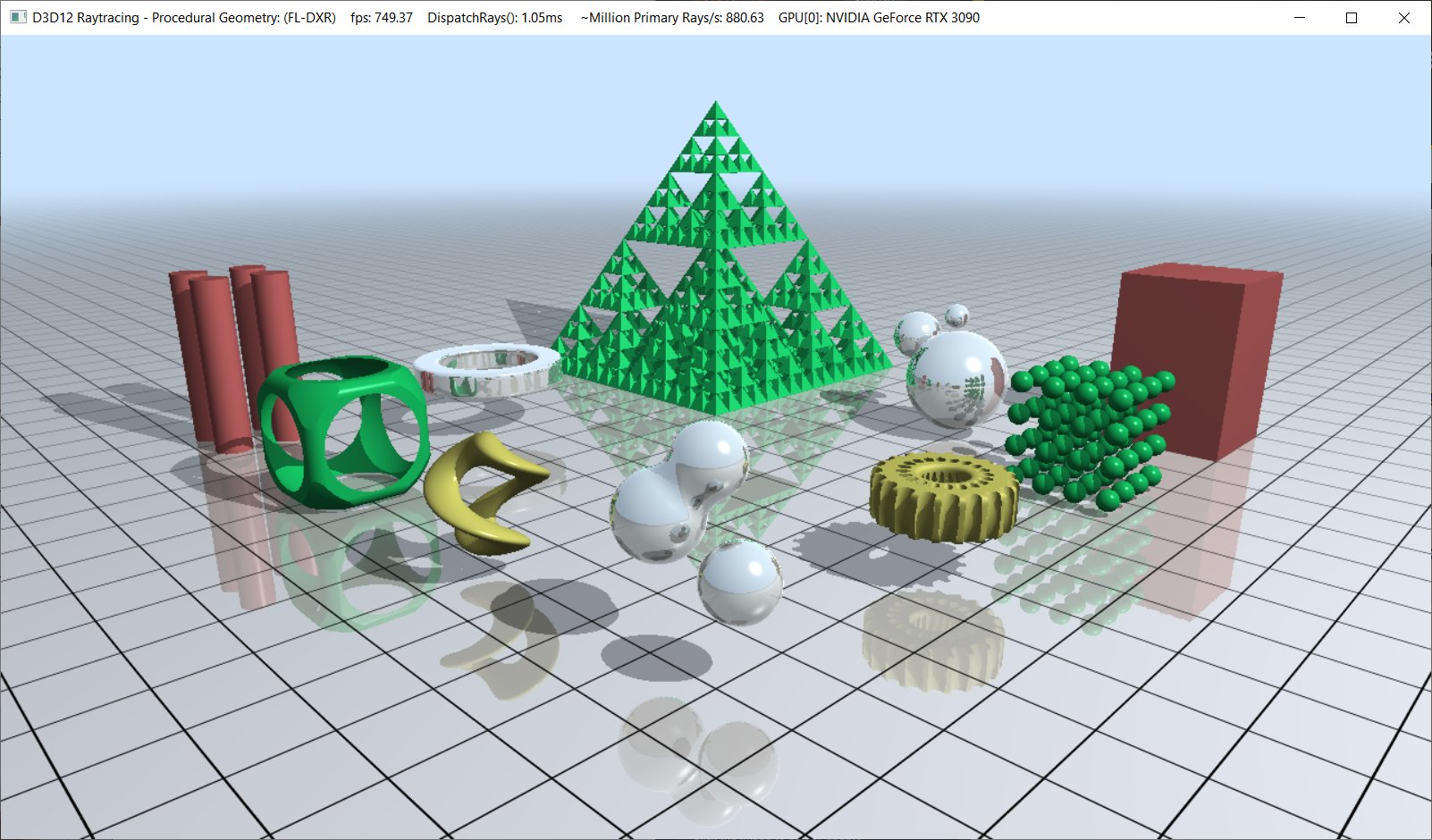

When ppl try to tell you how great DLSS is remember it's hype:

What are the FPS, though? Because that's DLSS's purpose. I agree that the initial hype was overblown, or rather, the feature didn't deliver what was promised. I was even one of the first to heavily criticize NV for what I thought back then was nothing but a poor gimmick instead of simply doubling the RT cores. But in its current state it doubles the performance with just a few drawbacks here or there, if any, depending on the title. And now AMD is working on a similar solution as well, and not without reason: it's simply a more efficient approach in terms of transistor budget/die size than just stacking up the CUs.