GHG

Member

An i5 3470 (4 core, 4 threads)

What the hell you waiting for?

Upgrade that shit and stop being a bitter old hag.

Yep, they landed a strong punch with the SteamDeck APU, delivered an even stronger one with the Ryzen 7 6800U SoC (super popular for handheld PCs), and a next generation APU could deliver a super strong uppercut that gets them a strong market hold.

A Zen4/RDNA3 APU combo for entry-level gaming laptops will be unmatched with DDR5 and PCIe 5. I think they will be the only company to offer 1440p/60fps+ on entry-level gaming. They really emphasized performance, power, and efficiency. I don't know too much about RDNA 3, but perhaps you can finally get ray tracing, along with machine learning/DLSS super sampling, on an entry-level laptop/PC at 60fps+.

The DirectStorage API with 1-4 sec load times is the icing on the cake.

Yep, they landed a strong punch with the SteamDeck APU, delivered an even stronger one with the Ryzen 7 6800U SoC (super popular for handheld PCs), and a next generation APU could deliver a super strong uppercut that gets them a strong market hold.

If I were AMD I would be strongly courting Nintendo for a semi-custom solution based on this APU (the lowest power variant possible with some minor Nintendo customisations). Worth considering, although it would be a big change and require emulation for Switch BC, but I think that would be doable… for older titles? Well, Nintendo could drip feed them to customers again.

An i5 3470 (4 core, 4 threads)

You're going to be GPU bound at 1440p?

I'm talking about the leap in performance from my perspective, probably my bad for not including it. Going from a 3700x to a 7700x would easily yield me a 15FPS increase or more even at 4k, provided I'm not totally GPU bound.

Are you new to this?

Please do some research before you post. You should know that even between the 5600x and 5900x, there is no gaming performance difference worth the $300 price gap. Not even 10 frames at 4k.

The same goes for Intel. For example, the i5 12600 and the i7 12700 perform about the same in gaming, yet there is a big price difference.

The differences between these CPUs are bigger in content creation, but that is not our topic here since we are focused on gaming.

When you look at the slide in the OP about the performance gain between the two high-end CPUs, the 12900k and 7950x, in gaming, then refer back to my previous post. It's not impressive or even close to being good in terms of gaming. They are not even close to 13th gen Intel at this rate.

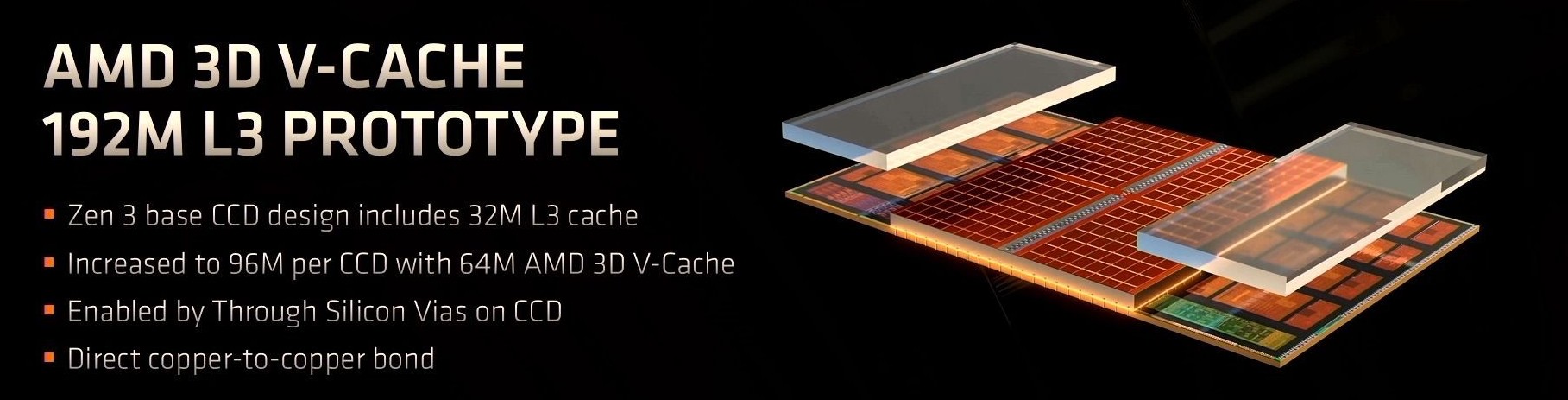

Which is why the 5800x3d is such a great product. Too bad it was released way too late. With the death of DDR4 coming soon and the high asking price, I can't really recommend it to anyone. If the 7800x3d is released next year, then it would be a great product since it technically should hold till 2025 when the new AMD Zen5, or whatever they wanna call it, is out.

On average, the 12900K was around 12% faster than the 11900K in 1080p gaming. The 11900K was around 1% (!) faster than the 10900K. If Raptor Lake manages even a 10% boost it will be impressive given that it is on the same process with no major architectural changes.

Then the 7800X3D is going to arrive...

No worries.

I'm talking about the leap in performance from my perspective, probably my bad for not including it. Going from a 3700x to a 7700x would easily yield me a 15FPS increase or more even at 4k, provided I'm not totally GPU bound.

There are already circumstances where I wished I could lock certain games at 4k120 on my C1, but couldn't because of the CPU as I'm definitely not GPU bound with a 3080TI.

I was addressing the claim that AMD would get "destroyed" by the 13900K. After my post I found an AMD slide that claims the Zen 4 core can beat a 12900K (according to the footnotes, a 12900KS) by 11% in gaming.

Which is why the 5800x3d is such a great product. Too bad it was released way too late. With the death of DDR4 coming soon and the high asking price, I can't really recommend it to anyone. If the 7800x3d is released next year, then it would be a great product since it technically should hold till 2025 when the new AMD Zen5, or whatever they wanna call it, is out.

But what they announced today? Nope, not worth it. Add the cost of the new motherboard and DDR5 RAM and you're spending $900 US, at least $1000 after tax. Just nope lol

We don't know. In theory, you are right, but I do not see Intel releasing something inferior to AMD at least 4 months after AMD's release.

I was addressing the claim that AMD would get "destroyed" by the 13900K. After my post I found an AMD slide that claims the Zen 4 core can beat a 12900K (according to the footnotes, a 12900KS) by 11% in gaming.

https://www.techpowerup.com/298318/amd-announces-ryzen-7000-series-zen-4-desktop-processors

So if Raptor Lake is ~10% faster than its predecessor that should be an effective tie with Zen 4. Or if it's 15% faster, it will beat Zen 4 by <5%. Hardly a destruction.
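A quick sanity check of that arithmetic, as a rough sketch. It assumes AMD's claimed 11% gaming lead over the 12900K(S) holds up and that both uplifts are measured against the same baseline. The function name and the two Raptor Lake uplift figures are purely illustrative, not benchmark results.

```python
def relative_lead(uplift_a: float, uplift_b: float) -> float:
    """Return how much faster A is than B, in percent, when both
    uplifts are measured against the same baseline."""
    return ((1 + uplift_a) / (1 + uplift_b) - 1) * 100


ZEN4_CLAIMED = 0.11  # AMD's slide claim vs. the 12900K(S); assumed accurate here

for raptor_lake in (0.10, 0.15):  # hypothetical Raptor Lake uplifts
    lead = relative_lead(raptor_lake, ZEN4_CLAIMED)
    print(f"Raptor Lake +{raptor_lake:.0%}: {lead:+.1f}% vs Zen 4")
```

With those inputs the gap comes out at roughly -0.9% and +3.6%, so either scenario lands well under 5% in either direction.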

If you think about the last 10 years of Intel and AMD CPUs, the only case I can think of where you saw >25% performance improvement in gaming on average was with Zen 3. And that's really only at low resolutions. Zen 3 is a special case, because Zen 2 was being significantly held back by the dual CCX design, which caused big latency penalties. By moving all 8 cores to one CCX and doubling the shared L3 cache, AMD gained a massive advantage over their old architecture.

We don't know. In theory, you are right, but I do not see Intel releasing something inferior to AMD at least 4 months after AMD's release.

We could be more disappointed with Intel than what AMD had to offer.

From what we know so far, AMD's next generational leap is a joke, and there is no real leap in going to Zen 4. You would think that switching socket and socket design, adding DDR5 RAM, and on top of that a much higher TDP would get you a huge boost in performance. But nope, that didn't happen.

To me, it sounds like it's the same processor under a different socket, and the DDR5 and the higher TDP are the reason we are getting this uplift (I know it's not really the case but honestly, it almost sounds like it, as if the engineering team was playing with their balls the last 2 years I guess).

240Hz+ gaming. Also, it's a CPU test, so you want to lower the resolution to not be GPU bound.

Who the hell's playing at 1080p, with that kind of CPU in their rig?

Why can't AMD make efficiency cores just like Raptor Lake? Also, is Intel's tile-based architecture just another word salad for APUs?

They can but why would one need those? Interestingly I could actually buy the 7900x and downclock it to 5900x levels and it would use 62% lower power at the same perf.
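To put that 62% figure in perf-per-watt terms, here is a back-of-the-envelope sketch. It takes the 62% number from the post above at face value (nothing is measured here), and the function name is made up for illustration.

```python
def perf_per_watt_gain(power_reduction: float, perf_ratio: float = 1.0) -> float:
    """Perf/W multiplier when power drops by `power_reduction` (0 to 1)
    and performance changes by `perf_ratio` (1.0 means same performance)."""
    if not 0 <= power_reduction < 1:
        raise ValueError("power_reduction must be in [0, 1)")
    return perf_ratio / (1 - power_reduction)


# 62% lower power at the same performance, as claimed above (unverified)
print(f"{perf_per_watt_gain(0.62):.2f}x perf per watt")
```

If the claim holds, that is about 2.6x the performance per watt at 5900x-level performance.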

Because they don't seem to need them? Zen seems to scale very well, only really falling off a bit at the very high end (high clocks and power consumption). Impressive for a single architecture.

Why can't AMD make efficiency cores just like Raptor Lake? Also, is Intel's tile-based architecture just another word salad for APUs?

With meteor lake, they are going to put some sort of ray tracing cores/processing embedded within the CPU core. I have a feeling zen 5 is all going to be about AI and ML.

Intel already has efficiency cores in laptops. They seem to do jack shit for now though.

This is just a guess but:

1) The efficiency cores of Raptor Lake are contributing to higher multi-threaded Cinebench scores

2) The efficiency cores would be useful for laptops

240Hz+ gaming. Also, it's a CPU test, so you want to lower the resolution to not be GPU bound.

Do we think AMD will do an 8-core 65W part (possibly lower cache/frequency)?

Want a part with a lower TDP than what I own today.

Most AMD processors have a lower power state that you can lock the processor to in the BIOS. Most of the 65W parts had a profile for 45W, etc. Maybe these will have that.

Undervolt the 7700X to ~65W and it'll likely still outperform the 5800X.

Do we think AMD will do an 8-core 65W part (possibly lower cache/frequency)?

Want a part with a lower TDP than what I own today.

Just lower settings to get 60fps @4k.

People being shocked about 1080p is so weird to me.

You don't like 120+ fps gameplay? Add RT on top and getting 120+ is getting really hard, really fast. If you have GPU horsepower to spare, you downsample from higher rez.

If you get a 4K monitor, you have to deal with lower performance or poor image scaling because your GPU is too slow for 60, 120 fps or more. I'm not dropping $2000+ on GPUs every 12-24 months.

I have money, I just think their current pricing, less than 10% cheaper than a non-gaming 4K monitor, is nuts.

LOL, according to the Steam survey 67% still play at 1080p. 1080p will still be here for a long time, especially since not everyone has the money to get 1440p monitors.

It's time that, instead of doing 65W and 35W variations of the same high-performance part, they add official motherboard presets so the CPUs conform to those power draws.

Do we think AMD will do an 8-core 65W part (possibly lower cache/frequency)?

Want a part with a lower TDP than what I own today.

Bunch of snobs in here. Sure, we're enthusiasts, but that's no excuse.

1080p --> 1440p is only really noticeable on bigger displays. On smaller displays you have to be looking for something to moan about.

1440p --> '4k' only really matters for large displays and proper '4k' is out of reach of most people.

Perfectly passable for gaming and watching videos though.

At 22" and below the difference is negligible, but at 27" 1080p is already soft. 1440p is somewhat of a sweet spot for that size. Then again, 1440p over 27" starts to become soft quite fast.

For anything over 27" this matters. For 32", 4k is imo already a must.
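The "soft at this size" argument is basically about pixel density, which is easy to compute. A minimal sketch, assuming standard 16:9 resolutions and ignoring viewing distance (which matters a lot in practice). The function name and the list of size/resolution pairs are just picked for illustration from the sizes discussed in this thread.

```python
import math


def ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch for a panel of the given resolution and diagonal size."""
    return math.hypot(width_px, height_px) / diagonal_in


RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440), "4k": (3840, 2160)}

# Size/resolution pairs loosely based on the posts above
for name, size in [("1080p", 22), ("1080p", 27), ("1440p", 27), ("1440p", 32), ("4k", 32)]:
    w, h = RESOLUTIONS[name]
    print(f'{name} @ {size}": {ppi(w, h, size):.0f} PPI')
```

That works out to roughly 100 PPI for 1080p at 22", 82 at 27", 109 for 1440p at 27", 92 for 1440p at 32", and 138 for 4k at 32", which lines up with 1080p looking soft at 27" and 1440p getting soft above that size.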

Perfectly passable for gaming and watching videos though.

Specific cutoff points weren't my point either. Quite a few members here are detached from reality (for most others).

There's nothing wrong with enjoying '4k', etc. but stuff like '1080p needs to die' is just ignorant and lacking in empathy.

Mate, that was like a decade ago. We don't live in the past.

Just wondering where was this cap when Athlons and Phenoms were out?

Don't live in the past, but probably were team Intel then, right?

Mate, that was like a decade ago. We don't live in the past.

Don't live in the past, but probably were team Intel then, right?

Was team AMD and team ATI back in the 2000s, switched and never went back.

Never been 'Team Intel'.

It's nice that AMD is giving a push for USB 4.0 with 40Gbps bandwidth:

However ...

USB 4.0 Version 2.0 Announced with 80Gbps of Bandwidth

Tbh I'm perfectly fine with 1080p (24 inch) unless the game has a really shitty AA solution.

Bunch of snobs in here. Sure, we're enthusiasts, but that's no excuse.

1080p is perfectly fine up to about 24", 27" at a push. This is not only being realistic but, as far as we know, how most PC gamers play.

480p --> 720p was a big and very noticeable jump.

720p (HD) --> 1080p (Full HD) was/has been a less noticeable jump, though is quite easy to notice if you try.

1080p --> 1440p is only really noticeable on bigger displays. On smaller displays you have to be looking for something to moan about.

1440p --> '4k' only really matters for large displays and proper '4k' is out of reach of most people.

'8k' is currently pointless for pretty much any consumer. Looks incredible though.