I guess there may still be some optimization work needed for Threadripper in games, including the two memory presets, but performance is not bad. It does pretty well in Tomb Raider, but some games like Dirt Rally don't know what to do with the 32 threads on the 1950X. Not that I'm considering the 1950X, but when more games start using more threads, and with some improvements to X399, I can see Threadripper scaling to better performance in games just like regular Ryzen. I'd personally love to see how game performance (and other workloads, of course) scales on the platform with higher-clocked memory like the Flare X 3600MHz, to be honest.

All in all, I see your point, since the 1800X is already a very viable alternative for gaming and non-gaming workloads. I just see the 1900X as an affordable entry point to a feature-rich HEDT platform (about $130 over the 1800X's price). It's tempting to say the least, but those mobo prices though...

I imagine there will be cheaper alternatives coming along shortly as well?

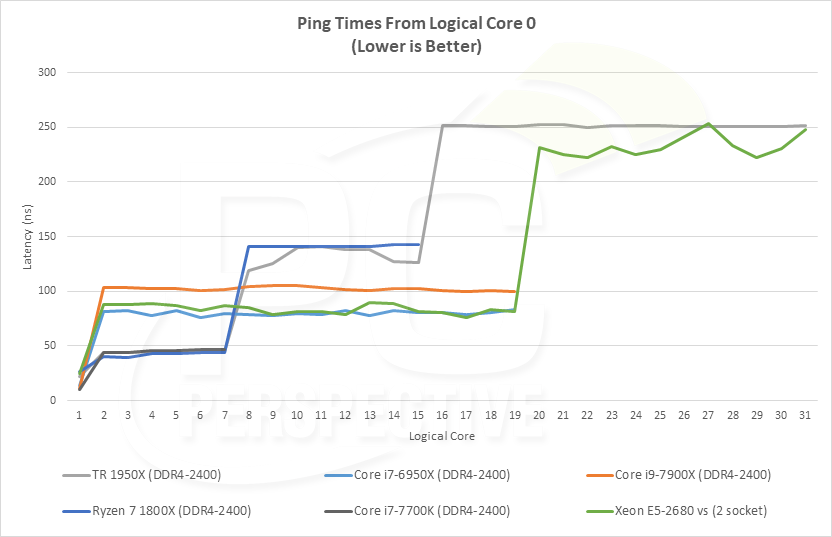

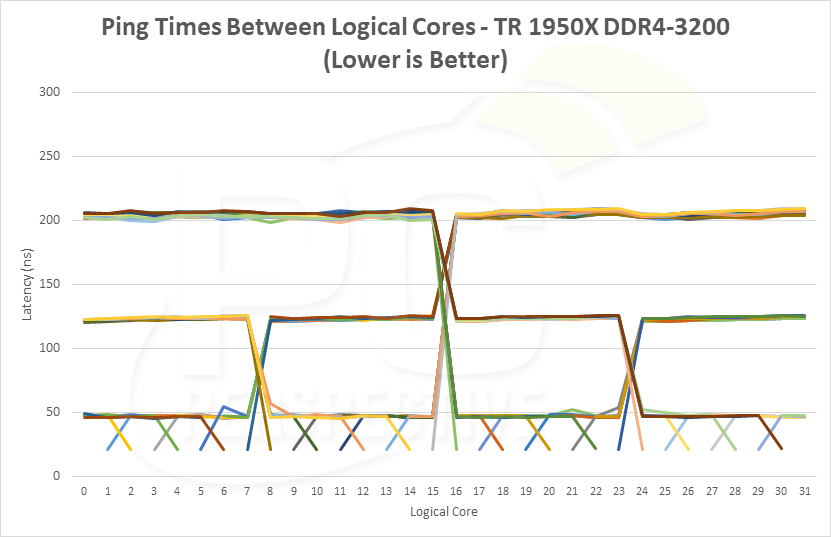

This is not like Ryzen where there is a (comparatively) small latency penalty for threads communicating across CCXes within the same die. This is significant latency across two dies in UMA mode.

In NUMA mode (game mode) Windows is basically told that the two dies are two separate CPUs, and my understanding is that anything not written explicitly to work across multiple CPUs will be restricted to one of them.

I really doubt that there will be much game support for NUMA Nodes.
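
If you want to experiment with keeping a game on one die by hand, the usual tool is a CPU affinity mask: one bit per logical processor. Here is a minimal sketch of how such a mask is built. The helper name `affinity_mask` is made up for illustration, and the assumption that logical CPUs 0-7 sit on the first die is exactly that, an assumption; check the real mapping on your system (for example with Sysinternals Coreinfo) before relying on it.

```python
def affinity_mask(logical_cpus):
    """Return a hex bitmask string with one bit set per logical CPU index."""
    mask = 0
    for cpu in logical_cpus:
        mask |= 1 << cpu  # set the bit that corresponds to this logical CPU
    return hex(mask)


# ASSUMPTION: logical CPUs 0-7 belong to the first die (4 cores x 2 threads).
# The real numbering depends on the system and must be verified.
first_die = range(0, 8)
print(affinity_mask(first_die))
```

A mask like this is the kind of value that Windows' `start /affinity` or Task Manager's affinity dialog works with, but whether pinning actually helps a given game is something you would have to test.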

With a 1700/X you get 4 cores per CCX, and two CCXes total.

With a 1900/X you get 2 cores per CCX, two CCXes per die, and two dies total.

PC Perspective covers this in their review.

However, since it only covers the 12-core 1920X and 16-core 1950X, it does not explain the severity of the issue with the 8-core 1900/X.

A 1920X still has six cores that it can dedicate to a game, and the 1950X has eight. The 1900/X will only have four, so game performance will be like an overclocked 1500X or 1400 non-X.
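
The core counts above are just multiplication, sketched below. The topology table uses the layouts described in this thread; the 3-cores-per-CCX figure for the 1920X is inferred from "six cores per die, two CCXes per die", and the names `TOPOLOGY`, `total_cores` and `cores_per_die` are only for illustration.

```python
# (cores per CCX, CCXes per die, dies), as described in this thread.
# ASSUMPTION: the 1920X entry is inferred from six cores per die.
TOPOLOGY = {
    "1700X": (4, 2, 1),
    "1900X": (2, 2, 2),
    "1920X": (3, 2, 2),
    "1950X": (4, 2, 2),
}


def total_cores(model):
    """All cores on the package."""
    per_ccx, ccx_per_die, dies = TOPOLOGY[model]
    return per_ccx * ccx_per_die * dies


def cores_per_die(model):
    """Cores a game is limited to when it stays on one die (NUMA mode)."""
    per_ccx, ccx_per_die, _dies = TOPOLOGY[model]
    return per_ccx * ccx_per_die


for model in TOPOLOGY:
    print(model, total_cores(model), cores_per_die(model))
```

So the 1700X and the 1900X both have eight cores in total, but only the 1700X can give all eight to a game without crossing a die boundary.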

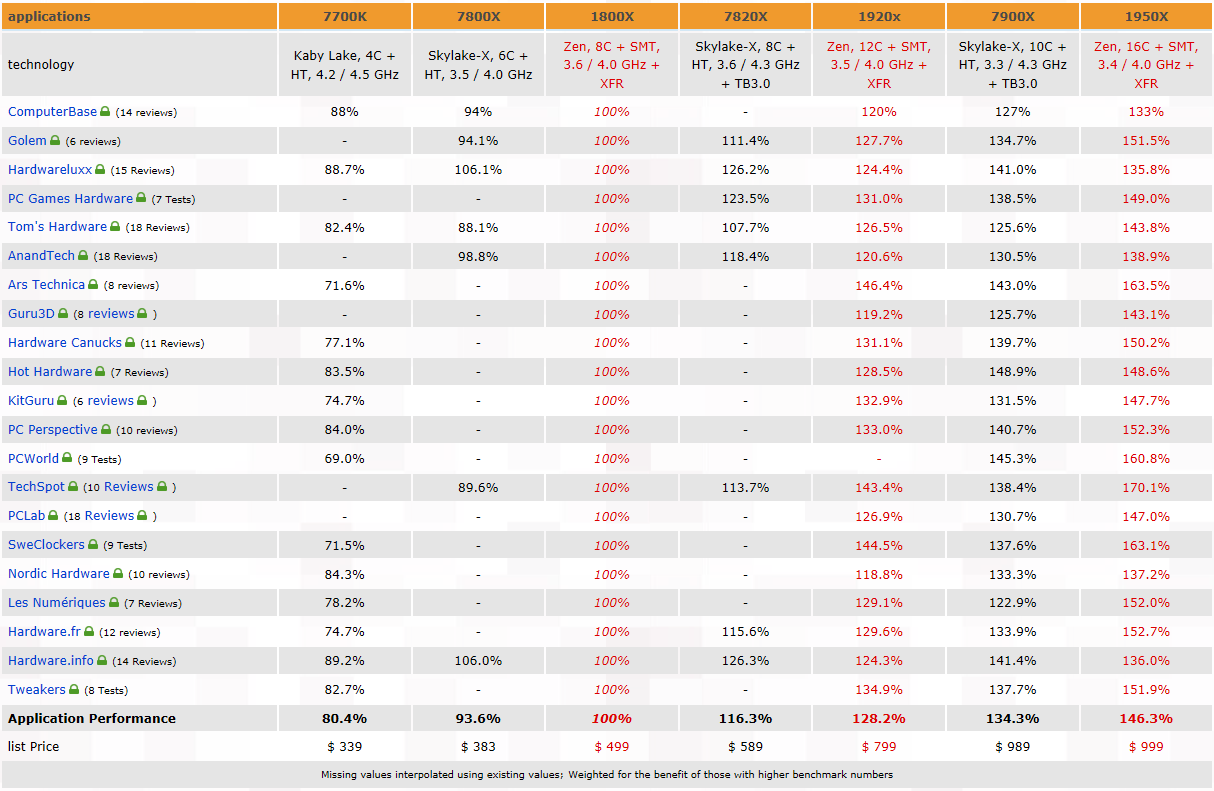

This chart does a good job comparing them.

When communication is contained within a CCX, Ryzen/Threadripper actually have slightly lower latency than the 7700K, and considerably lower latency than the 7900X.

However, cross-CCX communication has a big penalty that puts latency higher than the 7900X, even with faster memory.

Cross-die latency is significantly higher than that, similar to a dual-socket Intel system.

And it will be

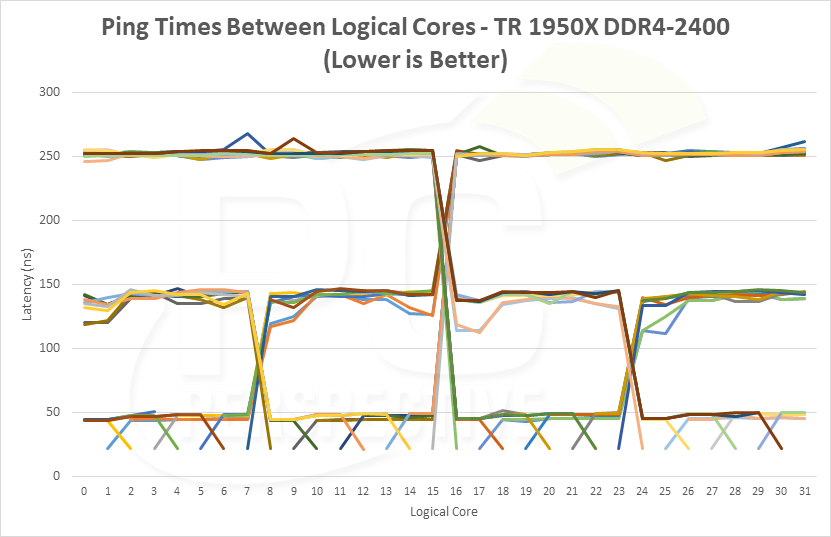

really important to buy faster memory for Threadripper compared to Ryzen.

Here we can see that the cross-CCX latency drops from 143ns to 125ns going from 2400MT/s memory to 3200MT/s (roughly 12.5%; there are diminishing returns above 2666MT/s).

But the cross-die latency drops from over 250ns to ~200ns (about 20%), which is significant. That brings latency lower than a dual-socket Intel system.
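
For anyone who wants to check the percentages, here is the arithmetic as a quick sketch. The numbers are the ones quoted above; the cross-die figures are approximate ("over 250ns" and "~200ns"), so treat that result as a rough estimate. The function name `percent_drop` is just for illustration.

```python
def percent_drop(before_ns, after_ns):
    """Relative latency reduction, in percent."""
    return 100 * (before_ns - after_ns) / before_ns


# Cross-CCX: 143ns at 2400MT/s down to 125ns at 3200MT/s
cross_ccx = percent_drop(143, 125)

# Cross-die: roughly 250ns down to roughly 200ns (approximate figures)
cross_die = percent_drop(250, 200)

print(round(cross_ccx, 1), round(cross_die, 1))
```

In absolute terms that is about 18ns saved across CCXes versus about 50ns across dies, which is why memory speed matters more on Threadripper.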

It's just not smart to buy an 8-core Threadripper unless you need the PCIe lanes or memory capacity/bandwidth, and your workload doesn't care about latency across threads (games do).

Giving games access to all 8 cores (UMA mode) could result in less consistent or possibly even worse performance than restricting them to 4 (NUMA mode).

If the only "High End" gaming I do is VR, would a Ryzen 7 be better or worse than an i7-6700K (running at stock)?

I can't find much on Ryzen's VR performance compared to an i7 specifically (although AMD claims a lead over the 7700K in VR performance).

Gamers Nexus did some testing back in April:

For the most part, there is no significant difference between the AMD R7 1700 ($330) and Intel i7-7700K ($345) in the test data. Both perform in a fashion which delivers a smooth, imperceptibly different experience. The performance is effectively equivalent, with regard to throughput to the HMDs. In the Rift games, Intel tended to do better, but those games (interestingly) are also traditional desktop games first, and VR games later. AMD was not dropping or missing warps in a way which changed the experience in either title, and AMD did show benefit (sometimes within margin of error, as illustrated) in some HTC Vive use cases. Because the headsets are locked to 90Hz, the unconstrained metrics have limited use beyond potentially trying to extrapolate future performance but even that is a bit dicey with VR. It is best to look at frametimes vs. intervals and drops/warp misses.

Either the Intel i7-7700K or AMD R7 1700 would provide an acceptable experience in VR. It will largely come down to GPU choice, given these two CPUs to choose from.

However, I'd wait until the 21st and see what Intel have to announce.

They've put a rush on Coffee Lake for desktops (there will apparently be a v2 launch in Q1 2018) and 6 cores without any kind of CCX (Zen) or mesh topology (X299) should kick everything's ass in pure gaming tests.

I am not dissatisfied with my 1700X system - especially since it has ECC memory while Intel restricts that to Pentiums, i3s, and Xeons - but I do feel like it would have been smarter to wait for the reaction to Ryzen before building a system, rather than buying one.

I'm mostly happy with its performance in games, but there is the occasional title which really seems to need the higher IPC that Intel offers, and that's disappointing.

I'm starting to think that with my next GPU upgrade, I may end up building a separate (Intel) gaming rig and keep this as a dedicated workstation/server.

I would prefer to have a single machine that does everything, but that just doesn't seem to be possible.