RoadHazard

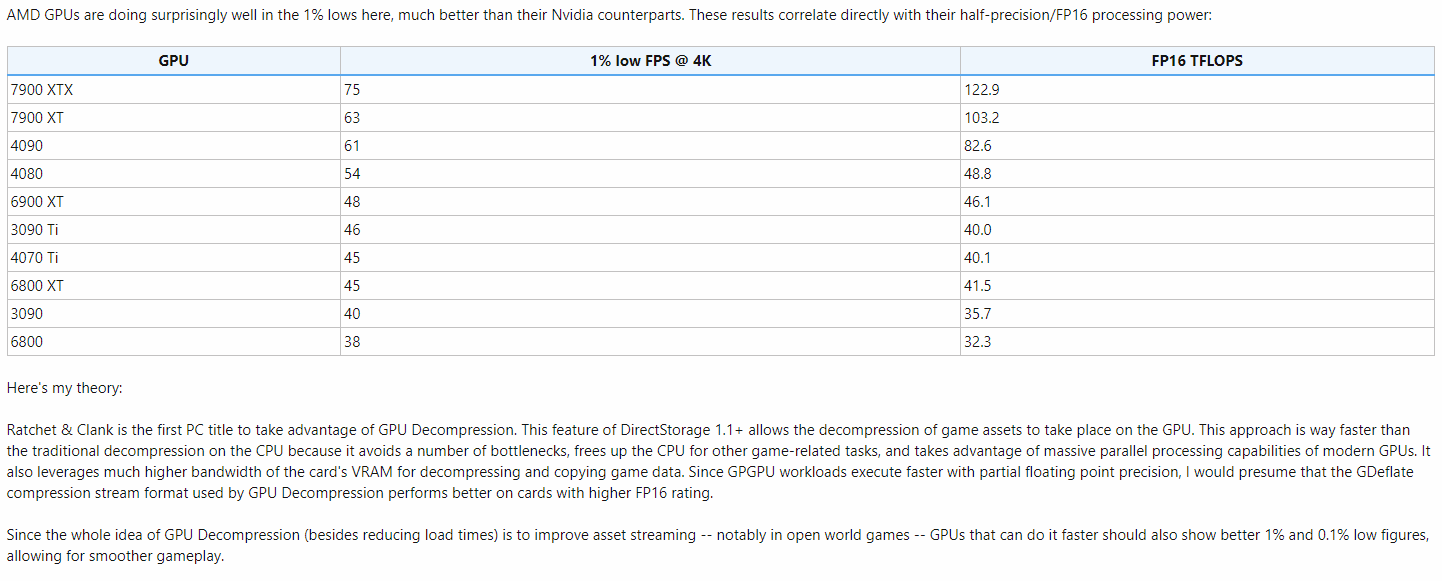

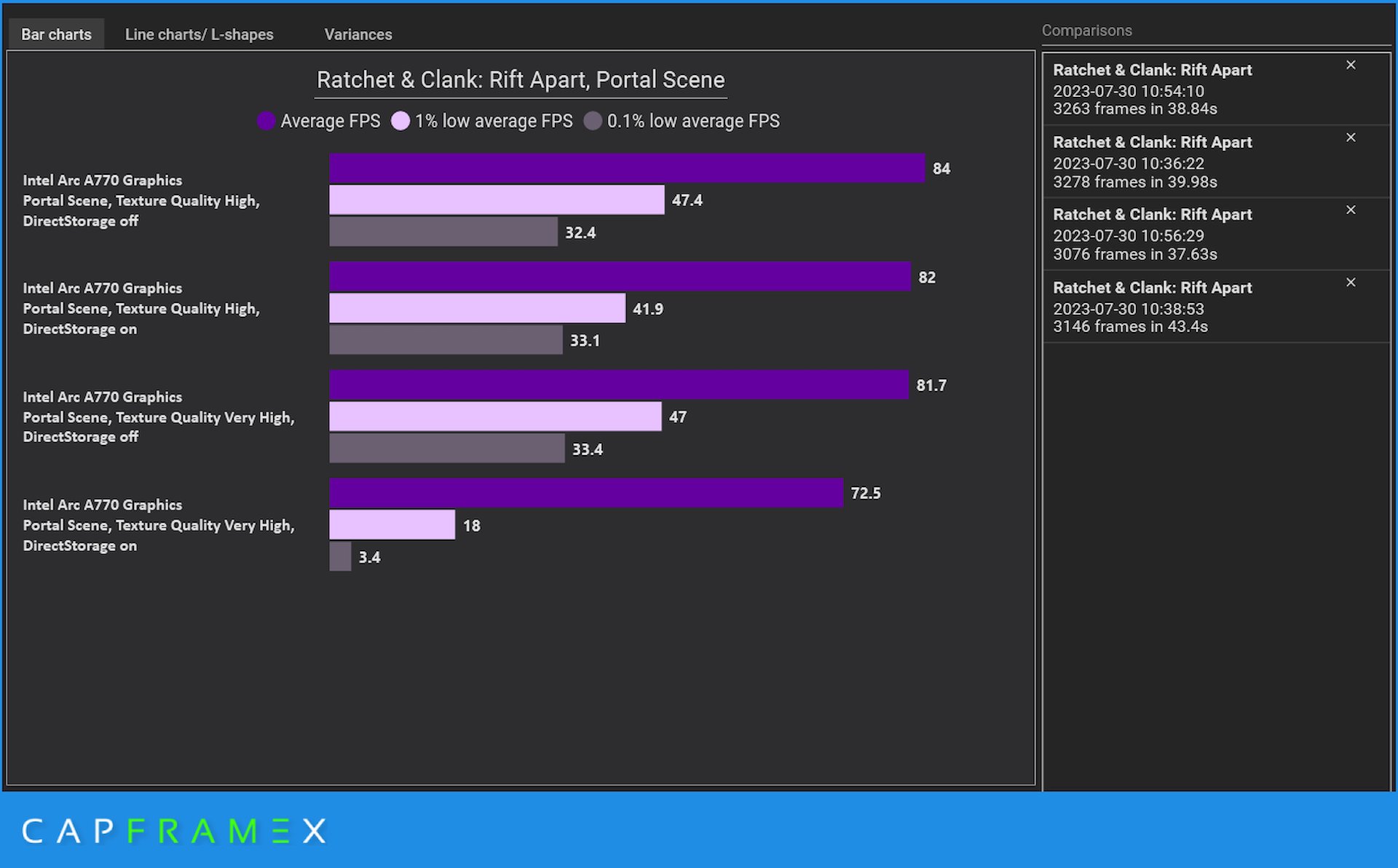

And DirectStorage doesn't degrade performance; otherwise, AMD would exhibit the same behavior, and we'd see tangible differences with RTX IO off on NVIDIA cards.

Isn't this because AMD cards don't even support it in hardware (the way NVIDIA cards do with RTX IO)?