For anyone who wants to investigate, I'm getting VERY puzzling results on PC. I know this drifts well off topic, and for my part the debate ends here, but I said I would test the PC version, so here are the results.

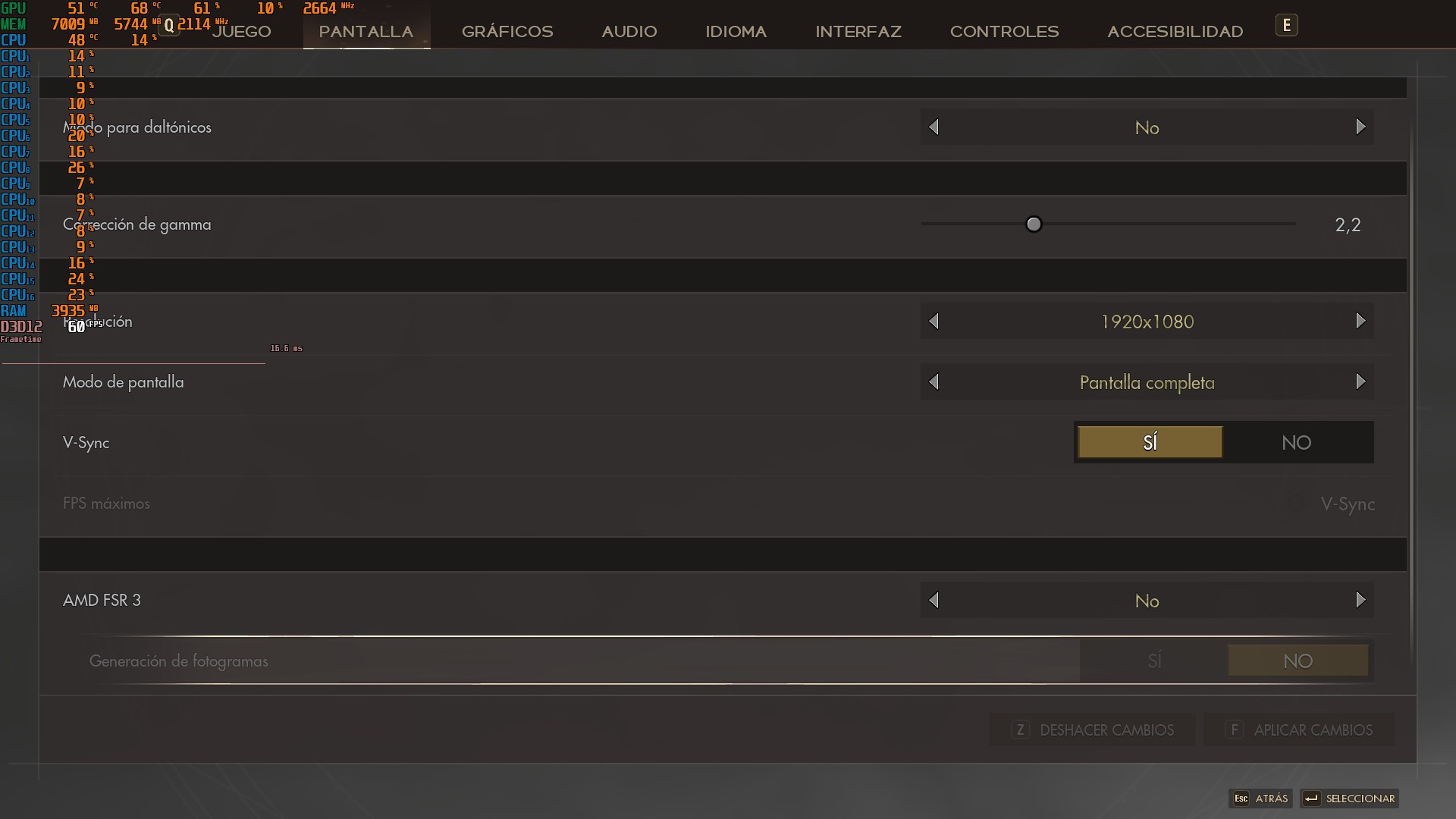

In this screenshot, I'm playing at 1080p with FSR 3 disabled, i.e. at native 1080p. Then I enabled FSR 3, and the result will surprise you:

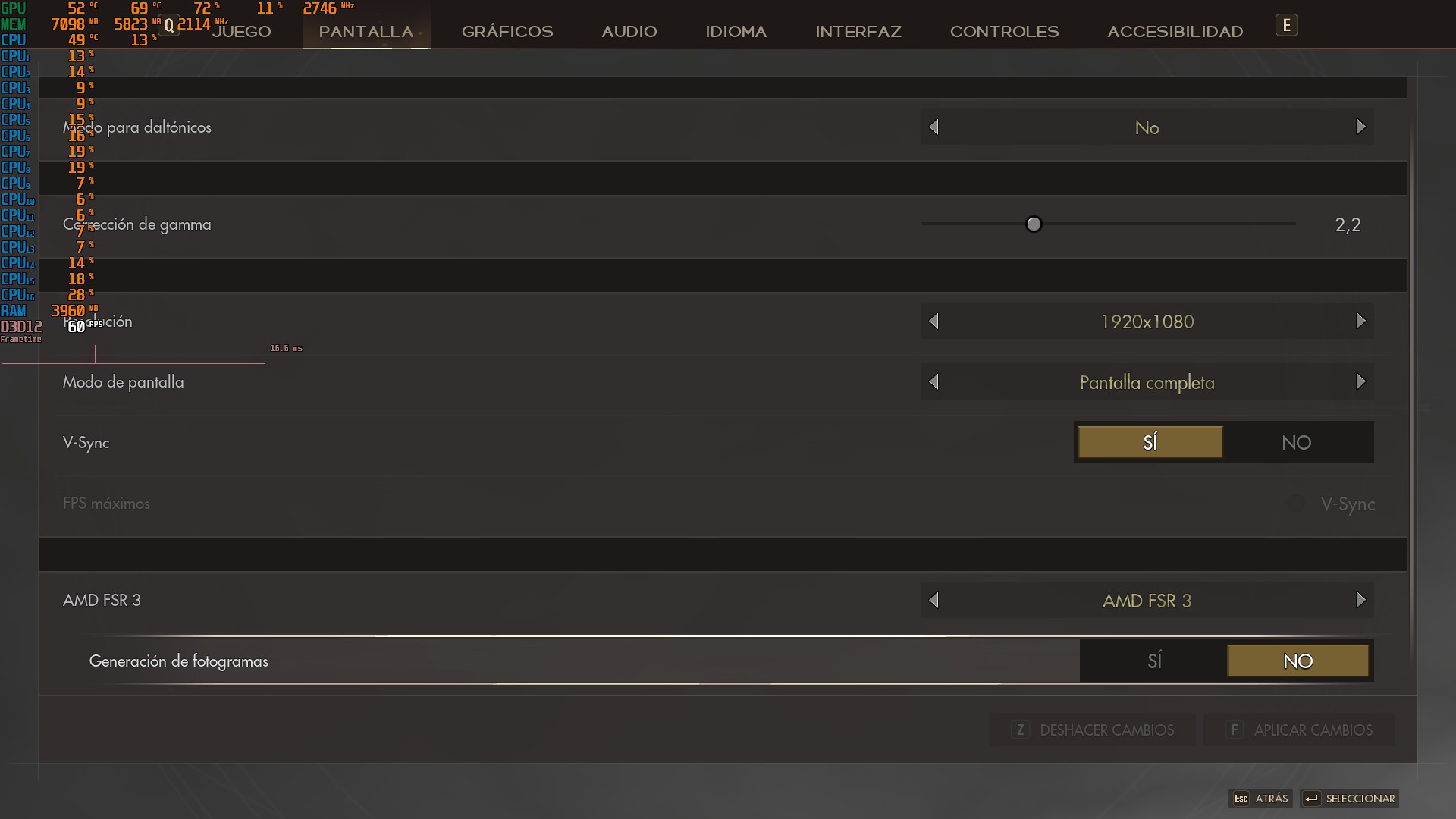

Surprisingly, with FSR 3 enabled I get a result extremely similar to the PS5, and with FSR 3 disabled the image looks EXACTLY THE SAME as the Xbox Series X version.

Anyone can verify that I'm not lying; I checked several times to make sure I wasn't mistaken. In case anyone doubts my honesty, here are two more screenshots with an unlocked framerate, with FSR 3 off and on:

FSR 3 off:

FSR 3 on:

Why does it look sharper with FSR 3? I ran more tests because I find this very interesting. This shot is with textures on Low and FSR enabled, and it still does not match the XSX.

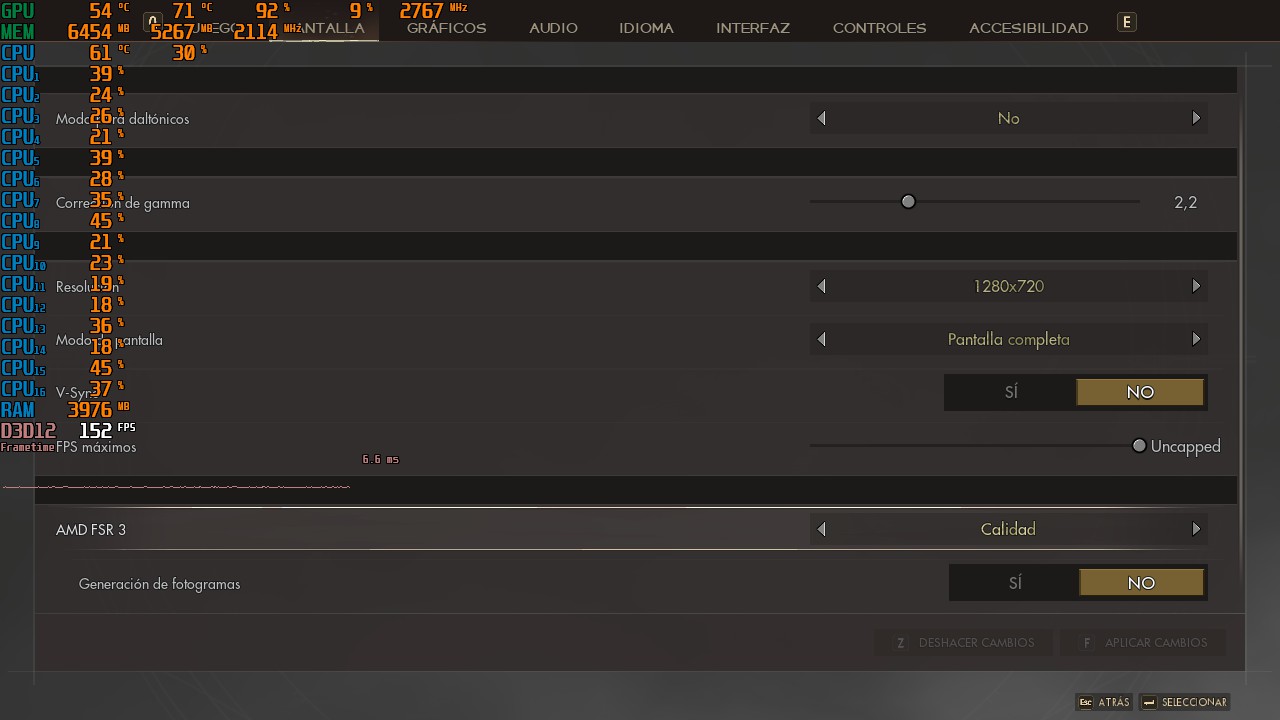

Then I tried dropping to 720p with FSR disabled (textures on High), and the image almost exactly matches the XSX:

Now the same shot at 720p with FSR 3 on Quality:

What I'm seeing here really doesn't make sense. In short, this is what I get when trying to replicate the PS5 and XSX versions: the XSX version looks very close to native resolution, and the PS5 version looks like any image with FSR applied. Am I missing something? Performance with FSR 3 does not improve in either Quality or Balanced mode; it only improves if I enable frame generation.
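To put numbers on why the lack of a performance gain is odd, here is a small sketch of what FSR's upscaling modes would render internally. It assumes the standard FSR 2/3 per-axis scale factors (Quality 1.5x, Balanced 1.7x, Performance 2.0x, Ultra Performance 3.0x) and that this game uses them unchanged; the helper name `render_resolution` and the truncation to whole pixels are my own assumptions, not anything the game exposes.

```python
# Hypothetical helper: estimate FSR's internal render resolution.
# Assumes the standard FSR 2/3 per-axis scale factors; the game may differ.
FSR_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def render_resolution(out_w: int, out_h: int, mode: str) -> tuple[int, int]:
    """Return the (width, height) rendered before FSR upscales to out_w x out_h."""
    ratio = FSR_SCALE[mode]
    # Truncate to whole pixels (assumed rounding behaviour).
    return int(out_w / ratio), int(out_h / ratio)


# Print the internal resolution for the output resolutions tested in this post.
for out_w, out_h in [(1920, 1080), (1280, 720)]:
    for mode in ("quality", "balanced"):
        w, h = render_resolution(out_w, out_h, mode)
        print(f"{out_w}x{out_h} {mode}: renders at {w}x{h}")
```

Under those assumptions, 1080p with FSR Quality should be rendering at 1280x720 internally, and 720p with Quality or Balanced well below 720p, so you would normally expect a clear framerate gain over native.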

It doesn't make much sense; it's as if FSR doesn't work the way you'd expect. But the blurring of some textures on Xbox Series X is clearly a matter of image quality, not texture quality. In summary, this would be the "equivalent" of the XSX and PS5 on PC:

XSX / PC 720p native resolution:

PS5(?) / PC 720p FSR Balanced:

Performance barely changes with or without FSR, but notice how GPU utilization drops with FSR enabled.