I still stand by my position.

I play on 8K/4K screens and use DLDSR resolutions a lot. I have spent hundreds of hours testing DLSS. I can't even count the time I have spent comparing all the options, presets, etc., in conjunction with DLDSR 1.78x and 2.25x.

DLSS P at 4K is noticeable and an absolute no-go for fast games (racing/FPS). It smears, and it shows blurring and oversharpened detail, mainly in the distance, because the processing has to compensate for the lack of detail in the low-resolution input.

DLSS, no matter how good it is, is still a TAA solution. It's a good TAA solution, but it still is a TAA.

Also, because of the extreme blurring, motion blur, DoF, chromatic aberration, sharpening filters, etc. in all the "modern" slop engines like UE5, the difference seems less noticeable to your brain simply because even at native these engines are blurry, smeary slop. All medium-to-distant detail gets lost in it.

Heck, when you think about it, even "Quality" mode is very low: it is a 0.667 scale per axis, which makes the rendered pixel count only about 0.44x of the output.

I'm just tired of seeing all these "magic voodoo" statements that constantly repeat that 25% of the pixels can reconstruct a proper 100% resolution image. No.
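For anyone who wants to check the arithmetic, here is a minimal sketch of how a per-axis render scale translates into a pixel count. The two scale values are the ones quoted above (about 0.667 for Quality, and 0.5 per axis for the "25% of the pixels" Performance case); the function names are my own and purely illustrative.

```python
# Per-axis render scales taken from the figures quoted in this post.
# Treat them as approximate; exact values can differ per game.
SCALES = {"Quality": 2 / 3, "Performance": 1 / 2}


def pixel_fraction(axis_scale: float) -> float:
    """Fraction of the output pixels that are actually rendered."""
    # Scaling both width and height by s scales the pixel count by s squared.
    return axis_scale ** 2


def internal_resolution(width: int, height: int, axis_scale: float) -> tuple[int, int]:
    """Internal render resolution for a given output resolution."""
    return round(width * axis_scale), round(height * axis_scale)


for name, scale in SCALES.items():
    w, h = internal_resolution(3840, 2160, scale)
    print(f"{name}: {w}x{h}, {pixel_fraction(scale):.0%} of the output pixels")
```

At a 4K output this gives 2560x1440 for Quality (roughly 44% of the pixels) and 1920x1080 for Performance (25%).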

And know that it is because of these false claims and false observations that developers don't bother optimizing games anymore, relying on "well, just put DLSS Performance on bruh, it will look like native".

In my testing, DLSS P started to output a satisfying image when using DLDSR 1.78x (2880p output), with the Balanced preset still being a bit better.

With DLDSR 2.25x (5760x3240, so a 2880x1620 DLSS internal resolution), that preset finally looked good to my eye.
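The DLDSR and DLSS numbers above chain together like this. It is a rough sketch, assuming a 3840x2160 native panel and assuming the "1.78x" and "2.25x" labels are pixel-count factors of 16/9 and 9/4 (per-axis scales of 4/3 and 3/2). The `chain` helper is hypothetical, just for illustration.

```python
from fractions import Fraction

# Assumption: DLDSR "1.78x" and "2.25x" are pixel-count factors of 16/9 and 9/4,
# which means per-axis scales of 4/3 and 3/2.
DLDSR_AXIS = {"1.78x": Fraction(4, 3), "2.25x": Fraction(3, 2)}

# DLSS Performance renders half the width and half the height (25% of the pixels).
DLSS_PERFORMANCE_AXIS = Fraction(1, 2)


def chain(native, dldsr_axis, dlss_axis):
    """Return (DLDSR target, DLSS internal) resolutions for a native display."""
    target = tuple(int(side * dldsr_axis) for side in native)
    internal = tuple(int(side * dlss_axis) for side in target)
    return target, internal


for label, axis in DLDSR_AXIS.items():
    target, internal = chain((3840, 2160), axis, DLSS_PERFORMANCE_AXIS)
    print(f"DLDSR {label}: target {target[0]}x{target[1]}, "
          f"DLSS P internal {internal[0]}x{internal[1]}")
```

Under those assumptions, DLDSR 1.78x with DLSS P renders internally at 2560x1440, and DLDSR 2.25x with DLSS P renders at 2880x1620, which matches the figure quoted above.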

Also, no offence was intended in my first answer, and my apologies if it came across clumsily.

The constant "DLSS is magic" talk wears me out sometimes, for the aforementioned reasons.

Are you sure you aren't talking about ray reconstruction (RR) artefacts? RR does everything you mentioned. At a lower internal resolution (even DLSS Q), it starts blurring fine details and smearing during motion (especially with DLSS P). RR also adds adaptive sharpening. I find these problems very noticeable, and I would agree with you if you were talking about RR.

As for DLSS Super Resolution, this technology (DLSS2) previously used adaptive sharpening during motion as well. However, DLSS3 has completely resolved this issue and now uses uniform sharpening settings (and with the default sharpening setting of 0%, no sharpening mask is used at all). Therefore, I find it strange that sharpening in the DLSS image bothers you so much when the technology no longer adds any.

If you are using DLDSR, it is also possible that DLDSR itself is sharpening the image. I used DLDSR on my previous 2560x1440 monitor because downscaling let me get a sharp image even in the blurriest TAA games. The DLDSR image wasn't perfect, however: depending on the smoothness level, this downscaling method either blurs the image or oversharpens it, so I was never able to get neutral sharpness like with normal DSR 4x downscaling, or just MSAA / SMAA. I stopped using DLDSR after buying a 32-inch 4K OLED monitor, because TAA no longer looks blurry (the very high pixel density helps to mitigate the blur caused by TAA), so I get neutral sharpness (the image is sharp without looking oversharpened).

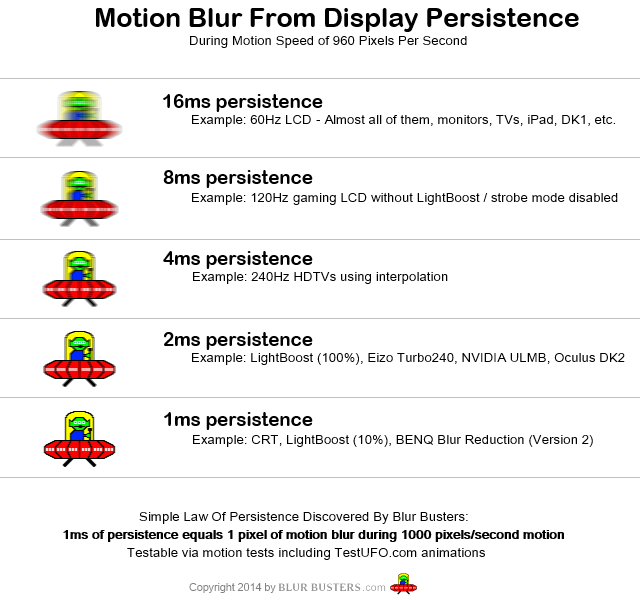

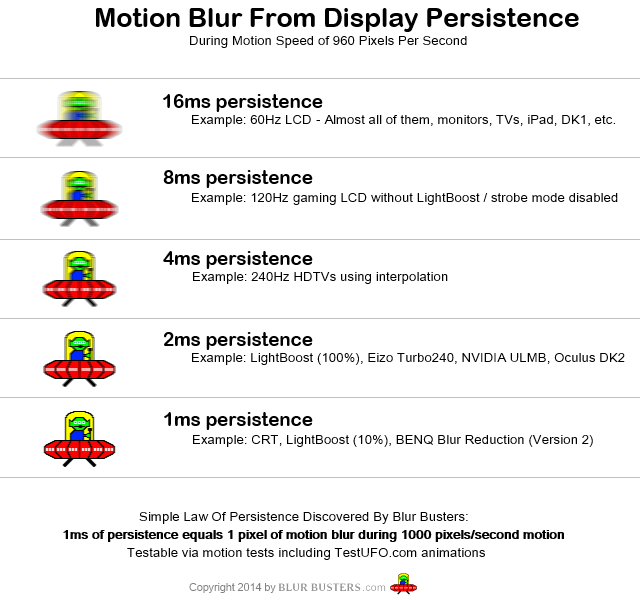

My training in photography and Photoshop has taught me to look out for imperfections in images. Even from that perspective, however, I found the 4K DLSS image quality to be very good. I need to sit about 50cm from my 32-inch monitor to notice some 4-8 pixels of ghosting / noise during motion, but from a normal viewing distance (80cm-100cm) DLAA and DLSS P have indistinguishable image clarity during motion. The only difference comes down to the quality of RT effects. Even at 100cm from my monitor I can still notice things like RT GI instability (especially in dimly lit locations), RT noise and shadow pixelation, so that's why I prefer to play at the highest internal resolution if possible. DLAA looks better than DLSS for sure, but it's not always worth trading a subtle difference in RT quality for half the framerate. Games at 120fps with DLSS Performance look sharper during motion than at 60fps with DLAA, and the whole gaming experience is also a lot better. 60fps on OLED / LCD technology (sample-and-hold displays) blurs 16 pixels during relatively fast motion / scrolling, whereas 120fps blurs 8 pixels. Based on what I saw, DLSS motion artefacts blend perfectly with that persistence blur, and if you want a reasonably sharp image during motion you would need at least 240Hz. My 4K OLED has 240Hz and that's still not good enough to offer CRT-like motion clarity.
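To put numbers on the persistence figures, here is a small sketch of the usual sample-and-hold estimate: blur width is roughly motion speed multiplied by how long each frame stays on screen. The 960 pixels-per-second speed is my assumption, picked because it reproduces the 16-pixel and 8-pixel figures above; the function name is just illustrative.

```python
def persistence_blur_px(speed_px_per_s: float, fps: float) -> float:
    """Approximate eye-tracking blur width on a full-persistence display.

    Assumes each frame is held for the whole frame time (1 / fps seconds),
    with no black frame insertion or backlight strobing.
    """
    return speed_px_per_s / fps


# Assumed motion speed: 960 px/s, chosen to match the 16 px / 8 px figures.
SPEED_PX_PER_S = 960

for fps in (60, 120, 240):
    blur = persistence_blur_px(SPEED_PX_PER_S, fps)
    print(f"{fps} fps: ~{blur:.0f} px of persistence blur")
```

At that speed the estimate is 16 px at 60fps, 8 px at 120fps and 4 px at 240fps, so a few pixels of reconstruction artefacts can easily hide inside the blur at lower framerates.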

If you think the DLSS P image quality is terrible and unacceptable, what do you think about the image quality on consoles?

Image quality on the base PS5 in Black Myth: Wukong.

4K DLSS-performance using the same internal resolution (1080p) as the PS5.

I think console gamers would be blown away if the PS6 could offer similar image quality. In Black Myth: Wukong, even DLSS3 in its Performance mode looked way better to my eyes than FSR3 Native. FSR Native looked very noisy during motion, and there was also noticeable shimmering around trees. DLSS is an extremely impressive technology that is clearly superior to FSR 3. It also often looks better than native TAA, which is why so many people prefer to use DLSS instead of TAA. I haven't tested FSR4 yet, but I've heard that AMD has made significant improvements to their image reconstruction.

If the DLSS image doesn't meet your standards, then I can't imagine you having a good gaming experience, because even the RTX 5090 can't run the latest UE5 games well at 4K with a 100% resolution scale. You need at least 100fps to get reasonable motion clarity, and the RTX 5090 can barely hold 60fps at 4K DLAA in these demanding UE5 games.

Results from DSOG

www.dsogaming.com