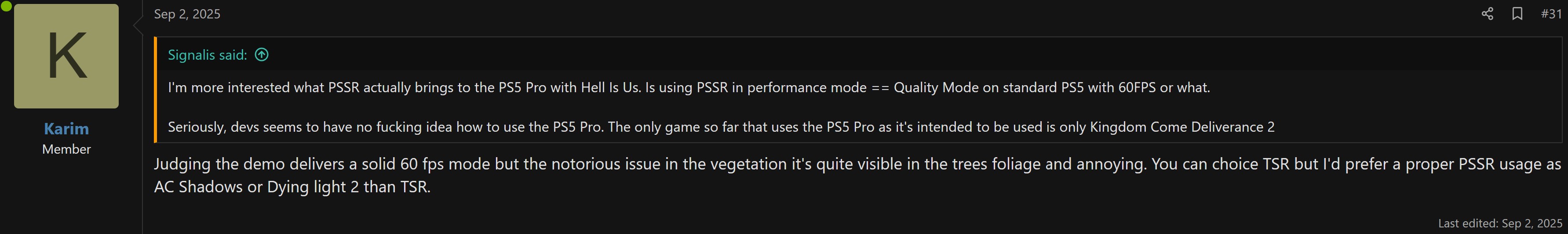

it can reach highs of 1700p, but the game isn't necessarily always producing IQ-based artifacts at those high resolutions either. When you have a dynamic res jumping around in that massive a range, it doesn't help you with the temporal artifacts. When people complained about temporal noise in SW:JS and made those comparisons, they never once mentioned framerate. Now it's important, though.

No, it's all about preference, and the point is you wouldn't get this on console even if it ran DLSS 3, because devs can and sometimes will choose a "tiny" version of it on limited hardware if it means lower frametime (higher framerate) and freed cores used to boost RT features instead. It's a double-edged sword: you can choose a higher internal res to avoid worse artifacts and use a lighter "tiny DLSS", or, as DF suggested, drop the internal res even further and use "full fat" (still DLSS 3), but that does not mean you are going to be artifact free, as SF and Cyberpunk show. That's what you're seeing on Pro too. It's simply dev choices that the user cannot change and may not necessarily prefer.

That depends on whether you really despise artifacts or favour IQ, but DLSS 3 and PSSR are largely very similar, and PSSR has looked as good as native, and even better than native, in games like TLOU, so that "never" is untrue.

This expectation should apply elsewhere too. Even at higher res DLSS is still "imperfect".

Why do you think that is, though? If it was just called "DLSS", instead of DF now giving it the name "tiny DLSS", would it have mattered to you?

"Proper DLSS"? The other games are still DLSS, but they're constrained by dev choices on fixed hardware, just like the Pro. Could you call PSSR in TLOU and Demon's Souls "proper PSSR" and the others something else? Not really, so why do you do this here? TLOU has great picture quality with PSSR that's better than native, but people nitpicked edge artifacts on peach fuzz nonetheless. Now look at what you call "Proper DLSS" on SF6: same artifacts, but even more pronounced on character hair. "Tiny DLSS" is still DLSS and other implementations of PSSR are still PSSR; it's simply the developers making choices based on their fixed targets and hardware with PSSR and DLSS.

Nah, it's both, because on fixed hardware and settings it's always a tradeoff. DLSS adds artifacts/issues and a frametime cost. Do I lower res so that I can use DLSS, with the cost that it has, but suffer from poor quality artifacts? Do I increase res and remove DLSS? Do I use "proper DLSS" but maybe get lower framerates? It's all choices made for you on console. Also, SF6 is a shit example because even PS4 Pro looked better than the Series S version, which according to DF uses "Capcom's custom internal upscaler". The Switch 2 version has to be docked to look better than the Series S as well.

That's complete horseshit. PSSR doesn't give results worse than FSR 2/3. It gives much better results in terms of IQ. It may give artifacts similar to DLSS 3's, and it may even lower framerate below that of the base console depending on the dev's choices when adding it, but it will 100% give you better results than FSR 2 or 3. If you're talking about the toggle to turn off PSSR, the same thing is happening on Switch 2. People asked for a mode in Fast Fusion that turns off DLSS because the artifacts were atrocious. The developer had to really lower settings for it, though, just to boost resolution slightly. You should be happy for options.

Yes, that is my point, but kevboard

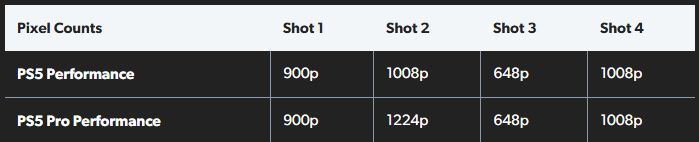

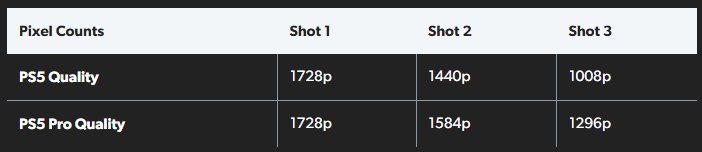

is now suggesting that the resolution was 900p or 1700p and so it was the upscaler which is "problematic". They do have higher expectations, but those expectations of a perfect upscale with zero artifacts, or of matching unknown PCs, would never be met. Especially if they are blaming the reconstruction technique for everything and then, when it comes to Switch, changing that around to other factors. PS5 Pro will never match high-end PCs or even lower-mid-end Nvidia GPUs, especially if the PS5 Pro game has RT features enabled. I would say expectations were high for Switch 2 from a lot of people too, because they were saying the upscaling was going to be transformer-based and better than home consoles, but the overall result isn't very good. It's not the transformer model in DLSS 4 but CNN, and the upscale is still full of glaring artifacts and flickering, and is generally very unstable.

Crank up the res on this vid and look at the roof of this bridge

This is a result of low settings, but it's exacerbated by the upscale.

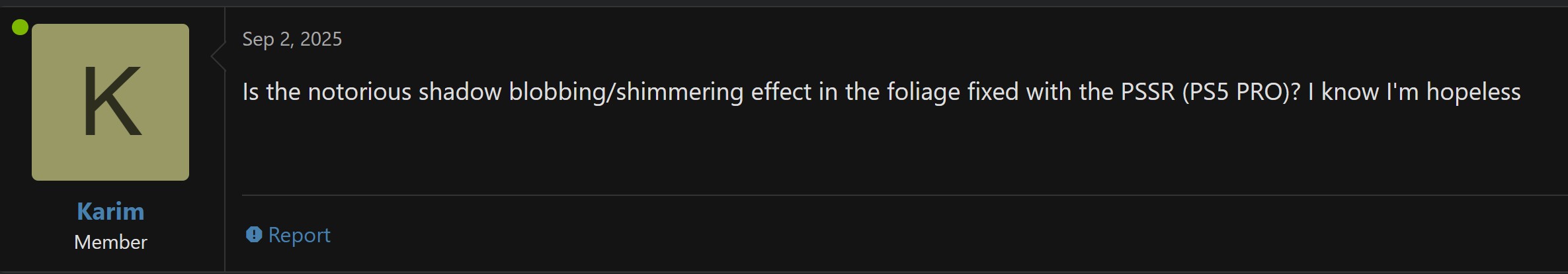

Now recall the dedicated threads to shit like this that people were perpetuating and highlighting on Pro and "PSSR":