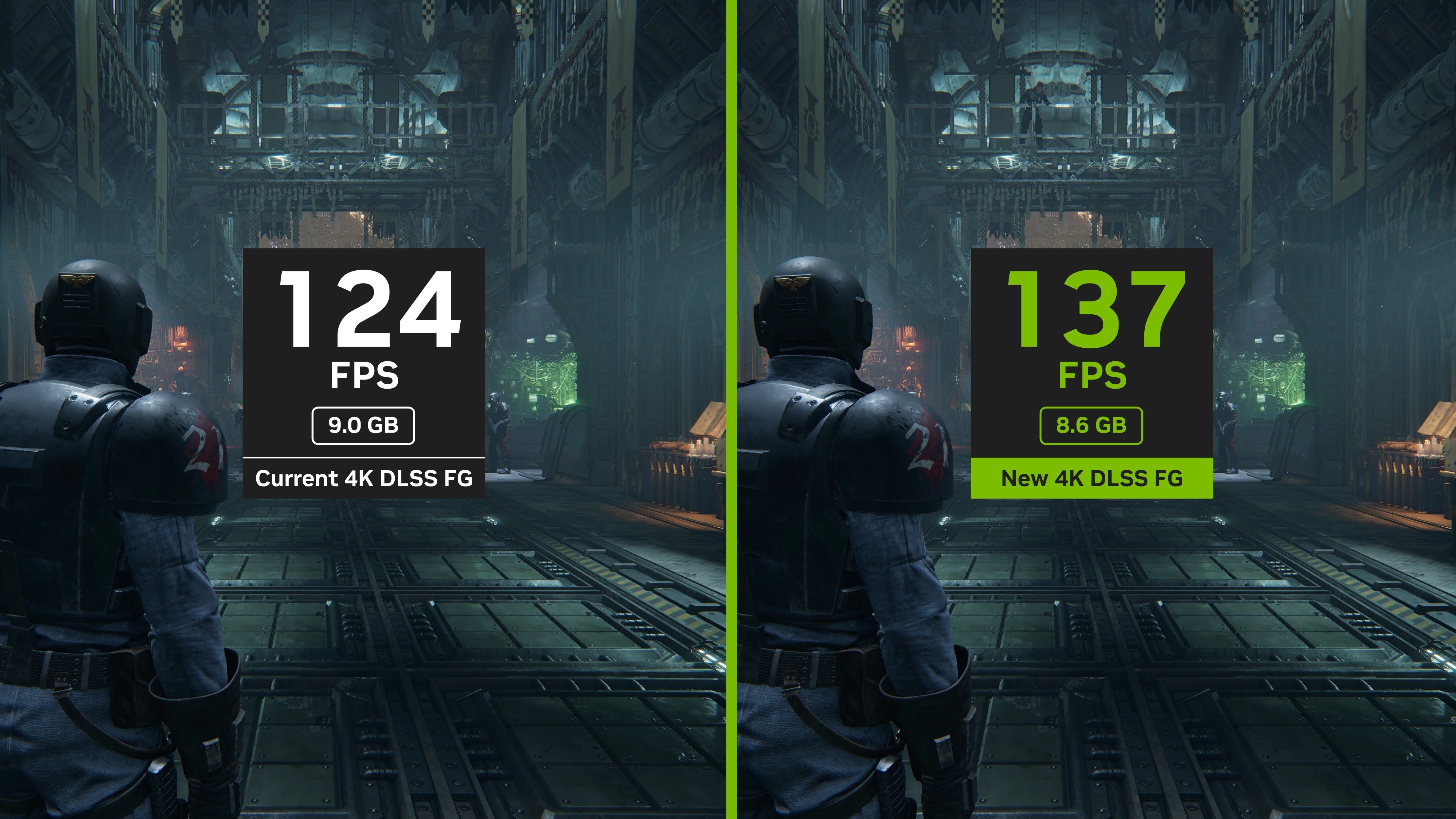

Frame Gen will get updated on 40 and 50 series with a new AI model that is faster and uses less VRAM (~10% performance increase on 40 series)

- Thread starter LectureMaster

- Start date

- Hardware

PicoLordens

Member

One game that certainly needs it is Indiana Jones. Even at 1440p native with DLAA and full path tracing my 4090 can't hit 100fps.

CompleteGlobalSaturation

Member

I decided to try DLSS FG on Ghost of Tsushima.

90FPS without FG feels smoother than 120FPS with FG.

Using the NVIDIA LAT, it appears FG adds 10msec more input lag than normal rasterisation. Furthermore I noticed an increase in motion artefacts.

The tech sucks if you are very sensitive to input lag and care about the best possible image clarity. I hope this is not the future of GPUs.

Last edited:

The Cockatrice

I'm retarded?

I decided to try DLSS FG on Ghost of Tsushima.

90FPS without FG feels smoother than 120FPS with FG.

Using the NVIDIA LAT, it appears FG adds 10msec more input lag than normal rasterisation. Furthermore I noticed an increase in motion artefacts.

The tech sucks if you are very sensitive to input lag and care about the best possible image clarity. I hope this is not the future of GPUs.

If GoT doesn't have Reflex then that's the reason why it feels less smooth. Most games should have it on by default when FG is enabled. It also makes no sense why your game would feel less smooth with FG on when you can reach 90 FPS by default without it. It's only incredibly noticeable if your non-FG framerate is very low, not 90.

llien

Banned

How much faster is it compared to this:

New Lossless Scaling 2.9 update introduces 3x Frame Interpolation

https://store.steampowered.com/news/app/993090/view/4145080305033108761 Introducing the X3 frame generation mode. LSFG 2.1 can now generate two intermediate frames, effectively tripling the framerate. X3 has increased GPU load by approximately 1.7 times compared to X2 mode. At the same time...

?

llien

Banned

I decided to try DLSS FG on Ghost of Tsushima.

90FPS without FG feels smoother than 120FPS with FG.

For FG to reduce lag it needs to go "into the future", predicting frames.

Which is problematic.

So the best theoretical maximum it can do is increase lag by not that much.

Tarnpanzer

Member

Gaiff

SBI’s Resident Gaslighter

I decided to try DLSS FG on Ghost of Tsushima.

90FPS without FG feels smoother than 120FPS with FG.

Using the NVIDIA LAT, it appears FG adds 10msec more input lag than normal rasterisation. Furthermore I noticed an increase in motion artefacts.

The tech sucks if you are very sensitive to input lag and care about the best possible image clarity. I hope this is not the future of GPUs.

You notice 10ms in a game like GOT. Really?

CompleteGlobalSaturation

Member

If GoT doesn't have Reflex then that's the reason why it feels less smooth. Most games should have it on by default when FG is enabled. It also makes no sense why your game would feel less smooth with FG on when you can reach 90 FPS by default without it. It's only incredibly noticeable if your non-FG framerate is very low, not 90.

This is with Reflex On + Boost.

Without FG and with Reflex off, the latency is still lower by 10-13msec than the above combination.

You notice 10ms in a game like GOT. Really?

Yes. There is a delay in movement when panning the camera with framegen. It simply does not feel as smooth as with FG disabled.

Last edited:

Gaiff

SBI’s Resident Gaslighter

This is with Reflex On + Boost.

Without FG and with Reflex off, the latency is still lower by 10-13msec than the above combination.

Yes. There is a delay in movement when panning the camera with framegen. It simply does not feel as smooth as with FG disabled.

It has to be more than 10ms. Nobody would ever notice such a short amount of time in a game of that speed.

CompleteGlobalSaturation

Member

It has to be more than 10ms. Nobody would ever notice such a short amount of time in a game of that speed.

I decided to retest. Added latency seems to be approx 12 msec.

4K - no DLSS

4K DLSS Framegen + Reflex Boost

Last edited:

Cyberpunkd

Banned

I decided to retest. Added latency seems to be approx 12 msec.

The Cockatrice

I'm retarded?

I decided to retest. Added latency seems to be approx 12 msec.

4K - no DLSS

4K DLSS Framegen + Reflex Boost

You must have superhuman senses to notice a difference of a few ms between those two.

Gaiff

SBI’s Resident Gaslighter

I decided to retest. Added latency seems to be approx 12 msec.

4K - no DLSS

4K DLSS Framegen + Reflex Boost

On the one hand, it's 50% more latency. On the other hand, you're at 37ms which is very, very low. GOT also isn't especially fast.
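The "50% more latency" figure can be sanity-checked with quick arithmetic. This is only a rough sketch: it assumes the 37ms reading is the FG-on measurement and that the added latency is the ~12 msec from the retest, which would put the FG-off baseline at about 25ms. The function name is made up for illustration.

```python
def relative_latency_increase(total_with_fg_ms, added_ms):
    """Return (baseline_ms, percent_increase) for a given FG-on latency.

    Assumption: total_with_fg_ms is the reading with frame generation on,
    and added_ms is how much of that is attributed to frame generation.
    """
    baseline_ms = total_with_fg_ms - added_ms
    percent_increase = added_ms / baseline_ms * 100.0
    return baseline_ms, percent_increase


# Figures quoted in the thread: 37 ms with FG, roughly 12 ms added.
baseline, percent = relative_latency_increase(37.0, 12.0)
print(f"baseline: {baseline:.0f} ms, increase: {percent:.0f}%")
```

Under those assumptions the increase comes out at 48%, so "50% more" is a fair rounding, while the absolute number stays small.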

CompleteGlobalSaturation

Member

You must have super human sense to notice the difference of MS between those two.

Subjectively, playing the game without FG feels smoother and more responsive. Of course I also recognise bias may play a role here. It would be better if this was a blinded test and I was not aware of which has FG on and off.

We can't deny that FG adds latency though.

Last edited:

Xcell Miguel

Member

I decided to retest. Added latency seems to be approx 12 msec.

4K - no DLSS

4K DLSS Framegen + Reflex Boost

Is your monitor/TV limited to 120 Hz?

If so, it may be normal that you feel an input delay. The 116 FPS in your FG screenshot looks like a VSync limitation with VRR.

You already had 90 FPS without FG. If you enable FG but are limited to your screen's refresh rate, the game has to render fewer frames so that FG frames and rendered frames can alternate up to the max refresh rate. That causes a longer delay between real frames, and thus more input delay.

Try without VSync, or raise some details or the resolution to get a better ratio between FG and no FG.

90 real frames will have less input delay than 120 with FG, but then compare 60-70 real frames to 120 with FG.

When you already have 80+ fps you should only use FG if it won't reach your monitor's refresh limit, or you'll get a bit more input delay caused by fewer rendered frames, as it has to alternate to avoid frametime spikes.
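The refresh-cap effect described above can be put into numbers. This is a minimal sketch, not a model of how DLSS FG actually schedules frames: it assumes 2x frame generation alternates one rendered and one generated frame, ignores the GPU cost of generating frames, and uses hypothetical function names.

```python
def real_fps_with_fg(native_fps, output_cap_fps, fg_factor=2):
    """Rendered (real) frames per second once FG output hits a cap.

    Assumption: output is native_fps * fg_factor unless the display cap
    is lower, and real frames are an even 1/fg_factor share of the output.
    """
    uncapped_output = native_fps * fg_factor
    output_fps = min(uncapped_output, output_cap_fps)
    return output_fps / fg_factor


def frame_time_ms(fps):
    """Time between consecutive frames in milliseconds."""
    return 1000.0 / fps


# 90 fps native on a display capped at 116 fps (the figure in the screenshot).
real = real_fps_with_fg(90, 116)
extra = frame_time_ms(real) - frame_time_ms(90)
print(f"real frames with FG: {real:.0f} fps")
print(f"extra time between real frames: {extra:.2f} ms")

# A 60 fps base loses far less, because 2 x 60 barely exceeds the cap.
print(f"60 fps base under the same cap: {real_fps_with_fg(60, 116):.0f} fps")
```

With those assumptions, a 90 fps base drops to 58 real frames per second under a 116 fps cap, which is about 6 ms more between real frames. That is only the gap between rendered frames, not a measured end-to-end latency.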

CompleteGlobalSaturation

Member

Is your monitor/TV limited to 120 Hz?

If so, it may be normal that you feel an input delay. The 116 FPS in your FG screenshot looks like a VSync limitation with VRR.

You already had 90 FPS without FG. If you enable FG but are limited to your screen's refresh rate, the game has to render fewer frames so that FG frames and rendered frames can alternate up to the max refresh rate. That causes a longer delay between real frames, and thus more input delay.

Try without VSync, or raise some details or the resolution to get a better ratio between FG and no FG.

90 real frames will have less input delay than 120 with FG, but then compare 60-70 real frames to 120 with FG.

When you already have 80+ fps you should only use FG if it won't reach your monitor's refresh limit, or you'll get a bit more input delay caused by fewer rendered frames, as it has to alternate to avoid frametime spikes.

Yes - 120Hz G-Sync display.

Utherellus

Member

One game that certainly needs it is Indiana Jones. Even at 1440p native with DLAA and full path tracing my 4090 can't hit 100fps.

My thoughts exactly. That game is seriously VRAM hungry. I've never ever been worried about VRAM in games with my 4070, it was just never full.

In Indiana Jones, VRAM bottlenecked me even at 1080p. Bizarre experience.

TrebleShot

Member

Am I losing touch here, I have the money and ability to get a 5090 but the only reason so far legit seems to be based around the physical size of the card?

Also do we expect there to be a notable uptick in perf on things like the new LG UW (5k)

Black_Stride

do not tempt fate do not contrain Wonder Woman's thighs do not do not

Am I losing touch here, I have the money and ability to get a 5090 but the only reason so far legit seems to be based around the physical size of the card?

Also do we expect there to be a notable uptick in perf on things like the new LG UW (5k)

It's the usual gen on gen uptick. ~30%

Assuming you already have a 4080+ class card, you likely don't need to upgrade.

Follow the usual logic of skipping a generation so you can "feel" the upgrade.

Gen on gen is rarely worth it outside of bragging rights.

If you are building fresh, then obviously go for what your wallet allows.

Last edited:

TrebleShot

Member

It's the usual gen on gen uptick. ~30%

Assuming you already have a 4080+ class card, you likely don't need to upgrade.

Follow the usual logic of skipping a generation so you can "feel" the upgrade.

Gen on gen is rarely worth it outside of bragging rights.

If you are building fresh, then obviously go for what your wallet allows.

Yeah I am on a 4090, but was considering it for the new screens. I usually like maxing out fps on my displays. For instance, with a 120hz LG C2 as a monitor, 4K 120hz is possible in most games with some upscaling.

The new monitors are 165hz 5k so I imagine it will be difficult to do that with new games, but maybe with frame gen?

Thebonehead

Gold Member

I decided to retest. Added latency seems to be approx 12 msec.

Mouse and keyboard or controller?

Can remember controller felt gloopy compared to m+kB last time I tried it. It definitely felt laggy via controller.

A bit like the reverse of tlou1 on pc when it came out. Controller was ultra smooth but m+kb was a stuttery mess.

CompleteGlobalSaturation

Member

Mouse and keyboard or controller?

Can remember controller felt gloopy compared to m+kB last time I tried it. It definitely felt laggy via controller.

A bit like the reverse of tlou1 on pc when it came out. Controller was ultra smooth but m+kb was a stuttery mess.

Controller. I agree; M/KB combo feels much more responsive than controllers overall. Too bad I hate using a M/KB for games.

Last edited:

Magic Carpet

Gold Member

My 4070 will now get me a few more months.

Waiting for the reviews of the 5000 series.

KuraiShidosha

Member

Not even remotely close to the same thing. ADDING input lag vs without is a worsening of what came before whereas moving to texture based geometry mapping improves image quality while costing significantly less performance vs pure polygon count boosts to match quality.

DirtInUrEye

Member

I'd still rather just lock to 60 real frames than ever choose a pretend 120 with extra input lag. I just don't get the appeal.

People say, "well FG is really nice if you're coming from higher base fps". Like 80 or 90? Both of those feel much more pleasing natively than double phoney digits of it.

I say this as someone with a 4000 series card.

Last edited:

nemiroff

Member

Yeah.. It's the same song and dance every time a new tech is introduced. Some people have absolutely no vision. Supposedly MLFG is the same as the interpolation that TVs did back in the days.. It's so dumb it beggars belief.

analog_future

Resident Crybaby

How much faster is it compared to this:

New Lossless Scaling 2.9 update introduces 3x Frame Interpolation

https://store.steampowered.com/news/app/993090/view/4145080305033108761 Introducing the X3 frame generation mode. LSFG 2.1 can now generate two intermediate frames, effectively tripling the framerate. X3 has increased GPU load by approximately 1.7 times compared to X2 mode. At the same time...

?

Safe to say it is significantly faster, with better image quality, and much lower latency.

HeisenbergFX4

Member

TintoConCasera

I bought a sex doll, but I keep it inflated 100% of the time and use it like a regular wife

Now look at them console-bros, that's the way you do it

You play at 4K/60fps on the TV

That ain't workin', that's the way you do it

Performance for nothing and your frames for free

Now that ain't workin', that's the way you do it

Lemme tell ya, them fat guys ain't dumb

Maybe get an extra ms on your little finger

Maybe get an artifact on your hair

We got to install our own disc drives, vertical stands deliveries

We got to pay to play online, we got to wait these pro patches to come

See the little pcbro with the steam deck and the rgb lights

Yeah, buddy, that's his own chair

That little pcbro got his own 5090

That little pcbro, he's a millionaire

etc etc

proandrad

Member

I decided to try DLSS FG on Ghost of Tsushima.

90FPS without FG feels smoother than 120FPS with FG.

Using the NVIDIA LAT, it appears FG adds 10msec more input lag than normal rasterisation. Furthermore I noticed an increase in motion artefacts.

The tech sucks if you are very sensitive to input lag and care about the best possible image clarity. I hope this is not the future of GPUs.

Are you also seeing frame judder? I would assume you would if you are capping your frames. The generated frames can't be evenly distributed between the real frames any time your base framerate fluctuates. I think you have to uncap your framerate to see the ideal results. It could also be dropping your base frame rate to 60 and doubling it to match the 120 cap you have, which could explain the increased input lag.

Last edited:

llien

Banned

Can you combine amazing NV frame gen with Samsung's?

Asking for a friend.

Last edited:

analog_future

Resident Crybaby

Well I think that settles it for me. Will stick with my 4090 and wait until the 6000 series to upgrade.

buenoblue

Member

If GoT doesn't have Reflex then that's the reason why it feels less smooth. Most games should have it on by default when FG is enabled. It also makes no sense why your game would feel less smooth with FG on when you can reach 90 FPS by default without it. It's only incredibly noticeable if your non-FG framerate is very low, not 90.

Your real FPS is exactly half of what current frame gen gives you. So if you lock your fps to a 120hz monitor, your real frame rate, and with it your input lag, will be that of 60fps. That's why 90fps feels so much better.

Frame gen only really makes sense to me if you're struggling to hit 60fps or you have a really high refresh display. There is a cost to frame gen and you don't get double the FPS you get without it.

For instance I'm playing Hogwarts Legacy on a 4070 Ti with DLSS Quality at 4K, maxed out with no ray tracing. Without frame gen I get 65 to 70 FPS but it can drop into the 50s. This doesn't seem that smooth even with G-Sync.

If I use frame gen I get an average of 105 FPS with drops to the 90s. This seems much, much smoother. But obviously my input lag is akin to playing at 53 to 45 fps. Input lag doesn't feel that bad playing a single player game with a controller, but it doesn't feel like 100fps lag at all.

This is on a 120hz screen btw.
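The Hogwarts Legacy numbers above can be worked through as a rough estimate of what frame gen costs. This sketch assumes 2x frame generation where exactly half the output frames are rendered, and takes 67.5 fps (the midpoint of the quoted 65 to 70) as the no-FG baseline. Both the midpoint and the function names are assumptions for illustration.

```python
def rendered_fps(fg_output_fps, fg_factor=2):
    """Real (rendered) frames per second behind a frame-generated output."""
    return fg_output_fps / fg_factor


def fg_cost_fraction(native_fps, fg_output_fps, fg_factor=2):
    """Fraction of the native frame rate given up to run frame generation."""
    return 1.0 - rendered_fps(fg_output_fps, fg_factor) / native_fps


# Figures quoted in the post: ~105 fps average with FG, drops to ~90.
print(f"real fps at 105 output: {rendered_fps(105):.1f}")
print(f"real fps at 90 output:  {rendered_fps(90):.1f}")

# Assumed baseline: 67.5 fps, the midpoint of the 65-70 fps quoted without FG.
cost = fg_cost_fraction(67.5, 105)
print(f"rendered frames given up to FG: {cost:.0%}")
```

On those assumptions the base frame rate falls from about 67.5 to 52.5 fps, roughly a 22% hit, which matches the point that the output is not double the no-FG frame rate.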

buenoblue

Member

Can you combine amazing NV frame gen with Samsung's?

Asking for a friend.

Haha I wanted to try this but Samsung game motion plus is disabled @120hz

llien

Banned

Haha I wanted to try this but Samsung game motion plus is disabled @120hz

Where is your "challenge accepted" attitude, citizen?

Need a game that card runs at below 30fps.

Then use this:

New Lossless Scaling 2.9 update introduces 3x Frame Interpolation

https://store.steampowered.com/news/app/993090/view/4145080305033108761 Introducing the X3 frame generation mode. LSFG 2.1 can now generate two intermediate frames, effectively tripling the framerate. X3 has increased GPU load by approximately 1.7 times compared to X2 mode. At the same time...

in combination with DLSS FG.

And then let Samsung generate frames on top. (this was available on TVs for ages, by the way)

Results should be epic.

Zathalus

Member

Haha I wanted to try this but Samsung game motion plus is disabled @120hz

It's only semi-useful for 30-60 fps interpolation. Don't expect miracles though, latency does make a large jump and you do pick up on some graphical issues. Just like you shouldn't expect your TV's built-in upscaling to match DLSS/XeSS/PSSR, you shouldn't expect it to replace frame generation.

buenoblue

Member

Where is your "challenge accepted" attitude, citizen?

Need a game that card runs at below 30fps.

Then use this:

New Lossless Scaling 2.9 update introduces 3x Frame Interpolation

https://store.steampowered.com/news/app/993090/view/4145080305033108761 Introducing the X3 frame generation mode. LSFG 2.1 can now generate two intermediate frames, effectively tripling the framerate. X3 has increased GPU load by approximately 1.7 times compared to X2 mode. At the same time...

in combination of DLSS FG.

And than let Samsung generate frames on top. (this was available on TVs for ages, by the way)

Results should be epic.

So for a laugh I ran Elden Ring at 30fps, then used Samsung game motion plus, then added DLSS frame gen on top... then ran Lossless Scaling 20x on top of that.

This is what I got