GeForce RTX 5060/5060 Ti review thread

- Thread starter Gaiff

- Start date

Hudo

Member

> Where is the "8GB is fine with modern decompression" gang today?

They were on their way here but ran out of RAM.

PeteBull

Member

> 8g is fine ... If you bought it 3 years ago.

We had the 3060 12GB launch over 4 years ago at a $330 MSRP; now Nvidia takes VRAM away from a 20-25% stronger card. And what can u do with this card in 2027-2028 once next-gen consoles/games arrive? Ppl can only shove those 8 gigs of VRAM up their ass.

PeteBull

Member

Good card killed by Vram

Yup, if my fellow gaffers are wondering whether they should bite, srsly, spend a bit more and go for the 16GB version of the 5060 Ti. At least u won't be pressured to replace it till the next-gen cross-gen period ends, so around 2030, while with the 5060 those 8 gigs will force u to upgrade by 2027-2028 at the latest, so by the 60xx launch (or the AMD equivalent at that time).


64gigabyteram

Reverse groomer.

I'm seeing these pieces of shit go for 500 dollars; at that point you might as well spend the extra 150-200 for a 9070 and give novideo the middle finger.

8GB. In 2025. This is about as bad as pre-Ryzen Intel.


SolidQ

Member

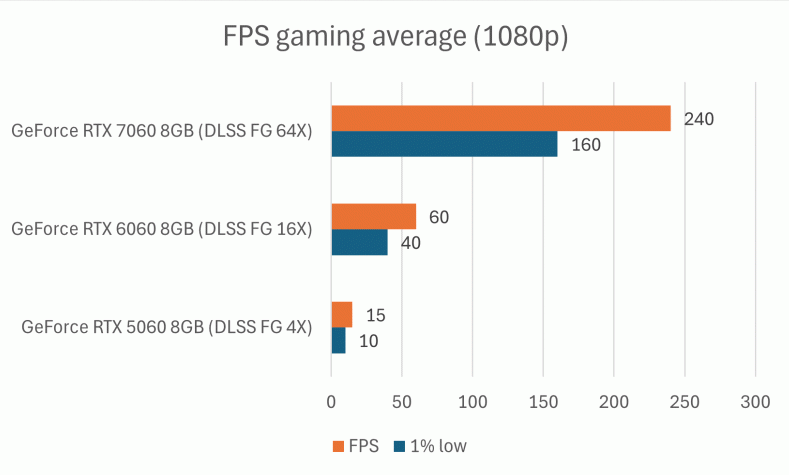

from HUBwell spend the extra 150-200 for a 9070 and give novideo the middle finger.

64gigabyteram

Reverse groomer.

> from HUB

If it's anything less than 12GB, it's dead to me. The 9070 is at least 16GB.

SolidQ

Member

> If it's anything less than 12GB, it's dead to me.

It's 8GB and 16GB, but AIBs have only announced the 16GB version. It seems AMD gave them the freedom to choose what they want to sell.

64gigabyteram

Reverse groomer.

> It's 8GB and 16GB, but AIBs have only announced the 16GB version. It seems AMD gave them the freedom to choose what they want to sell.

The fact there's an 8GB version at all is incredibly concerning, but we'll see.

dgrdsv

Member

> The fact there's an 8GB version at all is incredibly concerning, but we'll see.

AIBs want an 8GB version because it sells better for them through the OEMs while having a higher margin for the AIBs. That's why you see a 5060 Ti 8GB and will see a 9060 XT 8GB as well. This has zero to do with AMD giving anyone any freedom.

Crayon

Member

8GB would be fine on a $150 card with matching performance, but we seem to be in a new era where that doesn't exist. Even those $200 6600s appear to have dried up.

An affordable APU that can trade blows with the PS5 can't come fast enough. $300 is just too much when you still need another $400 of stuff to finish a build that can't play some games right off the bat thanks to that 8GB. Ditch that card, get a gaming APU that is $100 more than a normal CPU, and you are back at $500 to get into PC gaming.


Gp1

Member

Does anyone have at least a prediction of how much 8GB of GDDR7 actually costs? Is there some sort of RAM shortage in the market?

It's beyond my comprehension why neither AMD nor Intel cut NVIDIA's legs by simply killing the 8GB versions and putting an aggressively priced 16GB version on top of the RTX 5060 8GB.



asdasdasdbb

Member

> Does anyone have at least a prediction of how much 8GB of GDDR7 actually costs?

No.

> It's beyond my comprehension why neither AMD nor Intel cut NVIDIA's legs by simply killing the 8GB versions and putting an aggressively priced 16GB version on top of the RTX 5060 8GB.

AMD is rumored to have stopped production of the 8GB model. But that's mainly to sell the 16GB one, since it would fetch a price premium larger than its cost difference.

BTW, the 5060 Ti 8GB is going to be short-lived, as the Super will use 12GB. As to what the fake/real MSRP of that will be, well...


winjer

Member

NVIDIA releases firmware fix for GeForce RTX 5060 series to address black screen and reboot issues

NVIDIA Support

The bad news is that if you own an RTX 5060 series card (either RTX 5060 or RTX 5060 Ti), your GPU may be prone to black screens or reboots. According to NVIDIA, this is caused by incompatibility with certain motherboard SBIOS (System BIOS) versions. The company emphasizes that the firmware update should only be applied by users experiencing these problems. If your system is running normally, the update is not necessary.

Gaiff

SBI’s Resident Gaslighter

Predictably, it sucks.

SolidQ

Member

> Predictably, it sucks.

It would be interesting to see a battle between the 5060 and the 9060 XT 8GB, with its full PCIe lanes, on PCIe 3.0 (B450) motherboards.

Bojji

Member

And AMD created the same problem by naming 8GB and 16GB versions of their GPUs the same. This shit was done on purpose.

Silver Wattle

Member

I didn't think they could make a worse generation than the 40 series and yet here we are, fuck Nvidia.

PeteBull

Member

> Does anyone have at least a prediction of how much 8GB of GDDR7 actually costs? Is there some sort of RAM shortage in the market?
>
> It's beyond my comprehension why neither AMD nor Intel cut NVIDIA's legs by simply killing the 8GB versions and putting an aggressively priced 16GB version on top of the RTX 5060 8GB.

We can tell by the price difference between the 8GB and 16GB versions of the 5060 Ti: at most it's $50, and likely not even that.


Crayon

Member

Just the fact that there are 16gb options is a big step.

It was strange upgrading from an 8GB card to another 8GB card, and now I'm upgrading from a 16 to another 16, so I don't like the situation. But at least now so many people aren't going to get railroaded into 8GB. The gap to 16GB is $50.

There are going to be so many 8GB cards in use for so long that we might as well accept them as the baseline. Maybe this gen will be the last, maybe next. Developers will waste tons of time trying to get their games to look good on them. That's sort of the way of the world now, though. The only things showcasing what hardware can do are demos and benchmarks.


Gaiff

SBI’s Resident Gaslighter

> I didn't think they could make a worse generation than the 40 series and yet here we are, fuck Nvidia.

The 40 series wasn't bad though. The 4090, 4080, 4070 Ti, 4070S, and even the 4070 are all great to good cards. There were a few stumbles, such as the launch price of the 4080 and the lack of a 16GB 4060, but otherwise I'd say it was better than Turing and fairly comparable to Maxwell, if not better.

SolidQ

Member

> ...naming 8GB and 16GB versions of their GPUs the same. This shit was done on purpose.

Would be interesting to see a duel between the 9060 XT 8GB and the 5060 Ti 8GB.


dgrdsv

Member

> ...it just needs more than 8GB of VRAM to show correct textures and ~60fps average:

There's a GPU like that, it's called 5060Ti 16GB, if you think that you need such VRAM amount go and buy it.

Expecting a commercial company to take a financial hit and gift you more VRAM for no reason is lunacy.

What one could argue in this case is that a 5060 16GB would be a better option for the lineup than a 5060Ti 8GB.

But there's a huge MP only market which doesn't need more than 8GB while it does need all the performance it can get.

Which is why I wouldn't just assume that Nvidia doesn't know what the market wants or what would sell better. Their results certainly suggest that they know this really well.


Bojji

Member

> There's a GPU like that, it's called 5060Ti 16GB, if you think that you need such VRAM amount go and buy it.
>
> Expecting a commercial company to take a financial hit and gift you more VRAM for no reason is lunacy.
>
> What one could argue in this case is that a 5060 16GB would be a better option for the lineup than a 5060Ti 8GB.
>
> But there's a huge MP only market which doesn't need more than 8GB while it does need all the performance it can get.
>
> Which is why I wouldn't just assume that Nvidia doesn't know what the market wants or what would sell better. Their results certainly suggest that they know this really well.

Yeah because multiplayer games will forever be stuck with low requirements.

Financial hit? You have to be kidding me, they have very healthy margins on every GPU model. You want to play games with lower settings on a 5060 Ti 8GB? You can do that, just know that this GPU is powerful enough to display maximum settings in many games; it just needs that precious VRAM.

The 8GB model will be worthless on the second-hand market as well. With the MSRP difference currently only $50, the 8GB model is for idiots.

Gaiff

SBI’s Resident Gaslighter

> There's a GPU like that, it's called 5060Ti 16GB, if you think that you need such VRAM amount go and buy it.
>
> Expecting a commercial company to take a financial hit and gift you more VRAM for no reason is lunacy.
>
> What one could argue in this case is that a 5060 16GB would be a better option for the lineup than a 5060Ti 8GB.
>
> But there's a huge MP only market which doesn't need more than 8GB while it does need all the performance it can get.
>
> Which is why I wouldn't just assume that Nvidia doesn't know what the market wants or what would sell better. Their results certainly suggest that they know this really well.

Stop shilling.

> Does anyone have at least a prediction of how much 8GB of GDDR7 actually costs? Is there some sort of RAM shortage in the market?
>
> It's beyond my comprehension why neither AMD nor Intel cut NVIDIA's legs by simply killing the 8GB versions and putting an aggressively priced 16GB version on top of the RTX 5060 8GB.

DRAMeXchange lists 16Gbit GDDR6 chips at around $8-10 per chip. They don't list GDDR7, but it is presumably around that price or somewhat higher. You need 4 extra chips to build a 16GB rather than an 8GB variant, so with the 9060 XT 16GB and 5060 Ti 16GB both +$50 over their 8GB versions, they are likely sold pretty close to the BOM increase once the extra VRM components, PCB layers, etc. are included.
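A back-of-the-envelope version of that estimate, as a minimal Python sketch. It assumes the $8-10 per 16Gbit chip figure quoted above (GDDR7 is not priced here) and treats the $50 MSRP gap as fixed; `chips_needed` and `extra_memory_cost` are hypothetical helper names used only for illustration.

```python
# Rough BOM sketch for the 8GB -> 16GB memory upgrade discussed above.
# Assumption: 16Gbit GDDR6 chips at $8-10 each (the figure quoted in the post);
# GDDR7 pricing is unknown and NOT modeled.

BITS_PER_BYTE = 8
MSRP_GAP_USD = 50  # 16GB vs 8GB price difference mentioned in the thread


def chips_needed(capacity_gb: int, chip_gbit: int = 16) -> int:
    """Number of memory chips for a card of capacity_gb (chip density in gigabits)."""
    total_gbit = capacity_gb * BITS_PER_BYTE
    return -(-total_gbit // chip_gbit)  # ceiling division


def extra_memory_cost(base_gb: int, upgraded_gb: int,
                      price_low: float, price_high: float) -> tuple[int, float, float]:
    """Extra chips needed, plus the low/high cost of those chips."""
    extra = chips_needed(upgraded_gb) - chips_needed(base_gb)
    return extra, extra * price_low, extra * price_high


if __name__ == "__main__":
    extra, low, high = extra_memory_cost(8, 16, 8.0, 10.0)
    print(f"{extra} extra chips cost ${low:.0f}-${high:.0f}")
    print(f"left for VRM parts, PCB layers, etc.: "
          f"${MSRP_GAP_USD - high:.0f}-${MSRP_GAP_USD - low:.0f}")
```

Under these assumptions the memory alone takes $32-40 of the $50 gap, leaving $10-18 for everything else. That is the sense in which the 16GB cards are "pretty close to the BOM increase".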


Gp1

Member

> DRAMeXchange lists 16Gbit GDDR6 chips at around $8-10 per chip. They don't list GDDR7, but it is presumably around that price or somewhat higher. You need 4 extra chips to build a 16GB rather than an 8GB variant, so with the 9060 XT 16GB and 5060 Ti 16GB both +$50 over their 8GB versions, they are likely sold pretty close to the BOM increase once the extra VRM components, PCB layers, etc. are included.

Okay, explain this to me like I'm 6 years old.

| Item | Daily High | Daily Low | Session High | Session Low | Session Average | Session Change |
|---|---|---|---|---|---|---|
| GDDR6 8Gb | 2.90 | 1.45 | 2.90 | 1.45 | 2.313 | 0.22 % |

It's like we need 2 of these to get 16GB, or am I interpreting it wrong? 3 USD more?

+ the structural component changes in the card, which shouldn't be many since this isn't a case like the 1060 6GB/3GB with cut-down cores etc. The entire cost of planning and executing an extra assembly line probably doesn't even justify the 50 dollars.

If this isn't plain and simple planned obsolescence or NV saying "the price is too damn high for ya, get this shit instead"...


> Okay, explain this to me.
>
> | Item | Daily High | Daily Low | Session High | Session Low | Session Average | Session Change |
> |---|---|---|---|---|---|---|
> | GDDR6 8Gb | 2.90 | 1.45 | 2.90 | 1.45 | 2.313 | 0.22 % |
>
> It's like we need 2 of these to get 16GB, or am I interpreting it wrong? 3 dollars more + the structural component changes in the card, which shouldn't be many. The entire cost of planning and executing an extra assembly line isn't [...] plain and simple planned obsolescence

RAM chips are in bits, not bytes.

8Gbit = 1GB

16Gbit = 2GB

8GB is 4x 16Gbit, and 16GB is 8x. 8Gbit is obsolete and EOL, nothing currently in the market really uses it except for XSS/XSX.
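The bits-vs-bytes conversion above, as a tiny Python sketch. The `capacity_gb` helper is a hypothetical name used only for illustration. It also shows why two 8Gbit chips add up to 2GB, not 16GB.

```python
BITS_PER_BYTE = 8


def capacity_gb(num_chips: int, chip_gbit: int) -> float:
    """Total capacity in gigabytes for num_chips chips of chip_gbit gigabits each."""
    return num_chips * chip_gbit / BITS_PER_BYTE


print(capacity_gb(1, 8))    # one 8Gbit chip -> 1.0 (GB)
print(capacity_gb(2, 8))    # two 8Gbit chips -> 2.0 (GB), not 16GB
print(capacity_gb(4, 16))   # an 8GB card: 4x 16Gbit -> 8.0
print(capacity_gb(8, 16))   # a 16GB card: 8x 16Gbit -> 16.0
```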

Gp1

Member

> RAM chips are in bits, not bytes.
>
> 8Gbit = 1GB
>
> 16Gbit = 2GB
>
> 8GB is 4x 16Gbit, and 16GB is 8x. 8Gbit is obsolete and EOL, nothing currently in the market really uses it except for XSS/XSX.

Missed the entire lowercase b there, forget my last post

PeteBull

Member

Lemme remind u guys just how awful these low-end/entry-level GPUs are by comparing them to the ppl's champion from early 2014, the 750 Ti, which had a fricken $150 MSRP (and actual street price too).

For a solid few years it matched the performance of the $400/€400 base PS4 for a fraction of its price.


Mr Moose

Member

> For a solid few years it matched the performance of the $400/€400 base PS4 for a fraction of its price.

Only if you stole the rest of the PC.

PeteBull

Member

> Only if you stole the rest of the PC.

Is there any point in making a console vs PC war post in a 5060/Ti review thread? Come on bro, u are smarter than that xD

Lokaum D+

Member

> Lemme remind u guys just how awful these low-end/entry-level GPUs are by comparing them to the ppl's champion from early 2014, the 750 Ti, which had a fricken $150 MSRP (and actual street price too).
>
> For a solid few years it matched the performance of the $400/€400 base PS4 for a fraction of its price.

I have an i5 3470 + GTX 750 for indies, emulation and old games, and the damn thing is still kicking just fine.


Mr Moose

Member

> Is there any point in making a console vs PC war post in a 5060/Ti review thread? Come on bro, u are smarter than that xD

dgrdsv

Member

> Yeah because multiplayer games will forever be stuck with low requirements.

Forever or not, most of these games are "forever games", so they do tend to be stuck at whatever (low) requirements they launched with.

> Financial hit? You have to be kidding me, they have very healthy margins on every GPU model.

And they won't take a hit on that for no reason, correct. The fact that someone thinks they could or even should means zero.

> You want to play games with lower settings on a 5060 Ti 8GB? You can do that, just know that this GPU is powerful enough to display maximum settings in many games; it just needs that precious VRAM.

This GPU is nowhere close to being powerful enough to "display maximum settings in many games".

> The 8GB model will be worthless on the second-hand market as well. With the MSRP difference currently only $50, the 8GB model is for idiots.

The 8GB model will be fine on the second-hand market because the price is already low enough not to fall much further.

ThisIsMyDog

Member

So... I'm replaying this beast of a game on my new GPU with the DLSS4 mod. Looks great, runs great, can't wait for Resident Evil 9.

ChoosableOne

ChoosableAll

It's disappointing that they haven't offered enough improvement to justify upgrading from the 3060 Ti (apart from the extra 8GB of VRAM ofc). Maybe the 6060 Ti will change my mind. It's surprising to see such small differences after two generations.

ThisIsMyDog

Member

I love multi frame gen x3, it's perfect for my 180Hz monitor: locked at 171fps, so I'm in G-Sync range all the time. Can't feel any added latency. This fluidity..