Gonzito

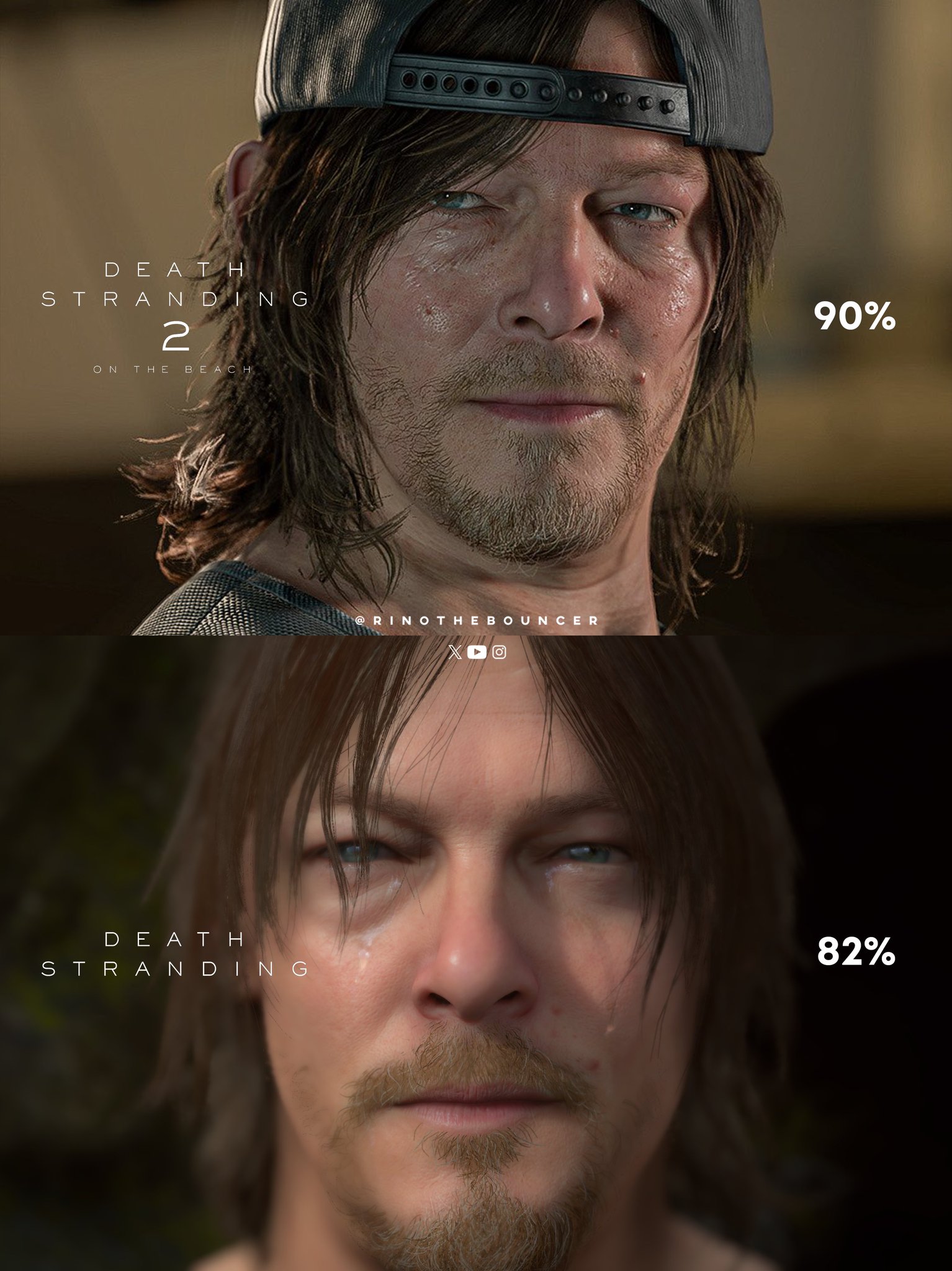

DS2 looks good enough, but image quality and a stable framerate are much more important to me than some RT effects. So I prefer DS2 over any UE5 game on console that runs at 800p with heavy drops. The IQ here is PRISTINE, and sadly that is not a common thing this generation.

This is my main issue with UE5. It can look good, but the image quality is always poor. Even if you use TSR, the ghosting is disgusting, and the performance in general is trash. I say this as a guy with a 4090 and one of the best AMD CPUs on the market.

The engine has potential, but it's not good enough yet. I prefer playing a game like DS2, which looks and feels great in comparison.