Draugoth

Remnant 2 is powered by Unreal Engine 5, making it one of the first games to use Epic's latest engine. The game also supports DLSS 2/3, XeSS, and FSR 2.0. NVIDIA had previously said the game would only support DLSS 2, so the inclusion of DLSS 3 Frame Generation is a pleasant surprise.

For our initial 4K tests, we benchmarked the starting area and the Ward 13 safe zone. The latter features numerous NPCs, so, in theory, it should give results that are representative of the rest of the game.

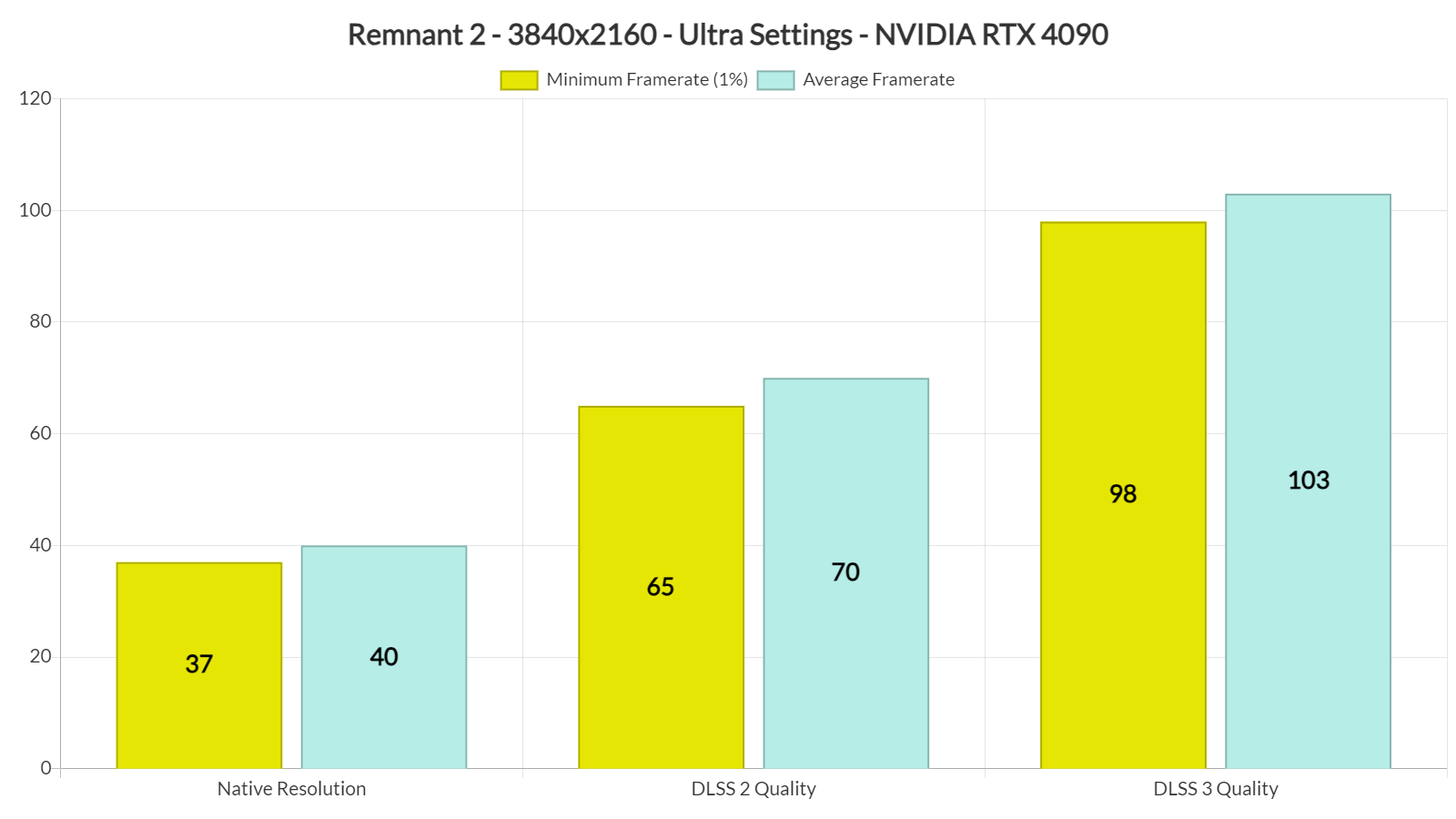

As you can see, the NVIDIA GeForce RTX 4090 cannot run Remnant 2 at 60fps at native 4K with Ultra settings.

At native 4K/Ultra, the RTX 4090 averages 40fps. Enabling DLSS 2 Quality delivers a constant 60fps at 4K/Ultra, and enabling DLSS 3 Frame Generation on top of that adds a further 45-50% performance boost.

The game also suffers from some strange visual artifacts. You can clearly see them on the blades of grass in the video we captured while moving the camera. Artifacts like these are usually caused by upscaling. However, the glitches remain even with no upscaler enabled.

To its credit, the game can be a looker. Gunfire has used a lot of high-quality textures, and there are some impressive global illumination effects. However, its main characters are nowhere near what modern triple-A games can achieve. And while the game looks far better than its predecessor, its visuals do not justify its ridiculously high GPU requirements.

Via DSOGaming