If you exclude the TMUs, the re-pipelining and other physical design optimizations, then there isn't a huge difference between RDNA1 and RDNA2 Compute Units.

However, that's not how reality works, nor how the PS5 or Xbox Series X are built.

The increase in clock speeds relative to RDNA1 GPUs comes from a lot of design work.

Every circuit design has a limit on how fast it can run.

For example, if you have 100 building blocks and 99 of them could reach 2GHz but one only 1GHz, then the whole design has to run at 1GHz if all of them live in a single clock domain.
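To make the arithmetic concrete, here is a minimal sketch of that rule. The function name `domain_fmax_ghz` and the 1.8GHz redesign figure are made up for illustration; only the 99 x 2GHz plus 1 x 1GHz case comes from the example above.

```python
def domain_fmax_ghz(block_fmax_ghz):
    """A single clock domain can run no faster than its slowest block."""
    return min(block_fmax_ghz)


# 99 blocks that could reach 2 GHz plus one block limited to 1 GHz
blocks = [2.0] * 99 + [1.0]
print(domain_fmax_ghz(blocks))  # 1.0

# Hypothetical redesign of the one slow block (1.8 GHz is an assumed number)
blocks[-1] = 1.8
print(domain_fmax_ghz(blocks))  # 1.8
```

The point is that fixing the single slowest block lifts the limit for the whole domain, even though the other 99 blocks were never touched.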

As an architect you would look at that one block and try to raise its clock limit by redesigning the circuitry.

There are multiple ways to improve clock speed, each with different compromises.

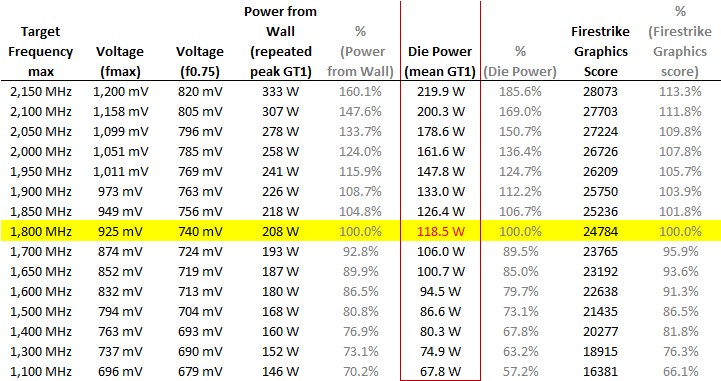

Beyond the "simple" clock speed increase, the efficiency of the whole design was improved by a large factor.

Both the Xbox Series and PS5 are obviously leveraging a lot, if not all, of that RDNA2 work.

Otherwise both would consume much more power, and even the Xbox Series X wouldn't run at 1.825GHz.

The 5700XT runs at similar clock rates in games, but AMD can bin the best chips to run at higher frequencies, and there is also the 5700, which is clocked lower.

For consoles you can't bin the chips into different classes; all of them have to hit 1.825GHz, which is why console designs are usually clocked less aggressively than top-of-the-stack SKUs for desktop or mobile products.
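A rough sketch of why that matters, using invented numbers: the chip frequencies and SKU clocks below are purely hypothetical and not measured data, and `bin_desktop` and `console_yield` are illustrative helpers, not anything AMD uses.

```python
def bin_desktop(chip_fmax_ghz, sku_clocks_ghz):
    """Assign each chip to the fastest SKU whose clock it can sustain."""
    bins = {clock: 0 for clock in sku_clocks_ghz}
    rejected = 0
    for fmax in chip_fmax_ghz:
        usable = [clock for clock in sku_clocks_ghz if fmax >= clock]
        if usable:
            bins[max(usable)] += 1
        else:
            rejected += 1
    return bins, rejected


def console_yield(chip_fmax_ghz, target_ghz):
    """Fraction of chips usable when every chip must hit one fixed clock."""
    return sum(f >= target_ghz for f in chip_fmax_ghz) / len(chip_fmax_ghz)


# Made-up maximum stable clocks for six chips from the same wafer
chips = [1.75, 1.85, 1.90, 1.95, 2.00, 2.05]

# Desktop: two hypothetical SKUs, slower chips go into the slower SKU
print(bin_desktop(chips, [1.7, 1.9]))

# Console: a single target, chips below it are simply lost
print(console_yield(chips, 1.9))   # aggressive target, lower yield
print(console_yield(chips, 1.75))  # conservative target, every chip usable
```

With a product stack the slower chips still ship as a lower SKU, while a console with one fixed clock either lowers the target or throws those chips away.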

The Xbox Series X already runs at 1.825GHz, showcases RDNA2-level energy efficiency and includes the new Render Backend design, which was also optimized for higher clock rates. So you can expect that the silicon could also reach well over 2GHz, which wouldn't be possible with RDNA1 GPUs.

If you go by AMD's definition, CUs include the "Shader Processors and Texture Mapping Units" but not the ROPs.