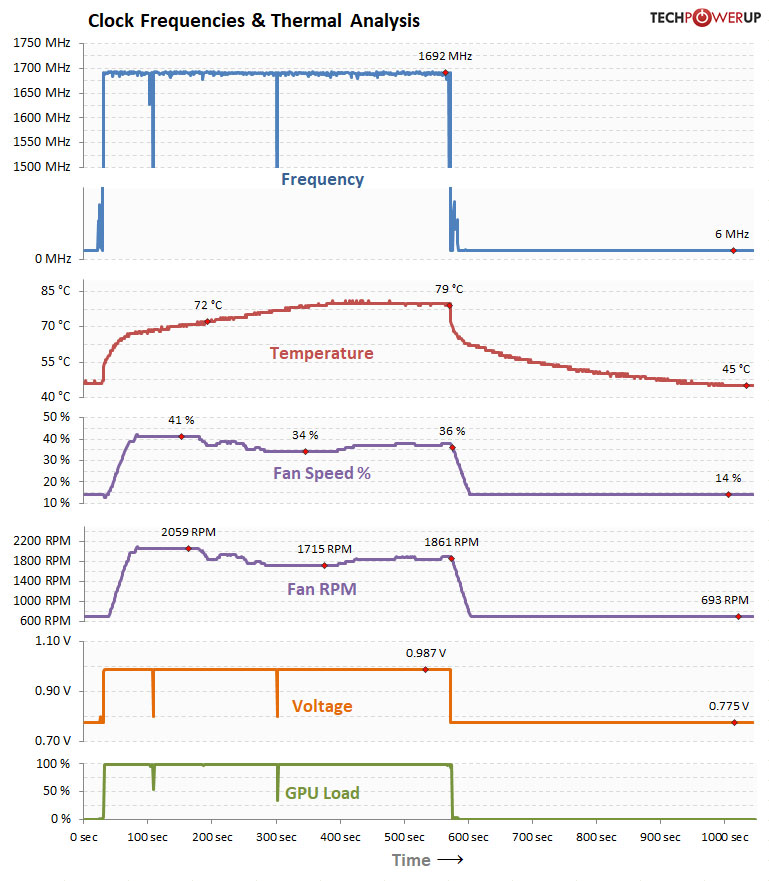

It runs way higher than that.

All reviewers pointed that out.

"which can be boosted up to 2105 MHz" this is marketing PR that turned out to be false... it runs higher than that most of the time.

The AMD marketed clocks are not accurate... they are doing what Nvidia has been doing for generations already.

Understood - and that's pretty much expected for any GPU today (at least in the PC market). They all come now with a base clock and a boost clock. You could also get varying results based on binning, etc. The ONLY thing guaranteed here is the base clock. That way, if you don't get the 2100 MHz ("up to") - you can't state that the GPU/card isn't meeting specs. In theory - you could possibly get the bottom of the bin pile and only get a GPU that runs at 1700 MHz.
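To make the base vs. "up to" distinction concrete, here is a rough Python sketch. The `classify` helper is made up for illustration, and the 1700 MHz base clock is an assumption borrowed from the bottom-of-the-bin example above, not a published spec. Only the 2105 MHz "up to" figure comes from the quoted marketing text.

```python
def classify(observed_mhz: float, base_mhz: float, boost_mhz: float) -> str:
    """Compare an observed clock to the advertised base and "up to" boost clocks.

    Only the base clock is treated as a guarantee; the boost figure is a
    non-binding "up to" number that a card may fall short of or exceed.
    """
    if observed_mhz < base_mhz:
        return "below spec"
    if observed_mhz <= boost_mhz:
        return "within spec"
    return "within spec, above advertised boost"


BASE_MHZ = 1700   # hypothetical base clock (assumption, for illustration only)
BOOST_MHZ = 2105  # advertised "up to" boost clock from the quoted text

for observed in (1650, 1700, 2105, 2300):
    print(observed, "MHz:", classify(observed, BASE_MHZ, BOOST_MHZ))
```

Under these assumptions, a card stuck at 1700 MHz still meets spec, and a card running above 2105 MHz is simply boosting past the advertised number.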

Nvidia cards do the exact same thing (I get quite a significant perf/boost bump out of my water-cooled 2080 FE, above the spec'd base/boost clocks).

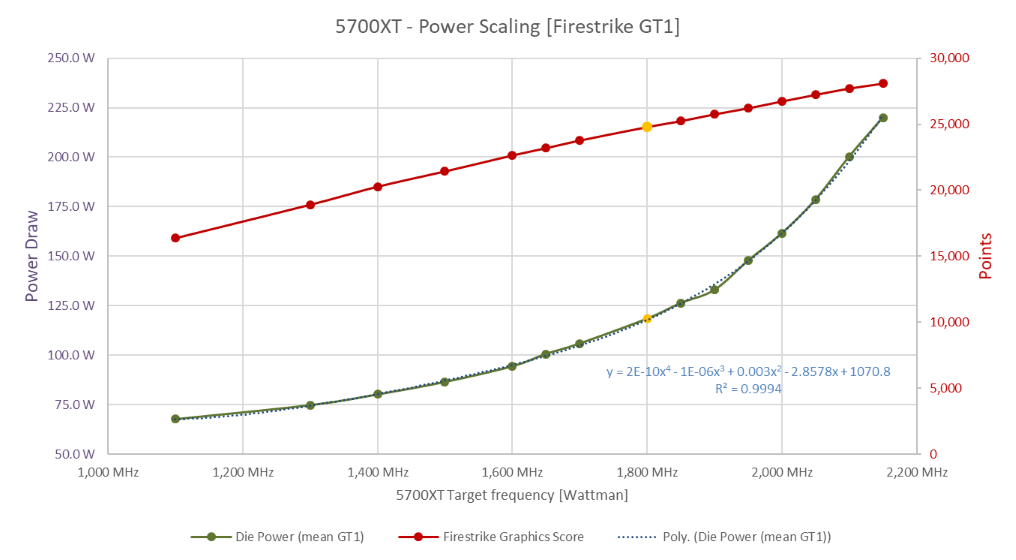

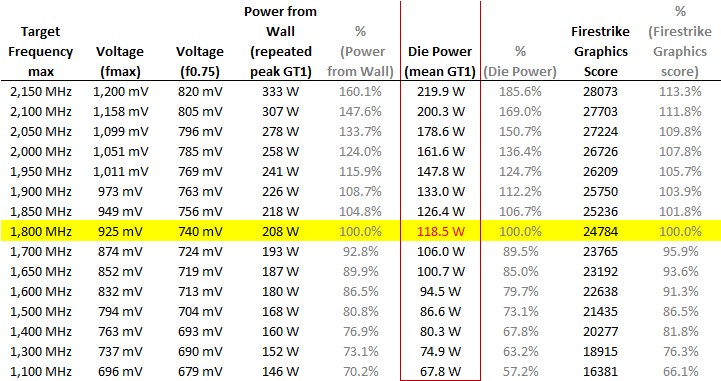

The first thing everyone does is try to OC the damn things to see what is optimal performance for the card. That's what TechPowerUp did with the article you quoted.

Generally speaking - GPU manufacturers will report minimum base and boost clocks for the GPU chip. Then, depending on the actual card design (AIB vs reference), in most instances you'll see higher performance based on several other factors with respect to the card/cooling solution implemented.

In the old days - "reference" designs were just that - you wouldn't expect much of a bump over base specs, and typically you wouldn't want to buy the reference card unless you planned to water cool it.

AMD and Nvidia changed that recently because they wanted to be more competitive in the market to actually sell their own branded cards.

Extending this further - you could buy a "lesser" card (e.g. a 1070), water cool it, and OC the crap out of it to get 1080+ performance for significantly less investment.

With respect to the XSX vs PS5 comparison in GPU clocks... IMHO - we're seeing a similar approach with the respective target architectures/designs.

I'm not surprised that we're seeing these early current/cross-gen games run "better" (at least in certain instances) on the PS5 due to the higher (variable) clock rate compared to the locked/lower clock rate of the XSX.

Regardless of all of this chatter around the IC and the RDNA 1 vs 2 debate...

The real question is - over time, as the various studios/developers update and transition their respective overall software architecture/game engines to implement and adopt these newer technologies within their games (VRS, ML, Mesh Shading, etc.) - will the "fast and narrow" design of the PS5 be able to keep up with the "fat/wide/slower" design of the XSX?
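For a rough sense of what "fast and narrow" vs "fat/wide/slower" means on paper, here is a back-of-the-envelope Python sketch of theoretical peak FP32 throughput. The CU counts and clocks are the publicly announced console specs as I understand them (PS5: 36 CUs at up to 2.23 GHz, XSX: 52 CUs at 1.825 GHz), not numbers from this thread. The 64 lanes per CU and 2 FLOPs per lane per clock (one fused multiply-add) are the usual RDNA assumptions, and `peak_fp32_tflops` is just an illustrative helper name.

```python
def peak_fp32_tflops(compute_units: int, clock_ghz: float,
                     lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Theoretical peak FP32 TFLOPS: CUs * lanes * FLOPs per clock * clock in GHz."""
    gflops = compute_units * lanes_per_cu * flops_per_lane * clock_ghz
    return gflops / 1000.0


# Assumed public specs; the PS5 clock is a variable "up to" figure.
ps5 = peak_fp32_tflops(compute_units=36, clock_ghz=2.23)
xsx = peak_fp32_tflops(compute_units=52, clock_ghz=1.825)

print(f"PS5: {ps5:.2f} TFLOPS (narrow, fast)")
print(f"XSX: {xsx:.2f} TFLOPS (wide, slower)")
```

This only captures peak shader math. It says nothing about how well a given engine keeps the extra CUs fed, which is really the open question here.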

There was a very similar discussion years ago about buying a 2080 versus a 1080 Ti - as they both performed similarly in raw/basic FPS and game-engine rasterization at that time. When the 2080 was released - it didn't really make much sense to "upgrade" to it if you already owned a 1080 Ti.

However, since then - games have been updated to take advantage of the new capabilities/features of the 2080.