
PSSR Patent speculation/discussion

- Thread starter cormack12

cormack12

Gold Member

> One of the reasons I felt the presentation was lacking is that it was clearly a marketing-first buzz reel. I wanted Mark Cerny to serenade my ears with a technical deep dive into the various components that are new in the PS5 Pro.

100% - I rewatched some of the Road to PS5 before and it was so much better. And Cerny looked so much younger. I have questions about the 12-channel SSD interface and how that scales, for example.

Skifi28

Member

The presentation was way too short, with too little real info, and the games they decided to showcase were the worst possible choices, considering they already had good image quality to begin with. I can only imagine they didn't want to throw other developers under the bus by showing how bad 720p with FSR2 really is, and it would also make the base PS5 look meh in those scenarios. Here's hoping we get much more info at TGS.

LiquidMetal14

hide your water-based mammals

Good luck getting that in 9 minutes. They have plenty of time to talk about their stuff, including when it comes out. That's the stuff I love digging into. It was a formal unveiling, not a 2-hour comprehensive walkthrough.

Good your Cerny candle ASMR session.

SyberWolf

Member

Cerny said in the video that they added additional custom hardware for AI processing (upscaling/framegen).

PSSR is the Sony/AMD version of DLSS that needs AI "tensor core" type hardware to run.

It is a separate "chip" on the GPU die, just like Nvidia.

It uses the same technique as DLSS, has a library of supported games, and it uses that to reconstruct a higher quality image.

SweetTooth

Banned

I agree with you. I wanted to hear a technical deep dive like Road to PS5, but apparently Sony keeps those, understandably so, for mainline consoles only.

TrebleShot

Member

I am really interested in this upscaler.

Recently I have seen limitations in DLSS. Don't get me wrong, it's still by far the very best upscaler out there, but it's still an upscaler and there's a noticeable drop in quality even in Quality mode.

I'd love to see competition without frame gen that can push the boundaries.

Personally I am hoping it can look like DLDSR, where you get supersampling. Some games may be able to utilise this with great performance.

Mahavastu

Member

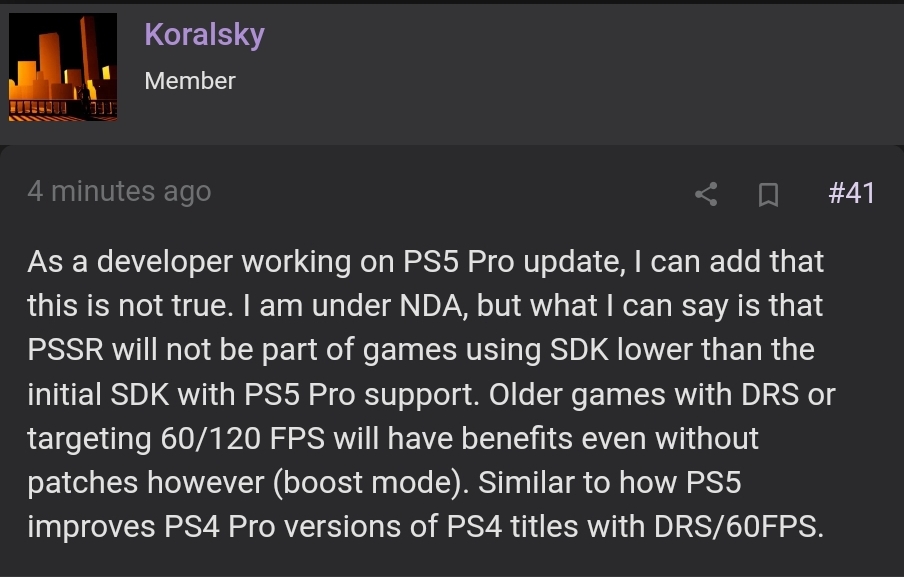

Hmm, this would be bad.

There were rumors a few months ago that Sony was using a trick to make PSSR work with older APIs, so that games could be upgraded more easily and quickly without many changes.

Seems the rumors were wrong.

cormack12

Gold Member

I'm guessing it means they just need to recompile the game against the latest SDK, as the SDK they used initially wouldn't have had that functionality.

I imagine the QA/testing will be the biggest overhead of that, or if functions are now deprecated/route versioned they will need updating.

Mahavastu

Member

I don't know how compatible the PS5 SDK stays over time. Can you just take the source of a 2020 PS5 game, recompile it with the latest SDK and it "just works"?

If so, this would not be a problem.

RaySoft

Member

It depends on how many library calls they used that are now deprecated/obsolete and have been replaced by a different call in the new SDK. That new call could take different data inputs etc., so there may be some work to make it compatible. And then there is QA on top of that.
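As a rough illustration of the porting work described above, here is a minimal Python sketch of a compatibility shim. Every name in it (`present_frame`, `new_present_frame`, the `upscale` field) is hypothetical and invented for the example; none of it is taken from the PS5 SDK, whose actual API is not described in this thread. It only shows the general pattern: the old call keeps its signature and forwards to a replacement that wants its inputs in a different shape.

```python
import warnings


def new_present_frame(config):
    """Hypothetical replacement call: takes one config dict with an extra required field."""
    return f"present {config['width']}x{config['height']} upscale={config['upscale']}"


def present_frame(width, height):
    """Hypothetical deprecated call, kept as a shim so old game code still compiles.

    It repackages the old positional arguments into the new input format and
    picks a default for the field the old API never had.
    """
    warnings.warn(
        "present_frame(width, height) is deprecated; use new_present_frame(config)",
        DeprecationWarning,
        stacklevel=2,
    )
    return new_present_frame({"width": width, "height": height, "upscale": "off"})
```

Even when a shim like this is all that is needed, each changed call still has to go through QA, which is the overhead the posts above point to.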

poppabk

Cheeks Spread for Digital Only Future

I don't have access to DLSS (6900XT), but blind tests have DLSS Quality mode looking as good as or better than native in most games. What are you seeing that is a noticeable drop in quality? FSR3 in Quality mode upscaling to 4K looks pretty solid to me other than in some specific games (Rogue City).

TrebleShot

Member

Yeah, so I have a 4090, so I can use DLSS or not. With DLSS on Quality, something like Space Marine or even Outlaws lacks a little bit of punch. It's hard to describe, but imagine the solid depth of native 4K vs. an ever so slight blurring. It's very close, but the overall image isn't as deep or sharp.

diffusionx

Gold Member

Based on my experience, DLSS is not as good as native. It can get close depending on the game, but it's not magic.

JimboJones

Member

DLSS does a decent job of holding up under scrutiny from many players sitting close to a monitor.

I think pisser is gonna have a way easier time convincing players in the living room setting.

twilo99

Member

I was really hoping he would share details on the amazing breakthroughs they've done with the Zen2 CPU, but alas, he kept that under wraps.

Ovech-King

Member

They already had similar tech on their TVs with the "Reality Creation" setting. It works extremely well for gaming, especially on big screens where you can easily see the low res. I'm sure they are using some of that in there.

"Reality Creation is Sony's AI powered sharpening. The standard sharpening setting (which Sony still has) applies edge enhancement to the entire screen regardless of content whereas Reality Creation tries to intelligently apply different amounts of sharpening to the various parts of the image. So it's clever enough to detect faces, foliage and architecture as well as being able to distinguish intentionally soft (out of focus) areas from low resolution blurry images.

The end result is similar to cranking the standard sharpening setting but with fewer negative side effects such as ringing artefacts (white lines)."
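To make the difference between uniform and content-aware sharpening concrete, here is a small Python sketch on a single row of brightness values. It is not Sony's Reality Creation algorithm, which is not public and reportedly classifies content such as faces and foliage. As an assumed stand-in, it uses a plain unsharp mask whose gain is reduced where local contrast is already high, which is one simple way to limit the overshoot that shows up as ringing. The function names and the `edge_limit` parameter are made up for the example.

```python
def box_blur(row):
    """3-tap box blur with the edge pixels clamped."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3.0 for i in range(n)]


def uniform_sharpen(row, amount=1.0):
    """Classic unsharp mask: the same gain is applied to every pixel."""
    blurred = box_blur(row)
    return [p + amount * (p - b) for p, b in zip(row, blurred)]


def adaptive_sharpen(row, max_amount=1.0, edge_limit=50.0):
    """Unsharp mask whose gain shrinks as local contrast grows.

    Weak detail gets close to the full gain, while strong edges get less,
    so they overshoot less than with uniform sharpening.
    """
    blurred = box_blur(row)
    out = []
    for p, b in zip(row, blurred):
        detail = p - b
        gain = max_amount / (1.0 + abs(detail) / edge_limit)
        out.append(p + gain * detail)
    return out


# A hard dark-to-bright edge: uniform sharpening overshoots more on both sides.
edge = [10.0, 10.0, 10.0, 200.0, 200.0, 200.0]
print(uniform_sharpen(edge))
print(adaptive_sharpen(edge))
```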

kevboard

Member

that's always dependent on the game.

try Doom Eternal or Death Stranding.

TAA native VS DLSS Quality mode

DLSS will look better in both games

ap_puff

Banned

> This is not a patent for an upscaler.
>
> This is for image error correction. For example, when playing a video and some data is missing, this algorithm fills in the holes by interpolating data from previous frames.

Are you sure? The process looks like they are applying some sort of algorithmic approach to image reconstruction, such as checkerboarding and then filling in the missing checkerboarded pixels via ML.
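The error-correction reading of the patent (missing data filled in from previous frames) can be sketched in a few lines of Python. This is only the simplest possible version of the idea, under the assumption that a lost pixel is replaced by the pixel at the same position in the previous frame. It is not the patent's method: the `MISSING` marker and `conceal` function are invented for the example, and a practical system would normally also account for motion between frames.

```python
MISSING = None  # marker for a pixel whose data never arrived


def conceal(current, previous):
    """Fill every missing pixel in `current` with the co-located pixel from `previous`."""
    return [
        [prev_px if cur_px is MISSING else cur_px for cur_px, prev_px in zip(cur_row, prev_row)]
        for cur_row, prev_row in zip(current, previous)
    ]


previous_frame = [[1, 2], [3, 4]]
current_frame = [[5, MISSING], [MISSING, 8]]
print(conceal(current_frame, previous_frame))  # [[5, 2], [3, 8]]
```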

winjer

Member

Read the patent. Then read the tech for any modern upscaler.

Rossco EZ

Member

Hopefully this can tide you over until that day!

ap_puff

Banned

Never mind, I did read the patent.

This looks to be some way of dealing with corruption of image data for wireless VR.

FalsettoVibe

Member

Can't wait to see what Pisser brings to the table myself.

polybius80

Member

Considering the comments of the "experts" on the last technical deep dive, it's understandable why they changed the format.

Yeah, and we already discussed this patent some time ago.

PaintTinJr

Member

From reading the patent, it sounds virtually impossible for this not to be a far superior solution to DLSS, simply because the strategy is so much more sophisticated:

The final image will actually have at least 1/4 of the output resolution rendered natively (so 1080p's worth of pixels in a 4K output), rather than being 100% predicted from a lower mipmap like DLSS/XeSS.

In, say, an FPS where most of the holes will be superfluous data, the gamer is still getting a native 4K render for picking (hit detection), mouse/pad movement accuracy and FOV.

PSSR's natively rendered pixels in the final image will all come from high-frequency detail or curved/silhouette geometry edges, so if the gamer is mainly concentrating on high-frequency data, which is normal, they aren't looking at inferred data but at real, natively rendered data.

And, although this probably isn't implemented in the solution yet:

Once the reconstruction is complete and the 3/4 of hole data has been inferred, the algorithm can, as a corrective neural network node, remove the native 1/4 pixel data from the image and use the reconstructed 3/4 data to predict that 1/4 too. It can then compare that prediction to the absolute truth of the natively rendered 1/4 pixels to work out an inverse error for the 3/4 pixels that were reconstructed from the training, feed that back into the training (using sparse matrix capability), and predict the 3/4 even better in a 2nd pass.
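The scheme described in this post (1/4 of the pixels rendered natively, 3/4 inferred, then a check of the inference against the native pixels) can be sketched in Python under heavy simplifying assumptions. This is neither PSSR nor the patent's method. A neighbourhood average stands in for the neural network, the native pixels sit on a fixed one-in-four grid rather than on high-frequency detail, and all function names are invented for the example. What it does show is the self-check: hide each native pixel, predict it from the reconstructed pixels around it, and measure how far off the prediction is.

```python
def native_mask(width, height):
    """True where a pixel is rendered natively: one pixel in every 2x2 block."""
    return [[x % 2 == 0 and y % 2 == 0 for x in range(width)] for y in range(height)]


def neighbours(x, y, width, height):
    """Coordinates in the 3x3 neighbourhood of (x, y), excluding (x, y) itself."""
    return [
        (nx, ny)
        for ny in range(max(y - 1, 0), min(y + 2, height))
        for nx in range(max(x - 1, 0), min(x + 2, width))
        if (nx, ny) != (x, y)
    ]


def fill_holes(sparse, mask):
    """Stand-in for the ML step: each hole becomes the mean of nearby native pixels."""
    height, width = len(sparse), len(sparse[0])
    out = [row[:] for row in sparse]
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                known = [sparse[ny][nx] for nx, ny in neighbours(x, y, width, height) if mask[ny][nx]]
                out[y][x] = sum(known) / len(known)
    return out


def holdout_error(filled, mask):
    """Re-predict each native pixel from reconstructed neighbours; return the mean absolute error."""
    height, width = len(filled), len(filled[0])
    errors = []
    for y in range(height):
        for x in range(width):
            if mask[y][x]:
                guess = [filled[ny][nx] for nx, ny in neighbours(x, y, width, height) if not mask[ny][nx]]
                if guess:
                    errors.append(abs(sum(guess) / len(guess) - filled[y][x]))
    return sum(errors) / len(errors) if errors else 0.0


# 1/4 of a 4K frame is exactly a 1080p frame's worth of pixels.
assert 3840 * 2160 // 4 == 1920 * 1080
```

In a learned system the error returned by `holdout_error` would be the signal fed back into training or into a second pass. Here it is only reported.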

ap_puff

Banned

That sounds like something you'd do in a server farm instead of at runtime. And I'm pretty sure Nvidia has been using a similar process to train DLSS.

PaintTinJr

Member

No, they aren't. This is patented tech now, so they'd have to license it to use any of it with DLSS.

If you think this reconstruction algorithm can't be done in 2ms on the Pro, then you probably need a bit more background reading or a primer in regression maths to understand how ML AI upscaling works. The GoW Ragnarok paper gives a good feel for the real-time compute costs of inference, even if it doesn't detail the specifics of the offline training.