Leonidas

AMD's Dogma: ARyzen (No Intel inside)

PowerColor leaks Radeon RX 7800 XT Red Devil, Navi 32 with 3840 cores and 16GB confirmed - VideoCardz.com

Red Devil RX 7800 XT has been revealed by PowerColor

PowerColor has made a huge mistake by revealing their Radeon RX 7800 XT graphics card before AMD. The new RX 7800 XT Red Devil graphics card boasts a new RDNA3 GPU equipped with 3840 Stream Processors, just as many cores as the last-gen RX...

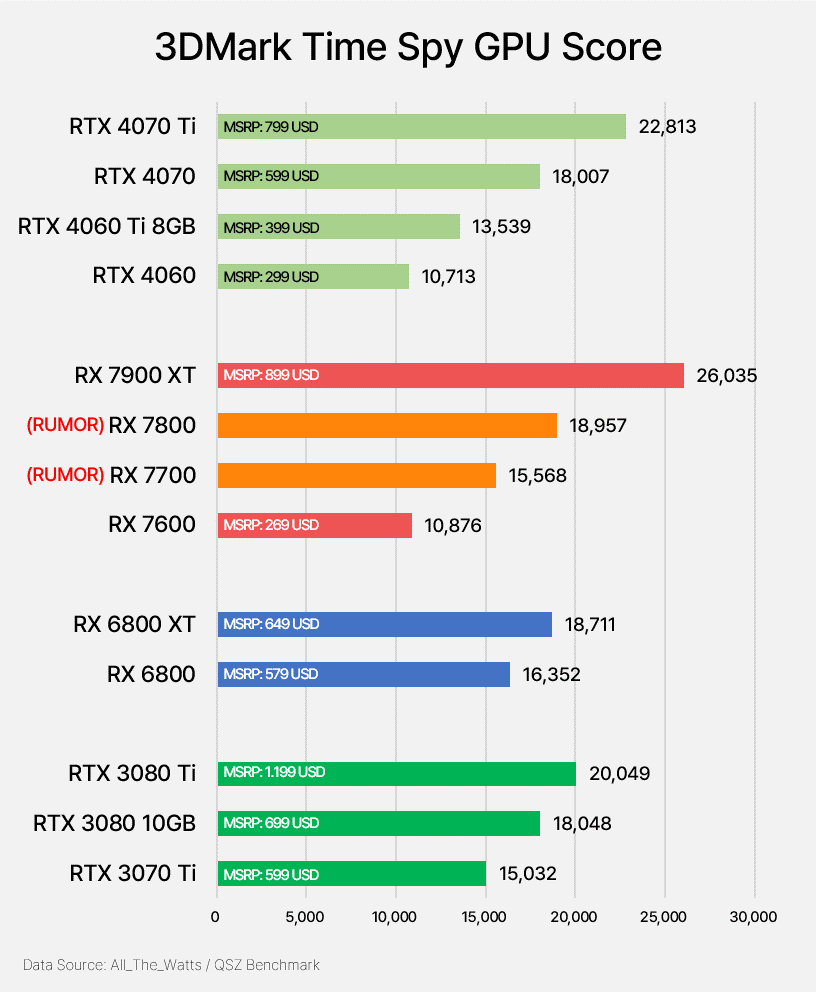

Not looking good. 6800 XT had 20% more CUs. Sad to see AMD give such a GPU the x800 XT name.
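Quick sanity check on that 20% figure. This sketch assumes 64 stream processors per CU, which is how AMD counts them on both RDNA2 and RDNA3. It also uses the RX 6800 XT's published 4608 SPs, a number that does not come from the leak itself.

```python
# Assumption: 64 stream processors per compute unit (AMD's RDNA2/RDNA3 spec-sheet convention)
SP_PER_CU = 64

def compute_units(stream_processors: int) -> int:
    """Convert a stream processor count into compute units."""
    return stream_processors // SP_PER_CU

rx7800xt_cus = compute_units(3840)  # SP count from the PowerColor leak
rx6800xt_cus = compute_units(4608)  # RX 6800 XT's published SP count

# Relative CU advantage of the 6800 XT over the 7800 XT
extra = (rx6800xt_cus - rx7800xt_cus) / rx7800xt_cus
print(rx7800xt_cus, rx6800xt_cus, f"{extra:.0%}")  # prints: 60 72 20%
```

The result is 60 CUs vs 72 CUs, so the last-gen card really does have 20% more. Per-CU clock and architecture gains would have to cover that gap.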

This could actually be worse than the predecessor...
