Resident Evil 5 PC Benchmark

- Thread starter bee

Metalic Sand

who is Emo-Beas?

Curufinwe said: Does motion blur affect performance much, and is it an option most of you guys plan to use?

Nope, I always turn that shit off. Some prefer Vaseline effects on their screen, though.

Edit: For performance, I'm sure there is a small hit.

Xdrive05

Member

careksims said: Extremely awesome.

Got a B at a constant 59.9FPS

Intel Core 2 Quad Cpu

Nvidia GeForce GTX 260

1280x720x32bit

DX 10

2X AA

VSYNC on

Awesome to see it run at that speed and tons of enemies. I have the PS3 version so this is a mighty step up! Will definitely get it.

Great, now try it with vsync off and see what the game looks like at 200fps! If you're going to vsync cap it at 720p/60fps you might as well turn on 16xQ AA. Your fps will still be 60 and it will look much, much better at that res.

Enjoy!

Xdrive05 said: Great, now try it with vsync off and see what the game looks like at 200fps! If you're going to vsync cap it at 720p/60fps you might as well turn on 16xQ AA. Your fps will still be 60 and it will look much, much better at that res.

Enjoy!

Thanks! I'll try it out.

-------

Okay I tried it!

First with no vsync and Maxed AA. Haha! S Ranking. Getting between 70-100+fps

With vsync on and maxed AA = constant 60FPS.

I am happy with that

fizzelopeguss

Banned

It won't run for me; I get some DX9 ERR09: unsupported function or some bollocks. Strange, because my computer demolishes the SFIV benchmark and game.

1280x800 with everything on low and no AA had me at 29 and a half fps, roughly. Way lower during the cutscene part, because apparently your settings have no effect on the cutscene models. (Why not just make it a movie file then?)

DX9 instead of DX10 got me an extra 5 fps or so.

Gonna have to upgrade.

Currently running a 9400GT card with an Intel E2200 dual core processor. Lame, but it's what came off the shelf.

PC GAF, should I upgrade my card first or my processor? Also, I'm trying to stay with Nvidia and under $150 for my card, as I am just not able to drop 250-300 bucks on a card, especially since I am going to have to upgrade my ASS power supply, too. (250 Watts, what are you thinking, HP?) Is a GTS 250 a decent choice?

Minsc

Gold Member

K.Jack said: Hmm, let's see what ye old notebook can muster @ 1680x1050 max settings. Downloading now.

You must feel so outdated with the 2x 280M GTX laptops coming out next month. :lol

Xdrive05

Member

Minsc said: You must feel so outdated with the 2x 280M GTX laptops coming out next month. :lol

Christ almighty! The battery life while gaming must be measured in seconds...

Htown said: PC GAF, should I upgrade my card first or my processor? Also, I'm trying to stay with Nvidia and under $150 for my card, as I am just not able to drop 250-300 bucks on a card, especially since I am going to have to upgrade my ASS power supply, too. (250 Watts, what are you thinking, HP?) Is a GTS 250 a decent choice?

If you're setting $150 as a budget, you can probably squeeze in a GTX 260. Big improvement over the GTS 250.

Definitely upgrade the video card over the CPU. CPU should be your last priority, where you can maybe pick up an E8400 or something. You can probably get more juice out of your CPU by just overclocking it though, if your motherboard allows for it (is it custom built, or a manufacturer PC?).

I like that screen.

Shadow780 said: FML, updated to the newest driver, tried CF Xtension on or off. SAME result. :lol :lol

I'm beginning to think this is a CF thing, never had a problem with SFIV benchmark though, it ran perfectly.

http://i26.tinypic.com/2baas1.jpg

http://i26.tinypic.com/t9wc5e.jpg

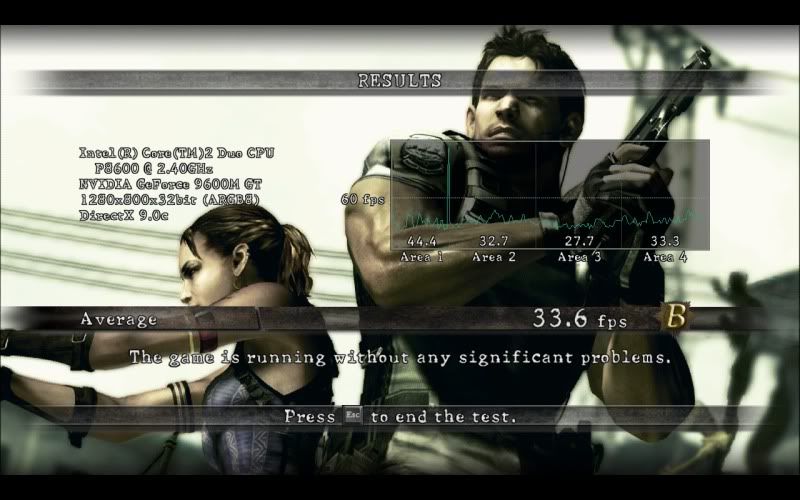

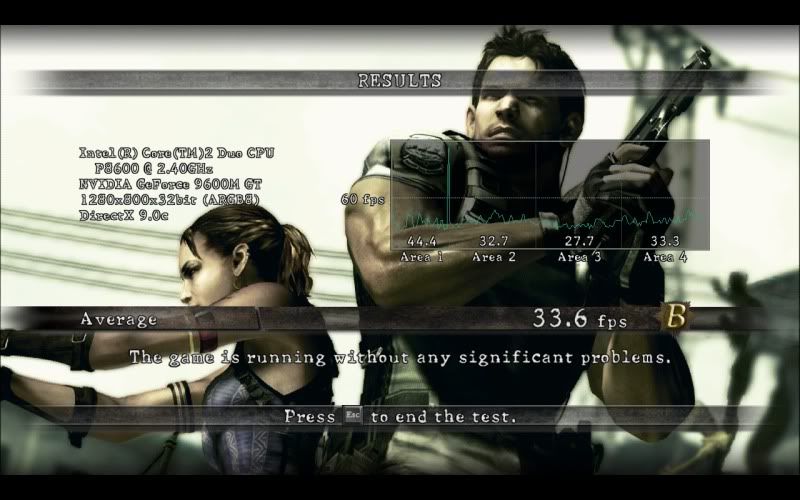

B

The game is running without any significant problems.

How About No

Member

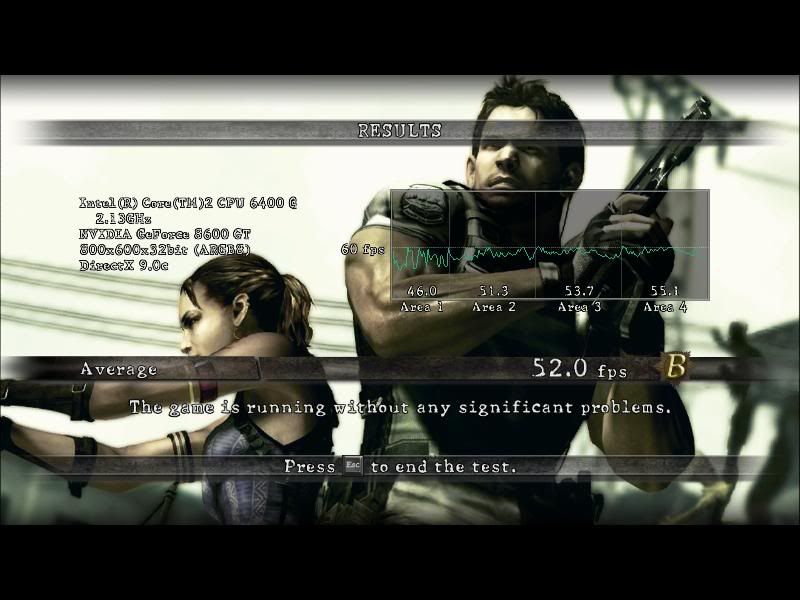

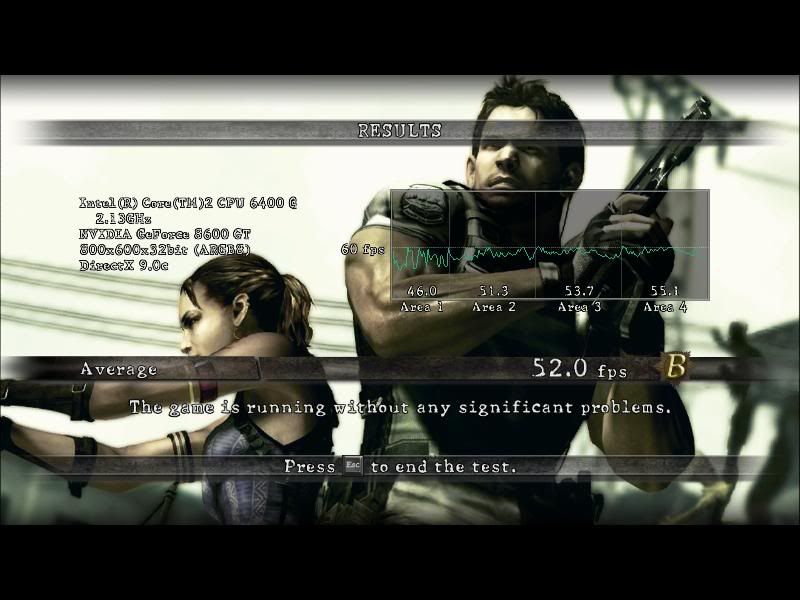

Low(RE4 Wiiish) Settings

Higher/PS3ish Settings

Yeah not looking too grape =/

Baloonatic

Member

Stupid question, but what difference does DX10 actually make? Like, what does it improve graphically?

I only ask because I can get 60fps in the gameplay sections using DX9 when I have AA turned off running at 1920x1080 (I don't even really notice jaggies that much on higher resolutions) so I'll probably just stick with that. I can't really see a difference myself but I guess I would if I knew what to look for.

How About No

Member

I think I noticed more bloom on objects. Some said it performs better than DX9.

Baloonatic said: Stupid question, but what difference does DX10 actually make? Like, what does it improve graphically?

I only ask because I can get 60fps in the gameplay sections using DX9 when I have AA turned off running at 1920x1080 (I don't even really notice jaggies that much on higher resolutions) so I'll probably just stick with that. I can't really see a difference myself but I guess I would if I knew what to look for.

CabbageRed

Member

Youch. My 4870x2 got stopped halfway through the DX10 variable demo (20-30fps) although everything else was running at 50+fps. Hopefully things will be a bit more smooth at launch (and ATI will be on the ball getting a profile out).

The DX9 version started off at 30fps so I just quit it.

Action Jackson

Member

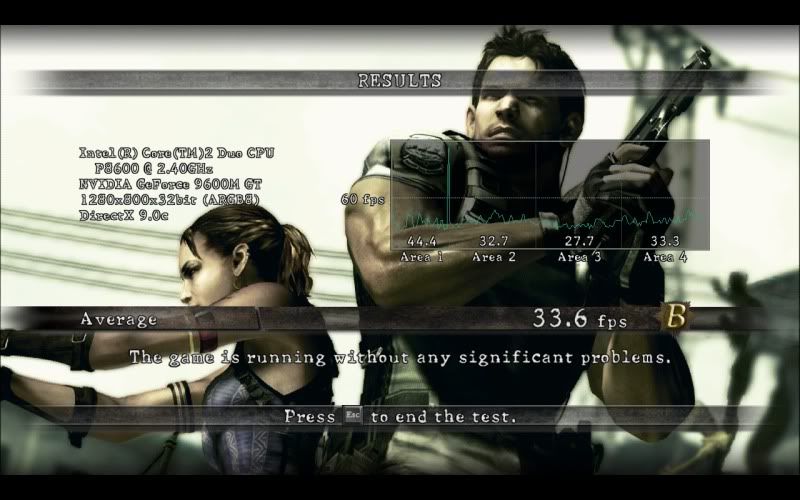

Laptop benchmark (everything on high, no AA).

WTF is this garbage?

Screenshots won't show up for some reason, but it drops to about 15fps in Area 3. Only got 37fps in the fixed benchmark (max settings at 1080p), I was expecting 70-80fps.

I have a 1GB 4890 with the latest 9.6 Catalyst version. I got 95fps with everything maxed out in the SF4 benchmark.

Why does this thing hate ATI? I wasn't taking the Nvidia logo seriously, did they actually fuck this up? Wow.

Dr. Light said: WTF is this garbage?

Screenshots won't show up for some reason, but it drops to about 15fps in Area 3. Only got 37fps in the fixed benchmark (max settings at 1080p), I was expecting 70-80fps.

I have a 1GB 4890 with the latest 9.6 Catalyst version. I got 95fps with everything maxed out in the SF4 benchmark.

Why does this thing hate ATI? I wasn't taking the Nvidia logo seriously, did they actually fuck this up? Wow.

Same problem here. So it wasn't just me, then.

TheExodu5 said: ATi has had some problems with a few games recently. The Last Remnant runs pretty terribly on ATi. No clue why. Far Cry 2 performance isn't stellar either.

Last Remnant was fixable. Hopefully the retail version will be too.

Shadow780 said: Last Remnant was fixable. Hopefully the retail version will be too.

Out of curiosity, what was the fix? I'm wondering what the problem stems from in the first place.

Action Jackson said: Laptop benchmark (everything on high, no AA).

Same rig, same numbers

TheExodu5 said: Out of curiosity, what was the fix? I'm wondering what the problem stems from in the first place.

Well, it's not really a fix per se, more of a compromise. Just turn shadow quality to anything but high; the game ran smoothly for me afterwards. Oh, and force-enable CF.

Baloonatic

Member

Shadow780 said: Well, it's not really a fix per se, more of a compromise. Just turn shadow quality to anything but high; the game ran smoothly for me afterwards.

Yeah I had to do the same. Was awful before that. I also had to do it with Lost Planet.

ChoklitReign

Member

What's the difference in IQ between DX9 and DX10?

ChoklitReign said: What's the difference in IQ between DX9 and DX10?

No word on it yet, but I'll try to take some shots when I get home.

Xdrive05

Member

It's nice to see the 8800 cards still kicking ass after all these years. Those were insanely future proof as it turned out. Partially a function of coming out when they did in the console cycle, no doubt.

RE5 looks fantastic and fun @ 60fps+ in high resolutions. Might have to actually pick it up this time around!

Ikuu said: 65.7 FPS on DX9, Highest Settings and 4xAA

40-odd on DX10, Area 3 ran like shit for some reason

1920x1200

3.2GHz Q6600

4870 1GB

4GB RAM

Windows 7

So it's just a DX10 issue with the ATi cards. Not a big deal then.

I <3 Memes

Member

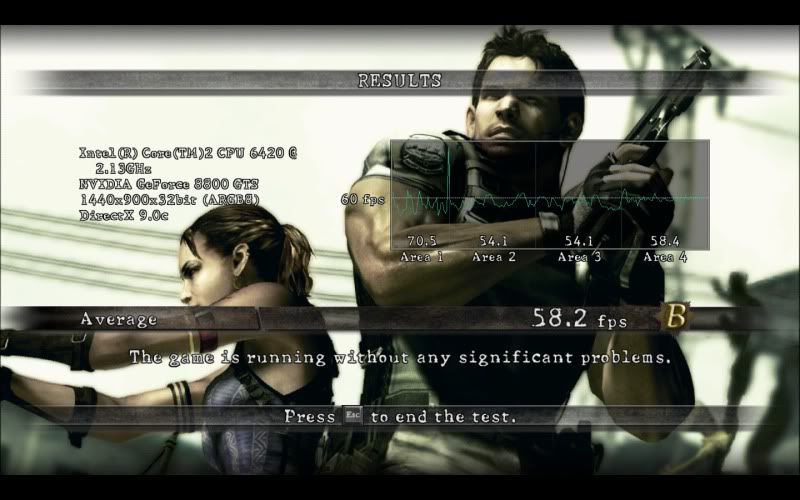

Running this on all high settings

Motion blur on

Vsync off

4xAA

1440x900

My results were a little lower than I expected.

Some screenshots.

This game is definitely more GPU-bound than CPU-bound. Not a big fan of the motion blur in this game, although it does look better in motion than it does in the screenshots.

gregor7777

Banned

I never run games in DX10 on my 4830. Everything I've tried runs glitchy as all hell. All sorts of weird issues.

All of that goes away when I run in DX9.

sevenchaos

Banned

E8400

8800GT

Windows 7

Tried both DX10 and 9 on my monitor (1680x1050) and my HDTV (720p) with everything maxed. No AA.

Couldn't really tell a difference between 9 and 10. Bounced between 45 and 60 fps on the monitor. Was getting over 60 (about 70) on the HDTV.

Game looks great. Will buy.

godhandiscen

There are millions of whiny 5-year olds on Earth, and I AM THEIR KING.

Area 3 has some awesome explosions in DX10 mode. My GTX295 took a hit down to 60fps from the usual 90fps it was getting. I can see where people are coming from.

Dr. Light said: Wow. I just tried running at DX9 (same settings, 1920x1080 8xAA maxed) and got 70.3fps. Area 3 was smooth as silk.

What the hell is going on here?

Not only ATI, as I said, Area 3 has some hardcore explosions.

Ikuu said: There is obviously something wrong with ATi cards running DX10 in Area 3.

Asskicker064

Member

Is co-op still in?

TheExodu5 said: ATi has had some problems with a few games recently. The Last Remnant runs pretty terribly on ATi. No clue why. Far Cry 2 performance isn't stellar either.

Hopefully it's fixed in a coming Catalyst update.

Really? I upped my old 8800 to an ATI 4770 ($99, why not?), and I was surprised how well it ran compared to some other games.

...They did abruptly discontinue the 4770, so I hope I don't get screwed on new drivers.

I'll post my RE5 scores when it finishes downloading.

Thanks for the link OP.