EdibleKnife

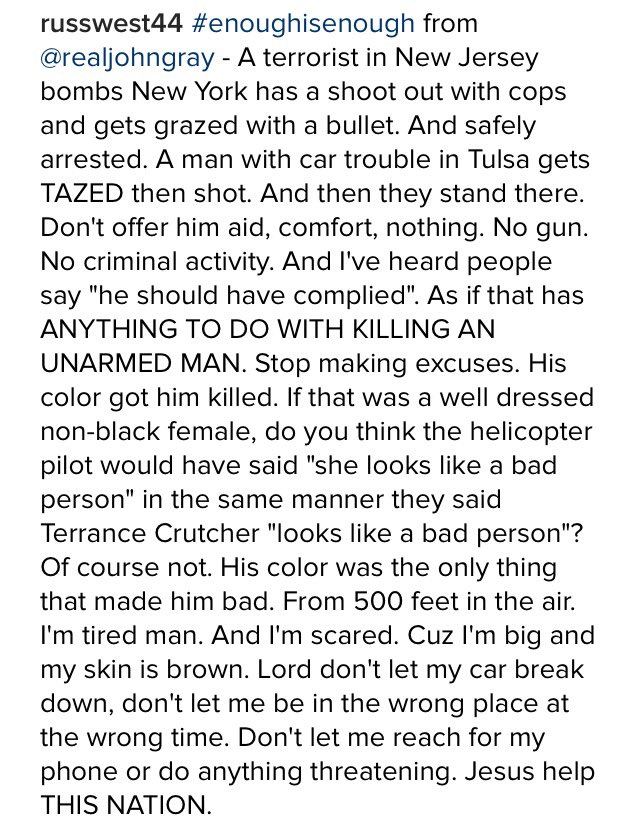

There is what?! Children sleep tonight and for the rest of their lives without a parent? Who gives a flying fuck what was in his car? He didn't reach for anything but the sky.

He's saying "there it is" to the police pulling out their justifications for seeing him as a threat. It's an expected part of these police shootings now.