Negotiator

Banned

For the case of Uncharted 2, the FMV cutscenes would have to be compressed further

Not true.

ND used MPEG-2 for cutscenes on PS3, not MPEG-4 (H.264).

They had ample space on BD-ROM, but it's not like they had unlimited amounts of compute for more elaborate decompression (H.264) or dedicated video co-processors.

Sounds like religion lol.

Assembly programming is a religion now?

Fine, I'll take it!

Witcher 3 was ported to the Switch. It's a matter of optimization.

UC2 had tons of physics... what are you gonna do with them? XBOX 360 didn't have 150 Gflops of compute power. It has nothing to do with DVD vs Blu-Ray.

UC3 even had a real-time ocean sequence with real-time physics that was actually playable, not a cutscene:

On a PC you'd need a GTX 280 for something remotely equivalent:

Cell with a GeForce 8 GPU would have been nuts!

First parties really pushed that hw back then, but since Uncharted Collection runs on PS4, Cell magic clearly can be ported to sorta regular PC architecture

PS4 is not a regular PC architecture, it uses a customized GCN GPU that tries to replicate Cell SPUs:

Not to mention PS4 is like 5-10 times more powerful than the PS3... maybe XBOX ONE could run UC2-3 with no cutbacks on physics, but definitely not the 360.

1) I mean even Titanfall runs better on X360 than on XB1!

2) The developers who really compared both (at the end of the gen when all platform specific tricks were used) found out X360 was quite more powerful.

1) Ridiculous statement. Source?

XB1 is far more powerful than the X360. Just because it failed commercially, it doesn't mean it's weaker. That's why it supports 360 BC easily.

2) Quite the opposite.

Late 7th gen multiplatforms run better on PS3 with better graphics settings.

Did you see Witcher 3 on the Switch?!

So yeah, Uncharted 2 on the 360 was fucking possible

Witcher 3 is a physics-heavy game?

Would love to know if Uncharted on the Vita is using the same engine as the PS3. Is this known?

Golden Abyss is even worse than UC1 on PS3. It used pre-rendered backgrounds in real-time gameplay.

Vita is definitely not a portable PS3. Better than the PS2, worse than the PS3 in terms of compute.

Trunx81

Member

Golden Abyss is even worse than UC1 on PS3. It used pre-rendered backgrounds in real-time gameplay.

Vita is definitely not a portable PS3. Better than the PS2, worse than the PS3 in terms of compute.

So it is the Uncharted engine? I always thought they just took the Syphon Filter engine or smth similar and tweaked it.

Of course the Vita's not a PS3.

Negotiator

Banned

So it is the Uncharted engine? I always thought they just took the Syphon filter engine or smth similar and tweaked it.

Of course the Vita's not a PS3.

It's a custom engine made by Bend, but they also had some help from ND:

Postmortem: Sony Bend Studio's Uncharted: Golden Abyss

In this incredibly detailed postmortem, Sony Bend dives deeply into the development of Uncharted: Golden Abyss for the PlayStation Vita, explaining precisely what roadblocks held back the system's premier launch title.

Trunx81

Member

It's a custom engine made by Bend, but they also had some help from ND:

Postmortem: Sony Bend Studio's Uncharted: Golden Abyss

In this incredibly detailed postmortem, Sony Bend dives deeply into the development of Uncharted: Golden Abyss for the PlayStation Vita, explaining precisely what roadblocks held back the system's premier launch title. (www.gamedeveloper.com)

1h and 8 min read. You just saved my evening. Thanks!

bitbydeath

Member

If they could get MGS4 on 360 I'm sure they could find a way here if they wanted. Multiple discs and an install.

I memba

Kumomeme

Member

I think the main issue is DVD vs. Blu-ray disc, that's all. As for the rest, I doubt it's anything big enough to hold everything back.

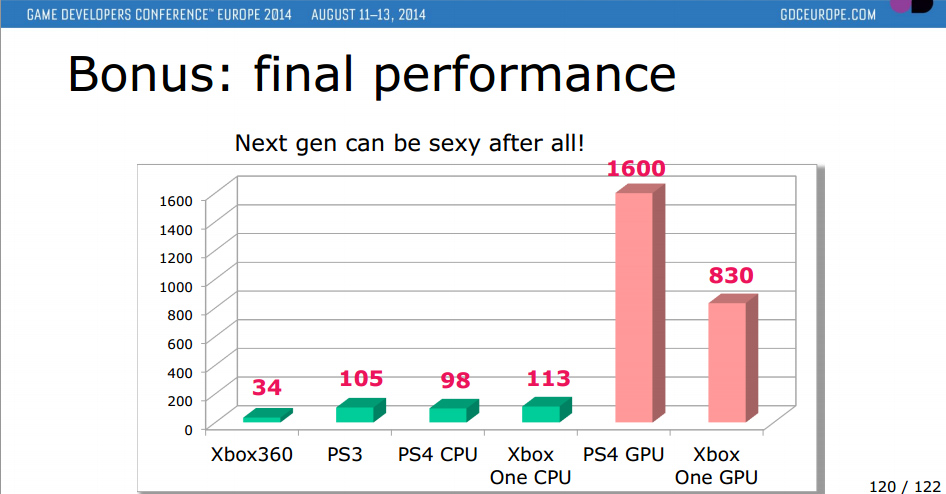

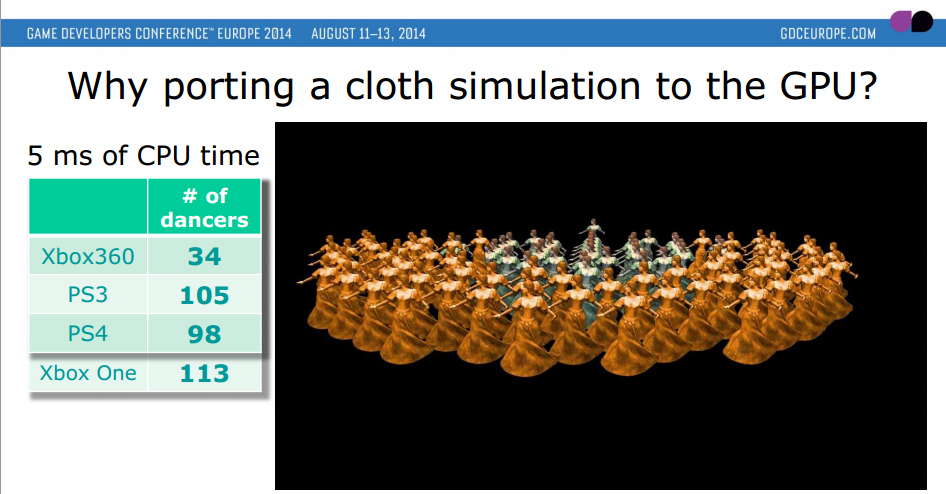

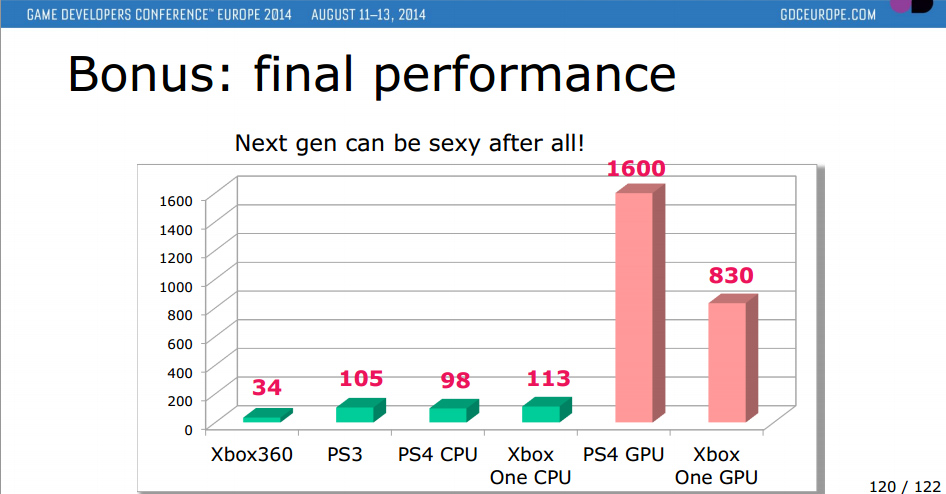

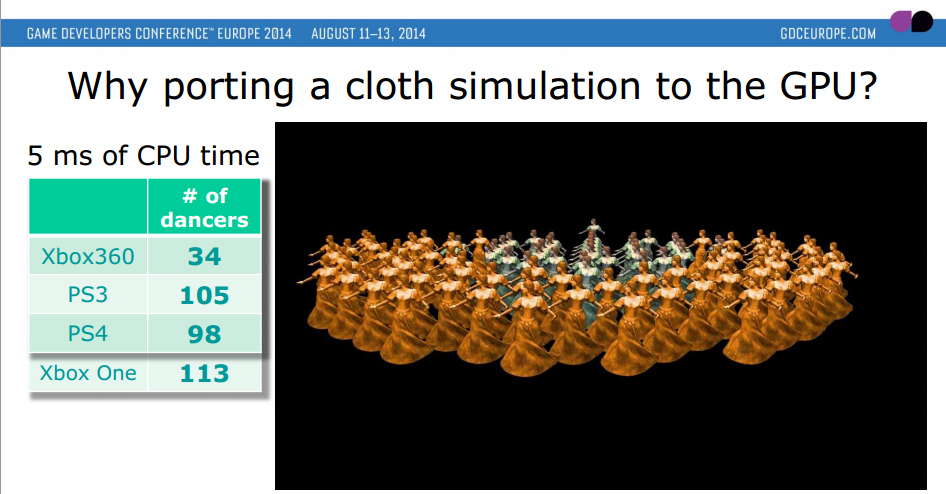

I'm no expert, but what we see here is that the PS3 should have an advantage in running more simulated objects per screen. So far, though, given the linear nature of Uncharted 2, I don't think what we got is on the level of something that can't be run on Xbox 360. Well, maybe I'm wrong, so correct me if that's the case.

On paper the Cell processor is crazy powerful, but at the same time the Xbox 360 also has a better GPU.

On the other hand, Metal Gear Rising runs at a higher fps (60) on PS3, with better shadow filtering quality and draw distance than on Xbox 360, while Shadow of Mordor on both PS3 and Xbox 360 has a similarly limited version of the Nemesis System's A.I. features compared to its PS4/X1 counterpart. Well, this might also be down to the developers behind the scenes. There are also other demanding games that run on par or better on Xbox 360.

Either way, I doubt the game can't be run on the console. At most, there might be minor graphical differences, or differences in the way it handles loading or cutscenes/cutscene bitrate quality (due to Blu-ray vs. DVD), that's all.

adamsapple

Or is it just one of Phil's balls in my throat?

2) Quite the opposite.

Late 7th gen multiplatforms run better on PS3 with better graphics settings.

That's not necessarily true.

I think you're referring to GTA V here primarily. Other than a ground texture blur difference, the texture quality, resolution, performance etc is the same. The only real major example that was clearly better on PS3 was FF13 where it ran at a higher resolution and better performance, but that was mostly pulled back and they became identical with XIII-2 and Lightning Returns. There's other examples like Portal 2 where you can say the PS3 might be better because of MLAA vs 360's older AA solution, but other than that they had the same resolution and performance.

But there were other big late-gen games that ran notably better on 360 as well. Phantom Pain (already posted on the last page) ran long stretches of 10~ fps faster on 360 and Crysis 3 ran not only a higher resolution, but also higher average performance.

PaintTinJr

Member

That's not necessarily true.

I think you're referring to GTA V here primarily. Other than a ground texture blur difference, the texture quality, resolution, performance etc is the same. The only real major example that was clearly better on PS3 was FF13 where it ran at a higher resolution and better performance, but that was mostly pulled back and they became identical with XIII-2 and Lightning Returns. There's other examples like Portal 2 where you can say the PS3 might be better because of MLAA vs 360's older AA solution, but other than that they had the same resolution and performance.

But there were other big late-gen games that ran notably better on 360 as well. Phantom Pain (already posted on the last page) ran long stretches of 10~ fps faster on 360 and Crysis 3 ran not only a higher resolution, but also higher average performance.

You posted the cinematic at 10fps less. The in-game graphics are working much harder on the PS3 with more geometry and shader precision, as was true of pretty much every multi-plat without a "better or equal clause" that xbox relied on all gen to feed DF negative PS3 coverage, but naturally a cinematic favours more memory in a single location, so there's that. But MGS5 isn't a patch on the fx in MGS4, anyway.

A better example is Split Second: the cutting edge PS3 light probe gathering system it used, which became a staple technique for indirect lighting in the industry from then on, was missing from the 360 version, and the game was tearing with soft v-sync, sub-HD, and frequently less than 30fps on 360. That was despite the studio being huge fans of the 360, going by their article praise, the 360 render farm they had, and the Sony/PS3 criticisms their studio tech lead wrote in Develop magazine throughout that gen. They still managed native 720p with a solid frame-rate, hard v-sync, and a cutting edge lighting feature on the SPUs on PS3, because the reality was that the PS3 in the hands of skilled developers was significantly more powerful, like all the first party games showcased.

RaidenJTr7

Member

MGS V and Crysis 3 were possible on X360, and they are better looking games with real-time in-game cinematics, while Uncharted 2/3 used pre-rendered FMV files.

PaintTinJr

Member

... on paper Cell Processor is crazy powerful but at the same time Xbox 360 also has better gpu. ...

That is a very selective take on the features. The Xenos was 8bit(24bit) only when the RSX was 10bit(30bit) and did HDR as mentioned in the Drake's Fortune making of. The Xenos was hard limited to 500-600Mpolys/s like all ATI GPUs of the time, but could render optimally regardless of data supplied or polygon ordering, whereas the RSX could reach 1.1Billion polys/s but struggled with poorly supplied data, and needed optimal quad meshes ordered to optimise self culling.

Equally, for all the Xenos eDRAM performance for fill-rate, the size of the memory meant it lost lots of efficiency beyond its intended 1024x768 frame-buffer size. That caused real performance efficiency problems for double buffering native 1280x720 at full colour and z precision (impacting fog equations), or with HDR, or native with stereoscopic 3D when they eventually revised the 360 hardware with HDMI 1.2. All of these were easy for the RSX, which excelled even further when triple buffering.

And then there's the issue of the Xenos not being able to do true sRGB gamma correction, even when eating up shader cycles to mimic it, which was a free ASIC feature on the RSX.

The Xenos had some brute force strengths but was old hat because of the missing features and lack of ability to properly support 720p.
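The frame-buffer sizing argument above can be sanity-checked with some quick arithmetic. The sketch below is only an illustration: the 10 MiB budget is the commonly cited size of the 360's eDRAM (not a figure given in this thread), the 4 bytes of colour plus 4 bytes of depth/stencil per sample are assumptions, and `framebuffer_bytes` and `fits` are hypothetical helper names.

```python
# Rough render-target footprint check (illustrative assumptions only).
EDRAM_BYTES = 10 * 1024 * 1024  # assumed budget: commonly cited 10 MiB of eDRAM


def framebuffer_bytes(width, height, color_bytes=4, depth_bytes=4, samples=1):
    """Bytes for one colour + depth/stencil target at the given MSAA sample count."""
    return width * height * (color_bytes + depth_bytes) * samples


def fits(width, height, **kwargs):
    """True if the target fits in the assumed eDRAM budget without tiling."""
    return framebuffer_bytes(width, height, **kwargs) <= EDRAM_BYTES


for label, (w, h, s) in {
    "1024x768, no MSAA": (1024, 768, 1),
    "1280x720, no MSAA": (1280, 720, 1),
    "1280x720, 2x MSAA": (1280, 720, 2),
}.items():
    size_mib = framebuffer_bytes(w, h, samples=s) / (1024 * 1024)
    print(f"{label}: {size_mib:.2f} MiB, fits={fits(w, h, samples=s)}")
```

Swapping in a wider colour format (for example `color_bytes=8` for an FP16 target) shows how quickly the footprint grows; whether that matches what any given game actually did is a separate question.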

Zathalus

Member

PS4 is not a regular PC architecture, it uses a customized GCN GPU that tries to replicate Cell SPUs:

Pretty sure this is in reference to the Async Compute Engines in the PS4, which the PS4 had 8 of. GCN 1.0 didn't have these but they were added in GCN 2.0.

adamsapple

Or is it just one of Phil's balls in my throat?

You posted the cinematic at 10fps less. The in-game graphics are working much harder on the PS3 with more geometry and shader precision

huh ?

more geometry and shader precision? where ?

A better example is the absence of the cutting edge PS3 light probe gathering system used in Split Second missing from the 360 - which became a staple technique from then on in the industry for Indirect lighting - and the game tearing with soft v-sync, and being sub-HD, and being less that 30fps frequently on 360, despite them being huge fans of the 360 from their article praise, and 360 render farm they had, and the Sony/PS3 criticisms their studio tech lead wrote in develop magazine throughout that gen, but still managed native 720p with solid frame-rate, hard vsync, and cutting edge lighting feature on the SPUs, because reality was the PS3 in the hands of skilled developers was significantly more powerful, like all the first party games showcased.

The highlighted things you've said are mostly false and can easily be found out, not sure why you're writing them.

Xbox 360 vs. PlayStation 3: Round 26

Another month, another multi-game Xbox 360 vs. PlayStation 3 Face-Off. Let's get the party started with all the stats, …

1. X360 had better visual effects:

The particle buffer has also been cut down. Both versions have low-resolution smoke, but the PS3's alpha buffer seems to be running with a pared-down horizontal resolution, leading to the occasional odd-looking artefact you don't see on 360.

2. X360 had higher level of post process and LoD:

For example, the Xbox 360 version features a higher level of post-processing in environments at further distances compared to the PS3 game.

3. Both X360 and PS3 had tearing, the PS3 dropped more frames

Engine stress can see tearing on 360 but very rarely do we see any kind of drop in overall frame-rate. PS3 has more tearing in similar scenarios and can be prone to dropping frames.

4. The only way the PS3 version was ahead was a slight increase in resolution

the 360 game is 1280x672 (as confirmed in the DF tech interview), up against native 720p on PS3.
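To put the resolution gap quoted above into numbers, here is a small sketch that counts pixels per frame for the two figures from the Digital Foundry quote (1280x672 on 360, 1280x720 on PS3). `pixel_count` is just an illustrative helper name.

```python
def pixel_count(width, height):
    """Pixels rendered per frame at a given resolution."""
    return width * height


x360 = pixel_count(1280, 672)  # figure quoted for the 360 version
ps3 = pixel_count(1280, 720)   # native 720p on PS3

extra = ps3 / x360 - 1.0
print(f"360: {x360} px, PS3: {ps3} px, PS3 renders {extra:.1%} more pixels")
```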

as was true of pretty much every multi-plat without a "better or equal clause" that xbox relied on all gen to feed DF negative PS3 coverage, but naturally a cinematic favours more memory in a single location, so there's that. But MGS5 isn't a patch on the fx in MGS4, anyway.

oh, it's one of those posts.

Lysandros

Member

That is a very selective take on the features. The Xenos was 8bit(24bit) only when the RSX was 10bit(30bit) and did HDR as mentioned in the Drake's Fortune making of. The Xenos was hard limited to 500-600Mpolys/s like all ATI GPUs of the time, but could render optimally regardless of data supplied or polygon ordering, whereas the RSX could reach 1.1Billion polys/s but struggled with poorly supplied data, and needed optimal quad meshes ordered to optimise self culling.

Equally, for all the Xenos eDRAM performance for fill-rate, the size of the memory meant it lost lots of efficiency beyond its intended 1024x768 frame-buffer size, causing real performance efficiency problems for double buffering native 1280x720 at full colour and z precision - impacting fog equations - or with HDR, or native with stereoscopic 3D, when they eventually revised 360 hardware with HDMI 1.2, which were all easy for the RSX, which excelled even further when triple buffering.

And then there's the issue of the Xenos not being able to do true sRGB gamma correction, even when eating up shader cycles to mimic it, which was a free ASIC feature on the RSX.

The Xenos had some brute force strengths but was old hat because of the missing features and lack of ability to properly support 720p.

There are also misleading figures flying around as to max theoretical compute ceilings. Apparently the RSX operated at different clock speeds for its pixel and vertex pipelines: the pixel side is 211 GFlops/4.4 Gpixels at 550MHz and the vertex side is 40 GFlops at 500MHz, so 251 GFlops in total. The X360's Xenos is at 240 GFlops/4 Gpixels (unified).

Whole system for PS3: CELL BE 192+RSX 251=443 GFlops. Whole system for X360: Xenon 115+Xenos 240=355 GFlops. Not that it matters more than architectural differences.
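The totals above are plain sums of the per-chip figures quoted in the post. A minimal sketch, taking those GFLOPS numbers as claimed (they are the poster's figures, not independently verified here):

```python
# Theoretical peak GFLOPS as quoted in the post (unverified claims).
claimed_gflops = {
    "PS3": {"Cell BE": 192, "RSX pixel": 211, "RSX vertex": 40},
    "X360": {"Xenon": 115, "Xenos": 240},
}

totals = {console: sum(parts.values()) for console, parts in claimed_gflops.items()}
rsx_total = claimed_gflops["PS3"]["RSX pixel"] + claimed_gflops["PS3"]["RSX vertex"]

for console, total in totals.items():
    print(f"{console}: {total} GFLOPS")
print(f"PS3 / X360 ratio: {totals['PS3'] / totals['X360']:.2f}")
```

As the post itself notes, peak figures like these say little about how the two architectures behave on real workloads.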

Negotiator

Banned

That's not necessarily true.

I think you're referring to GTA V here primarily. Other than a ground texture blur difference, the texture quality, resolution, performance etc is the same. The only real major example that was clearly better on PS3 was FF13 where it ran at a higher resolution and better performance, but that was mostly pulled back and they became identical with XIII-2 and Lightning Returns. There's other examples like Portal 2 where you can say the PS3 might be better because of MLAA vs 360's older AA solution, but other than that they had the same resolution and performance.

It's not just GTA V.

Far Cry 3:

The SPU’s are hungry - Maximizing SPU efficiency on Far Cry 3

The Far Cry 3 PS3 engine is extremely SPU-intensive, with over 60 different job types and 1,000 job instances executed each frame. Much development effort has been spent optimizing the scheduling and execution of SPU jobs—in particular in reducing the scheduling load on the PPU. This article...

Assassin's Creed Rogue:

Face-Off: Assassin's Creed Rogue

Designed with last-gen consoles in mind, Assassin's Creed Rogue slipped under the radar somewhat owing to the controver…

With the console versions of Assassin's Creed Rogue, there's a definite sense that the conversion work across both platforms isn't as closely matched as 2013's Black Flag. The Xbox 360 version is softer and noticeably fuzzier than the PS3 release: while both versions utilise a form of FXAA that attempts to mimic traditional multi-sampling style coverage across edges (but considerably blurring the image in the process), the PS3 version renders natively at 720p whereas a sub-HD resolution is in place on the Microsoft console. Pixel counting puts the ballpark native resolution on the 360 at around 1200x688.

Beyond the framebuffer set-up, we find the core art and most of the effects work is interchangeable between PS3 and 360, although there are some unexpected differences between the two platforms that were not present in Black Flag. For one, SSAO is present on PS3, helping to add depth to characters and the environment, while on Xbox 360 the effect is completely absent, lending more brightly lit scenes a generally flatter appearance. Secondly, in most cases we find that streaming is generally slower on 360, with low resolution textures left on-screen (sometimes without normal maps) for a few seconds during changes in camera angles in some cut-scenes, and when transitioning to gameplay. The situation is much improved on PS3, where the majority of the best quality assets are usually loaded in before the scene begins.

And if you want to see what a true metamorphosis looks like, have a look at Zone of the Enders HD Collection:

http://hexadrive.sblo.jp/article/71326168.html (it's in Japanese, but you can use Google Translate)

Zone of the Enders: how Konami remade its own HD remake

How the Second Runner finally got the remastering treatment it deserved.

The primary focus of this patch, then, was clearly performance. The patched version now runs very close to its target 60fps in even the most demanding scenes, with v-sync engaged at all times. This level of performance exceeds both the original HD release and the original PlayStation 2 version, though cut-scene performance sees less of an increase, with the original version occasionally besting the updated release (at the expense of visual quality). One of the key changes implemented by HexaDrive to solve the performance problem is the utilisation of the PlayStation 3's SPUs to handle the workload previously designed for PlayStation 2's vector units. ZOE 2 pushed the PlayStation 2 to its limits and the RSX simply doesn't have the muscle on its own to power through it without help. HexaDrive balanced performance across the entire system, including the SPUs, in order to avoid bottlenecks, resulting in a 10x increase in performance across the board.

They went from 720p30 to 1080p60 with a free patch!
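For a sense of scale on that jump, the sketch below compares raw output pixels per second at 720p30 and 1080p60. It only counts pixels pushed to the display and says nothing about per-pixel cost, so treat it as a rough illustration and not a performance measurement; `pixels_per_second` is a hypothetical helper name.

```python
def pixels_per_second(width, height, fps):
    """Output pixels per second for a given resolution and frame rate."""
    return width * height * fps


before = pixels_per_second(1280, 720, 30)   # 720p30
after = pixels_per_second(1920, 1080, 60)   # 1080p60

print(f"720p30: {before:,} px/s")
print(f"1080p60: {after:,} px/s")
print(f"ratio: {after / before:.1f}x")
```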

Regarding MGS4, I don't know why people keep mentioning it. I played it and wasn't really impressed by the graphics. Is it just me?

Just because a game is exclusive on a certain platform, it doesn't necessarily mean it's fully optimized/properly coded.

Same for Bloodborne. It's a PS4 exclusive, but definitely not the best graphics showcase. I'm not arguing if it's a good game, that's subjective.

Some 3rd party games (like Doom 2016, Doom Eternal, Wolfenstein 2) are far better coded (GCN-optimized/Async Compute).

If you ask me, I believe exotic/custom architectures will make a comeback in the future.

The "most people/coders are too lazy" argument will hold no water once AI is able to write assembly on par with human handwritten code in seconds, not to mention that the slowdown of Moore's Law will make it absolutely necessary to exploit transistor budget/die space in the most efficient manner possible.

Who knows, maybe one day ChatGPT could even design its own Cell processor successor?

adamsapple

Or is it just one of Phil's balls in my throat?

It's not just GTA V.

Far Cry 3:

Assassin's Creed Rogue:

No one would argue there weren't multi-plat games that ran better on PS3 now and then too, but I think it's a safer bet that through the generation, more multi-plat games ran better on X360 than PS3.

By 2012/2013, it was less lop-sided in 360's favor but they were still trading 'wins' every now and then.

RE: ZoE patch, Konami never commissioned anyone to patch the X360 version of the game so we can't really compare the post-patched version.

RE: MGS4. it's brought up often because it's that one elusive game that never got a 360 version everyone wanted, and was touted as something only doable on the PS3 back in the day. One of the first real major examples of its kind. But much later after the fact, we learned via Koji Pro producers that they had a version of the game running on the 360 devkits, ready to be ported, but the port was refused by Kojima directly.

Producer claims that Metal Gear Solid 4 "ran beautifully" on Xbox 360 | LevelUp

Ryan Payton revealed that members of Kojima Productions were Sony fans and rejected the Microsoft console

But then again, Kojima also came out and made some weird remarks like how MGS4 was the best the PS3 can do and how they over-estimated how strong the PS3 was going to be.

Hideo Kojima says Metal Gear Solid 4 is the best the PS3 can do

Link Looks like Kojima Jr. isn't the only one disappointed with Metal Gear Solid. The latest issue of the UK's Edge magazine contains an interview with Metal Gear creator Hideo Kojima, who expresses disappointment with the latest game in the series, Metal Gear Solid 4: Guns of the Patriots...

I remember saying three years ago that we wanted to create something revolutionary, but in reality we couldn't really do that because of the CPU. We're using the Cell engine to its limit, actually. Please don't get me wrong, I'm not criticizing the PS3 machine, it's just that we weren't really aware of what the full-spec PS3 offered - we were creating something we couldn't entirely see.

Horseganador

Member

PS3 is more powerful than the X360, but it only demonstrated that with engines and games built exclusively for it. It seemed like a different console with exclusives than with multis: Bayonetta vs God of War 3, for example.

PaintTinJr

Member

Without us having these discussions per game at the time of these games releasing with non-DF sources, I'm not sure what I'm looking at, now, or would be arguing over. I did own a 360 at the time of the Split Second releasing and it felt like a significant downgrade in the demo compared to the PS3 AFAIR, even ignoring the lighting technique.

Although it is interesting you didn't comment on the light probe gathering technique on the SPUs, which was very significant and way beyond what the 360 could do. It also rings alarm bells that the 360 couldn't even manage 720p for the game without it.

As for MGS5, I'm not sure what you are showing me, or why we have 2 1080p images from a game that only was 1080p on PS4 of all four versions.

Check out the very first face comparison in this 4-way. This is official Konami footage, so above reproach, and you can see the 360 face features are all soft and lacking the sharpness of the other 3, especially on the eye patch and around the nose. Then notice how close tonally the PS3 looks to PS4 at night with normal mapped dirt in the first comparison, and how they sensibly don't show that comparison with the 360, which then looks quite saturated in the daylight with a flat monotone dust/dirt straight after, with statically lit tents in the background looking sprite-esque.

adamsapple

Or is it just one of Phil's balls in my throat?

Without us having these discussions per game at the time of these games releasing with non-DF sources, I'm not sure what I'm looking at, now, or would be arguing over. I did own a 360 at the time of the Split Second releasing and it felt like a significant downgrade in the demo compared to the PS3 AFAIR, even ignoring the lighting technique.

If you can find what you claim to be an unbiased source substantiating your claims, you're welcome to post it.

But based on the one source we have, the X360 version of Split / Second looked and ran better.

Although it is interesting you didn't comment on the light probe gathering technique on the SPUs which was very significant and way beyond what the 360 could do, and is a bit of alarm bells when it couldn't even manage 720p for the game either without.

Because there's nothing to comment about?

The 4 way picture you've shown shows no perceptible difference between the PS3 and 360 versions. Only anecdotal ones at best.

And later you've posted a comparison from completely different places and times of day, which doesn't really show anything.

PaintTinJr

Member

... Because there's nothing to comment about?

It makes the lighting significantly more coherent - ie the game looks better with it, hence why the technique was reworked for general indirect lighting in games since.

The 4 way picture you've shown shows no perceptible difference between the PS3 and 360 versions. Only anecdotal ones at best.

The picture was just so you'd know where to look in the video. At Full HD or above it is noticeable and indicative of either lower resolution being upscaled more, or lower precision shader work.

And later you've posted a comparison from completely different places and times of day, which doesn't really show anything.

If you were someone that knew a lot more than DF, you wouldn't think that.

The night time scene on Ps3 has real-time dynamic lighting in the distance and foreground from multiple light sources showing off some quality rendering on the PS3, and good enough, that without a label could pass as PS4 footage in video right up until a direct comparison with PS4, because all the fx are there, just at a lower fidelity.

The failure to compare like for like in that moment is on Konami - probably as it doesn't show the game favourably in this marketing video - but the day time shot is more forgiving of lower frequency in a quick wipe, and only exposes the short range of shader fx running on the 360 version easily when viewing as a still because of the forward casting shadows. But half way up the image in the background the dynamic lighting/shaders are all disabled and we're just looking at baked textures, and the graphical composition workout is harder on the PS3 shot anyway, so both images seem representative of what Konami think each system can manage well with lighting and shaders to use for marketing.

adamsapple

Or is it just one of Phil's balls in my throat?

The failure to compare like for like in that moment is on Konami - probably as it doesn't show the game favourable in this marketing video - but the day time shot is more forgiving of lower frequency in a quick wipe, and only exposes the short range of shader fx running on the 360 version easily when viewing as a still because of the forward casting shadows. But half way up the image in the background the dynamic lighting/shaders are all disabled and we're just looking at baked textures, and the graphical composition workout is harder on the PS3 shot, anyway so both images seem representative of what Konami think each system can manage well with lighting and shaders to use for marketing.

Ok, so there's no differences visible in DF's captures or contents.

There's no difference and it's Konami's marketing fault for not showing it.

But you know there's a difference, which you've yet to highlight in any direct comparison.

Got it.

PaintTinJr

Member

Ok, so there's no differences visible in DF's captures or contents.

There's no difference and it's Konami's marketing fault for not showing it.

But you know there's a difference, which you've yet to highlight in any direct comparison.

Got it.

So rather than acknowledge that the PS3 version looked exceptional in the shots Konami chose and the 360 version doesn't look so great compared to even other games, like say the fidelity in MGS4 that looks exceptional, it is just the 360 being unfairly treated, yes?

This thread is about Uncharted 2, and as shown in the making video of Uncharted 1, it has fx that are miles better than that 360 MGS5 marketing footage, but feel free to drink the DF cool aid that the 360 was on par or better than the PS3 hardware, and had results to rival PS3 first party games or exclusives.

Shadowstar39

Member

Although this is from the original uncharted, and this was on the disc or a spare disc that came with the original PS3 game IIRC, and most of it still applies. I've timestamped the video at the part that is most relevant to the technical discussion - in regards of the 360's GPU and what its version would have lost - but the whole video is still a good watch IMO.

This is one of the videos I was thinking of, it was a different era... Do any of those devs even work at Naughty Dog anymore? Or did they leave after Druckmann took over?

We also don't see deep dives like this into new tech, it was such a common thing in the 360/ps3 gen. So much was changing. Look at early games from that gen compared to 2013 games. Way different in so many ways.

adamsapple

Or is it just one of Phil's balls in my throat?

So rather than acknowledge that the PS3 version looked exceptional in the shots Konami chose and the 360 version doesn't look so great compared to even other games, like say the fidelity in MGS4 that looks exceptional, it is just the 360 being unfairly treated, yes?

Why would I acknowledge something that hasn't been presented? You chose screens from two completely different locations and different times. I posted picture(s) from the exact same places which show no difference.

I want you to SHOW me the differences, don't TELL me about it.

Show, don't tell.

This thread is about Uncharted 2, and as shown in the making video of Uncharted 1, it has fx that are miles better than that 360 MGS5 marketing footage, but feel free to drink the DF cool aid that the 360 was on par or better than the PS3 hardware, and had results to rival PS3 first party games or exclusives.

You're more focused on "teh DF bias" than an actual discussion.

The fact is that we won't know the answer to the Uncharted question because Naughty Dog never had X360 devkits or developed any games for it.

That's just like me saying that PS3 wouldn't be able to handle Gears 3 because that one dev-build video that showed Gears 3 running on the PS3 runs like shit. Of course additional optimizations and dedicated work would iron out things like that, just like how if Naughty Dog were a multi-platform developer making Uncharted 2, they would use the 360's strengths to get the same, or a very close version, of the game on that hardware.

PaintTinJr

Member

Why would I acknowledge something that hasn't been presented? You chose screens from two completely different locations and different times. I posted picture(s) from the exact same places which show no difference.

I want you to SHOW me the differences, don't TELL me about it.

Show, don't tell.

You're more focused on "teh DF bias" than an actual discussion.

You posted images that aren't even native resolution for the games on PS3 and 360, so could be mislabelled Xbox1 for all I know. I chose footage from a reliable source wanting to sell all copies on all platforms and their chosen footage shows what it shows. Or are you saying Konami's chosen 360 clip isn't fair to say it is representative of what the hardware can do? Or are you saying they didn't choose something that looks as good as it could - so as not to sell 360 copies?


adamsapple

Or is it just one of Phil's balls in my throat?

You posted images that aren't even native resolution for the games on PS3 and 360, so could be mislabelled Xbox1 for all I know. I chose footage from a reliable source wanting to sell all copies on all platforms and their chosen footage shows what it shows. Or are you saying Konami's chosen 360 clip isn't fair to say it is representative of what the hardware can do? Or are you saying they didn't choose something that looks as good as it could - so as not to sell 360 copies?

sigh ...

1. The images are from the Digital Foundry article.

2. Why would I go through the trouble trying to pass XBO screens as X360? Are you saying that because before today you didn't actually know what the X360 version of the game looked like?

3. The 'chosen' Konami clip shows two completely different locations and times of day, you cannot draw a direct 1 : 1 comparison with them because they're not from the same places

You are the one talking about some kind of perceived light probe and geometrical improvements on PS3. Find me some relevant comparisons that show them, don't post one screen from the day time in one place and night time in another and say "see".

Also, the images you're posting aren't even from Phantom Pain, they're from Ground Zeroes.

Please reply to me when you have actual comparisons that show the differences you're talking about, or don't quote me.

PaintTinJr

Member

I didn't say you did, I just implied that after all these years - unless it was an archive.org link from the day they posted it - the reliability of the info isn't assured, and that's even assuming I thought Rich wasn't in it for clicks and actually cares about faceoffs beyond his business interest/popularity.

sigh ...

1. The images are from the Digital Foundry article.

2. Why would I go through the trouble trying to pass XBO screens as X360? Are you saying that because before today you didn't actually know what the X360 version of the game looked like?

3. The 'chosen' Konami clip shows two completely different locations and times of day, you cannot draw a direct 1 : 1 comparison with them because they're not from the same places

You are the one talking about some kind of perceived light probe and geometrical improvements on PS3. Find me some relevant comparisons that show them, don't post one screen from the day time in one place and night time in another and say "see".

Also, the images you're posting aren't even from Phantom Pain, they're from Ground Zeroes.

Please reply to me when you have actual comparisons that show the differences you're talking about, or don't quote me.

Konami's footage on the other hand ...

Negotiator

Banned

Pal Engstad works at Apple as a senior software architect:

This is one of the videos I was thinking of, it was a different era... Do any of those devs even work at Naughty Dog anymore? Or did they leave after Druckmann took over?

We also don't see deep dives like this into new tech, it was such a common thing in the 360/ps3 gen. So much was changing. Look at early games from that gen compared to 2013 games. Way different in so many ways.

Pål-Kristian Engstad - A veteran in Computer Graphics and Game Programming | LinkedIn

A veteran in Computer Graphics and Game Programming · Great believer in the slogan: Keep it Simple and Fast. Specialties: Assembly programming, programming, optimization, graphics engines, computer graphics, animation systems, compression, software engineering, management and hiring. ·...

www.linkedin.com


Great believer in the slogan: Keep it Simple and Fast.

Specialties: Assembly programming, programming, optimization, graphics engines, computer graphics, animation systems, compression, software engineering, management and hiring.

I feel like this is a dying breed of old school Demoscene programmers... at best we can hope one day the AI will fill their niche.

If Gears of War 3 fully utilizes 3 PPU cores (and I don't mean floating point operations by that, but more general purpose code), then there's no way the PS3 will be able to run it at acceptable framerates. And that's fine by me.

That's just like me saying that PS3 wouldn't be able to handle Gears 3 because that one dev-build video that showed Gears 3 running on the PS3 runs like shit. Of course additional optimizations and dedicated work would iron out things like that, just like how if Naughty Dog were a multi-platform developer making Uncharted 2, they would use the 360's strengths to get the same, or a very close version, of the game on that hardware.

I say bring back the glorious PS360 era! Less crunch, less wokeness, more games, more profitability, more bravado, less drama, more exclusives on both platforms... what's not to love from that era? Gaming is no longer the same these days.


Corporal.Hicks

Member

If a game is built from the ground up to take advantage of specific hardware features on a particular console, it would be difficult, if not impossible, to port it 1:1 to another platform. However, with clever optimisation (different graphics settings and leveraging the different strengths of each console), I believe it would be possible to port all PS3 games on X360 hardware (and vice versa).

The most impressive game of this generation, GTA5, runs the same on X360 and PS3, so I'll always remember both consoles as equally capable. Also games such as Tomb Raider or Gears Of War 3 have impressed me as much with their graphics as the Uncharted series.


Corporal.Hicks

Member

Not true.

ND used MPEG-2 for cutscenes on PS3, not MPEG-4 (H.264).

They had ample space on BD-ROM, but it's not like they had unlimited amounts of compute for more elaborate decompression (H.264) or dedicated video co-processors.

Assembly programming is a religion now?

Fine, I'll take it!

UC2 had tons of physics... what are you gonna do with them? XBOX 360 didn't have 150 Gflops of compute power. It has nothing to do with DVD vs Blu-Ray.

UC3 even had a real-time ocean sequence with real-time physics that was actually playable, not a cutscene:

On a PC you'd need a GTX 280 for something remotely equivalent:

Cell with a GeForce 8 GPU would have been nuts!

PS4 is not a regular PC architecture, it uses a customized GCN GPU that tries to replicate Cell SPUs:

Not to mention PS4 is like 5-10 times more powerful than the PS3... maybe XBOX ONE could run UC2-3 with no cutbacks on physics, but definitely not the 360.

1) Ridiculous statement. Source?

XB1 is far more powerful than the X360. Just because it failed commercially, it doesn't mean it's weaker. That's why it supports 360 BC easily.

2) Quite the opposite.

Late 7th gen multiplatforms run better on PS3 with better graphics settings.

Witcher 3 is a physics-heavy game?

Golden Abyss is even worse than UC1 on PS3. It used pre-rendered backgrounds in real-time gameplay.

Vita is definitely not a portable PS3. Better than the PS2, worse than the PS3 in terms of compute.

Sony was losing over $200 on every PS3 console. So something like an 8800GTX in the PS3 was definitely out of the question.

However, I think that Sony has gimped the RSX too much (2x less memory bandwidth compared to the 7800GTX) making it more comparable to the 7600GT. PC games that run like a dream on the 7800GTX run with serious problems on the PS3.

If I remember correctly, Crysis 1 had some nice water physics, and that game ran quite well on my 8800Ultra at maxed out settings, so I don't think 280GTX would be needed to achieve similar effects as on PS3. I bought the PS3 and PC (Q6600 + 8800Ultra) in the same month and the gap between my PC and PS3 was just insane. Games that run at 20-30fps on the PS3 run at 60fps at 1440p on my PC.


ResilientBanana

Member

Forums just fight for them now.

Let's go back in time, shall we back when developers and publishers were console warring.

I remembered this article stating that Uncharted 2 would be impossible to port on Xbox 360 due to many technical factors.

Sourcel

How true are these statements?

Do you miss the old days where developers were taking shots at one another?

Like this example from Sucker Punch:

Shadowstar39

Member

Was that Infamous 2 or the first one? I can't believe they put that in there, it's hilarious. "Ring ring electronics", talk about a dig on the competition, but subtle. Masterfully done.

Let's go back in time, shall we back when developers and publishers were console warring.

I remembered this article stating that Uncharted 2 would be impossible to port on Xbox 360 due to many technical factors.

Sourcel

How true are these statements?

Do you miss the old days where developers were taking shots at one another?

Like this example from Sucker Punch:

Man, this thread is something else, I miss those days. I think I will dig out my wired controller and play Infamous on PS3 (damn you Sony for not porting all the PS3 games to newer systems), or go play the UC collection.

PaintTinJr

Member

I agree with the premise of that, but putting aside the difficulty of finding a single player gaming workload that would map perfectly to those 2 extra 2way cores, I'm still not entirely sure that it couldn't be mapped to 4 or 5 SPUs, where 1 of the 4 or 5 was a branch predictor and prefetcher to feed the others and get the same throughput......

If Gears of War 3 fully utilizes 3 PPU cores (and I don't mean floating point operations by that, but more general purpose code), then there's no way the PS3 will be able to run it at acceptable framerates. And that's fine by me.

As much as GPGPU/CUDA couldn't do that, the SPUs still being full instruction set processors makes me doubt their inability to be used to divide and conquer virtually all general purpose compute problems.

Agreed.

I say bring back the glorious PS360 era! Less crunch, less wokeness, more games, more profitability, more bravado, less drama, more exclusives on both platforms... what's not to love from that era? Gaming is no longer the same these days.

Negotiator

Banned

1) $300 loss, but yeah, I agree.

1) Sony was losing over $200 on every PS3 console. So something like an 8800GTX in the PS3 was definitely out of the question.

2) However, I think that Sony has gimped the RSX too much (2x less memory bandwidth compared to the 7800GTX) making it more comparable to the 7600GT. PC games that run like a dream on the 7800GTX run with serious problems on the PS3.

3) If I remember correctly, Crysis 1 had some nice water physics, and that game ran quite well on my 8800Ultra at maxed out settings, so I don't think 280GTX would be needed to achieve similar effects as on PS3. I bought the PS3 and PC (Q6600 + 8800Ultra) in the same month and the gap between my PC and PS3 was just insane. Games that run at 20-30fps on the PS3 run at 50-60fps at 1440p on my PC.

2) Brute-force wise it's been gimped, but it has some nice customizations (more cache capacity, better bus, ability to render on 2 memory pools, special instructions) that let it punch above its weight:

RSX Reality Synthesizer - Wikipedia

3) It's not just the water physics, but the whole playable sequence (a ship sailing the ocean, tin cans following different routes every time you booted up the sequence etc.)

It's 2024 and I still haven't seen anything matching it gameplay-wise... those physics blend perfectly with the gameplay.

It makes you wonder why we cannot have something even remotely similar in the Teraflop era... I don't even understand why there are no ports of UC1-2-3 on PC, despite having the Remastered Collection on PS4.

Btw, I got an UC3 disc for 5 bucks today, booted it up, I even played splitscreen MP solo and let me tell you it holds up real good! I finished a few rounds solo, despite the framerate not being rock solid 30 fps.

Protip: don't buy the GOTY version (it doesn't support LAN mode) and the included DLC maps are basically useless without it. You can use a DualShock 4/DualSense controller for the 2nd player, but it needs a USB cable at all times, there's no vibration and no support for the system menu.

Why the fuck can't we have even quad splitscreen on modern Teraflop machines?

Hell, quad splitscreen was a thing back in the N64 era.

Sorry, but I don't buy the "they need to sell more PS+ subscriptions" BS excuse anymore.

Fortnite is the most profitable game ever invented and it requires no subscriptions at all.

Optional cosmetic MTX is where it's at and ND dropped the ball long time ago:

How many people here remember that UC3 MP went F2P a few years after launch?

UNCHARTED 3 Multiplayer Goes Free-to-Play Today

After a few months of dropping hints our secret is ready to be made public. We are extremely fortunate to have some of the most devoted and amazing fans in gaming and we wanted to introduce those fans, and the world of UNCHARTED 3 multiplayer, to as many people as possible. We also wanted...

blog.playstation.com


Come on, I can't be the only one thinking Sony has tons of criminally underrated gems (even PS Home is dead, despite the Metaverse trend) that they just don't know how to exploit them commercially to fund their highly expensive AAA SP games (nobody said they should stop making those, but they need to fund them somehow).

Why can't we have UC2/UC3 MP remastered at 1080p 120 fps or 4K 60 fps? The graphics engine has already been ported by Bluepoint, it's just a matter of porting the netcode and reinstating the matchmaking/database servers.

Sony says they want more MP games, but their actions say otherwise. Kinda like women who say they wanna get married, but they still behave like thots, lol.

It's a shame Sony executives are so out of touch with the gaming market/industry these days...


GametimeUK

Member

I find it crazy Banjo Kazooie Nuts & Bolts was impossible on PS3 due to the physics.

intbal

Member

I find it crazy Banjo Kazooie Nuts & Bolts was impossible on PS3 due to the physics.

I don't know anything about that.

But I do know that Rare made use of the Xenos's tessellation hardware for Nuts & Bolts and Viva Pinata. Since RSX didn't have a hardware tessellator, that functionality would be done with the SPUs in Cell. Cell was certainly very capable when it came to tessellation, but Nuts & Bolts was a very physics-heavy game, and Cell would have been used extensively for physics code. The game still could have worked (there's no such thing as "impossible on XXXX console"), they would just have to use different methods for the terrain (viva) and water (nuts & bolts).

SirTerry-T

Member

The 360 hosted a pretty damned impressive port of Rise Of The Tomb Raider (Nixxes putting in the work). I'm sure with the right devs it could have done a decent job of an Uncharted port.

semiconscious

Member

i miss uncharted

yep. & i miss this naughty dog...

Was the 25GB purely real time assets, or did this use the KZ3 method of recording a real time cutscene, turning it into linear video, and playing it back in some bad Bink compressed file? Killzone 3 was one of the best looking games on the system, and only required 5MB of HDD space. It also had cutscenes that looked worse than real time gameplay.

Anyway, linear games are the easiest to split between discs (pour one out for anyone who occupied 360 HDD space AND listened to that god forsaken optical drive for GTA V) so I would imagine one of the more skilled port houses could handle it.


Stooky

Banned

After working in games for a while, a lot of programmers go into software programming. It's less stressful/easier, with better quality of life (depending on the software). It's like NBA players becoming sports commentators.

Pal Engstad works at Apple as a senior software architect:

Less crunch, higher pay... why work at ND anymore? His resume is filled up.

Pål-Kristian Engstad - A veteran in Computer Graphics and Game Programming | LinkedIn

A veteran in Computer Graphics and Game Programming · Great believer in the slogan: Keep it Simple and Fast. Specialties: Assembly programming, programming, optimization, graphics engines, computer graphics, animation systems, compression, software engineering, management and hiring. ·...

www.linkedin.com

I feel like this is a dying breed of old school Demoscene programmers... at best we can hope one day the AI will fill their niche.

If Gears of War 3 fully utilizes 3 PPU cores (and I don't mean floating point operations by that, but more general purpose code), then there's no way the PS3 will be able to run it at acceptable framerates. And that's fine by me.

I say bring back the glorious PS360 era! Less crunch, less wokeness, more games, more profitability, more bravado, less drama, more exclusives on both platforms... what's not to love from that era? Gaming is no longer the same these days.

PPU cores were general purpose cores, meaning you gave them a job and it was split among the cores, vs SPUs, where scheduling and giving cores specific jobs was important. If the SPUs weren't running near 100% you lost performance, and if you didn't do this your game would run like shit. At that time that type of coding for games was new, and it took really talented programmers to make it sing. PS3 could definitely run Gears.


Hugare

Member

Downgraded? Sure, of course

At the same quality? Not a chance

The 360 had the advantage of being easier to develop for. Many multiplatform games ran better on it. Bayonetta ran at twice the framerate of the PS3 version.

But when Sony developers started understanding Cell's SPUs, the PS3 became untouchable.

Forget about Uncharted 2. Uncharted 3 looked even better. That thing was just ridiculous for the time.

Not to mention God of War 3 from 2010. Poseidon battle is impressive to this day.


PaintTinJr

Member

I guess the other point about the Xenon compared to Cell BE is that the general purpose PPE cores in both systems can only reach 40-50% throughput even in the most optimal workloads, and the SPUs can easily exceed that efficiency, up to 80-90% in optimal real tasks IIRC, which in the second half of the generation was common in first party games. but still probably not.

There are also misleading figures flying around as to max theoretical compute ceilings, apparently RSX operated at different clock speeds for pixel and vertex pipelines. Pixel side is 211 GFlops/4.4 Gpixels at 550MHz and Vertex one is 40 GFlops at 500MHz, so 251 GFlops in total. X360 Xenos is at 240 GFlops/4 Gpixels (unified).

Whole system for PS3: CELL BE 192+RSX 251=443 GFlops. Whole system for X360: Xenon 115+Xenos 240=355 GFlops. Not that it matters more than architectural differences.

Cell BE wiki "Tests by IBM show that the SPEs can reach 98% of their theoretical peak performance running optimized parallel matrix multiplication"

So not sure if the Xenon with VMX128 figure is correct. But going by 25.6Gflops per PPE core, in optimal real situations (for the 2nd & 3rd PPEs anyway) the Xenon was no more than 38.4Gflops total, and the Cell BE was no more than 12.8Gflops + 6x 80% SPU = 135.6 Gflops.

Given that the main PPE in both the Xenon and Cell wouldn't be doing vector maths exclusively and would mostly be doing main program flow control, the 12.8Gflops is still overly inflated for the comparison, and the real comparison would really be Xenon 25.6Gflops vs Cell BE SPUs 122.8Gflops for helping their respective GPUs in optimal situations.

Which gives a comparison of 25.6 + 240 = 265.6Gflops + main PPE for Xbox 360 vs 122.8 + 251 = 373.8Gflops + main PPE for PS3. And obviously we aren't counting that a 7th working SPU inside the PS3 could have been recording TVTVTV, as it was on my unit frequently while playing games like AC1/2, MGS4, UC1-3, etc.
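
For anyone who wants to check the sums, here's a rough sketch of that back-of-the-envelope estimate in Python. Every number is just a figure quoted in this thread (25.6 Gflops peak per core or SPU, 50% efficiency for the helper PPE cores, 80% for the SPUs, 240 for Xenos, 251 for RSX), not an official or measured value, and the function name is made up for illustration.

```python
# All constants are figures quoted in this thread, not official measurements.
PEAK_PER_CORE = 25.6    # Gflops peak assumed per PPE core and per SPU
PPE_EFFICIENCY = 0.5    # top of the 40-50% range quoted for PPE cores
SPU_EFFICIENCY = 0.8    # bottom of the 80-90% range quoted for SPUs
XENOS_GFLOPS = 240.0    # Xbox 360 GPU figure quoted above
RSX_GFLOPS = 251.0      # PS3 GPU figure quoted above (211 pixel + 40 vertex)


def helper_gflops(units, efficiency, peak=PEAK_PER_CORE):
    """Sustained Gflops for `units` cores running at a fraction of peak."""
    return units * peak * efficiency


# Main PPE is left out on both sides, since it mostly runs program flow.
xenon_helpers = helper_gflops(2, PPE_EFFICIENCY)  # 2nd and 3rd Xenon cores
cell_spus = helper_gflops(6, SPU_EFFICIENCY)      # 6 SPUs available to games

x360_total = xenon_helpers + XENOS_GFLOPS
ps3_total = cell_spus + RSX_GFLOPS

print(f"Xenon helper cores: {xenon_helpers:.2f} Gflops")
print(f"Cell SPUs:          {cell_spus:.2f} Gflops")
print(f"X360 CPU helpers + GPU: {x360_total:.2f} Gflops (+ main PPE)")
print(f"PS3 SPUs + GPU:         {ps3_total:.2f} Gflops (+ main PPE)")
```

Changing the two efficiency constants shows how sensitive the comparison is to those assumptions, which is really the whole argument here.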

RaySoft

Member

Ohh nice

Cool seeing my fellow countryman Pål Engstad in a Naughty Dog video. He was Lead Programmer on Uncharted 2 and did hell-of-a-lot of SPU microcode optimizations to their engine. Brilliant programmer.

They had a shit ton of effects that were added to the engine in comparison with Drake's Fortune, once they started using the SPUs.

DoF, Per-Object Motion Blur, SSS, the perfect AO, simulations, the constant streaming.. all thanks to Cell.


CuteFaceJay

Member

Always was BS, R* showed everyone up when they released GTA V on the 360.

Lysandros

Member

Thanks for the insight. I also had the higher SIMD saturation in mind leading to much higher efficiency making 1:1 compute comparisons somewhat misleading (as it tends to happen). And just to confirm the theoretical max; is the official figure for CELL PPU 33.8 GFlops or 25.6 GFlops (just like the SPEs)? Is it the exact same core as the Xenon one? I've had some difficulties finding the official Sony/IBM figures concerning those points.

I guess the other point about the Xenon compared to Cell BE is that the general purpose PPE cores in both systems can only reach 40-50% throughput even in the most optimal workloads, and the SPUs can easily exceed that efficiency, up to 80-90% in optimal real tasks IIRC, which in the second half of the generation was common in first party games. but still probably not.

Cell BE wiki "Tests by IBM show that the SPEs can reach 98% of their theoretical peak performance running optimized parallel matrix multiplication"

So not sure if the Xenon with VMX128 figure is correct. But going by 25.6Gflops per PPE core, in optimal real situations (for the 2nd & 3rd PPEs anyway) the Xenon was no more than 38.4Gflops total, and the Cell BE was no more than 12.8Gflops + 6x 80% SPU = 135.6 Gflops.

Given that the main PPE in both the Xenon and Cell wouldn't be doing vector maths exclusively and would mostly be doing main program flow control, the 12.8Gflops is still overly inflated for the comparison, and the real comparison would really be Xenon 25.6Gflops vs Cell BE SPUs 122.8Gflops for helping their respective GPUs in optimal situations.

Which gives a comparison of 25.6 + 240 = 265.6Gflops + main PPE for Xbox 360 vs 122.8 + 251 = 373.8Gflops + main PPE for PS3. And obviously we aren't counting that a 7th working SPU inside the PS3 could have been recording TVTVTV, as it was on my unit frequently while playing games like AC1/2, MGS4, UC1-3, etc.

Edit: Since we are excluding the reserved 7th SPE on CELL, we also have to account for the OS reservation on the Xenon side.
