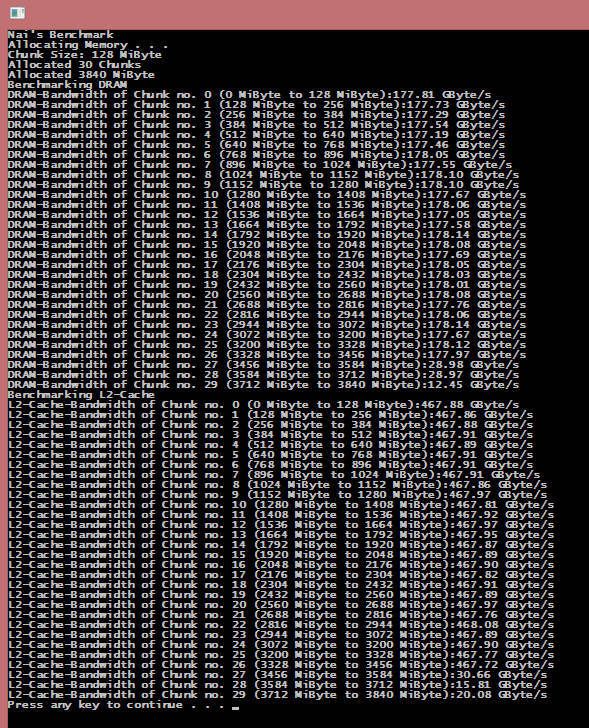

Output from the official `bandwidthTest` sample in the CUDA SDK.

I'm not sure what that's supposed to prove. The smaller tests should be expected to report lower bandwidth figures: each transfer moves so little data that the fixed per-transfer overhead dominates the elapsed time. Also, your last test is 67MB in total, not 67MB on top of what the earlier tests moved.
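A simple way to see why is to model each transfer as a fixed overhead plus a size-dependent term, so effective bandwidth is `size / (overhead + size / peak)`. The sketch below uses made-up numbers (a 10 µs overhead and a 6 GB/s peak); they are assumptions for illustration, not values taken from the screenshot or from any particular GPU. The largest size, 64 MiB (67,108,864 bytes), is roughly the 67MB of the last test.

```python
# Hypothetical parameters, NOT measured values: pick your own to match your hardware.
OVERHEAD_S = 10e-6  # assumed fixed cost per transfer (10 microseconds)
PEAK_BPS = 6e9      # assumed sustained link bandwidth (6 GB/s)


def effective_bandwidth(n_bytes: int,
                        overhead_s: float = OVERHEAD_S,
                        peak_bps: float = PEAK_BPS) -> float:
    """Bytes per second observed for a single transfer of n_bytes under the overhead + size/peak model."""
    elapsed = overhead_s + n_bytes / peak_bps
    return n_bytes / elapsed


if __name__ == "__main__":
    # Sizes from 1 KiB up to 64 MiB (~67MB).
    for size in (1024, 64 * 1024, 1024 * 1024, 64 * 1024 * 1024):
        bw = effective_bandwidth(size)
        print(f"{size:>10} bytes: {bw / 1e9:6.3f} GB/s ({100 * bw / PEAK_BPS:5.1f}% of peak)")
```

With these assumed numbers, a 1 KiB copy reaches under 2% of peak while the 64 MiB copy gets within a fraction of a percent of it. The overhead is the same in every case; it simply stops mattering once the transfer is large.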